Google’s VP of Product, Robby Stein, recently answered a question about what people should think about when it comes to AEO/GEO (answer engine optimization and generative engine optimization). His multi-part answer began with how Google’s AI creates answers and ended with guidance on what creators should consider.

Foundations Of Google AI Search

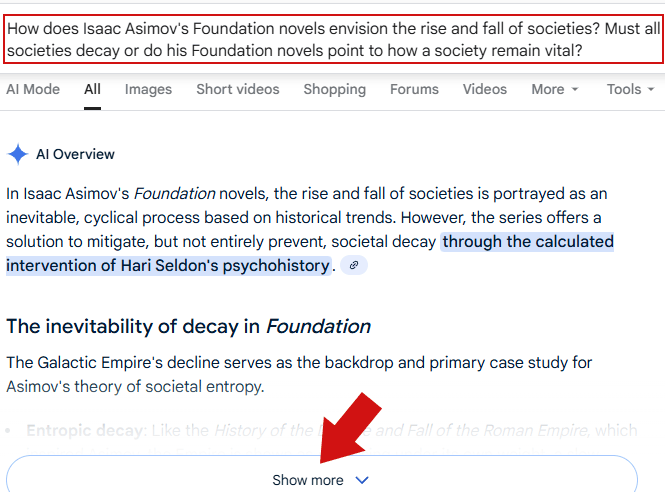

The podcast host characterized AEO/GEO as the evolution of SEO. Stein’s answer suggested that understanding how AI answers are constructed is the best starting point.

This is the question that was asked:

“What’s your take on this whole rise of AEO, GEO, which is kind of this evolution of SEO?

I’m guessing your answer is going to be just create awesome stuff and don’t worry about it, but you know, there’s a whole skill of getting to show up in these answers. Thoughts on what people should be thinking about here?”

Stein began his answer by describing the foundations of how Google’s AI search works:

“Sure. I mean, I can give you a little bit of under the hood, like how this stuff works, because I do think that helps people understand what to do.

When our AI constructs a response, it’s actually trying to, it does something called query fan-out, where the model uses Google search as a tool to do other querying.

So maybe you’re asking about specific shoes. It’ll add and append all of these other queries, like maybe dozens of queries, and start searching basically in the background. And it’ll make requests to our data kind of backend. So if it needs real-time information, it’ll go do that.

And so at the end of the day, actually something’s searching. It’s not a person, but there’s searches happening.”

Stein’s description shows that Google’s AI still relies on conventional search engine retrieval; it is just scaled and automated. The system can perform dozens of background searches and evaluates the same quality signals that guide ordinary search rankings.

That means “answer engine optimization” is essentially the same as SEO: the indexing, ranking, and quality factors behind traditional SEO still apply to the queries that the AI itself issues during query fan-out.
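To make the fan-out idea concrete, here is a toy Python sketch. It is not Google’s implementation: the in-memory `INDEX`, the `FACETS` appended to the query, the `SPAM_THRESHOLD` and `MIN_OVERLAP` values, and the functions `fan_out`, `search`, and `answer_sources` are all hypothetical. It assumes each page carries a single quality score standing in for the much richer signals Stein describes. What it illustrates is the article’s point: one user question becomes several ordinary searches, and a page still has to pass quality filtering before it can be used in an answer.

```python
from collections import Counter

# Hypothetical toy index: page -> (terms on the page, quality score in [0, 1]).
# The single quality number stands in for signals such as helpfulness or spam
# likelihood; real search systems use far richer signals than this.
INDEX = {
    "example.com/trail-shoe-review": ({"trail", "running", "shoes", "review"}, 0.9),
    "example.com/shoe-sizing-guide": ({"running", "shoes", "sizing", "guide"}, 0.8),
    "spam.example/cheap-shoes": ({"shoes", "cheap", "deal"}, 0.1),
}

# Hypothetical facets appended to the user's query; not Google's actual list.
FACETS = ["review", "sizing guide", "cheap deal"]

SPAM_THRESHOLD = 0.3  # assumed cutoff: pages scoring below this are never used
MIN_OVERLAP = 2       # assumed: a page must share at least 2 terms to be retrieved


def fan_out(query, facets):
    """Expand one user query into the original plus related sub-queries."""
    return [query] + [f"{query} {facet}" for facet in facets]


def search(sub_query, index):
    """Retrieve pages that share at least MIN_OVERLAP terms with the sub-query."""
    terms = set(sub_query.lower().split())
    return [url for url, (page_terms, _) in index.items()
            if len(terms & page_terms) >= MIN_OVERLAP]


def answer_sources(query, index=INDEX, facets=FACETS):
    """Run every sub-query, drop low-quality pages, and rank what remains."""
    hits = Counter()
    for sub_query in fan_out(query, facets):
        hits.update(search(sub_query, index))
    ranked = [
        (url, hits[url] * index[url][1])  # retrieval count weighted by quality
        for url in hits
        if index[url][1] >= SPAM_THRESHOLD
    ]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for url, score in answer_sources("running shoes"):
        print(f"{score:.1f}  {url}")
```

Run as a script, the sketch ranks the review page first and the sizing guide second. The spam page is retrieved by the “cheap deal” sub-query but is discarded because its quality score falls below the threshold, which mirrors the idea that fan-out widens retrieval without bypassing quality signals.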

For SEOs, the insight is that visibility in AI answers depends less on gaming a new algorithm and more on producing content that satisfies intent so thoroughly that Google’s automated searches treat it as the best possible answer. As you’ll see later in this article, originality also plays a role.

Role Of Traditional Search Signals

An interesting part of this discussion centers on the kinds of quality signals that Google describes in its Search Quality Rater Guidelines. Stein talks about the originality of content, for example.

Here’s what he said:

“And then each search is paired with content. So if for a given search, your webpage is designed to be extremely helpful.

And then you can look up Google’s human rater guidelines and read… what makes great information? This is something Google has studied more than anyone.

And it’s like:

- Do you satisfy the user intent of what they’re trying to get?

- Do you have sources?

- Do you cite your information?

- Is it original or is it repeating things that have been repeated 500 times?

And there’s these best practices that I think still do largely apply because it’s going to ultimately come down to an AI is doing research and finding information.

And a lot of the core signals, is this a good piece of information for the question, they’re still valid. They’re still extremely valid and extremely useful. And that will produce a response where you’re more likely to show up in those experiences now.”

Although Stein is describing AI Search results, his answer shows that Google’s AI Search still values the same underlying quality factors found in traditional search. Originality, source citations, and satisfying intent remain the foundation of what makes information “good” in Google’s view. AI has changed the interface of search and encouraged more complex queries, but the ranking factors continue to be the same recognizable signals related to expertise and authoritativeness.

More On How Google’s AI Search Works

The podcast host, Lenny, followed up by asking whether Google’s AI Search works differently from a typical chatbot.

He asked:

“It’s interesting your point about how it goes in searches. When you use it, it’s like searching a thousand pages or something like that. Is that just a different core mechanic to how other popular chatbots work because the others don’t go search a bunch of websites as you’re asking.”

Stein answered with more detail about how AI Search works beyond query fan-out, identifying the factors it uses to surface what Google considers the best answers. For example, he mentions parametric memory, which is the knowledge an AI model acquires during training. It is stored within the model itself rather than fetched from external sources.

Stein explained:

“Yeah, this is something that we’ve done uniquely for our AI. It obviously has the ability to use parametric memory and thinking and reasoning and all the things a model does.

But one of the things that makes it unique for designing it specifically for informational tasks, like we want it to be the best at informational needs. That’s what Google’s all about.

- And so how does it find information?

- How does it know if information is right?

- How does it check its work?

These are all things that we built into the model. And so there is a unique access to Google. Obviously, it’s part of Google search.

So it’s Google search signals, everything from spam, like what’s content that could be spam and we don’t want to probably use in a response, all the way to, this is the most authoritative, helpful piece of information.

We’re going to link to it and we’re going to explain, hey, according to this website, check out that information and you’re going to probably go see that yourself.

So that’s how we’ve thought about designing this.”

Stein’s explanation makes it clear that Google’s AI Search is not designed to mimic the conversational style of general chatbots but to reinforce the company’s core goal of delivering trustworthy information that’s authoritative and helpful.

Google’s AI Search does this by relying on signals from Google Search, such as spam detection and helpfulness. This grounds its AI-generated answers in the same evaluation and ranking framework used for regular search results.

This approach positions AI Search less as a standalone version of search and more as an extension of Google’s information-retrieval infrastructure, where reasoning and ranking work together to surface factually accurate answers.

Advice For Creators

At one point, Stein acknowledges that creators want to know what to do for AI Search. His advice is essentially to think about the questions people are asking. In the past, that meant thinking about which keywords searchers use. He explains that this is no longer enough because people now ask long, conversational questions.

He explained:

“I think the only thing I would give advice to would be, think about what people are using AI for.

I mentioned this as an expansionary moment, …that people are asking a lot more questions now, particularly around things like advice or how to, or more complex needs versus maybe more simple things.

And so if I were a creator, I would be thinking, what kind of content is someone using AI for? And then how could my content be the best for that given set of needs now?

And I think that’s a really tangible way of thinking about it.”

Stein’s advice doesn’t add anything new, but it does reframe the basics of SEO for the AI Search era. Instead of optimizing for isolated keywords, creators should anticipate the fuller intent and informational journey behind conversational questions. That means structuring content to directly satisfy complex informational needs, especially “how to” or advice-driven queries that users increasingly pose to AI systems rather than to traditional keyword search.

Takeaways

- AI Search Is Still Built on Traditional SEO Signals

Google’s AI Search relies on the same core ranking principles as traditional search—intent satisfaction, originality, and citation of sources.

- How Query Fan-Out Works

AI Search can issue dozens of background searches per query, using Google Search as a tool to fetch real-time data and evaluate quality signals.

- Integration of Parametric Memory and Search Signals

The model blends stored knowledge (parametric memory) with live Google Search data, combining reasoning with ranking systems to support factual accuracy.

- Google’s AI Search Is Like An Extension of Traditional Search

AI Search isn’t a chatbot; it’s a search-based reasoning system that reinforces Google’s informational trust model rather than replacing it.

- Guidance for Creators in the AI Search Era

Optimizing for AI means understanding user intent behind long, conversational queries—focusing on advice- and how-to-style content that directly satisfies complex informational needs.

Google’s AI Search builds on the same foundations that have long defined traditional search, using retrieval, ranking, and quality signals to surface information that demonstrates originality and trustworthiness. By combining live search signals with the model’s own stored knowledge, Google has created a system that explains information and cites the websites that provided it. For creators, this means that success now depends on producing content that fully addresses the complex, conversational questions people bring to AI systems.

Watch the podcast segment starting at about the 15:30 minute mark:

Featured Image by Shutterstock/PST Vector