One of the scariest SEO tasks is a site migration, because the stakes are high and there are pitfalls at every step. Here are five tips that will help keep a site migration on track to a successful outcome.

Site Migrations Are Not One Thing

Site migrations are not one thing. They are different scenarios, and the only thing they have in common is that something can always go wrong.

Here are examples of some of the different kinds of site migrations:

- Migrating to a new template

- Migrating to a new web host

- Merging two different websites

- Migrating to a new domain name

- Migrating to a new site architecture

- Migrating to a new content management system (CMS)

- Migrating to a new WordPress site builder

There are many ways a site can change, and even more ways for those changes to lead to a negative outcome.

The following is not a site migration checklist. It’s five suggestions for things to consider.

1. Prepare For Migration: Download Everything

Rule number one is to prepare for the site migration. One of my biggest concerns is making sure the old version of the website is properly documented.

These are some of the ways to document a website:

- Download the database and save it in at least two places. I like to have a backup of the backup stored on a second device.

- Download all the website files. Again, I prefer to store a backup of the backup on a second device.

- Crawl the site, save the crawl, and export it as a CSV or an XML sitemap. I prefer to have redundant backups just in case something goes wrong.
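If your crawler exports a CSV and you also want the URL list as an XML sitemap, a short script can do the conversion. The sitemap version is useful later for re-crawling the old URLs in list mode. Below is a minimal Python sketch that uses only the standard library. It assumes the export has a header row with page URLs in a column called `Address`, so change the `column` parameter to match whatever your crawler actually writes. The function names are illustrative and are not part of any tool.

```python
import csv
import io
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def urls_from_crawl_csv(csv_text, column="Address"):
    """Read unique page URLs from a crawl export, keeping the crawl order.

    Assumption: the export has a header row and the URLs live in `column`.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    urls, seen = [], set()
    for row in reader:
        url = (row.get(column) or "").strip()
        if url and url not in seen:  # skip blanks and duplicate rows
            seen.add(url)
            urls.append(url)
    return urls


def build_sitemap(urls):
    """Return a minimal sitemaps.org-style <urlset> document listing `urls`."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = url  # ElementTree escapes & as &amp;
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

Save the returned string as something like `old-urls.xml` next to the raw crawl file. That way the backup holds both the full crawl data and a list of URLs that is ready to crawl.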

An important thing to remember about downloading files by FTP is that there are two transfer modes: ASCII and Binary.

- Use ASCII for downloading files that contain code, like CSS, JS, PHP and HTML.

- Use Binary for media like images, videos and zip files.

Fortunately, most modern FTP clients have an automatic setting that can distinguish between the two kinds of files. One unfortunate thing that can happen is downloading image files in ASCII mode, which results in corrupted images.
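If you script the download, the transfer mode becomes explicit instead of depending on the client's automatic setting. The minimal sketch below uses Python's built-in `ftplib`. There, `retrlines()` transfers in ASCII mode and `retrbinary()` transfers in binary mode. The extension list mirrors the rule above, and it is an assumption you should extend for your own site. Anything not on the list, such as fonts or PDFs, falls through to binary mode, which copies bytes unchanged. The host, credentials, and paths in the usage comment are hypothetical.

```python
from ftplib import FTP
from pathlib import PurePosixPath

# Code/text extensions fetched in ASCII mode, per the rule above (extend as needed).
# Everything else, including images, video and zip files, is fetched in binary.
ASCII_EXTENSIONS = {".css", ".js", ".php", ".html", ".htm"}


def transfer_mode(remote_path):
    """Return "ascii" or "binary" for a remote file, based on its extension."""
    suffix = PurePosixPath(remote_path).suffix.lower()
    return "ascii" if suffix in ASCII_EXTENSIONS else "binary"


def download(ftp, remote_path, local_path):
    """Download one file using the mode chosen by transfer_mode()."""
    if transfer_mode(remote_path) == "ascii":
        # retrlines() uses ASCII mode and strips line endings, so add them back.
        with open(local_path, "w", encoding="utf-8", newline="\n") as fh:
            ftp.retrlines(f"RETR {remote_path}", lambda line: fh.write(line + "\n"))
    else:
        # retrbinary() uses binary mode: bytes are written exactly as received.
        with open(local_path, "wb") as fh:
            ftp.retrbinary(f"RETR {remote_path}", fh.write)


# Usage (hypothetical host, credentials and paths):
# with FTP("ftp.example.com") as ftp:
#     ftp.login("user", "password")
#     download(ftp, "/public_html/style.css", "style.css")
#     download(ftp, "/public_html/images/logo.png", "logo.png")
```

`retrlines()` decodes text using the connection's `encoding` attribute. If your code files use a different character encoding, adjust that attribute or fetch those files in binary mode as well.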

So always check that all your files downloaded properly and are not corrupted. If you have hired a third party to handle the migration, or a client is doing it and downloading the files themselves, consider downloading a copy for yourself. That way, if their download fails, you’ll still have an uncorrupted copy backed up.
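One way to check a second copy is to compare checksums. The sketch below walks two backup folders. It reports files that are missing from the second copy and files whose SHA-256 hashes differ between the copies. Matching hashes only prove that the copies agree with each other. If the original download was already corrupted (for example, an image pulled in ASCII mode), both copies will be bad, so you should still open a sample of images and files by hand. `compare_backups` is an illustrative name, and the folder paths are whatever you used.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in 1 MiB chunks so large database dumps don't need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def compare_backups(primary, secondary):
    """Compare two backup folders file by file.

    Returns (missing, mismatched): relative paths absent from `secondary`,
    and relative paths whose contents differ between the two copies.
    """
    primary, secondary = Path(primary), Path(secondary)
    missing, mismatched = [], []
    for src in sorted(p for p in primary.rglob("*") if p.is_file()):
        rel = src.relative_to(primary)
        copy = secondary / rel
        if not copy.is_file():
            missing.append(str(rel))
        elif sha256_of(src) != sha256_of(copy):
            mismatched.append(str(rel))
    return missing, mismatched
```

Two empty lists mean the backup of the backup matches the first copy byte for byte.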

The most important rule about backups: You can never have too many backups!

2. Crawl The Website

Do a complete crawl of the website. Create a backup of the crawl. Then create a backup of the backup and store it on a separate hard drive.

After the site migration, this crawl data can be used to generate a list of the old URLs to crawl, in order to identify any that are missing (404), failing to redirect, or redirecting to the wrong webpage. Screaming Frog has a list mode that can crawl a list of URLs saved in different formats, including an XML sitemap, or entered directly into a text field. This is a way to crawl a specific batch of URLs rather than crawling a site from link to link.
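The check described above can also be scripted for a quick pass alongside a full list-mode crawl. This sketch assumes you have built a hypothetical redirect map from the pre-migration crawl, `{old_url: expected_new_url}`. An old URL that is meant to stay the same maps to itself. `fetch_status()` sends one `HEAD` request without following redirects, which assumes the server answers `HEAD` the same way it answers `GET`. The sketch treats 308 as permanent, like 301. It also does not follow chains, so if the first hop is correct but leads into further redirects, that needs a second check.

```python
import http.client
from urllib.parse import urljoin, urlsplit

PERMANENT = {301, 308}
TEMPORARY = {302, 303, 307}


def fetch_status(url, timeout=10):
    """Send one HEAD request without following redirects; return (status, absolute Location)."""
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.netloc, timeout=timeout)
    else:
        conn = http.client.HTTPConnection(parts.netloc, timeout=timeout)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    try:
        conn.request("HEAD", path)
        resp = conn.getresponse()
        location = resp.getheader("Location")
        # Resolve relative Location headers against the requested URL.
        return resp.status, (urljoin(url, location) if location else None)
    finally:
        conn.close()


def classify(old_url, status, location, expected):
    """Label one old URL: missing, not redirected, wrong target, temporary, or ok."""
    if status == 404:
        return "missing (404)"
    if expected == old_url:  # this URL was meant to stay the same
        return "ok" if status == 200 else f"unexpected status {status}"
    if status not in PERMANENT | TEMPORARY:
        return f"not redirected ({status})"
    if location != expected:
        return f"wrong target: {location}"
    if status in TEMPORARY:
        return f"temporary redirect ({status})"
    return "ok"


def audit(redirect_map):
    """redirect_map: {old_url: expected_new_url} built from the pre-migration crawl."""
    report = {}
    for old_url, expected in redirect_map.items():
        status, location = fetch_status(old_url)
        report[old_url] = classify(old_url, status, location, expected)
    return report
```

Keeping `classify()` separate from the network call makes it easy to reuse the same rules on status codes exported from a crawler.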

3. Tips For Migrating To A New Template

Website redesigns can be a major source of anguish when they go wrong. On paper, migrating a site to a new template should be a one-to-one change with minimal issues. In practice, that’s not always the case. For one, no template can be used off the shelf; it has to be modified to fit what’s needed, which can mean removing and/or altering code.

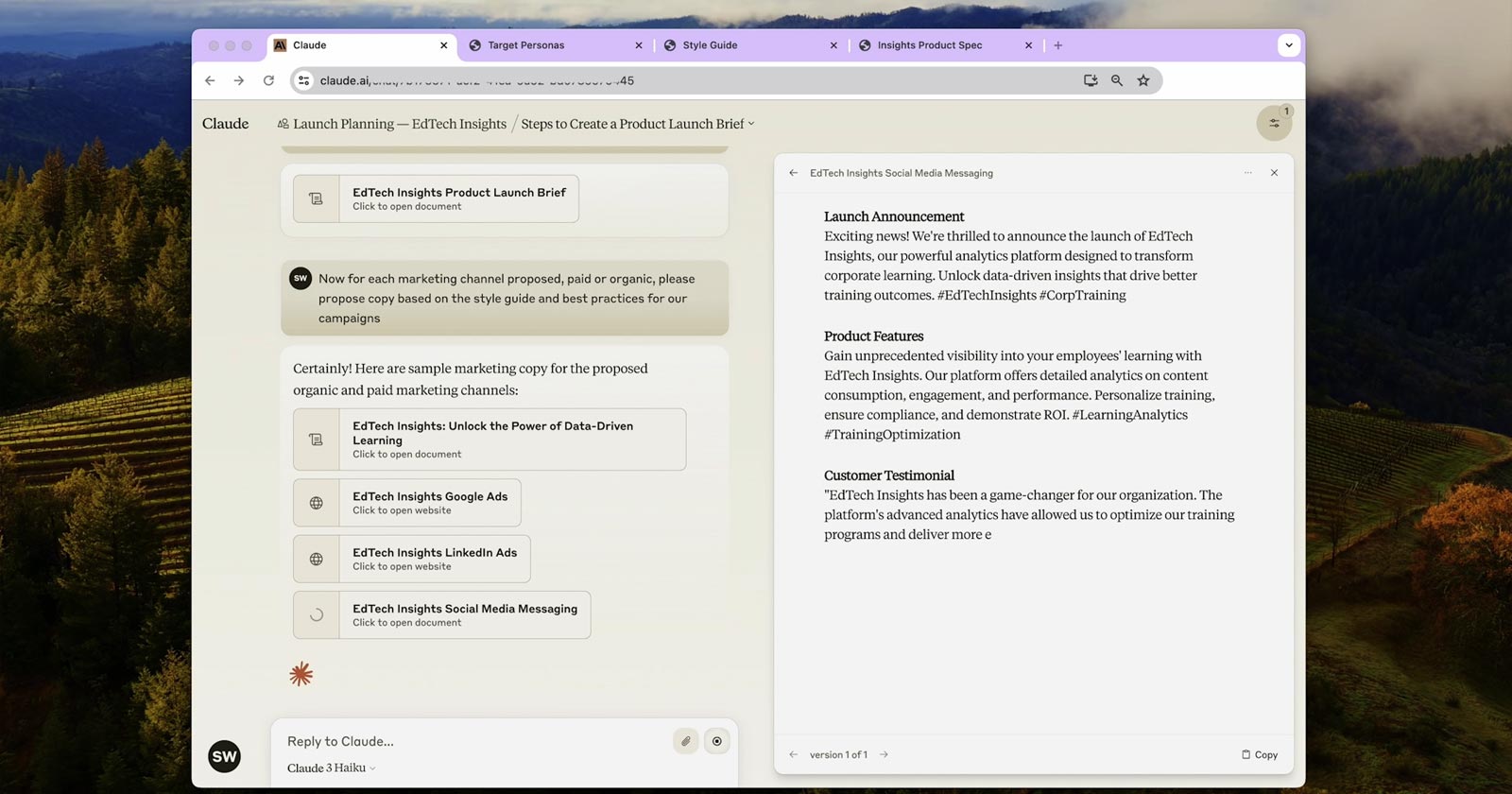

Search marketing expert Nigel Mordaunt (LinkedIn), who recently sold his search marketing agency, has migrated over a hundred sites and shared important considerations for migrating to a new WordPress template.

This is Nigel’s advice:

“Check that all images have the same URL, alt text and image titles, especially if you’re using new images.

Templates sometimes have hard-coded heading elements, especially in the footer and sidebars. Those should be styled with CSS, not with H tags. I had this problem with a template once where the ranks had moved unexpectedly, then found that the Contact Us and other navigation links were all marked up as H2. I think that was more of a problem a few years ago. But still, some themes have H tags hard coded in places that aren’t ideal.

Make sure that all URLs are exactly the same; this is a common mistake. Also, if planning to change content, check that the staging environment has been noindexed, then after the site goes live, make sure that the newly uploaded live site no longer contains the noindex robots meta tag.

If changing content, be prepared for the site to perhaps be re-evaluated by Google. Depending on the size of the site, even if the changes are positive, it may take several weeks to be rewarded, and in some cases several months. The client needs to be informed of this before the migration.

Also, check that analytics and tracking codes have been inserted into the new site, review all image sizes to make sure there are no new images that are huge and haven’t been scaled down. You can easily check the image sizes and heading tags with a post-migration Screaming Frog crawl. I can’t imagine doing any kind of site migration without Screaming Frog.”
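Several of Nigel's checks can be automated for a page you have saved in both its old and new versions. The minimal sketch below uses Python's built-in `html.parser`. It compares image URLs, alt text, and titles, and it flags headings inside `<footer>` or `<aside>` elements. It also looks for a leftover `noindex` robots meta tag and a missing tracking ID. It relies on two assumptions. First, the theme uses semantic `<footer>`/`<aside>` elements; many themes use plain `<div>`s instead, and this sketch won't see those. Second, the tracking ID appears in the static HTML rather than being injected by a tag manager. `G-TEST123` is a placeholder ID.

```python
from html.parser import HTMLParser

HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class PageAudit(HTMLParser):
    """Collect image attributes, robots noindex, and headings inside footer/aside."""

    def __init__(self):
        super().__init__()
        self.images = {}           # src -> (alt, title)
        self.noindex = False       # True if a robots meta tag contains "noindex"
        self.region_headings = []  # (tag, text) found inside <footer> or <aside>
        self._region_depth = 0     # > 0 while inside <footer> or <aside>
        self._heading = None       # heading tag currently open inside a region
        self._text = []

    def handle_starttag(self, tag, attrs):
        a = {name: value or "" for name, value in attrs}
        if tag in ("footer", "aside"):
            self._region_depth += 1
        elif tag == "img" and a.get("src"):
            self.images[a["src"]] = (a.get("alt", ""), a.get("title", ""))
        elif tag == "meta" and a.get("name", "").lower() == "robots":
            self.noindex = self.noindex or "noindex" in a.get("content", "").lower()
        elif tag in HEADINGS and self._region_depth:
            self._heading, self._text = tag, []

    def handle_endtag(self, tag):
        if tag in ("footer", "aside") and self._region_depth:
            self._region_depth -= 1
        elif tag == self._heading:
            self.region_headings.append((tag, "".join(self._text).strip()))
            self._heading = None

    def handle_data(self, data):
        if self._heading:
            self._text.append(data)


def audit(old_html, new_html, tracking_id):
    """Return a list of problems found when comparing old and new versions of a page."""
    old, new = PageAudit(), PageAudit()
    old.feed(old_html)
    new.feed(new_html)
    problems = []
    for src, attrs in old.images.items():
        if src not in new.images:
            problems.append(f"image missing or URL changed: {src}")
        elif new.images[src] != attrs:
            problems.append(f"alt/title changed: {src}")
    for tag, text in new.region_headings:
        problems.append(f"{tag} in footer/sidebar: {text}")
    if new.noindex:
        problems.append("robots meta noindex still present")
    if tracking_id not in new_html:
        problems.append(f"tracking ID {tracking_id} not found")
    return problems
```

This complements the post-migration crawl rather than replacing it. A crawler still does the work of fetching every page, and it also reports image file sizes, which this sketch does not check.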

4. Advice For Migrating To A New Web Host

Mark Barrera (LinkedIn), VP SEO, Newfold Digital (parent company of Bluehost), shared this advice on crawling and other preparation for a migration to a new web host:

“Thoroughly crawl your existing site to identify any indexing or technical SEO issues prior to the move.

Maintain URL Structure (If Possible): Changing URL structures can confuse search engines and damage your link equity. If possible, keep your URLs the same.

301 Redirects: 301 Redirects are your friend. Search engines need to be informed that your old content now lives at a new address. Implementing 301 redirects from any old URLs to their new counterparts preserves link equity and avoids 404 errors for both users and search engine crawlers.

Performance Optimization: Ensure your new host provides a fast and reliable experience. Site speed is important for user experience.

Be sure to do a final walkthrough of your new site before doing your actual cutover. Visually double-check your homepage, any landing pages, and your most popular search hits. Review any checkout/cart flows, comment/review chains, images, and any outbound links to your other sites or your partners.

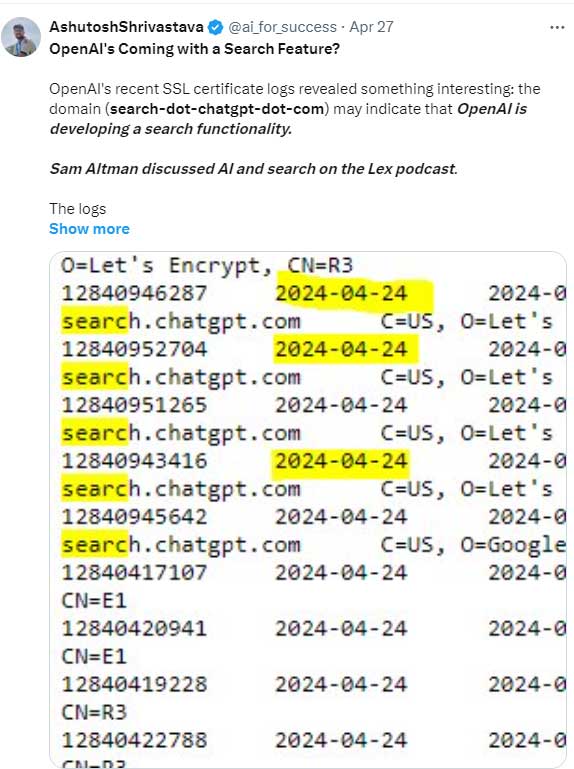

SSL Certificate: A critical but sometimes neglected aspect of hosting migrations is the SSL certificate setup. Ensuring that your new host supports and correctly implements your existing SSL certificate, or provides a new one without causing errors, is vital. SSL/TLS not only secures your site but also impacts SEO. Any misconfiguration during migration can lead to browser warnings, which deter visitors and can temporarily impact rankings.

Post-migration, it’s crucial to benchmark server response times not just from one location, but regionally or globally, especially if your audience is international. Sometimes, a new hosting platform might show great performance in one area but lag in other parts of the world. Such discrepancies can affect page load times, influencing bounce rates and search rankings.”
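Two of Mark's points, the SSL certificate and regional response times, lend themselves to a quick script. This sketch uses Python's standard library. `check_certificate()` performs a TLS handshake with the default context. That context verifies the certificate chain and checks that the certificate matches the hostname, raising `ssl.SSLCertVerificationError` otherwise. If the handshake succeeds, the function returns the days left before expiry. `time_to_first_byte()` gives rough timings that include DNS, connection, and TLS setup. It measures only from the machine it runs on, so the assumption is that you run it from servers in each region you care about and compare the results with `compare_regions()`. The hostnames and region names are hypothetical.

```python
import http.client
import socket
import ssl
import statistics
import time
from urllib.parse import urlsplit


def cert_days_left(not_after, now=None):
    """Days until expiry, given the 'notAfter' string returned by getpeercert()."""
    expires = ssl.cert_time_to_seconds(not_after)
    now = time.time() if now is None else now
    return (expires - now) / 86400


def check_certificate(host, port=443, timeout=10):
    """Verify the certificate chain and hostname, then return days until expiry.

    Raises ssl.SSLCertVerificationError if the certificate is invalid,
    expired, or issued for a different hostname.
    """
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            cert = tls.getpeercert()
    return cert_days_left(cert["notAfter"])


def time_to_first_byte(url, samples=5, timeout=10):
    """Rough milliseconds until response headers arrive, one fresh connection per sample."""
    parts = urlsplit(url)
    timings = []
    for _ in range(samples):
        conn = http.client.HTTPSConnection(parts.netloc, timeout=timeout)
        try:
            start = time.perf_counter()
            conn.request("GET", parts.path or "/")
            conn.getresponse()  # returns once the status line and headers are read
            timings.append((time.perf_counter() - start) * 1000)
        finally:
            conn.close()
    return timings


def compare_regions(results):
    """results: {region: [ms, ...]}. Returns (region, median_ms) pairs, slowest first."""
    medians = [(region, statistics.median(ms)) for region, ms in results.items()]
    return sorted(medians, key=lambda pair: pair[1], reverse=True)


# Usage (hypothetical host and region label):
# print(check_certificate("www.example.com"))
# print(compare_regions({"us-east": time_to_first_byte("https://www.example.com/")}))
```

The sketch uses the median rather than the mean so that a single slow sample doesn't dominate. A region that sits well above the others is the kind of discrepancy Mark describes.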

5. Accept Limitations

Ethan Lazuk, SEO Strategist & Consultant, Ethan Lazuk Consulting, LLC, (LinkedIn, Twitter) offers an interesting perspective on site migrations: anticipating the limitations a client may impose on what you are able to do. It can be frustrating when a client pushes back on advice, but it’s important to listen to their reasons for doing so.

I have consulted over Zoom with companies whose SEO departments had concerns about what an external SEO wanted to do. Seeking third-party confirmation of a site migration plan is a reasonable thing to do, so if the internal SEO department has concerns about the plan, it’s not a bad idea to have a trustworthy third party take a look at it.

Ethan shared his experience:

“The most memorable and challenging site migrations I’ve been a part of involved business decisions that I had no control over.

As SEOs, we can create a smart migration plan. We can follow pre- and post-launch checklists, but sometimes, there are legal restrictions or other business realities behind the scenes that we have to work around.

Not having access to a DNS, being restricted from using a brand’s name or certain content, having to use an intermediate domain, and having to work days, weeks, or months afterward to resolve any issues once the internal business situations have changed are just a few of the tricky migration issues I’ve encountered.

The best way to handle situations that require working around client restrictions is to button up the SEO tasks you can control, set honest expectations for how the business issues could impact performance after the migration, and stay vigilant with monitoring post-launch data and using it to advocate for the resources you need to finish the job.”

Different Ways To Migrate A Website

Site migrations are a pain and should be approached with caution. I’ve done many different kinds of migrations for myself and have assisted clients with theirs. I’m currently moving thousands of webpages from a folder to the root, and it’s complicated by multiple redirects that have to be reconfigured. I’m not looking forward to it. But migrations are sometimes unavoidable, so it’s best to step up to them after careful consideration.
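In a folder-to-root move like the one described above, the tricky part is that old redirects pointing into the folder must now point at the root without creating redirect chains. The minimal sketch below rests on two assumptions. First, redirects are kept as a simple path-to-path map. Second, the site runs Apache with mod_alias, where each `RedirectMatch` rule is anchored so that it matches exactly one path. `rebuild()`, `flatten()`, and the `blog` folder name are hypothetical placeholders. Other servers and CMS redirect plugins need the same flattened map in their own format.

```python
import re


def move_to_root(path, folder):
    """Map /folder/page/ to /page/; paths outside the folder are returned unchanged."""
    prefix = "/" + folder.strip("/") + "/"
    return "/" + path[len(prefix):] if path.startswith(prefix) else path


def flatten(redirects, max_hops=10):
    """Point every source straight at its final destination so no chains remain.

    `redirects` maps source path -> target path. Raises ValueError on a loop
    or on a chain longer than `max_hops`.
    """
    flat = {}
    for source, target in redirects.items():
        hops = 0
        while target in redirects:  # the target itself redirects: follow it
            target = redirects[target]
            hops += 1
            if target == source or hops > max_hops:
                raise ValueError(f"redirect loop or long chain starting at {source}")
        flat[source] = target
    return flat


def rebuild(existing, folder_pages, folder):
    """Merge existing redirects with new folder-to-root moves, then flatten."""
    combined = dict(existing)
    for page in folder_pages:
        combined[page] = move_to_root(page, folder)
    return flatten(combined)


def apache_rules(flat):
    """Render one anchored mod_alias RedirectMatch rule per source path."""
    return [f"RedirectMatch 301 ^{re.escape(src)}$ {dst}" for src, dst in sorted(flat.items())]
```

With the map flattened before any rules are written, every old URL reaches its final page in one hop. The `ValueError` also surfaces redirect loops before they reach the live site.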

Featured Image by Shutterstock/Krakenimages.com