Google Ads Introduces Generative AI Tools For Demand Gen Campaigns via @sejournal, @MattGSouthern

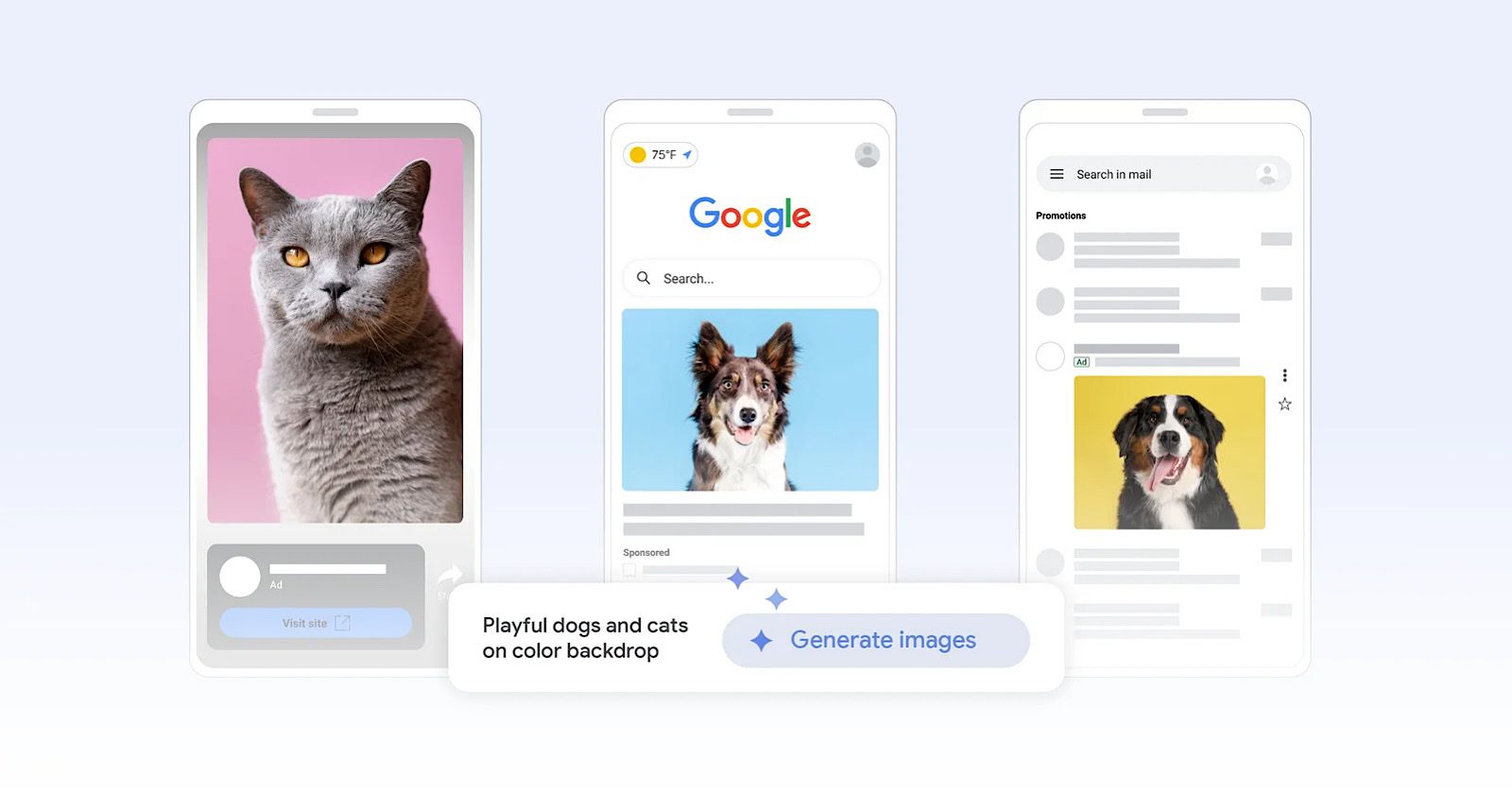

Google announced the rollout of new generative AI capabilities for Demand Gen campaigns in Google Ads today.

The tools, powered by Google’s artificial intelligence, will allow advertisers to create high-quality image assets using text prompts in just a few steps.

The AI-powered creative features aim to enhance visual storytelling to help brands generate new demand across Google’s platforms, including YouTube, YouTube Shorts, Discover, and Gmail.

Michael Levinson, Vice President and General Manager of Social, Local, and Vertical Ads at Google, stated in the announcement:

“Advertisers need to diversify their creative strategy with multi-format ads to keep audiences engaged and deliver results. With generative image tools, you can now test new creative concepts more efficiently – whether it’s experimenting with new types of images or simply building your creatives from scratch.”

How It Works

Starting today, the generative image tools are rolling out globally to advertisers in English, with more languages coming later this year.

Advertisers can provide text prompts to generate original, high-quality images tailored to their branding and marketing needs.

For example, an outdoor lifestyle brand selling camping gear could use a prompt like “vibrantly colored tents illuminated under the Aurora Borealis” to create engaging visuals targeting customers interested in camping trips to Iceland.

Additionally, a “generate more like this” feature allows advertisers to generate new images inspired by their existing high-performing assets.

Responsible AI Development

In the announcement, Google emphasized its commitment to developing generative AI technology responsibly, with principles in place for fairness, privacy, and security.

The company states:

“On top of making sure advertising content adheres to our long-standing Google Ads policies, we also employ additional technical measures to ensure generative image tools in Google Ads produce novel and unique content. Google AI will never create two identical images.”

All generated images will include identifiable markings, such as an open-standard markup and an invisible digital watermark resistant to manipulations like screenshots and filters.

Creative Best Practices

To accompany the new AI tools, Google released a “Creative Excellence Guide” with the following best practices for building Demand Gen campaigns:

- Use a combination of videos and images to engage audiences at different stages of the buyer journey.

- Provide Google with a variety of assets in different aspect ratios to maximize reach across inventory.

- Utilize high-quality, high-resolution visuals to build brand trust and inspire action.

- Adopt a test-and-learn strategy, evaluating performance metrics to optimize creatives.

Why SEJ Cares

Generative AI for Demand Gen campaigns represents a potentially valuable new capability for Google Ads advertisers.

By leveraging AI to streamline creative production, brands can experiment with a broader array of visual concepts.

This positions them to better engage audiences in Google’s premium ad environments, such as YouTube, Discover, and Gmail.

How This Can Help You

For brands, generative AI tools open up new creative possibilities with a level of image sophistication that was previously time- and resource-intensive to produce.

The ability to iterate rapidly on visual ideas can lead to more impactful ad creative.

Small businesses and agencies operating with leaner teams can now create a high volume of diverse, on-brand image assets with minimal design resources.

Additionally, the “generate more like this” functionality allows advertisers to expand on existing assets while maintaining a consistent look and feel.

However, brands need to approach this technology responsibly and strategically.

While Google has implemented safeguards, advertisers should still apply human oversight, creativity, and brand governance when using AI-generated assets.

Featured Image: blog.google/products/ads-commerce/, April 2024.