Google Expands iOS App Marketing Capabilities via @sejournal, @brookeosmundson

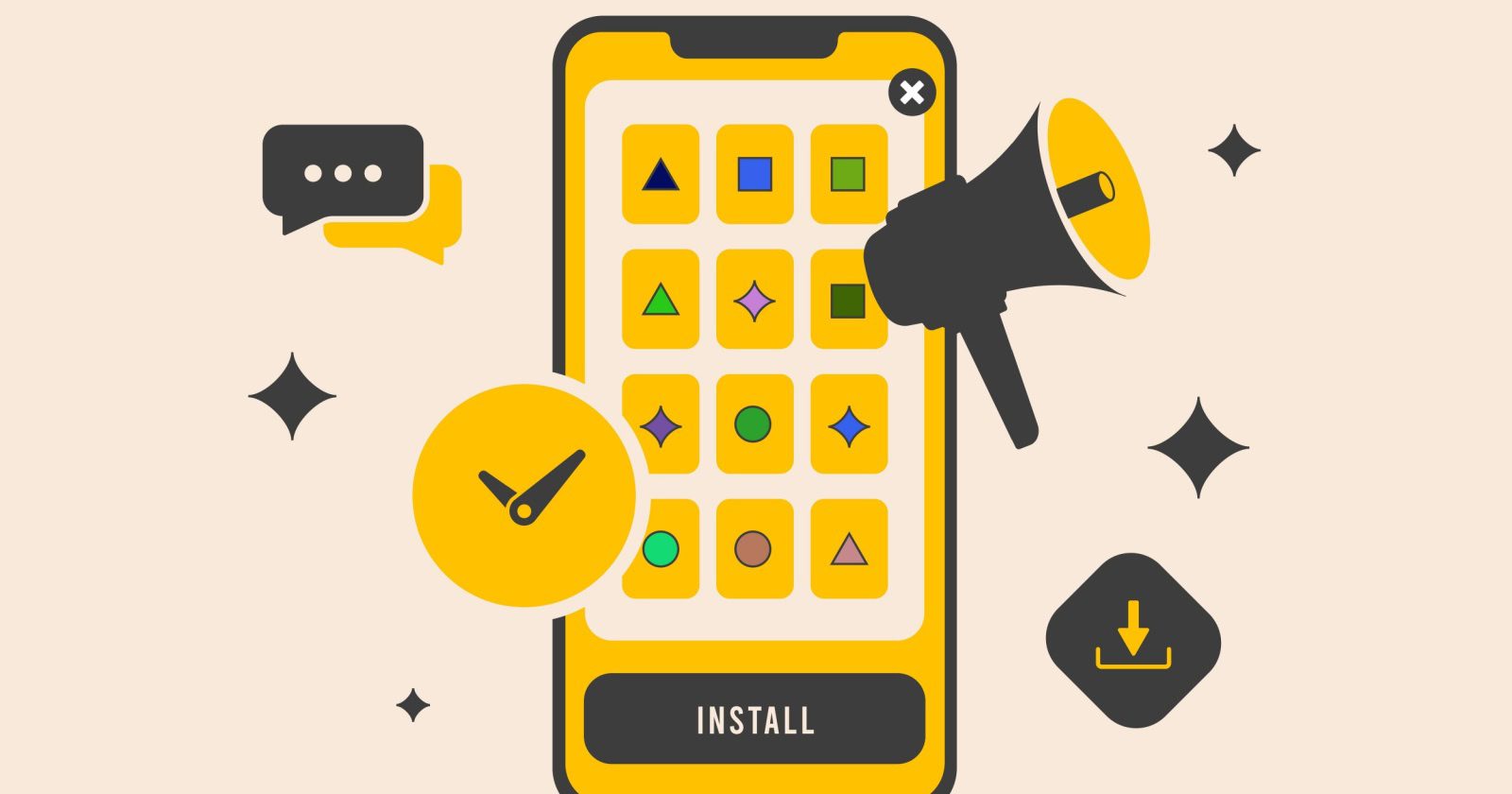

Running iOS app campaigns in Google has never been straightforward. Between Apple’s privacy changes and evolving user behavior, marketers have often felt like they were working with one hand tied behind their backs.

Measurement was limited, signals were weaker, and getting campaigns to scale often required more guesswork than strategy.

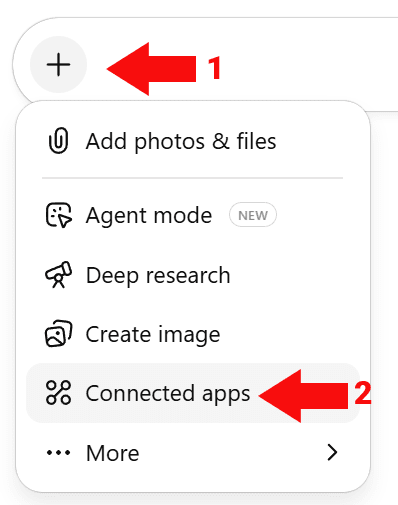

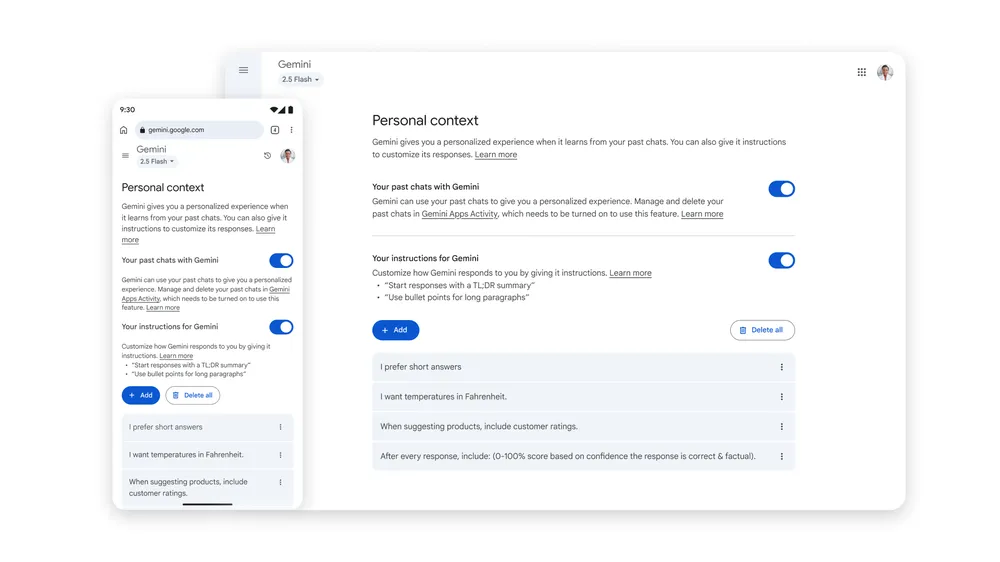

Google Ads Liaison Ginny Marvin took to LinkedIn to announce numerous updates to iOS App Install campaigns.

Google is now making changes to help advertisers navigate this space more confidently. Its latest updates to iOS App Install campaigns are designed to give marketers a stronger mix of creative options, smarter bidding tools, and privacy-respecting measurement features.

While these changes won’t solve every iOS challenge overnight, they do mark a meaningful shift in how advertisers can approach growth on one of the world’s largest mobile ecosystems.

New Ad Formats Bring More Creative Opportunities

One of the biggest updates is the addition of new creative formats designed to improve engagement and give users a clearer picture of an app before they download.

Google is expanding support for co-branded YouTube ads, which integrate creator-driven content directly into placements like YouTube Shorts and in-feed ads.

For advertisers, it’s an opportunity to lean into the authenticity of creator-style ads, which often resonate more strongly than traditional branded spots.

Playable end cards are also being introduced across select AdMob inventory. After watching an ad, users can now interact with a lightweight, playable demo of the app.

Think of it as a “try before you buy” moment: users get a quick preview of the experience, which can lead to higher-quality installs.

For app marketers, this shift matters because it aligns user expectations with actual in-app experiences. The closer someone feels to your product before downloading, the less risk you face with churn or low-value installs.

Both of these creative updates point to a broader trend: ads are becoming less static and more interactive. That’s particularly important on iOS, where advertisers need every edge they can get to capture attention in environments where tracking is constrained.

Target ROAS Bidding Now Available for iOS

Another cornerstone of this announcement is Google’s expansion of value-based bidding on iOS.

Target ROAS (tROAS), a bidding strategy that optimizes for return on ad spend rather than raw install volume, is now fully supported.

This is especially valuable for apps with monetization models that vary widely across users, such as subscription services or in-app purchase businesses. Instead of paying equally for every install, advertisers can now direct spend toward users more likely to generate meaningful revenue.
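To make the arithmetic behind value-based bidding concrete: ROAS is revenue divided by ad spend. The sketch below is illustrative only. The cohort names and figures are made up, and it does not represent how Google's bidding system works internally. It simply shows why the cheapest installs are not always the most valuable ones.

```python
from dataclasses import dataclass


@dataclass
class Cohort:
    name: str
    spend: float     # ad spend in dollars (hypothetical figure)
    installs: int    # installs attributed to that spend (hypothetical figure)
    revenue: float   # revenue from those installs (hypothetical figure)


def cost_per_install(c: Cohort) -> float:
    """Spend divided by installs: the metric install-volume bidding chases."""
    return c.spend / c.installs


def roas(c: Cohort) -> float:
    """Return on ad spend: revenue divided by spend."""
    return c.revenue / c.spend


# Two made-up cohorts with identical spend but very different user value.
cohorts = [
    Cohort("casual users", spend=1000.0, installs=500, revenue=400.0),
    Cohort("subscribers", spend=1000.0, installs=200, revenue=2500.0),
]

for c in cohorts:
    print(f"{c.name}: CPI ${cost_per_install(c):.2f}, ROAS {roas(c):.0%}")
```

In this made-up example, the casual cohort looks better on cost per install ($2.00 versus $5.00), but the subscriber cohort returns 250% of spend versus 40%. A tROAS target steers budget toward the second kind of user.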

Beyond tROAS, Google is also expanding the “Maximize Conversions” strategy for iOS. This allows campaigns to optimize not just for installs, but for deeper in-app actions.

By leaning into Google’s AI-driven modeling, advertisers can let the system identify where budget should be allocated to maximize results within daily spend limits.

The takeaway here is simple: volume still matters, but value matters more. With these updates, Google is nudging app marketers away from chasing installs at any cost and toward optimizing for users who truly drive long-term impact.

Measurement That Balances Privacy and Clarity

Perhaps the most challenging part of iOS advertising has been measurement.

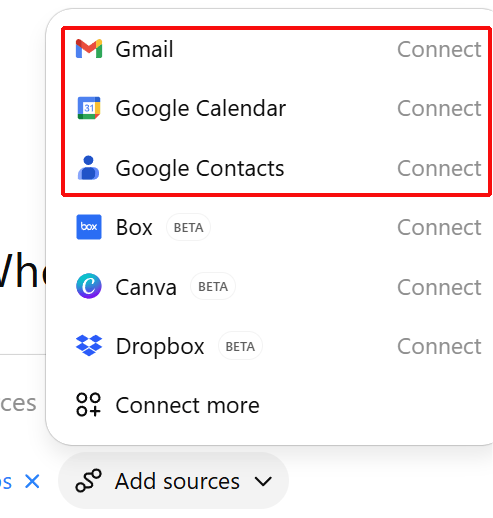

Apple’s App Tracking Transparency framework made it harder to follow users across apps and websites, limiting the signals available for campaign optimization. Google’s new measurement updates are designed to give advertisers more clarity without crossing privacy lines.

On-device conversion measurement is one of the most notable additions. Rather than sending user-level data back to servers, this approach processes performance signals directly on the device.

This means advertisers can still see which campaigns are working, but without compromising privacy. Importantly, it also reduces latency in reporting, helping marketers make faster decisions.

Integrated conversion measurement (ICM) is another feature being pushed forward. This approach works through app attribution partners (AAPs), giving advertisers cleaner, closer to real-time data about installs and post-install actions.

Taken together, these tools signal a future where privacy and measurement don’t have to be opposing forces. Instead, advertisers can get the insights they need while users retain more control over their data.

How App Marketers Can Take Advantage

These updates aren’t plug-and-play; they require testing and adaptation.

For most advertisers, the best starting point is experimenting with the new ad formats. Running a co-branded YouTube ad or a playable end card alongside your existing creative can help you see whether engagement and conversion quality improve.

These tests don’t need to be massive, but they should be deliberate enough to give you actionable learnings.

For bidding, marketers should look closely at whether tROAS makes sense for their business model.

If your app has a clear monetization strategy and meaningful differences in user value, tROAS could be a game-changer. Start conservatively with your targets, give the algorithm time to learn, and refine based on observed performance.

On the measurement side, now is the time to talk to your developers and attribution partners about what it would take to implement on-device conversion tracking or ICM. These solutions may involve technical lift, but the payoff is improved data quality in an environment where every signal counts.

It’s also worth noting that these changes won’t transform campaigns overnight. Smart bidding models and new measurement frameworks take time to stabilize, and the impact of new formats might not show up in the first week of a test.

Patience, consistency, and a focus on week-over-week trends are key.
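One simple way to keep the focus on trends rather than daily noise is to compute the percent change between consecutive weekly totals. The following is a minimal sketch with made-up weekly ROAS values; the function name and the data are hypothetical and not tied to any Google Ads report format.

```python
def week_over_week(values):
    """Percent change between each consecutive pair of weekly values."""
    return [
        (curr - prev) / prev * 100
        for prev, curr in zip(values, values[1:])
    ]


# Hypothetical weekly ROAS for the first four weeks of a test.
weekly_roas = [0.80, 0.90, 1.08, 1.08]

changes = week_over_week(weekly_roas)
for week, change in enumerate(changes, start=2):
    print(f"Week {week} vs. week {week - 1}: {change:+.1f}%")
```

Reading a series like this over several weeks gives a steadier picture than reacting to any single day while bidding models are still learning.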

Looking Ahead

Google’s latest iOS updates don’t eliminate the complexities of app marketing, but they do give advertisers sharper tools to work with. From more engaging ad formats to value-based bidding and privacy-first measurement, the changes represent progress in a space that’s been difficult to navigate.

The message for marketers is clear: start testing, invest in measurement infrastructure, and don’t let short-term results cloud the bigger picture.

With the right approach, these updates can help shift iOS campaigns from a defensive play into an opportunity for real growth.