Google Ads has announced a major update to Search campaigns. The new AI Max campaign setting will roll out globally in beta starting later this month.

Per Google’s announcement, advertisers who enable AI Max in their Search campaigns can expect stronger performance through improved query matching, dynamic creative, and better control features.

According to Google, early testing shows advertisers see an average 14% more conversions or conversion value at a similar CPA or ROAS. Campaigns still using mostly exact or phrase match keywords see even greater uplifts, around 27%.
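To put those percentages in concrete terms, here is a minimal Python sketch of the arithmetic. The baseline figures (200 conversions at a $50 CPA) and the function name `project_uplift` are hypothetical, chosen only for illustration. The 14% and 27% uplifts are the averages Google reports, not a forecast for any given account. One thing the sketch makes visible: if CPA stays roughly flat while conversions rise, spend rises by about the same percentage.

```python
def project_uplift(baseline_conversions: float, baseline_cpa: float, uplift: float) -> dict:
    """Project conversions and spend if conversions rise by `uplift` at an unchanged CPA."""
    conversions = baseline_conversions * (1 + uplift)
    spend = conversions * baseline_cpa  # CPA = spend / conversions
    baseline_spend = baseline_conversions * baseline_cpa
    return {
        "conversions": round(conversions, 2),
        "spend": round(spend, 2),
        "extra_spend": round(spend - baseline_spend, 2),
    }


# Hypothetical baseline: 200 conversions per month at a $50 CPA.
average_case = project_uplift(200, 50.0, 0.14)       # Google's reported average uplift
exact_phrase_case = project_uplift(200, 50.0, 0.27)  # reported uplift for mostly exact/phrase campaigns

print(average_case)
print(exact_phrase_case)
```

Under these assumed inputs, the extra conversions are not free: the budget has to be able to absorb the additional spend for the uplift to materialize.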

This update follows months of closed beta testing, with large brands already reporting positive results.

Let’s take a deeper look at what AI Max brings and why it matters to paid search marketers.

What is AI Max for Search Campaigns?

If you’ve been hearing the term “Search Max” in the wild lately, the official name for it is AI Max for Search.

AI Max is not a new campaign type. Instead, it’s a one-click upgrade available within existing Search campaign settings.

Once activated, it layers in three core enhancements:

- Search term matching: Uses AI to extend keyword matching into relevant, high-performing queries your current keywords might miss.

- Text customization: Rebrands the former Automatically Created Assets (ACA) tool. Dynamically generates new headlines and descriptions based on your landing pages, existing ads, and keywords.

- Final URL expansion: Sends users to the most relevant pages on your site based on query intent.

Advertisers can opt out of text customization or final URL expansion at the campaign level, and opt out of search term matching at the ad group level. However, Google recommends using all three together for maximum performance.

AI Max is designed to complement, not replace, keyword match types. If a user’s search exactly matches a keyword in your campaign, that will always take priority.
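As a rough mental model of that priority rule, here is a toy Python sketch. It is not Google's matching logic: the function `pick_match_source`, the labels it returns, and the simple lowercase-and-trim normalization are all assumptions made for illustration. Real matching happens inside Google's auction and also involves phrase and broad match, which this sketch does not model.

```python
def normalize(text: str) -> str:
    """Lowercase and collapse whitespace (an assumed, simplified normalization)."""
    return " ".join(text.lower().split())


def pick_match_source(query: str, exact_keywords: set[str], ai_max_enabled: bool) -> str:
    """Return a hypothetical label for what would serve the query in this toy model."""
    normalized_query = normalize(query)
    # An identical keyword in the campaign takes priority over AI Max expansion.
    if normalized_query in {normalize(keyword) for keyword in exact_keywords}:
        return "exact_keyword"
    # Otherwise AI Max search term matching may pick up the query, if enabled.
    if ai_max_enabled:
        return "ai_max_search_term_matching"
    # Phrase and broad match are outside the scope of this sketch.
    return "other_match_types_or_none"


keywords = {"running shoes", "trail running shoes"}
print(pick_match_source("Running  Shoes", keywords, ai_max_enabled=True))
print(pick_match_source("best shoes for muddy trails", keywords, ai_max_enabled=True))
```

In this toy model, the first query resolves to the existing keyword and only the second, which no keyword covers, falls through to AI Max.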

Why is Google Introducing AI Max?

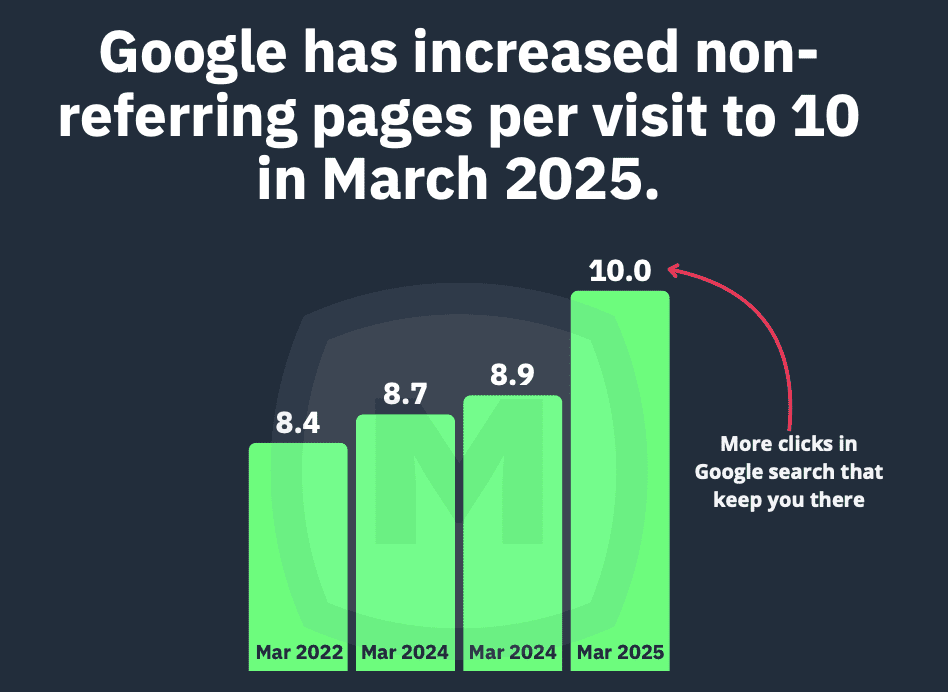

Search behavior is changing fast. As Google integrates more AI-powered experiences like AI Overviews and Google Lens into Search, people are using more complex, conversational, and even visual queries.

Advertisers have also voiced concerns about losing transparency and control as campaign automation expands.

AI Max aims to address both.

- Advertisers keep access to existing Search reports and controls while layering in new targeting and creative tools.

- More granular reporting is rolling out, including search terms by asset and improved URL parameters for detailed tracking.

Essentially, it’s Google’s answer to increasing demand for flexible automation, but with guardrails in place for marketers.

Are There Controls For Brand Safety?

Google added several controls to address a frequent advertiser concern: automation overreaching into irrelevant or risky placements.

Here’s what’s included with the AI Max for Search rollout:

- Brand controls: Choose which brands your ads appear alongside (or exclude specific brands).

- Location of interest controls: Target based on user geo intent at the ad group level (great for multi-location businesses).

- Creative asset controls: Remove generated assets or block them entirely if they don’t meet brand guidelines.

One note of caution: as of now, AI-generated assets will go live before advertisers have the chance to review them.

Advertisers will need to monitor and react quickly to any compliance issues.

Are There Updates Coming to Reporting?

While AI Max integrates into existing Search reporting, it also adds new insights:

- Search terms reporting will now show associated headlines and URLs.

- Asset reports will measure performance not just by impressions, but by spend and conversions.

- A new URL parameter will offer deeper visibility into search queries and performance across match types.

These reporting improvements will start in the Google Ads online interface as the feature rolls out.

Support for API, Report Editor, and Desktop Editor access is slated for later in 2025.

How Does AI Max Compare to Performance Max or Dynamic Search Ads?

Many marketers are asking how AI Max fits alongside other Google campaign types.

Here’s how AI Max currently differs from, or overlaps with, other campaign types and features:

- Performance Max and AI Max for Search may be eligible for the same Search auctions. However, if a user’s search query exactly matches a keyword in your Search campaign, Search will always take priority.

- Dynamic Search Ads (DSA) remain available. AI Max is not a direct replacement, though it does overlap in some areas like final URL expansion and keywordless matching.

- Optimized Targeting for audiences could be seen as a similar concept to AI Max’s query expansion, but applied to audiences rather than keywords.

Additionally, AI Max for Search can be A/B tested against traditional Search setups using drafts and experiments. More customized testing tools are in development.

Who is AI Max Not Ideal For?

While AI Max offers clear benefits worth testing, this new setting may not suit every advertiser or vertical.

If you’re an advertiser or brand in any of the following scenarios, I’d recommend caution when testing AI Max for Search.

- Advertisers with strict creative guidelines or sensitive content policies.

- Brands needing pinning for ad assets (since final URL expansion does not support pinning).

- Businesses with websites that change frequently, making automated creative risky or inaccurate.

For industries like legal or healthcare, where lead quality and content compliance are crucial, AI Max may require careful testing before wide adoption.

What This Means for Search Marketers

AI Max represents a significant shift in how Google Search campaigns can scale.

It brings the adaptive reach and creative flexibility of Performance Max without requiring a new campaign type or sacrificing keyword control.

For advertisers already embracing broad match and automated bidding, AI Max may feel like a natural progression.

For those still relying on exact and phrase match keywords, it offers an opportunity to expand cautiously while maintaining key controls.

The rollout also signals Google’s direction: automation will continue to evolve, but advertiser input and oversight remain essential.

Marketers who test AI Max thoughtfully by balancing automation with strategy are likely to gain a competitive edge as search behavior grows more complex.