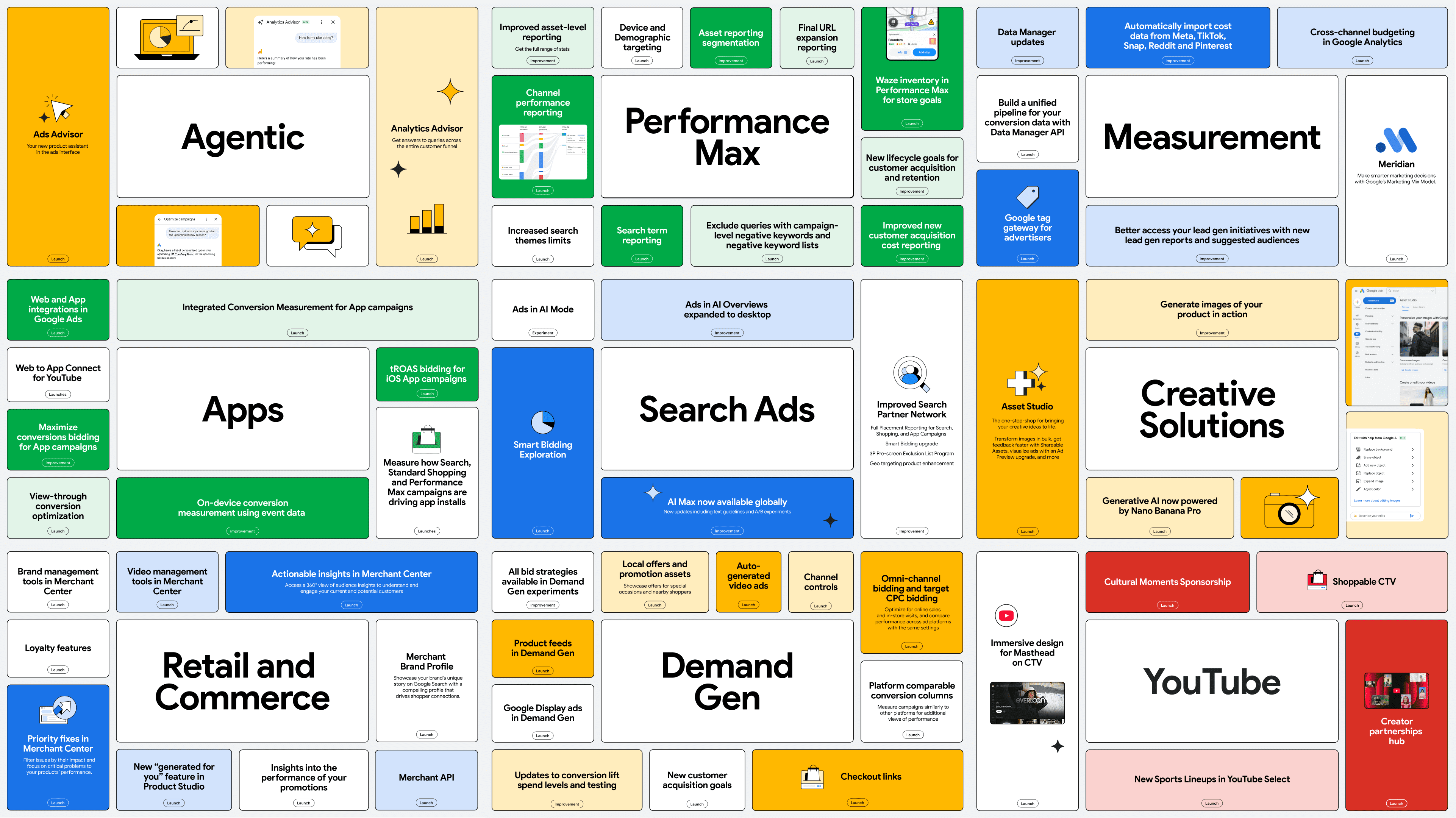

As December comes to a close, Google has released its 2025 Year in Review, a thorough list of product launches, upgrades, and improvements, all driven by AI.

These updates showed up across the board in Search, YouTube, Demand Gen, Performance Max, Merchant Center, and more.

Some updates felt like natural progressions from earlier releases. Others pushed Google’s vision for a more automated, more visual, and more data-informed ad system into clearer view.

For PPC managers and directors who spent the year testing generative AI, adjusting to new reporting controls, and rethinking creative workflows, Google’s recap is a useful way to understand what actually shaped paid media in 2025 and what still needs refinement.

The Biggest Releases of 2025

Before breaking down the themes and implications, here is a snapshot of the major updates Google highlighted in its year-end recap:

- Ads in AI Overviews expanded to desktop and new global markets

- AI Mode opened new mid-funnel inventory for deeper conversational queries

- AI Max for Search launched, with new beta features slated for Q1 2026

- Smart Bidding Exploration allowed for flexible ROAS targets

- Full placement reporting expanded across the Search Partner Network

- YouTube released Shoppable CTV, Cultural Moments Sponsorships, new sports lineups, and a creator partnerships hub

- Demand Gen added product feeds, target CPC bidding, campaign-level experiments, and channel controls

- PMax gained channel-level reporting, full search terms reporting, asset-level metrics, negative keyword lists, device targeting, and expanded search themes

- App campaigns improved iOS measurement, Web-to-App flows, ROAS bidding, and conversion modeling

- Merchant Center gained brand profiles, AI-powered visuals, loyalty tools, and priority fixes

- Meridian introduced an open-source marketing mix modeling (MMM) approach with lower lift thresholds

- Data Manager and Google tag gateway made data accuracy and consolidation easier

- Asset Studio launched inside Google Ads with Nano Banana Pro powering image and video creation

- Ads Advisor and Analytics Advisor delivered guided support for campaign building and analysis

Taken together, these updates show Google’s ongoing effort to blend automation with advertiser control, though some areas are maturing faster than others.

Below is a closer look at the key updates worth digging into.

How Google Repositioned Search for the Next Era

Google spent much of 2025 redefining how Search works, particularly around discovery moments and conversational intent. These shifts matter because they determine where ads can appear and how early advertisers can influence a buying journey.

Ads in AI Overviews

Google expanded Ads in AI Overviews to desktop and to additional global markets. This placement sits inside AI-generated summaries and gives advertisers a chance to appear before users have clicked on a traditional search result. Although Ads in AI Overviews was announced earlier in the year, it wasn't until later in 2025 that users began sharing screenshots of them in the wild.

AI Mode

Still in testing, AI Mode answers multi-step or nuanced queries with structured responses. Google now allows ads to appear below and within these responses when relevant. These moments previously had no paid inventory, so this is a new mid-funnel opportunity for advertisers who want to influence complex decision-making.

AI Max for Search

AI Max extended its feature set and remains one of Google’s fastest-growing Search products. Experiments, creative guidelines, and text customization give advertisers more agency over AI-generated assets. The challenge is managing expectations. AI Max simplifies setup but still requires strategic human oversight to shape relevance and cost efficiency.

Smart Bidding Exploration

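Smart Bidding Exploration lets bidding work with a more flexible ROAS target, so it can reach queries that a strict target would pass over. Google cited an average 18 percent increase in unique converting query categories and a 19 percent conversion lift when advertisers used flexible ROAS targets. For brands that struggle to expand reach without overspending, this may become one of the most practical levers in 2026.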

YouTube and Demand Gen Continued Their Growth Spurt

YouTube delivered some of Google’s most impactful upgrades this year. Shoppable CTV allows viewers to browse products directly on the big screen or pass the experience to their phone.

Cultural Moments Sponsorships created a packaged approach for brands that want presence during tentpole events. With new sports lineups across college and women’s leagues, Google is betting heavily on live and fandom-driven environments.

Demand Gen also saw meaningful improvement. Google credited more than 60 AI-powered enhancements with a 26 percent increase in conversions per dollar.

Combined with product feeds, channel controls, and full compatibility with Custom Experiments, Demand Gen now feels like a maturing format rather than an experimental successor to Discovery.

Performance Max Became More Transparent and More Controllable

Performance Max received a set of long overdue reporting and control features that changed how many advertisers worked inside the platform.

Channel reporting, full search terms, asset-level insights, customer acquisition visibility, and segmentation options let PPC managers understand where performance originates. Negative keyword lists, device targeting, demographic controls, and expanded search themes finally gave advertisers the ability to tighten or expand performance intentionally rather than reactively.

For many teams, this was the year PMax felt less like a ‘take-it-or-leave-it’ automation tool and more like a high-powered campaign framework that needs guidance rather than blind trust.

Creativity Became a Central Focus

One theme that Google emphasized more strongly this year was creative quality and workflow efficiency. With Asset Studio and Nano Banana Pro, Google is signaling that creative is no longer a side component of performance. It is a core lever.

Asset Studio

The new in-platform creative workspace lets advertisers generate, edit, and review creative directly inside Google Ads. Nano Banana Pro now supports:

- Natural language editing

- Seasonal variations

- Photorealistic product scenes

- Multi-product compositions

- Bulk image generation

- Shareable assets for team review

For lean teams that struggle to produce enough visual variation for PMax, Demand Gen, or YouTube, this removes a major bottleneck. The quality still varies depending on brand style, texture, or lighting, but Google is clearly positioning AI-assisted creative as a foundational element in campaign setup.

Ad Preview and Workflow Support

Updated previews show ads across channels without guesswork, and shareable previews remove a lot of friction with internal stakeholders. This is one of Google’s more underrated releases because it directly solves a common workflow challenge: aligning creative teams and media teams without lengthy back-and-forth.

Google also introduced Ads Advisor, a guided AI assistant for campaign building and troubleshooting, which can reduce the operational burden for teams that manage multiple accounts or run frequent experiments.

Why the iOS Measurement Updates Are More Important Than They Look

Buried within Google's 2025 recap was an update that most marketers will skim past, but that app-focused advertisers immediately recognized as one of the most meaningful improvements of the year.

Google expanded Web-to-App acquisition measurement for iOS, allowing advertisers to track when a user moves from a web campaign into an app install that ultimately leads to a valuable in-app action.

On the surface, this reads like a small reporting enhancement. In practice, it solves one of the most frustrating gaps in iOS app advertising since ATT went live in 2021.

For most advertisers who run traditional lead-gen or ecommerce campaigns, this update will feel distant. But for app marketers, it finally closes the loop on a user journey that used to look fragmented, inconsistent, or completely invisible.

Here’s what makes it so important:

- It brings back visibility that app advertisers lost years ago. After Apple's App Tracking Transparency rollout, many advertisers lost the ability to see how web campaigns influenced app installs. As a result, the app growth driven by paid Search, Shopping, and even PMax was often undervalued, because installs and in-app actions weren't attributed correctly. Google's new iOS Web-to-App measurement begins restoring that path, which helps campaigns receive credit for app outcomes that were previously impossible to attribute.

- It allows advertisers to optimize for higher-value actions, not just installs. Before this update, the disconnect between web traffic and app conversions often pushed advertisers toward shallow optimization goals. Now, Google can tie in-app action quality back to upstream campaigns. For app marketers, that means smarter bidding. For finance teams, it means cleaner forecasting.

- It makes cross-surface strategy practical again. Many app brands advertise across Search, YouTube, Shopping, and PMax but had to treat those touchpoints separately. This update reopens the door to a unified approach, where creative, bidding strategies, and budgets can align with actual user behavior instead of being fragmented by platform limitations.

App-focused teams have been navigating blind spots for years. They know how often web traffic influences app installs. They've seen how many high-value users start on mobile web before downloading. Without visibility, they've had to rely on directional data, blended reporting, or costly workarounds through mobile measurement partners (MMPs).

This update doesn’t solve every attribution limitation on iOS, but it does give app advertisers something they’ve wanted since ATT: a path to understanding the real value of web-driven app conversions.

It creates a more complete and realistic measurement loop, which is exactly what Google needs if it wants advertisers to invest confidently in App campaigns across Search, YouTube, Demand Gen, and Performance Max in 2026.

Where There’s Room for Improvement

A year-in-review should not only highlight progress but also acknowledge where advertisers still experience friction. My goal here is objective critique without negativity.

AI Overviews need clearer consistency

Advertisers still struggle to predict when AI Overviews will appear and how often ads surface within them. Before this becomes a must-have surface, Google needs more stability and clearer guidelines.

Creative control in AI Max is not fully predictable

Google is expanding customization settings, but advertisers still see unexpected rewrites or over-simplifications. More transparency around why AI chooses certain variations would help creative teams align expectations.

Asset Studio output varies by category

While the new tools are fast and flexible, certain product types still generate inconsistent or overly stylized visuals. This will improve, but brands that rely on strict visual identity may need hybrid workflows for now.

Measurement unification is still a challenge

Meridian is promising, but advertisers want easier alignment between Google’s lift results and those from Meta, Amazon, or independent MMM tools. The industry needs consistency, not isolated attribution logic.

These gaps do not diminish the significance of Google’s updates, but they remind us that AI-led advertising is still developing and requires both experimentation and skepticism.

Wrapping Up the Year

Google’s 2025 recap showed a platform that is evolving quickly but maturing steadily. Automation is no longer something advertisers fear or resist. The conversation has shifted to how PPC teams can direct these systems with clearer insight, smarter testing, and more intentional creative work.

If 2025 was about unlocking visibility and control, 2026 will be about applying those tools with discipline. Marketers who lean into experimentation, creative differentiation, and strong data foundations will be the ones who stay ahead as Google's ad ecosystem continues to change.

What was your biggest takeaway from Google’s updates this year?