Why Do Web Standards Matter? Google Explains SEO Benefits via @sejournal, @MattGSouthern

Google Search Relations team members recently shared insights about web standards on the Search Off the Record podcast.

Martin Splitt and Gary Illyes explained how these standards are created and why they matter for SEO. Their conversation reveals details about Google’s decisions that affect how we optimize websites.

Why Some Web Protocols Become Standards While Others Don’t

Google has formally standardized robots.txt through the Internet Engineering Task Force (IETF), where it was published as RFC 9309. However, Google left the sitemap protocol as an informal standard.

This difference illustrates how Google determines which protocols require official standards.

Illyes explained during the podcast:

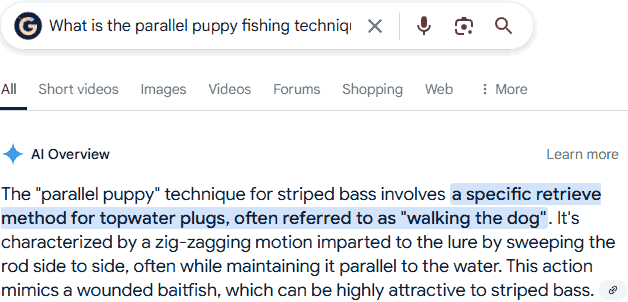

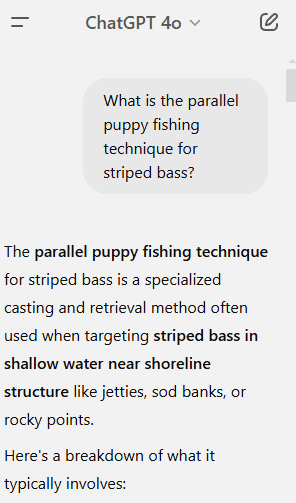

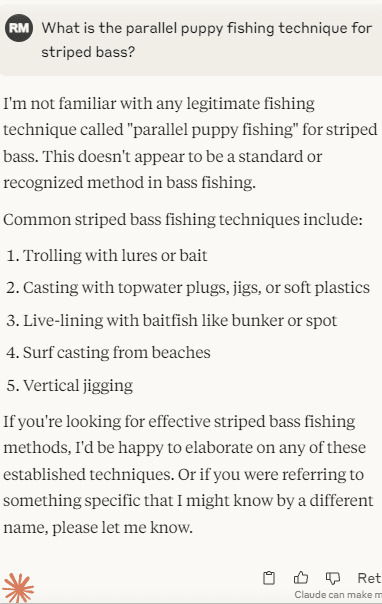

“With robots.txt, there was a benefit because we knew that different parsers tend to parse robots.txt files differently… With sitemap, it’s like ‘eh’… it’s a simple XML file, and there’s not that much that can go wrong with it.”

This statement from Illyes reveals Google's priorities: protocols that different parsers interpret inconsistently receive more attention than those that work well without a formal standard.
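Illyes's point that parsers read robots.txt differently is easy to reproduce. The Python sketch below assumes a hypothetical robots.txt with a broad `Disallow` followed by a narrower `Allow`. Python's standard-library `urllib.robotparser` applies the first rule that matches, in file order, while RFC 9309 says the most specific (longest) matching rule wins. The `longest_match_allowed` helper and the `ExampleBot` user agent are hypothetical illustrations, not Google's parser: the helper ignores user-agent groups and the `*` and `$` wildcards.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: a broad Disallow followed by a narrower Allow.
ROBOTS_TXT = """\
User-agent: *
Disallow: /shop/
Allow: /shop/public/
"""


def first_match_allowed(robots_txt: str, agent: str, path: str) -> bool:
    """Python's stdlib parser: the first rule that matches, in file order, wins."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, path)


def longest_match_allowed(robots_txt: str, path: str) -> bool:
    """Simplified RFC 9309-style check: the longest matching rule wins and
    Allow wins a tie. Ignores user-agent groups and the * and $ wildcards."""
    best_len, allowed = -1, True  # no matching rule means the path is allowed
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = line.split(":", 1)
        field, value = field.strip().lower(), value.strip()
        if field not in ("allow", "disallow") or not value:
            continue  # skip User-agent lines and empty rules
        if path.startswith(value):
            is_allow = field == "allow"
            if len(value) > best_len or (len(value) == best_len and is_allow):
                best_len, allowed = len(value), is_allow
    return allowed


for path in ("/shop/public/item", "/shop/cart", "/about"):
    print(path,
          first_match_allowed(ROBOTS_TXT, "ExampleBot", path),
          longest_match_allowed(ROBOTS_TXT, path))
```

For `/shop/public/item`, the first-match parser reports the path as blocked, while the longest-match check allows it. The same file, read by two tools, gives two answers, which is the kind of inconsistency a formal standard is meant to remove.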

The Benefits of Protocol Standardization for SEO

The standardization of robots.txt created several clear benefits for SEO:

- Consistent implementation: Robots.txt files are now interpreted more consistently across search engines and crawlers.

- Open-source resources: “It allowed us to open source our robots.txt parser and then people start building on it,” Illyes noted.

- Easier to use: According to Illyes, standardization means “there’s less strain on site owners trying to figure out how to write the damned files.”

These benefits make technical SEO work more straightforward and more effective, especially for teams managing large websites.

Inside the Web Standards Process

The podcast also revealed how web standards are created.

Standards groups, such as the IETF, W3C, and WHATWG, work through open processes that often take years to complete. This slow pace helps ensure security, clear language, and broad compatibility.

Illyes explained:

“You have to show that the thing you are working on actually works. There’s tons of iteration going on and it makes the process very slow—but for a good reason.”

Both Google engineers emphasized that anyone can participate in these standards processes. This creates opportunities for SEO professionals to help shape the protocols they use every day.

Security Considerations in Web Standards

Standards also address important security concerns. When developing the robots.txt standard, Google included a 500-kibibyte (KiB) parsing limit specifically to prevent potential attacks.

Illyes explained:

“When I’m reading a draft, I would look at how I would exploit stuff that the standard is describing.”

This demonstrates how standards establish security boundaries that safeguard both websites and the tools that interact with them.
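A size cap like this is simple to enforce on the crawler side. RFC 9309 asks crawlers to impose a parsing limit of at least 500 KiB. The minimal Python sketch below assumes a crawler that has already downloaded the robots.txt body as bytes and keeps only the first 500 KiB. `truncate_for_parsing` and `PARSE_LIMIT_BYTES` are hypothetical names, and dropping a line that was cut off at the limit is an assumption of this sketch, not something the standard or the podcast specifies.

```python
# Hypothetical cap mirroring the 500 KiB figure; 1 KiB = 1,024 bytes.
PARSE_LIMIT_BYTES = 500 * 1024


def truncate_for_parsing(body: bytes, limit: int = PARSE_LIMIT_BYTES) -> str:
    """Return at most `limit` bytes of a robots.txt body as text.

    Content past the limit is ignored. As an assumption of this sketch, a
    line cut off at the limit is dropped so a partial rule is never parsed.
    """
    if len(body) <= limit:
        return body.decode("utf-8", errors="replace")
    head = body[:limit]
    last_newline = head.rfind(b"\n")
    # Keep everything up to and including the last complete line.
    head = head[: last_newline + 1] if last_newline != -1 else b""
    return head.decode("utf-8", errors="replace")


# Usage: an oversized, hypothetical robots.txt is cut down before parsing.
oversized = b"User-agent: *\n" + b"Disallow: /private/\n" * 40_000
text = truncate_for_parsing(oversized)
print(len(oversized), len(text.encode("utf-8")))
```

Capping input before parsing bounds the memory and CPU a single file can consume, which is the attack surface a limit like this is meant to close.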

Why This Matters

For SEO professionals, these insights indicate several practical strategies to consider:

- Be precise when creating robots.txt directives, since Google has invested heavily in this protocol.

- Use Google’s open-source robots.txt parser to check your work.

- Know that sitemaps offer more flexibility with fewer parsing concerns.

- Consider joining web standards groups if you want to help shape future protocols.
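Illyes's remark that a sitemap is "a simple XML file" is easy to see in practice. The Python sketch below (Python 3.8+ for `xml_declaration`) builds a minimal sitemap using the sitemaps.org namespace with `<urlset>`, `<url>`, `<loc>`, and optional `<lastmod>` elements. The `build_sitemap` helper and the example.com URLs are hypothetical placeholders; a production sitemap would also need to respect the protocol's size and URL-count limits.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build a minimal sitemap from (loc, lastmod) pairs; lastmod may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes & and <
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod  # e.g. YYYY-MM-DD
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


sitemap = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/products?page=2&sort=new", None),
])
print(sitemap.decode("utf-8"))
```

Letting the XML library handle escaping (for example, the `&` in the second URL) removes one of the few things that can realistically go wrong with a sitemap.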

As search engines continue to prioritize technical quality, understanding the underlying principles behind web protocols becomes increasingly valuable for achieving SEO success.

This conversation shows that even simple technical specifications involve complex considerations around security, consistency, and ease of use, all factors that directly impact SEO performance.
