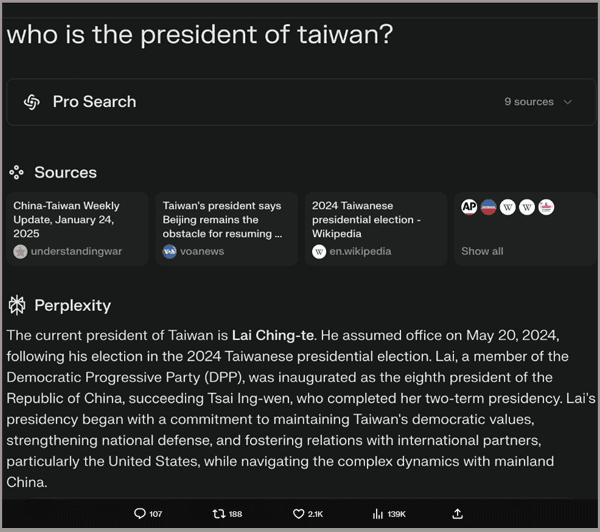

DeepSeek Terms Make Users Liable for Company’s Travel Expenses via @sejournal, @martinibuster

DeepSeek’s terms of use contain requirements that may make users reconsider using the app, as they could shift the balance between benefits and perceived risks by imposing significant financial obligations. One such requirement makes users liable for travel and litigation expenses if they violate the terms and the violation results in legal action.

Terms Of Use

Nobody reads the terms of use, and sometimes businesses have fun with that by burying Easter eggs in the terms to see how long it takes before someone notices. For example, Amazon used to have an acceptable use policy for a game engine it distributed that said one of its restrictions would not apply in the event of an actual zombie apocalypse.

Here’s an excerpt from an archived Amazon TOS Easter egg:

“However, this restriction will not apply in the event of the occurrence (certified by the United States Centers for Disease Control or successor body) of a widespread viral infection transmitted via bites or contact with bodily fluids that causes human corpses to reanimate and seek to consume living human flesh, blood, brain or nerve tissue and is likely to result in the fall of organized civilization.”

But the terms of use published by DeepSeek in section 7.2 are no joke, and users of the service and app should consider reading them.

Users Liable For DeepSeek Travel Expenses

The section has three parts that are fairly standard.

The first part establishes their right to “independently” make decisions about moderating the use of their services including taking “measures against you.” Again, this is fairly standard.

“In response to your violation of these Terms or other service terms, DeepSeek reserves the right to independently judge and take measures against you, including issuing warnings, setting deadlines for correction, restricting account functions, suspending usage, closing accounts, prohibiting re-registration, deleting relevant content, etc., without the need for prior notification. We have the right to announce the results of the actions taken and, based on the actual circumstances, decide whether to restore usage.”

The second part affirms their right to keep records of activities that may violate laws or regulations and turn them over to the “competent authorities.”

“For behaviors suspected of violating laws and regulations or involving illegal activities, relevant records will be retained, and reports will be made to the competent authorities in accordance with the law, cooperating with their investigations.”

The third part shifts a load of legal liability onto users, including travel expenses, the costs of collecting evidence, and fines.

It reads:

“You shall be solely responsible for any legal liabilities, claims, demands, or losses asserted by third parties resulting therefrom, and you shall compensate us for any losses incurred, including litigation fees, arbitration fees, attorney fees, notary fees, announcement fees, appraisal fees, travel expenses, investigation and evidence collection fees, compensation, liquidated damages, settlement costs, and administrative fines incurred in protecting our rights.”

DeepSeek Terms Do Not Override Consumer Legal Protections

A key point about DeepSeek’s terms of use is that one section says a consumer’s statutory rights cannot be changed or taken away by agreeing to the terms. In other words, laws that protect consumers still apply regardless of what the terms say.

DeepSeek’s terms affirm those legal rights:

“Nothing in these terms shall affect any statutory rights that you cannot contractually agree to alter or waive and are legally always entitled to as a consumer.”

Should You Delete The DeepSeek App?

I was recently messaging with friends who are part of the digital marketing industry, and they mentioned that they had downloaded the DeepSeek app because staying aware of the latest technologies is part of their business. I showed them the above terms of use, and one of my friends commented that this specific section went far beyond what they were comfortable with. Another friend in that conversation decided to delete the app immediately.

Terms of use are fairly comprehensive in what they cover, and it’s not unusual for companies to use them to shield themselves from legal consequences. However, because the company is based in China, where information control, censorship, and data transparency issues are well-documented, some may be more cautious, while others may see the benefits as outweighing any perceived risks.

Read the DeepSeek terms of use here.