We’ve all heard the phrase, “The customer’s always right.” It’s a common mantra in customer service and hospitality – and, I’d argue, should carry over into the world of SEO as well!

After all, we SEO pros are in the business of not only pleasing Google but also appealing to the needs and interests of prospective consumers.

Customers do the searching, navigate your website, and ultimately decide whether your brand is worth buying from.

While many SEO tactics focus on rankings and clicks, customer-centric SEO treats the on-site user experience as the top priority.

So, if you’re looking to enhance customer experiences and build brand loyalty, consider these customer-first SEO strategies.

What Is Customer-Centric SEO?

Customer-centric SEO prioritizes the user’s online experience, from how easily they can navigate your website to how well your content meets their needs and interests.

Instead of implementing SEO improvements for the sake of performance metrics or rankings, customer-centric SEO combines optimization best practices with a deeper, purposeful understanding of user behavior and customer goals.

The importance of user experience (UX) in SEO has grown, in no small part, due to the rollout of the Page Experience report in Google Search Console (April 2021, now deprecated) and Google’s Page Experience Update in May 2021.

SEO Improvements To Enhance Customer Experience And Behavior

Designing a great website UX and providing valuable content are two important aspects of customer-centric SEO, but many other factors can affect user behavior, trust, and brand loyalty.

Here are a few ways to enhance the user experience, increase conversions, and build a loyal customer base.

1. Make Information Easy To Find With User-Friendly Navigation

One of the best ways to facilitate a friendly user experience is through simple, organized site navigation.

A website’s structure should not be convoluted; rather, it should make it easy for users to find information, navigate to related pages, learn about your products or services, and proceed with their inquiry or purchase.

An example of poor site navigation might include a cluttered menu with too many drop-down options, broken links resulting in 404 pages, a lack of clear categories or topical hierarchy, or the absence of a search bar to assist users in finding specific information.

On the other hand, user-friendly site navigation will have an intuitive menu with clear page categories (e.g., Services, Products, About Us), breadcrumbs that indicate where users are within the site, and a search function that helps people find results quickly.

It will also maintain a shallow page depth, keeping the number of clicks required for users to access important pages at a minimum.
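To put a number on page depth, you can treat your site as a graph of internal links and run a breadth-first search from the homepage: the first time the search reaches a page is the fewest clicks it takes to get there. Below is a minimal Python sketch, assuming you already have the internal link graph (for example, exported from a site crawler). The `site` dictionary is hypothetical, and the three-click cutoff is a common rule of thumb, not a Google requirement.

```python
from __future__ import annotations

from collections import deque


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Breadth-first search from the homepage; depth = minimum clicks to reach a page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def too_deep(depths: dict[str, int], max_clicks: int = 3) -> list[str]:
    """Pages that need more than max_clicks clicks (assumed threshold)."""
    return sorted(page for page, depth in depths.items() if depth > max_clicks)


# Hypothetical internal link graph: page -> pages it links to
site = {
    "/": ["/services", "/products", "/about"],
    "/products": ["/products/widgets"],
    "/products/widgets": ["/products/widgets/blue"],
    "/products/widgets/blue": ["/products/widgets/blue/specs"],
}
depths = click_depths(site)
print(too_deep(depths))  # ['/products/widgets/blue/specs']
```

Pages that never appear in the result aren't reachable from the homepage at all (orphan pages), which is worth flagging in the same audit.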

Optimized site navigation will not only help enable faster search engine crawling and indexation, but also reduce friction in website visitors’ path to purchase.

2. Reduce Visual Bloat And Page Speed Issues

It’s no secret that page speed is an important factor in user experience and search engine rankings.

Today’s consumers expect a fast and easy-to-use website, and search engines tend to prioritize sites that have a fast loading speed.

Google’s PageSpeed Insights is the go-to tool for assessing a website’s loading speed and experience across desktop and mobile devices.

A low score (anything below 60/100) indicates slow loading times, which can deter prospective customers.
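If you need to check more than a handful of pages, the PageSpeed Insights API (v5) returns the same Lighthouse data as the web tool. The sketch below builds a request URL and converts the performance score, which Lighthouse reports on a 0 to 1 scale, to the 0 to 100 number shown in the report. The 60-point cutoff in the hypothetical `is_slow` helper mirrors the rule of thumb above and is an assumption; PageSpeed Insights itself labels 90 to 100 as good, 50 to 89 as needing improvement, and below 50 as poor. The live request in `fetch_psi` is not executed here, and frequent automated use will likely require an API key (`key` parameter).

```python
import json
import urllib.parse
import urllib.request

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def build_psi_url(page_url: str, strategy: str = "mobile") -> str:
    """Build a PageSpeed Insights v5 request URL for one page and device strategy."""
    query = urllib.parse.urlencode({"url": page_url, "strategy": strategy})
    return f"{PSI_ENDPOINT}?{query}"


def fetch_psi(page_url: str, strategy: str = "mobile") -> dict:
    """Call the API (requires network access; not run in this sketch)."""
    with urllib.request.urlopen(build_psi_url(page_url, strategy), timeout=120) as resp:
        return json.load(resp)


def performance_score(psi_response: dict) -> int:
    """Convert Lighthouse's 0-1 performance score to the 0-100 scale shown in the report."""
    score = psi_response["lighthouseResult"]["categories"]["performance"]["score"]
    return round(score * 100)


def is_slow(score: int, threshold: int = 60) -> bool:
    """Assumed cutoff from the rule of thumb in the text, not an official PSI band."""
    return score < threshold


# Live usage (commented out): data = fetch_psi("https://example.com/")
sample = {"lighthouseResult": {"categories": {"performance": {"score": 0.42}}}}
print(performance_score(sample), is_slow(performance_score(sample)))  # 42 True
```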

Some factors that may contribute to slow loading include unoptimized code (bloated CSS, unnecessary JavaScript, etc.), visual bloat from large image and video files, a slow hosting server, and render-blocking scripts or stylesheets that delay the loading of your main page content.

That said, there are many more elements that may be hindering your site’s speed and rendering, so be sure to assess your specific site and review the items outlined in the PageSpeed Insights report.
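For a quick first pass before (not instead of) the PageSpeed Insights report, you can scan a page’s `<head>` for resources that typically block rendering. This is a rough static heuristic, not how Lighthouse actually audits pages (Lighthouse measures real loading behavior). It assumes two simple rules: a classic `<script src>` in the head without `async` or `defer` blocks parsing (module scripts are deferred by default), and a stylesheet link blocks rendering unless it is scoped to `media="print"`. The `RenderBlockingFinder` class and the sample page are hypothetical.

```python
from html.parser import HTMLParser


class RenderBlockingFinder(HTMLParser):
    """Rough heuristic, not Lighthouse's audit. Flags, inside <head>:
    - classic <script src> without async/defer (type="module" is deferred by default)
    - <link rel="stylesheet"> unless media="print"
    """

    def __init__(self) -> None:
        super().__init__()
        self.in_head = False
        self.blocking: list[str] = []

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)  # boolean attributes such as async map to None
        if tag == "head":
            self.in_head = True
            return
        if tag == "body":  # covers pages that omit </head>
            self.in_head = False
            return
        if not self.in_head:
            return
        if tag == "script" and "src" in a:
            if "async" not in a and "defer" not in a and a.get("type") != "module":
                self.blocking.append(a["src"])
        elif tag == "link" and "stylesheet" in (a.get("rel") or "").lower().split():
            if (a.get("media") or "all").lower() != "print":
                self.blocking.append(a.get("href") or "")

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


page = """<html><head>
<link rel="stylesheet" href="/main.css">
<link rel="stylesheet" href="/print.css" media="print">
<script src="/analytics.js" async></script>
<script src="/slider.js"></script>
</head><body><p>Hello</p></body></html>"""

finder = RenderBlockingFinder()
finder.feed(page)
finder.close()
print(finder.blocking)  # ['/main.css', '/slider.js']
```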

One page speed report found that nearly 70% of online shoppers say loading time factors into their purchase decisions.

Customer-centric SEO prioritizes loading speed as a matter of user experience, knowing that a slow website can be a huge deterrent to new site visitors.

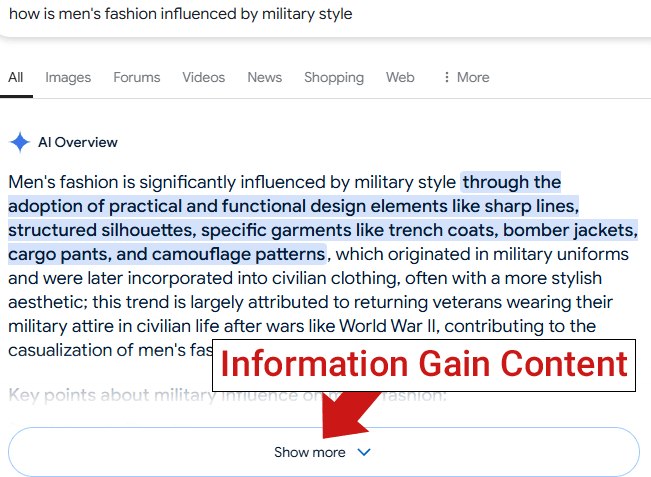

3. Audit Content For Timeliness And Relevance

In the SEO space, there’s a strong draw toward evergreen content due to its promise of long-running organic traffic.

While evergreen content has its place, it sometimes does a disservice to new website visitors if it is not regularly updated and maintained.

For example, a previously published “Social Media Marketing Best Practices” page might have included relevant statistics from 2023, but new visitors are likely looking for more timely information, up-to-date examples, and current sources.

Thus, auditing and updating existing content should be an ongoing practice in customer-centric SEO. Regular audits let you identify outdated information, link to more recent sources, add new information where relevant, and provide more value to prospective customers.

Here are a few things to look for when refreshing old content:

- Is the information still accurate and relevant to today’s audience?

- Are there outdated statistics, articles, or references that need updating?

- Do all internal and external links still work? Are they pointing to the best sources?

- Has search intent changed? Does the content still align with user expectations?

- Are there new insights, case studies, or examples that could enhance the content?

- Are there opportunities to improve formatting and readability (e.g., adding images, videos, or bullet points)?

- Does the content reflect the latest industry guidelines and/or technology updates?

- Are there opportunities to expand or consolidate content for better clarity?

- Is the metadata still optimized to drive clicks in the search results?
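The link question in this checklist is the easiest one to automate. This is a minimal sketch using only Python’s standard library, assuming you have already collected a page’s links (for example, from a crawl). The hypothetical `http_status` helper sends a HEAD request; some servers reject HEAD, so a GET fallback may be needed in practice. The status lookup is passed in as a parameter so the check can be tested offline with a fake table, as in the demo.

```python
import urllib.error
import urllib.request
from typing import Callable, Dict, Iterable


def http_status(url: str, timeout: float = 10.0) -> int:
    """Final HTTP status after redirects; 0 if the host can't be reached."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:  # 4xx/5xx responses raise HTTPError
        return err.code
    except OSError:  # URLError (DNS failure, refused connection) and timeouts
        return 0


def find_broken_links(
    urls: Iterable[str], get_status: Callable[[str], int] = http_status
) -> Dict[str, int]:
    """Map each unreachable (0) or 4xx/5xx URL to its status code."""
    broken = {}
    for url in urls:
        status = get_status(url)
        if status == 0 or status >= 400:
            broken[url] = status
    return broken


# Offline demo: a hypothetical status table stands in for live requests
fake_statuses = {
    "https://example.com/pricing": 200,
    "https://example.com/old-promo": 404,
    "https://partner.example.org/report": 503,
}
print(find_broken_links(fake_statuses, fake_statuses.__getitem__))
```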

I highly recommend running through these questions as a checklist at least once a quarter.
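To decide where a quarterly review should start, you can sort pages by when they were last updated. A minimal sketch, assuming you can export last-modified dates (for example, from your CMS or sitemap); the `inventory` data is hypothetical, and 90 days approximates one quarter. A date only tells you which pages to review first, not whether their content is actually outdated, so the checklist still applies.

```python
from datetime import date, timedelta


def stale_pages(last_updated: dict, today: date, max_age_days: int = 90) -> list:
    """Return URLs not updated within max_age_days (about one quarter), oldest first."""
    cutoff = today - timedelta(days=max_age_days)
    overdue = [(updated, url) for url, updated in last_updated.items() if updated < cutoff]
    return [url for updated, url in sorted(overdue)]


# Hypothetical inventory: URL -> last-modified date
inventory = {
    "/blog/social-media-marketing-best-practices": date(2023, 3, 1),
    "/blog/page-speed-guide": date(2024, 11, 20),
    "/services": date(2024, 6, 15),
}
print(stale_pages(inventory, today=date(2025, 1, 15)))
# ['/blog/social-media-marketing-best-practices', '/services']
```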

Not only will this help you catch missed SEO opportunities (in terms of content length, metadata updates, etc.), but it will also ensure that your content stays relevant and valuable to incoming visitors.

4. Implement A Mobile-First Website Design

In 2024, mobile devices generated the majority (64.54%) of all global website traffic.

With so many users searching for information via mobile devices (smartphones, tablets, etc.), having a mobile-friendly website is absolutely essential.

In addition to ensuring a fast load speed, your website should be responsive (load correctly, be easy to navigate, etc.) on all types of devices.

Best practices for mobile-friendliness include avoiding intrusive pop-ups and display ads, formatting the mobile version of your site to adapt to various screen sizes, and using large, easily “clickable” buttons so users can navigate your site.

One significant component that often changes between a site’s desktop and mobile versions is the navigation.

On the mobile version of your site, the menu bar may be larger, collapse into a compact hamburger menu, and use larger text. This makes it easier for users to tap menu items, even on a small screen.

Mobile-friendliness is not a “nice to have.” In this fast-paced world, users expect a positive experience no matter where they search. This makes mobile optimization an essential aspect of customer-centric SEO.

5. Put Trust Factors At The Forefront

In keeping with the theme of making information easy to find, your site’s case studies, reviews, and testimonials should also be at the forefront.

Avoid burying this important info – what I call “trust factors” – deep in your website (as many sites do, at the bottom of a webpage).

Instead, embed testimonials strategically in your content. Accompany these testimonials with product or service examples, customer names, and even videos to add to the visual appeal.

Build out robust case studies that explain how your products/services work and the benefits they’ve provided to your customers.

By making this information highly visible, you’ll build trust with prospective customers more quickly, eliminating the need for them to search through your site to find proof of your credibility.

6. Make Content Skimmable And Well-Organized

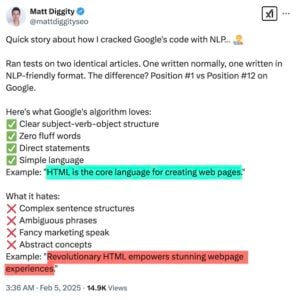

When it comes to webpage and blog post content, customer-centric SEO makes the body content easier to scan and digest.

This means adding strategic headings that establish a hierarchy between sections and adding bulleted or numbered lists to better organize the text.

Few people want to read a wall of text that reads more like an essay than an informative guide.

Formatting gives readers “breathing room” as they skim the information, contemplate the value provided, and search for references within your content.

Ideally, your content should be formatted in such a way that readers can:

- Immediately know what the content is about (i.e., a clear title).

- Easily scan the most important sections, clearly labeled with specific headers.

- Readily find sources cited and internal links to related content.
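To check the structural side of skimmability, you can pull a page’s heading tags and look for two common problems: more or fewer than one `<h1>`, and jumps that skip a level (an `<h2>` followed directly by an `<h4>`). This is a minimal sketch using Python’s built-in `html.parser`; the helper names are hypothetical, and the single-`<h1>` rule is a widely used convention for a clear hierarchy rather than a ranking requirement.

```python
from html.parser import HTMLParser

HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class HeadingOutline(HTMLParser):
    """Record heading levels (1-6) in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.levels: list = []

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_TAGS:
            self.levels.append(int(tag[1]))


def outline_issues(html_text: str) -> list:
    parser = HeadingOutline()
    parser.feed(html_text)
    parser.close()
    issues = []
    h1_count = parser.levels.count(1)
    if h1_count != 1:
        issues.append(f"expected one h1, found {h1_count}")
    for prev, cur in zip(parser.levels, parser.levels[1:]):
        if cur > prev + 1:  # going deeper by more than one level skips a heading
            issues.append(f"skipped level: h{prev} -> h{cur}")
    return issues


post = "<h1>Guide</h1><h2>Step 1</h2><h4>Details</h4><h2>Step 2</h2>"
print(outline_issues(post))  # ['skipped level: h2 -> h4']
```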

7. CRO Your CTAs

Calls-to-action (CTAs) are often a driving force in getting users to take action on your site – whether that’s calling your business, signing up for your newsletter, or making a purchase.

With customer-centric SEO, you make sure these CTAs are prominent, concise, and easy to act on.

First, there’s the design aspect of your CTAs. Are they visually appealing? Is the color contrast such that users can easily find them? Do they stand out from other parts of your content?
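For the color-contrast question, the Web Content Accessibility Guidelines (WCAG 2.x) give a concrete formula: compute each color’s relative luminance, then take (L1 + 0.05) / (L2 + 0.05) with the lighter color as L1. Level AA asks for at least 4.5:1 for normal-size text and 3:1 for large text. A minimal Python sketch, assuming your CTA colors are six-digit hex codes; the function names and sample colors are just for illustration.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light, per the WCAG 2.x definition."""
    s = channel / 255
    return s / 12.92 if s <= 0.03928 else ((s + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(color_a: str, color_b: str) -> float:
    lighter, darker = sorted(
        (relative_luminance(color_a), relative_luminance(color_b)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(text_color: str, background: str, large_text: bool = False) -> bool:
    """WCAG AA: 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(text_color, background) >= (3.0 if large_text else 4.5)


print(round(contrast_ratio("#777777", "#FFFFFF"), 2))  # 4.48: just misses AA for normal text
```

Mid-gray text on a white button is a common near miss: `#777777` falls just short of 4.5:1, while the slightly darker `#767676` clears it.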

Then, there’s the text itself. Is the CTA clear in what action you want users to take? Does the text imply where the user will be directed next? Does it use action verbs to compel clicks?

Some examples of poor CTAs can include:

- “See here.”

- “More.”

- “Read.”

However, some more compelling CTAs may include:

- “Browse products.”

- “Book a free demo.”

- “Schedule a consultation.”

- “Read related articles.”

Both the visual design and the text are important in appealing to users and driving the desired action.

8. Break Up Text With Media Elements And Eye-Catching Graphics

Add intrigue to your content by breaking up walls of text with custom graphics, videos, downloadables, GIFs, and other types of media.

Many studies have pointed to the value of images in content, indicating that engagement rates improve when high-quality visuals are used.

Media adds to the visual appeal of your content, and it can bring SEO benefits as well.

For instance, pre-recorded videos embedded on your website and uploaded to YouTube can drive additional traffic through search engines, YouTube’s own search, and other discovery channels.

There’s an accessibility component as well.

Media optimized with descriptive alt text is more accessible to screen readers, the assistive software that visually impaired people use to understand content on the web. This is both an SEO best practice and a user experience best practice.
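To find images that screen readers can’t describe, you can scan a page’s HTML for `<img>` tags with no `alt` attribute at all. A minimal sketch with Python’s standard `html.parser`; the `MissingAltFinder` class and sample markup are hypothetical. It deliberately allows an empty `alt=""`, the accepted way to mark purely decorative images so screen readers skip them. It can’t judge whether existing alt text is actually descriptive, which still takes a human review.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collect the src of every <img> that has no alt attribute.

    alt="" is treated as intentional (decorative image), so it is not flagged.
    Self-closing <img ... /> tags also reach handle_starttag through the
    default handle_startendtag.
    """

    def __init__(self) -> None:
        super().__init__()
        self.missing: list = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            if "alt" not in attributes:
                self.missing.append(attributes.get("src") or "(no src)")


snippet = (
    '<img src="/hero.jpg" alt="Technician installing a heat pump">'
    '<img src="/divider.png" alt="">'
    '<img src="/pricing-chart.png">'
    '<img src="/logo.svg"/>'
)
finder = MissingAltFinder()
finder.feed(snippet)
finder.close()
print(finder.missing)  # ['/pricing-chart.png', '/logo.svg']
```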

A Confident Future In Customer-Centric SEO

Chances are, many of the SEO best practices you’ve been implementing to date were designed with user experience in mind.

Search engines have a long history of considering the user experience, from algorithm changes to emerging technologies in analytics tools.

But there are up-and-coming practices as well – ones that SEO pros would be remiss to neglect in forward-looking strategies.

Customer-centric SEO is a philosophy that puts the customer first.

Rather than optimizing for optimization’s sake, it involves purposeful changes that make a user’s experience more enjoyable.

This can bring tangible benefits for the brand – in terms of traffic and sales – and meaningful benefits to the user, in terms of ease of use, accessibility, and trust.

Featured Image: PeopleImages.com – Yuri A/Shutterstock