The 4 Principles Of Effective Retail Marketing via @sejournal, @jasonhennessey

From window displays and newspaper ads to sidewalk sandwich boards and pop-up events, there are many ways to market a retail store.

Whether your goal is to draw in casual passersby or increase online sales, having a well-planned (and well-executed) marketing strategy is key to wooing more customers.

But before you get fancy with flashy ads or influencer partnerships, it’s best to start with the fundamentals. That’s what makes this guide essential reading for any savvy retail business owner.

Master the four pillars of retail marketing – often referred to as “the 4 Ps” – and you’re well on your way to having an iron-clad marketing plan.

What Is Retail Marketing?

Retail marketing refers to the various activities, whether in-store, locally, or online, that are used to attract customers to a retail business.

While the exact tactics may vary, retail marketing at its core is about establishing a brand identity, promoting your products, and engaging with potential customers (often across multiple channels).

Marketing as a whole has changed over the years, evolving from traditional media (print ads, flyers, in-person networking, etc.) to more technologically advanced methods (social media, online ads, email marketing, etc.), but the fundamentals have remained mostly the same.

That said, it’s important to know that retail marketing differs from other types of marketing.

How Retail Marketing Differs From Other Types Of Marketing

Like all types of marketing, retail marketing is about connecting the product or service with the consumer. But retail marketing is different from other types of marketing – like business-to-business (B2B) marketing or service marketing – in a few distinct ways:

- Customer Needs: Retail marketing focuses on individual consumers (B2C), whereas B2B marketing targets other businesses. Retail customers are typically driven by personal needs, while B2B decisions are often based on business requirements, return on investment (ROI), and long-term objectives.

- Sales Cycle: Retail marketing usually involves a shorter sales cycle, with consumers making relatively faster purchasing decisions than B2B buyers.

- Tangible Products: Retail marketing primarily deals with tangible products that consumers can see and touch, whereas other types of marketing (B2B or service) often deal with intangible offerings like consulting or software.

- Physical Presence: Retail marketing often (but not always) involves a physical presence, usually via a brick-and-mortar store. Digital marketing, while it can support retail efforts, primarily operates online using tools like social media and email to reach customers.

Retail marketing is different from other types of marketing in its focus on the close interaction between the business and the consumer at the point of sale.

Many retail business owners understand that the success of their marketing efforts often comes down to face-to-face interactions and personalized experiences.

What Are The 4 Principles Of Retail Marketing?

When it comes to something as broad as “marketing,” simplicity is key. The essential elements of retail marketing revolve around four primary pillars:

- Product.

- Price.

- Place.

- Promotion.

We’ll refer to these as “the 4 Ps” throughout this article. Some versions of the framework extend the list to include “Presentation” and “Personnel,” but for our purposes, we’ll stick to the primary four.

1. Product: What You Sell

The first pillar, product, pertains to the actual item or service you offer customers. This might involve a single category of products (e.g., novelty candles) or, more often, a variety of products (e.g., candles, home decor, and furniture) offered by your brand.

Before you market your products, you need to understand them. This means understanding not only their physical attributes and design but also the value they provide to customers, including material quality, branding, and even post-sale support resources.

Your product (again, it can pertain to a category of products) should speak to the needs, challenges, or interests of your prospective customers. You must fundamentally understand what it is that you sell and how that provides a benefit to customers.

For example:

- If you sell office chairs, your product could address the challenge of reducing back pain or increasing comfort for people who spend long hours at a desk.

- If you sell natural skincare products, your product could appeal to customers interested in natural ingredients and being environmentally conscious.

- If you sell durable running shoes, your product could cater to athletes looking for footwear that lasts long, provides support, and prevents injuries.

- If you sell gourmet coffee, your product might connect with coffee enthusiasts looking for unique flavors, high-quality beans, and a connection to Fair Trade growers.

The key is to gain a deeper understanding of your product’s connection to your customers. Ask yourself: What do they need? What are their challenges? How does your product address a need or a problem?

Try This To Better Understand Your Product

Every retail business owner can benefit from some practice in examining their products and how they might appeal to the needs of their customers.

If you aren’t crystal clear on the “why” behind your product(s), start with this activity:

- Workshop: Gather your team (sales, marketing, and service) to identify the key features of your most important products. Off the cuff, what are the primary features that stand out?

- Map: Then, outline the customer journey, from the time someone first discovers your product to the after-sale experience. Discuss what points of interaction a customer is likely to have during this process (e.g., entering your store, being welcomed by a sales rep, trying on clothes, or weighing pricing options).

- Empathize: At each touchpoint, put yourself in the customer’s shoes. How might the customer feel? What else might they need?

- Apply: Based on your customer journey map, consider any improvements to be made to your product or process. Could merchandise be laid out differently? How might you enhance the customer experience? Could post-sale support be improved?

Refining your product is a continuous process, often influenced by customer feedback and actual sales numbers.

Train your team on how they should communicate about your product, associate products with related offerings (cross-selling), and answer customers’ questions to direct them to the most appropriate product (read: solution).

2. Price: What People Pay For The Product

The second pillar, price, refers to the amount of money customers are willing to pay for your product.

This is more than just the number you put on the price tag. It is a representation of your product’s perceived value and the benefit it provides to your customers.

Some things to consider are your own brand’s positioning in your market, your competitors’ pricing, and the quality of materials used to create the product.

For example, if your product is of superior quality, has unique features, and conveys a sense of luxury, premium pricing may be the way to go.

On the other hand, if you’re in a saturated market and can’t outshine your competitors based on quality, you could undercut them on price.

The objective is to find that sweet spot – where your pricing generates a profit but also feels appropriate based on your customer’s perception of the product’s value.

Developing Your Pricing Strategy

Not sure how to price your products? Pricing is both an art and a science.

Here are some steps to follow to develop a profitable yet appropriate pricing strategy:

- Research the Competition: Scope out what your competitors are charging for similar products. Consider the materials used to create your product relative to your competitors. Determine where your product stands in terms of quality, features, convenience, and brand positioning.

- Consider Your Audience: As stated, pricing isn’t just about quality and materials, but also customer perception. Think about who your target customer is, what they need, and what they’re willing to spend. Consider their income level, spending habits, location, and desire/necessity for the product.

- Count the Costs: Figure out how much it costs for you to acquire, market, and sell the product. How many products do you need to sell to turn a profit? Make sure all the associated costs are covered by the price, plus a healthy margin.

- Edit and Adjust: Over time, you might need to test different pricing models to determine what resonates with your customers and still turns a profit. When you apply discounts or bundled pricing, observe how these changes impact sales. Monitor your sales data and customer behavior to adjust your pricing strategy accordingly.
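
The "Count the Costs" question of how many products you need to sell can be sketched as a simple break-even calculation. The figures below are hypothetical, and splitting costs into fixed costs (rent, marketing, and so on) and a per-unit cost is an assumption made for illustration, not something the steps above prescribe.

```python
import math


def break_even_units(fixed_costs: float, unit_cost: float, unit_price: float) -> int:
    """Smallest whole number of units whose sales cover the fixed costs."""
    # What each unit sold contributes toward covering fixed costs.
    contribution = unit_price - unit_cost
    if contribution <= 0:
        raise ValueError("unit_price must exceed unit_cost to ever break even")
    return math.ceil(fixed_costs / contribution)


# Hypothetical figures: $2,000 in fixed costs, a $30 unit cost, a $50 price.
units = break_even_units(fixed_costs=2000.00, unit_cost=30.00, unit_price=50.00)
print(f"Units needed to break even: {units}")
```

With these example numbers, each sale contributes $20, so 100 units cover the fixed costs and every unit sold beyond that is profit.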

Simple Retail Pricing Formula

Here’s a simple retail pricing formula to help you:

Retail Price = Cost of Goods Sold (COGS) / (1 − Desired Profit Margin)

Where:

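- Cost of Goods Sold (COGS): The total cost of producing or purchasing the product, including materials, labor, and shipping. Strictly defined, COGS covers direct costs like these; if you also want the price to cover marketing and other overhead, add a per-unit share of those costs to this figure.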

- Desired Profit Margin: The percentage of profit you want to make on the product, expressed as a decimal.
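
As a rough worked example of the formula, here is a minimal sketch in Python. The function name and the $30 cost and 40% margin are hypothetical values chosen for illustration.

```python
def retail_price(cogs: float, desired_margin: float) -> float:
    """Retail Price = COGS / (1 - Desired Profit Margin)."""
    # The margin is a decimal share of the selling price, so it must be below 1.
    if not 0 <= desired_margin < 1:
        raise ValueError("desired_margin must be a decimal from 0 up to (not including) 1")
    return cogs / (1 - desired_margin)


# Hypothetical product: costs $30 and should earn a 40% profit margin.
price = retail_price(cogs=30.00, desired_margin=0.40)
print(f"Retail price: ${price:.2f}")
```

With these numbers the price comes to $50.00, and the $20 profit is 40% of that selling price. Note that margin is measured against the price, not the cost: a 40% markup on the $30 cost would give only $42.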

Your prices aren’t set in stone. Prices often fluctuate due to market conditions, operational costs, customer behavior, and many other factors.

The key is to effectively communicate the value behind your pricing – and train your team to understand your product’s offerings – so your customers feel confident that the product is worth the price.

3. Place: Where You Sell The Product

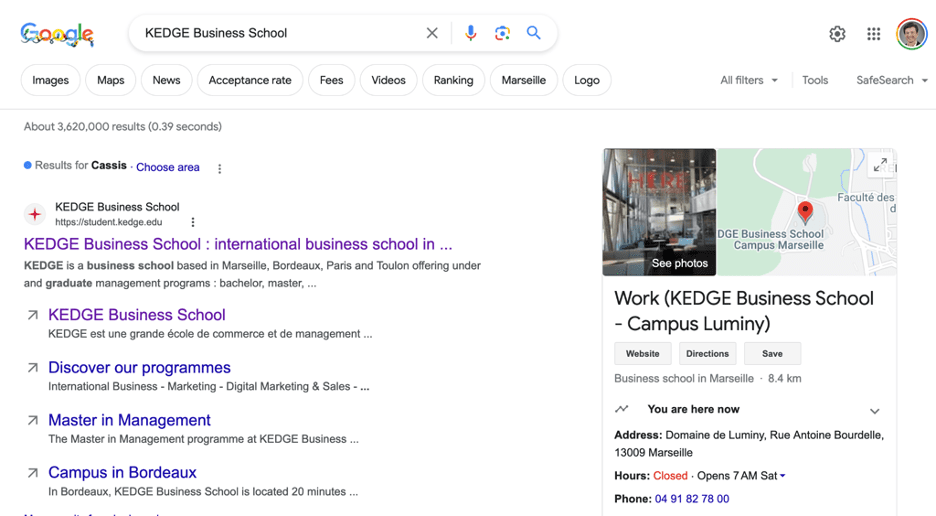

The third pillar of retail marketing, place, refers to the channels through which you advertise and sell your product. This might include your physical storefront, but also includes online marketplaces, an ecommerce website, digital marketing channels, pop-up events, partnerships, and more.

When considering a place, think about where prospective customers are most likely to look for products like yours. Are they scrolling social media? Window shopping while on vacation? Searching blogs for product reviews? Put yourself in their shoes when it comes to searching for products.

For example:

- If you sell luxury handbags, your place might be a high-end boutique located in the prime shopping district.

- If you sell fresh produce, your place could be a local farmers’ market on the weekends.

- If you sell handmade gifts, your place could be a mix of local craft fairs, pop-up shops, and online marketplaces like Etsy.

The Place(s) To Sell For Retail

When it comes to place, the key is to ensure that your products are available where your customers are looking for them. This might include several different channels, in fact.

While you don’t need to (and probably shouldn’t) attempt to sell in all of these places, here are the most common sales channels for retail:

- Brick-and-mortar stores.

- Ecommerce website.

- Online marketplaces (like Amazon, eBay, Etsy, or Faire).

- Social media (Instagram, Facebook, Pinterest, etc.).

- Pop-up shops.

- Mobile apps (e.g., the Shopify app, the Etsy app, or Instacart).

- Wholesale (selling products in bulk to other retailers).

- Direct sales (via parties, door-to-door sales, etc.).

It’s best to focus on one to three channels where your target customers will most likely spend their time. This helps ensure that your marketing budget is allocated to those channels most likely to yield the best return.

4. Promotion: How You Advertise The Product

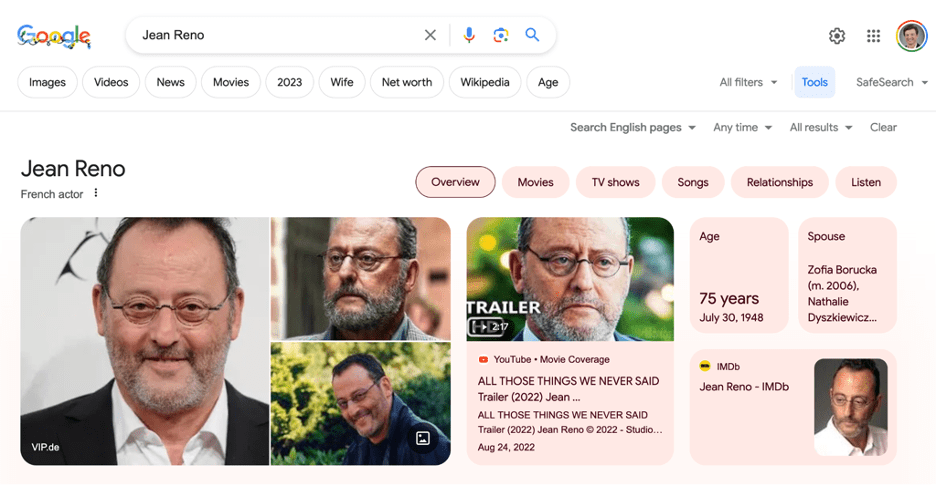

The fourth pillar, promotion, is all about connecting with your target customers and making them more aware of your brand and products.

Making sales isn’t just about being visible, but also about using marketing strategically to draw customers in and convince them to buy from you!

Rarely do people stumble upon a retail store online and immediately make a purchase. They might require multiple touchpoints to discover, research, compare, and finally purchase your product.

The length of this sales cycle can look different for different types of retail businesses, but the idea is the same: Make sure customers have the experience and information they need to make their purchase decision.

For example:

- If you run a clothing store, a customer might first discover your brand through a social media ad, and then visit your website to browse your products. They might sign up for your newsletter to receive a discount code, check out reviews on your blog, and finally make a purchase.

- If you sell electronics, your customers may initially see your new gadget on YouTube, visit your online store to compare specs, read customer reviews, and then make a purchase.

- If you sell home decor, your potential buyers might find your post on Pinterest, visit your website and add a product to their cart, consult a friend, and finally decide to buy a product to complete their home aesthetic.

Obviously, there are many different channels and means of promoting your products. The channels and approach you use will vary depending on what you sell, who your customers are, and your budget.

Increase The Visibility Of Your Retail Business

Once you’ve determined where (place) you want to sell your products, it’s time to use those channels for promotion.

Using the examples listed in the previous section, here are a few ways to promote your retail business:

- Brick-and-mortar store: Use eye-catching window displays and signage to draw in passersby. Host in-store events like product launches or workshops, and offer in-store discounts to incentivize customers.

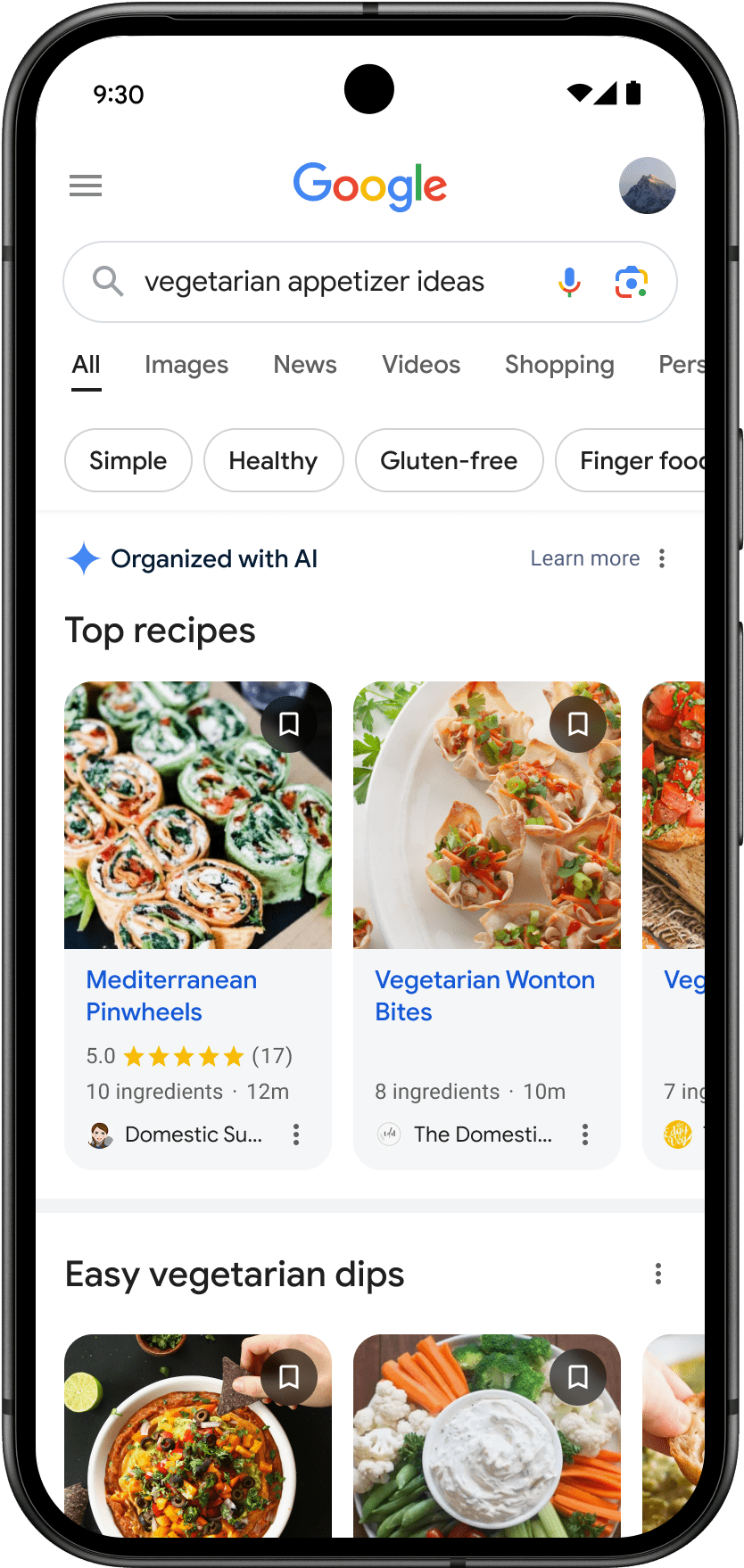

- Ecommerce website: Optimize your website for search engines to drive organic website visitors from Google. Use email marketing to keep customers engaged, send personalized offers, and offer product recommendations.

- Online marketplace: Showcase your products on websites like Amazon, Faire, or Etsy. Optimize your product listings with high-quality images, detailed descriptions, features, and customer reviews. Consider running sponsored ads on the marketplace to increase product visibility.

- Social media: Stay active online with engaging posts, videos, reels, and stories. Reply to customer comments and re-share happy customer reviews. Consider running social media ads to reach your target audience based on shopping behavior, demographics, location, etc.

- Pop-up shop: Partner with other local businesses to attract more customers and foot traffic. Promote your pop-up or event on social media, via email, and through local community channels.

- Mobile apps: Consider connecting your store with a third-party app like Shopify, Uber, or Instacart. Entice customers to subscribe for access to special offers and discounts. Add delivery options to make shopping more convenient for your customers.

- Wholesale: Partner with wholesalers or distributors to close more deals in bulk. Attend trade shows or industry events to showcase your products to potential retail partners.

- Direct sales: Host product demonstrations or home parties to create a personalized shopping experience. Incentivize happy customers or other brands to become referral partners.

- Paid ads: Use Google Ads, Meta Ads, LinkedIn Ads, etc. to reach target customers online. Consider implementing retargeting ads to re-engage visitors who have joined your email list but haven’t made a purchase.

Develop Your Retail Marketing Strategy

Your retail business is unique in the experience and products that it offers. But how do you make your store the obvious choice for potential customers?

With an effective retail marketing strategy, you’ll have everything you need to Price, Place, and Promote your Product, attracting more customers to you!

By focusing on the four pillars of product, price, place, and promotion, you’ll create an experience that resonates with your ideal customers.

You can use a variety of channels – from in-store sales to ecommerce to social media – to promote your business and keep your sales strong.

Ultimately, the success of your retail business depends on your ability to connect with customers and communicate the value your brand has to offer.

Ready to master the 4 Ps? You got this!

Featured Image: PeopleImages.com – Yuri A/Shutterstock