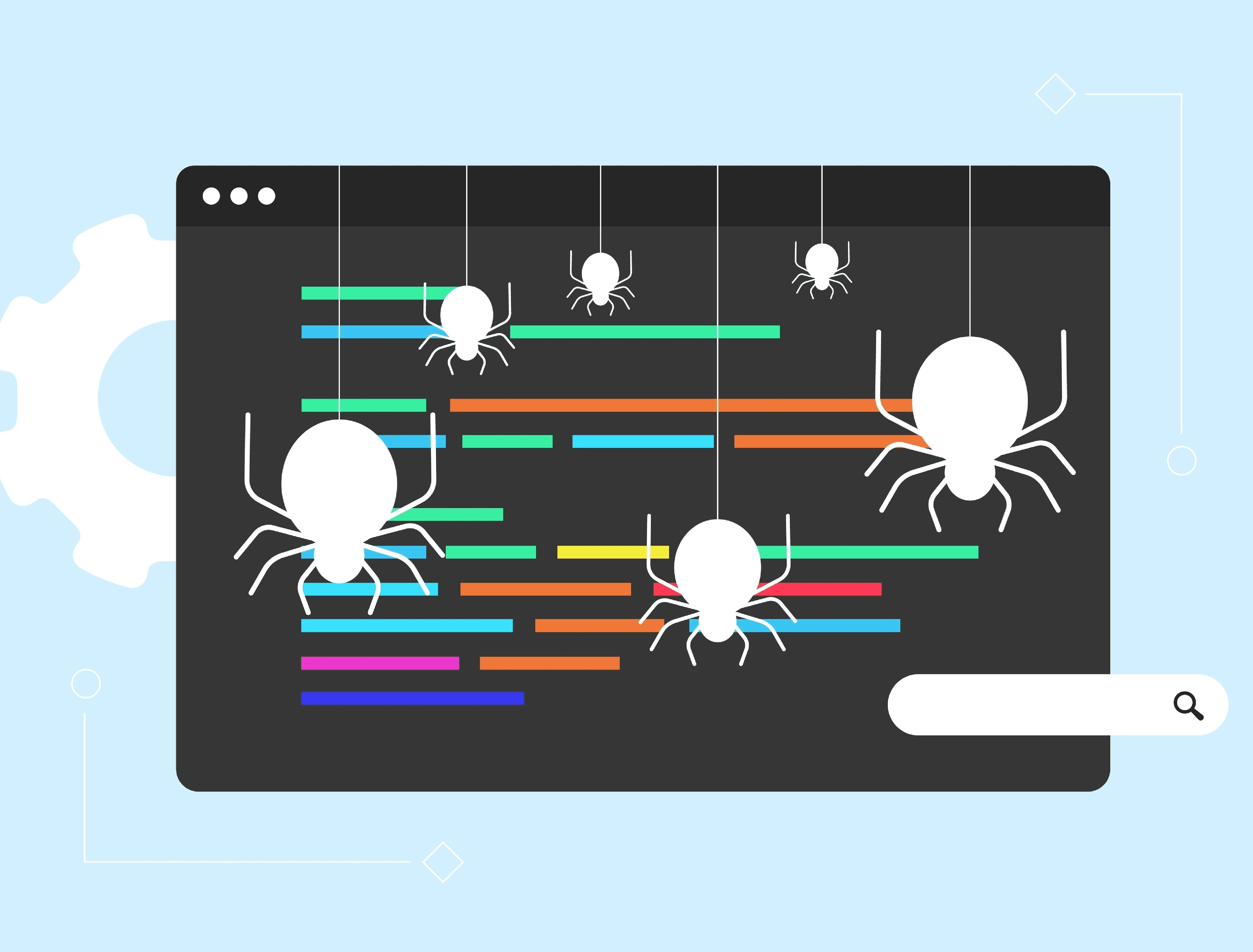

One of the most important elements of search engine optimization, often overlooked, is how easily search engines can discover and understand your website.

This process, known as crawling and indexing, is fundamental to your site’s visibility in search results. Without being crawled, your pages cannot be indexed, and if they are not indexed, they won’t rank or display in SERPs.

In this article, we’ll explore 13 practical steps to improve your website’s crawlability and indexability. By implementing these strategies, you can help search engines like Google better navigate and catalog your site, potentially boosting your search rankings and online visibility.

Whether you’re new to SEO or looking to refine your existing strategy, these tips will help ensure that your website is as search-engine-friendly as possible.

Let’s dive in and discover how to make your site more accessible to search engine bots.

1. Improve Page Loading Speed

Page loading speed is crucial to user experience and search engine crawlability. To improve your page speed, consider the following:

- Upgrade your hosting plan or server to ensure optimal performance.

- Minify CSS, JavaScript, and HTML files to reduce their size and improve loading times.

- Optimize images by compressing them and using appropriate formats (e.g., JPEG for photographs, PNG for transparent graphics).

- Leverage browser caching to store frequently accessed resources locally on users’ devices.

- Reduce the number of redirects and eliminate any unnecessary ones.

- Remove any unnecessary third-party scripts or plugins.

2. Measure & Optimize Core Web Vitals

In addition to general page speed optimizations, focus on improving your Core Web Vitals scores. Core Web Vitals are specific factors that Google considers essential in a webpage’s user experience.

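These include:

- Largest Contentful Paint (LCP): Measures loading performance, that is, how long the largest visible element takes to render.

- Interaction to Next Paint (INP): Measures how quickly the page responds to user interactions.

- Cumulative Layout Shift (CLS): Measures visual stability, that is, how much the layout shifts unexpectedly.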

To identify issues related to Core Web Vitals, use tools like Google Search Console’s Core Web Vitals report, Google PageSpeed Insights, or Lighthouse. These tools provide detailed insights into your page’s performance and offer suggestions for improvement.

Some ways to optimize for Core Web Vitals include:

- Minimize main thread work by reducing JavaScript execution time.

- Avoid significant layout shifts by setting explicit width and height attributes on media elements and preloading fonts.

- Improve server response times by optimizing your server, routing users to nearby CDN locations, or caching content.

By focusing on both general page speed optimizations and Core Web Vitals improvements, you can create a faster, more user-friendly experience that search engine crawlers can easily navigate and index.
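As a rough illustration, the sketch below rates measurements against the thresholds Google publishes for the three Core Web Vitals: Largest Contentful Paint (LCP), Interaction to Next Paint (INP), and Cumulative Layout Shift (CLS). The function names and the sample values are our own, and the numbers you feed in would come from a tool like PageSpeed Insights.

```python
# Thresholds published by Google: (upper bound for "good", upper bound for
# "needs improvement"). LCP is in seconds, INP in milliseconds, CLS is unitless.
THRESHOLDS = {
    "LCP": (2.5, 4.0),
    "INP": (200, 500),
    "CLS": (0.1, 0.25),
}


def rate_metric(name, value):
    """Return 'good', 'needs improvement', or 'poor' for one metric."""
    good_max, needs_improvement_max = THRESHOLDS[name]
    if value <= good_max:
        return "good"
    if value <= needs_improvement_max:
        return "needs improvement"
    return "poor"


def rate_page(metrics):
    """Rate every metric in a {name: value} dictionary."""
    return {name: rate_metric(name, value) for name, value in metrics.items()}


# Hypothetical measurements for a single page.
report = rate_page({"LCP": 3.1, "INP": 180, "CLS": 0.3})
```

A report like this makes it easier to decide which metric to work on first.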

3. Optimize Crawl Budget

Crawl budget refers to the number of pages Google will crawl on your site within a given timeframe. This budget is determined by factors such as your site’s size, health, and popularity.

If your site has many pages, it’s necessary to ensure that Google crawls and indexes the most important ones. Here are some ways to optimize for crawl budget:

- Ensure your site’s structure is clean and easy to navigate, with a clear hierarchy.

- Identify and eliminate any duplicate content, as this can waste crawl budget on redundant pages.

- Use the robots.txt file to block Google from crawling unimportant pages, such as staging environments or admin pages.

- Implement canonicalization to consolidate signals from multiple versions of a page (e.g., with and without query parameters) into a single canonical URL.

- Monitor your site’s crawl stats in Google Search Console to identify any unusual spikes or drops in crawl activity, which may indicate issues with your site’s health or structure.

- Regularly update and resubmit your XML sitemap to ensure Google has an up-to-date list of your site’s pages.
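To see how many URL variants may be competing for the same crawl budget, you could group crawled URLs by a normalized form. This is a minimal sketch: the list of ignored parameters and the decision to treat trailing slashes as equivalent are assumptions that you would need to adapt to your own site.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that do not change what the page shows.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}


def normalize_url(url):
    """Lowercase scheme and host, drop ignored parameters and the fragment."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    # Assumption: /shoes and /shoes/ serve the same content on this site.
    path = parts.path.rstrip("/") or "/"
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), path, urlencode(kept), "")
    )


def group_variants(urls):
    """Return {normalized URL: [variants]} for URLs with more than one variant."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize_url(url)].append(url)
    return {key: variants for key, variants in groups.items() if len(variants) > 1}
```

Each group with more than one variant is a candidate for a canonical tag or a cleanup of internal links.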

4. Strengthen Internal Link Structure

A good site structure and internal linking are foundational elements of a successful SEO strategy. A disorganized website is difficult for search engines to crawl, which makes internal linking one of the most important things a website can do.

But don’t just take our word for it. Here’s what Google’s search advocate, John Mueller, had to say about it:

“Internal linking is super critical for SEO. I think it’s one of the biggest things that you can do on a website to kind of guide Google and guide visitors to the pages that you think are important.”

If your internal linking is poor, you also risk orphaned pages, that is, pages that no other part of your website links to. Because nothing is directed to these pages, search engines can only find them through your sitemap.

To eliminate this problem and others caused by poor structure, create a logical internal structure for your site.

Your homepage should link to subpages supported by pages further down the pyramid. These subpages should then have contextual links that feel natural.

Another thing to keep an eye on is broken links, including those caused by typos in the URL. A broken link leads to the dreaded 404 error. In other words, page not found.

The problem is that broken links are not helping but harming your crawlability.

Double-check your URLs, particularly if you’ve recently undergone a site migration, bulk delete, or structure change. And make sure you’re not linking to old or deleted URLs.

Other best practices for internal linking include using anchor text instead of linked images, and adding a “reasonable number” of links on a page (there are different ratios of what is reasonable for different niches, but adding too many links can be seen as a negative signal).

Oh yeah, and ensure you’re using follow links for internal links.
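If you have a crawl export that lists which pages link to which, a short script can flag pages that no internal link path reaches, along with how many clicks each page is from the homepage. The sketch below assumes a hypothetical dictionary that maps each known page to the internal links found on it.

```python
from collections import deque


def crawl_depths(links, start="/"):
    """Breadth-first walk of internal links; returns {page: clicks from start}."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def find_orphans(links, start="/"):
    """Known pages that cannot be reached by following links from start."""
    reachable = crawl_depths(links, start)
    return sorted(set(links) - set(reachable))


# Hypothetical site: /old-landing links out, but nothing links to it.
site_links = {
    "/": ["/blog", "/products"],
    "/blog": ["/blog/post-1"],
    "/products": [],
    "/blog/post-1": ["/"],
    "/old-landing": ["/"],
}
orphans = find_orphans(site_links)
```

Pages with a large click depth are worth a look as well, since they take crawlers longer to discover.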

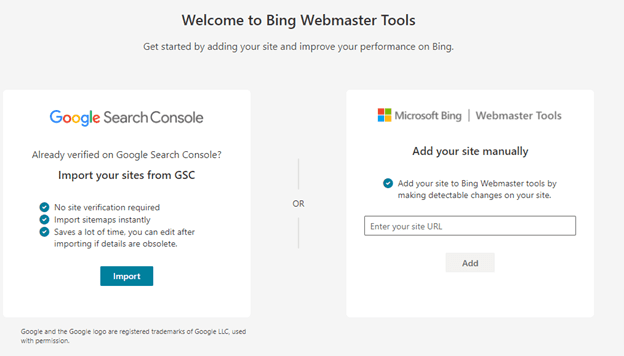

5. Submit Your Sitemap To Google

Given enough time, and assuming you haven’t told it not to, Google will crawl your site. And that’s great, but it’s not helping your search ranking while you wait.

If you recently made changes to your content and want Google to know about them immediately, you should submit a sitemap to Google Search Console.

A sitemap is a file that typically lives in your root directory. It serves as a roadmap for search engines with direct links to every page on your site.

This benefits indexability because it allows Google to learn about multiple pages simultaneously. A crawler may have to follow five internal links to discover a deep page, but by submitting an XML sitemap, it can find all of your pages with a single visit to your sitemap file.

Submitting your sitemap to Google is particularly useful if you have a deep website, frequently add new pages or content, or your site does not have good internal linking.
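Most content management systems generate a sitemap for you, but the format is simple enough to build yourself. Here is a minimal sketch using Python’s standard library; the URLs and dates are placeholders, and the `build_sitemap` helper is our own naming.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from an iterable of (url, last_modified) tuples."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date, e.g. 2024-01-15
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


sitemap_xml = build_sitemap(
    [
        ("https://example.com/", "2024-01-15"),
        ("https://example.com/blog/post-1", "2024-01-10"),
    ]
)
```

You would save the result as a file such as sitemap.xml and submit its URL in Google Search Console.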

6. Update Robots.txt Files

You’ll want to have a robots.txt file for your website. It’s a plain text file in your website’s root directory that tells search engines how you would like them to crawl your site. Its primary use is to manage bot traffic and keep your site from being overloaded with requests.

Where this comes in handy in terms of crawlability is limiting which pages Google crawls and, in turn, indexes. For example, you probably don’t want pages like directories, shopping carts, and tags in Google’s index.

Of course, this helpful text file can also negatively impact your crawlability. It’s well worth looking at your robots.txt file (or having an expert do it if you’re not confident in your abilities) to see if you’re inadvertently blocking crawler access to your pages.

Some common mistakes in robots.txt files include:

- Robots.txt is not in the root directory.

- Poor use of wildcards.

- Noindex in robots.txt.

- Blocked scripts, stylesheets, and images.

- No sitemap URL.

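One way to catch accidental blocking is to test your most important URLs against your robots.txt rules. The sketch below uses Python’s built-in `urllib.robotparser`; the rules and URLs are made up for illustration. Keep in mind that this parser does simple prefix matching and does not implement Google’s wildcard extensions, so treat the result as a first check rather than the final word.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /admin/

Sitemap: https://example.com/sitemap.xml
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given rules do not allow user_agent to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


important_pages = [
    "https://example.com/blog/post-1",
    "https://example.com/cart/checkout",
]
blocked = blocked_urls(ROBOTS_TXT, important_pages)
```

If a page you want indexed shows up in the blocked list, the matching Disallow rule is the place to start looking.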

7. Check Your Canonicalization

A canonical tag indicates to Google which page is the main page to give authority to when you have two or more pages that are similar, or even duplicate. However, this is only a hint, not a directive, and Google does not always follow it.

Canonicals can be a helpful way to tell Google to index the pages you want while skipping duplicates and outdated versions.

But this opens the door for rogue canonical tags. These refer to older versions of a page that no longer exist, leading to search engines indexing the wrong pages and leaving your preferred pages invisible.

To eliminate this problem, use a URL inspection tool to scan for rogue tags and remove them.

If your website is geared towards international traffic, i.e., if you direct users in different countries to different canonical pages, you need to have canonical tags for each language. This ensures your pages are indexed in each language your site uses.
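A simple script can help spot rogue canonical tags across many pages at once. This sketch parses a page’s HTML with Python’s standard `html.parser` and checks whether the canonical URL is one you know to be live. The `audit_canonical` helper, its status labels, and the set of known URLs are assumptions for illustration.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag on a page."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values and attributes.get("href"):
            self.canonicals.append(attributes["href"])


def audit_canonical(html, live_urls):
    """Return 'missing', 'multiple', 'rogue', or 'ok' for one page."""
    finder = CanonicalFinder()
    finder.feed(html)
    if not finder.canonicals:
        return "missing"
    if len(finder.canonicals) > 1:
        return "multiple"
    return "ok" if finder.canonicals[0] in live_urls else "rogue"


page = '<html><head><link rel="canonical" href="https://example.com/old-page"></head></html>'
status = audit_canonical(page, live_urls={"https://example.com/new-page"})
```

Here the page points to a URL that is not in the list of live pages, so it gets flagged as rogue.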

8. Perform A Site Audit

Beyond the individual fixes covered in the other steps, there’s one more thing you need to do to ensure your site is optimized for crawling and indexing: a site audit.

That starts with checking the percentage of pages Google has indexed for your site.

Check Your Indexability Rate

Your indexability rate is the number of pages in Google’s index divided by the number of pages on your website.

You can find out how many pages are in the Google index by going to the “Pages” tab in Google Search Console, and you can check the number of pages on your website in your CMS admin panel.

There’s a good chance your site will have some pages you don’t want indexed, so this number likely won’t be 100%. However, if the indexability rate is below 90%, you have issues that need investigation.
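The calculation itself is straightforward. As a quick worked example with made-up numbers:

```python
def indexability_rate(indexed_pages, total_pages):
    """Share of a site's pages that are in Google's index."""
    if total_pages <= 0:
        raise ValueError("total_pages must be positive")
    return indexed_pages / total_pages


# Hypothetical counts: 850 indexed pages out of 1,000 pages on the site.
rate = indexability_rate(indexed_pages=850, total_pages=1000)
print(f"{rate:.0%}")  # prints 85%
```

At 85%, this hypothetical site falls below the 90% mark and would warrant a closer look.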

You can get your non-indexed URLs from Search Console and run an audit for them. This could help you understand what is causing the issue.

Another helpful site auditing tool included in Google Search Console is the URL Inspection Tool. This allows you to see what Google spiders see, which you can then compare to actual webpages to understand what Google is unable to render.

Audit (And Request Indexing) Newly Published Pages

Any time you publish new pages to your website or update your most important pages, you should ensure they’re being indexed. Go into Google Search Console and use the inspection tool to make sure they’re all showing up. If not, request indexing on the page and see if this takes effect – usually within a few hours to a day.

If you’re still having issues, an audit can also give you insight into which other parts of your SEO strategy are falling short, so it’s a double win. You can scale your audit process with dedicated site-auditing tools.

9. Check For Duplicate Content

Duplicate content is another reason bots can get hung up while crawling your site. Basically, your coding structure has confused them, and they don’t know which version to index. This could be caused by things like session IDs, redundant content elements, and pagination issues.

Sometimes, this will trigger an alert in Google Search Console, telling you Google is encountering more URLs than it thinks it should. If you haven’t received one, check your crawl results for duplicate or missing tags or URLs with extra characters that could be creating extra work for bots.

Correct these issues by fixing tags, removing pages, or adjusting Google’s access.
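If you have the extracted text of your pages, you can look for exact duplicates by comparing content fingerprints. The following is a simple sketch, with a hypothetical dictionary that maps URLs to their body text. It only catches pages whose text is identical after whitespace and case are normalized, not near-duplicates.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text):
    """Hash the text after collapsing whitespace and lowercasing."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages):
    """Return groups of URLs whose body text is identical after normalizing."""
    by_fingerprint = defaultdict(list)
    for url, text in pages.items():
        by_fingerprint[content_fingerprint(text)].append(url)
    return [sorted(urls) for urls in by_fingerprint.values() if len(urls) > 1]


# Hypothetical pages: a session ID creates a second URL with the same content.
pages = {
    "/shoes": "Red  shoes\non sale",
    "/shoes?sessionid=1": "red shoes on sale ",
    "/hats": "Blue hats",
}
duplicates = find_duplicates(pages)
```

Each group returned is a set of URLs that serve the same text and may need a canonical tag, a redirect, or removal.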

10. Eliminate Redirect Chains And Internal Redirects

As websites evolve, redirects are a natural byproduct, directing visitors from one page to a newer or more relevant one. But while they’re common on most sites, if you’re mishandling them, you could inadvertently sabotage your indexing.

You can make several mistakes when creating redirects, but one of the most common is redirect chains. These occur when there’s more than one redirect between the link clicked on and the destination. Google doesn’t consider this a positive signal.

In more extreme cases, you may initiate a redirect loop, in which a page redirects to another page, which redirects to another page, and so on, until it eventually links back to the first page. In other words, you’ve created a never-ending loop that goes nowhere.

Check your site’s redirects using Screaming Frog, Redirect-Checker.org, or a similar tool.
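To illustrate what these tools look for, here is a small sketch that follows a map of redirects and labels each starting URL as fine, part of a chain, or stuck in a loop. The redirect map is hypothetical, and in practice you would export it from your server configuration or a crawl.

```python
def trace_redirects(start, redirects, max_hops=10):
    """Follow start through a {source: target} map; return (path, status)."""
    path = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        path.append(current)
        if current in seen:
            return path, "loop"
        seen.add(current)
        if len(path) - 1 > max_hops:
            return path, "too long"
    hops = len(path) - 1
    status = "chain" if hops > 1 else "ok"
    return path, status


# Hypothetical redirect map: /a needs two hops, and /x and /y point at each other.
redirects = {"/a": "/b", "/b": "/c", "/x": "/y", "/y": "/x"}
chain_result = trace_redirects("/a", redirects)
loop_result = trace_redirects("/x", redirects)
```

For a chain, the usual fix is to point the first URL straight at the final destination.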

11. Fix Broken Links

Similarly, broken links can wreak havoc on your site’s crawlability. You should regularly check your site to ensure you don’t have broken links, as this will hurt your SEO results and frustrate human users.

There are a number of ways you can find broken links on your site. You can manually evaluate every link (header, footer, navigation, in-text, etc.), or you can use Google Search Console, Analytics, or Screaming Frog to find 404 errors.

Once you’ve found broken links, you have three options for fixing them: redirecting them (see the section above for caveats), updating them, or removing them.
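For a small site, a basic link check can also be scripted. The sketch below is a rough starting point: it sends a HEAD request to each URL using Python’s standard library and reports anything that returns an error status or fails to respond. The helper names are our own, and some servers reject HEAD requests, so you may need to fall back to GET.

```python
import urllib.error
import urllib.request


def http_status(url, timeout=10):
    """Return the HTTP status code for url, or None if no response arrives."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # 4xx and 5xx responses arrive as exceptions
    except OSError:
        return None  # DNS failure, refused connection, timeout, etc.


def find_broken_links(links, get_status=http_status):
    """Return {url: status} for links that error out or do not respond."""
    broken = {}
    for url in links:
        status = get_status(url)
        if status is None or status >= 400:
            broken[url] = status
    return broken
```

The `get_status` parameter lets you swap in a different checker, for example one that reads status codes from a crawl export instead of making live requests.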

12. IndexNow

IndexNow is a protocol that allows websites to proactively inform search engines about content changes, ensuring faster indexing of new, updated, or removed content. By strategically using IndexNow, you can boost your site’s crawlability and indexability.

However, using IndexNow judiciously and only for meaningful content updates that substantially enhance your website’s value is crucial. Examples of significant changes include:

- For ecommerce sites: Product availability changes, new product launches, and pricing updates.

- For news websites: Publishing new articles, issuing corrections, and removing outdated content.

- For dynamic websites: Updating financial data at critical intervals, changing sports scores and statistics, and modifying auction statuses.

When submitting URLs, keep these guidelines in mind:

- Avoid overusing IndexNow by submitting duplicate URLs too frequently within a short timeframe, as this can negatively impact trust and rankings.

- Ensure that your content is fully live on your website before notifying IndexNow.

If possible, integrate IndexNow with your content management system (CMS) for seamless updates. If you’re manually handling IndexNow notifications, follow best practices and notify search engines of both new/updated content and removed content.

By incorporating IndexNow into your content update strategy, you can ensure that search engines have the most current version of your site’s content, improving crawlability, indexability, and, ultimately, your search visibility.
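If you do handle submissions yourself, a request might be assembled roughly like this. The sketch follows the JSON format described in the IndexNow documentation (`host`, `key`, `keyLocation`, `urlList`), while the key, key file location, and URLs are placeholders. It only builds the request, so nothing is sent until you pass it to `urllib.request.urlopen`.

```python
import json
import urllib.request
from urllib.parse import urlsplit

INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"


def build_indexnow_request(urls, key, key_location):
    """Build (but do not send) a POST request that submits urls to IndexNow."""
    unique_urls = list(dict.fromkeys(urls))  # drop repeats, keep order
    hosts = {urlsplit(url).netloc for url in unique_urls}
    if len(hosts) != 1:
        raise ValueError("all URLs in one submission must share a single host")
    payload = {
        "host": hosts.pop(),
        "key": key,
        "keyLocation": key_location,
        "urlList": unique_urls,
    }
    return urllib.request.Request(
        INDEXNOW_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


# Placeholder key and URLs, for illustration only.
request = build_indexnow_request(
    urls=[
        "https://example.com/products/new-item",
        "https://example.com/products/new-item",
        "https://example.com/products/sold-out-item",
    ],
    key="hypothetical-key",
    key_location="https://example.com/hypothetical-key.txt",
)
```

Removing repeated URLs before submitting is one small way to avoid sending the same URL more often than needed.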

13. Implement Structured Data To Enhance Content Understanding

Structured data is a standardized format for providing information about a page and classifying its content.

By adding structured data to your website, you can help search engines better understand and contextualize your content, improving your chances of appearing in rich results and enhancing your visibility in search.

There are several types of structured data, including:

- Schema.org: A collaborative effort by Google, Bing, Yandex, and Yahoo! to create a unified vocabulary for structured data markup.

- JSON-LD: A JavaScript-based format for encoding structured data that can be embedded in a web page’s `<head>` or `<body>`.

- Microdata: An HTML specification used to nest structured data within HTML content.

To implement structured data on your site, follow these steps:

- Identify the type of content on your page (e.g., article, product, event) and select the appropriate schema.

- Mark up your content using the schema’s vocabulary, ensuring that you include all required properties and follow the recommended format.

- Test your structured data using tools like Google’s Rich Results Test or Schema.org’s Validator to ensure it’s correctly implemented and free of errors.

- Monitor your structured data performance using Google Search Console’s Rich Results report. This report shows which rich results your site is eligible for and any issues with your implementation.
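To make the markup step more concrete, here is a minimal sketch that generates JSON-LD for an article using Schema.org’s `Article` type. The helper name and sample values are hypothetical, and the properties shown are only a small subset, so check Google’s documentation for the properties each rich result type expects.

```python
import json


def article_json_ld(headline, author_name, date_published, url):
    """Return a <script> tag containing JSON-LD markup for an article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,  # ISO 8601 date
        "mainEntityOfPage": url,
    }
    # Escape "<" so that text inside the JSON cannot close the script tag early.
    body = json.dumps(data, indent=2).replace("<", "\\u003c")
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Placeholder values, for illustration only.
snippet = article_json_ld(
    headline="How To Improve Crawlability",
    author_name="Jane Doe",
    date_published="2024-01-15",
    url="https://example.com/blog/crawlability",
)
```

You would place the resulting snippet in the page’s HTML and then run the page through the Rich Results Test.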

Some common types of content that can benefit from structured data include:

- Articles and blog posts.

- Products and reviews.

- Events and ticketing information.

- Recipes and cooking instructions.

- Person and organization profiles.

By implementing structured data, you can provide search engines with more context about your content, making it easier for them to understand and index your pages accurately.

This can improve search results visibility, mainly through rich results like featured snippets, carousels, and knowledge panels.

Wrapping Up

By following these 13 steps, you can make it easier for search engines to discover, understand, and index your content.

Remember, this process isn’t a one-time task. Regularly check your site’s performance, fix any issues that arise, and stay up-to-date with search engine guidelines.

With consistent effort, you’ll create a more search-engine-friendly website with a better chance of ranking well in search results.

Don’t be discouraged if you find areas that need improvement. Every step to enhance your site’s crawlability and indexability is a step towards better search performance.

Start with the basics, like improving page speed and optimizing your site structure, and gradually work your way through more advanced techniques.

By making your website more accessible to search engines, you’re not just improving your chances of ranking higher – you’re also creating a better experience for your human visitors.

So roll up your sleeves, implement these tips, and watch as your website becomes more visible and valuable in the digital landscape.


Featured Image: BestForBest/Shutterstock