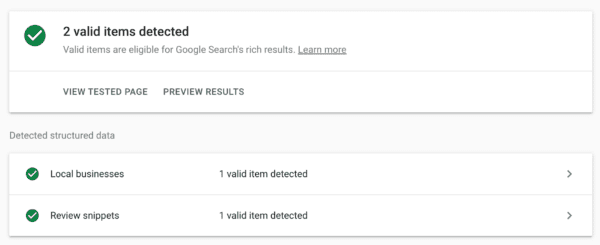

8 Common Robots.txt Issues And How To Fix Them via @sejournal, @TaylorDanRW

Robots.txt is a useful and powerful tool to instruct search engine crawlers on how you want them to crawl your website. Managing this file is a key component of good technical SEO.

It is not all-powerful – in Google’s own words, “it is not a mechanism for keeping a web page out of Google” – but it can help prevent your site or server from being overloaded by crawler requests.

If you use robots.txt to restrict crawling on your site, you must be certain it’s being used properly.

This is particularly important if you use dynamic URLs or other methods that generate a theoretically infinite number of pages.

In this guide, we will look at some of the most common issues with the robots.txt file, their impact on your website and your search presence, and how to fix these issues if you think they have occurred.

But first, let’s take a quick look at robots.txt and its alternatives.

What Is Robots.txt?

Robots.txt uses a plain text file format and is placed in the root directory of your website.

It must be in the topmost directory of your site. Search engines will simply ignore it if you place it in a subdirectory.

Despite its great power, robots.txt is often a relatively simple document and a basic robots.txt file can be created in seconds using an editor like Notepad. You can have fun with them and add additional messaging for users to find.

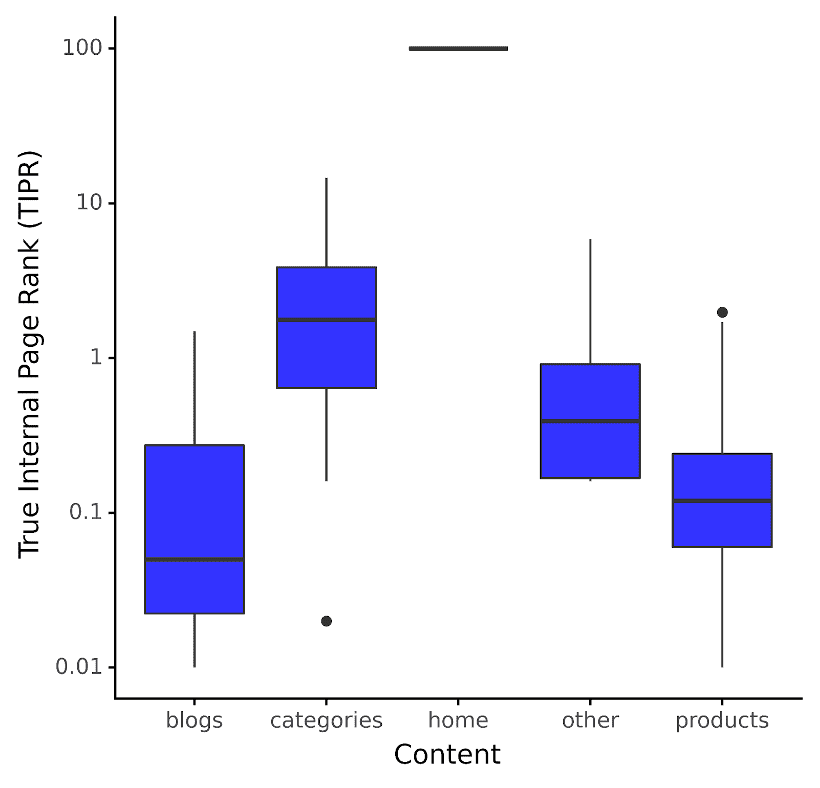

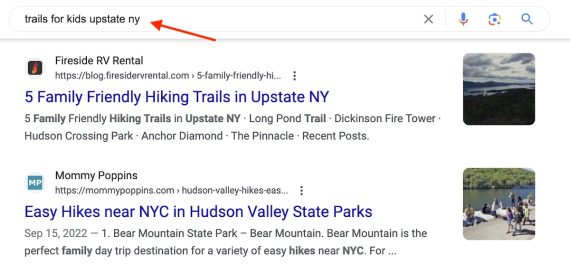

Image from author, February 2024

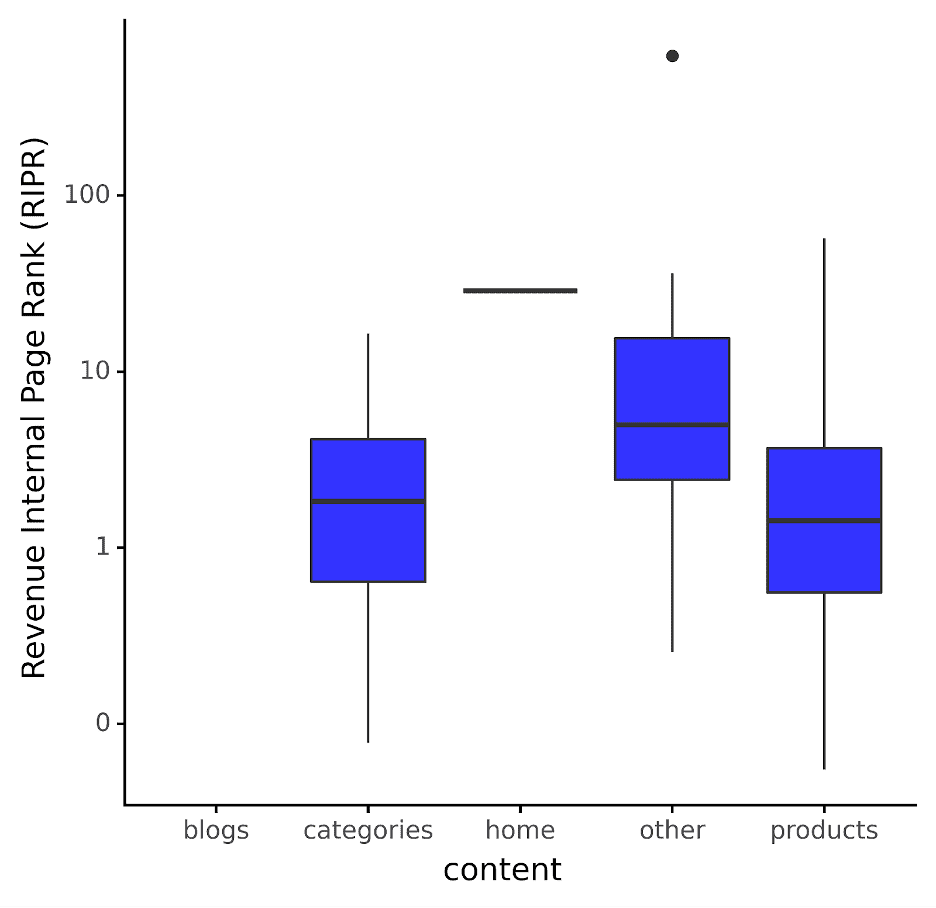

Image from author, February 2024

There are other ways to achieve some of the same goals that robots.txt is usually used for.

Individual pages can include a robots meta tag within the page code itself.

You can also use the X-Robots-Tag HTTP header to influence how (and whether) content is shown in search results.

What Can Robots.txt Do?

Robots.txt can achieve a variety of results across a range of different content types:

Webpages can be blocked from being crawled.

They may still appear in search results, but they will not have a text description. Non-HTML content on the page will not be crawled either.

Media files can be blocked from appearing in Google search results.

This includes images, video, and audio files.

If the file is public, it will still “exist” online and can be viewed and linked to, but it will not show in Google searches.

Resource files like unimportant external scripts can be blocked.

But this means if Google crawls a page that requires that resource to load, the Googlebot robot will “see” a version of the page as if that resource did not exist, which may affect indexing.

You cannot use robots.txt to completely block a webpage from appearing in Google’s search results.

To achieve that, you must use an alternative method, such as adding a noindex meta tag to the head of the page.

How Dangerous Are Robots.txt Mistakes?

A mistake in robots.txt can have unintended consequences, but it’s often not the end of the world.

The good news is that by fixing your robots.txt file, you can recover from any errors quickly and (usually) in full.

Google’s guidance to web developers says this on the subject of robots.txt mistakes:

“Web crawlers are generally very flexible and typically will not be swayed by minor mistakes in the robots.txt file. In general, the worst that can happen is that incorrect [or] unsupported directives will be ignored.

Bear in mind though that Google can’t read minds when interpreting a robots.txt file; we have to interpret the robots.txt file we fetched. That said, if you are aware of problems in your robots.txt file, they’re usually easy to fix.”

8 Common Robots.txt Mistakes

- Robots.txt Not In The Root Directory.

- Poor Use Of Wildcards.

- Noindex In Robots.txt.

- Blocked Scripts And Stylesheets.

- No XML Sitemap URL.

- Access To Development Sites.

- Using Absolute URLs.

- Deprecated & Unsupported Elements.

If your website behaves strangely in the search results, your robots.txt file is a good place to look for any mistakes, syntax errors, and overreaching rules.

Let’s take a look at each of the above mistakes in more detail and see how to ensure you have a valid robots.txt file.

1. Robots.txt Not In The Root Directory

Search robots can only discover the file if it’s in your root folder.

That’s why there should be only a forward slash between the .com (or equivalent domain) of your website, and the ‘robots.txt’ filename, in the URL of your robots.txt file.

If there’s a subfolder in there, your robots.txt file is probably not visible to the search robots, and your website is probably behaving as if there was no robots.txt file at all.

To fix this issue, move your robots.txt file to your root directory.

It’s worth noting that you will need write access to your site’s root directory to do this.

Some content management systems will upload files to a “media” subdirectory (or something similar) by default, so you might need to circumvent this to get your robots.txt file in the right place.
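As a rough illustration of the "only a forward slash" rule above, the following Python sketch derives the one location where crawlers look for robots.txt from any page URL, and checks whether a given robots.txt URL sits at the root. The function names and the example.com URLs are hypothetical and used only for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the root-level robots.txt URL for the host serving page_url."""
    parts = urlsplit(page_url)
    # Keep scheme and host, replace the path, drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def is_root_robots_url(url: str) -> bool:
    """True only if the path is exactly /robots.txt, with no subfolder."""
    return urlsplit(url).path == "/robots.txt"


print(robots_txt_url("https://www.example.com/blog/post?page=2"))
print(is_root_robots_url("https://www.example.com/media/robots.txt"))
```

A file uploaded to a subfolder such as /media/ fails the second check, which matches the situation where crawlers behave as if no robots.txt exists.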

2. Poor Use Of Wildcards

Robots.txt supports two wildcard characters:

- Asterisk (*) – matches any sequence of valid characters (including none), like a Joker in a deck of cards.

- Dollar sign ($) – denotes the end of a URL, allowing you to apply rules only to the final part of the URL, such as the filetype extension.

It’s sensible to adopt a minimalist approach to using wildcards, as they have the potential to apply restrictions to a much broader portion of your website.

It’s also relatively easy to end up blocking robot access from your entire site with a poorly placed asterisk.

Test your wildcard rules using a robots.txt testing tool to ensure they behave as expected. Be cautious with wildcard usage to prevent accidentally blocking or allowing too much.
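To get a feel for how the two wildcards behave before reaching for a testing tool, here is a minimal Python sketch that translates a robots.txt path pattern into a regular expression. It is a simplified approximation of the matching described above, not Google's actual parser, and the function names and sample paths are hypothetical.

```python
import re


def pattern_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a robots.txt path pattern into a compiled regex."""
    # A trailing $ anchors the rule to the end of the URL.
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Each * matches any run of characters, including none.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + (r"\Z" if anchored else ""))


def rule_matches(pattern: str, path: str) -> bool:
    """True if the rule pattern applies to the given URL path."""
    # re.match anchors at the start, so rules behave like path prefixes.
    return pattern_to_regex(pattern).match(path) is not None


for pattern in ("/*.pdf$", "/*.pdf", "/*"):
    for path in ("/files/report.pdf", "/files/report.pdf?download=1", "/about"):
        print(pattern, path, rule_matches(pattern, path))
```

Note how the pattern `/*` matches every path: attached to a Disallow rule, that is the kind of poorly placed asterisk that blocks an entire site.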

3. Noindex In Robots.txt

This one is more common on websites that are over a few years old.

Google has stopped obeying noindex rules in robots.txt files as of September 1, 2019.

If your robots.txt file was created before that date and still contains noindex instructions, those pages will likely appear in Google’s search results.

The solution to this problem is to implement an alternative “noindex” method.

One option is the robots meta tag, which you can add to the head of any webpage you want to prevent Google from indexing.
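Once the tag is in place, it can be useful to confirm that a page really carries it. The sketch below, using only Python's standard library, parses a page's HTML and reports whether a robots meta tag with a noindex directive is present. The class and function names and the sample HTML are assumptions made for illustration.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() == "robots":
            for directive in attr.get("content", "").split(","):
                if directive.strip():
                    self.directives.append(directive.strip().lower())


def has_noindex(html: str) -> bool:
    """True if the HTML contains a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(has_noindex(page))
```

Keep in mind that a crawler can only see this tag if the page itself is not blocked in robots.txt.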

4. Blocked Scripts And Stylesheets

It might seem logical to block crawler access to external JavaScripts and cascading stylesheets (CSS).

However, remember that Googlebot needs access to CSS and JS files to “see” your HTML and PHP pages correctly.

If your pages are behaving oddly in Google’s results, or it looks like Google is not seeing them correctly, check whether you are blocking crawler access to required external files.

A simple solution to this is to remove the line from your robots.txt file that is blocking access.

Or, if you have some files you do need to block, insert an exception that restores access to the necessary CSS and JavaScript.
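The exception approach relies on how Google resolves conflicting rules: the most specific (longest) matching path wins, and a tie goes to the less restrictive rule. The Python sketch below models that behavior for plain path prefixes only (no wildcards). The directory names are hypothetical, and this is an illustration of the idea rather than a full robots.txt evaluator.

```python
def is_allowed(rules, path):
    """Decide crawlability from (directive, prefix) rules by longest match."""
    best = ("allow", "")  # no matching rule means crawling is allowed
    for directive, prefix in rules:
        if prefix and path.startswith(prefix):
            longer = len(prefix) > len(best[1])
            tie_goes_to_allow = len(prefix) == len(best[1]) and directive == "allow"
            if longer or tie_goes_to_allow:
                best = (directive, prefix)
    return best[0] == "allow"


# Hypothetical rules: block /assets/ but restore access to CSS and JS.
rules = [
    ("disallow", "/assets/"),
    ("allow", "/assets/css/"),
    ("allow", "/assets/js/"),
]

for path in ("/assets/css/site.css", "/assets/js/app.js", "/assets/private/data.json"):
    print(path, is_allowed(rules, path))
```

In robots.txt terms, these rules correspond to one Disallow line for the directory followed by Allow lines for the stylesheet and script subfolders.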

5. No XML Sitemap URL

This is more about SEO than anything else.

You can include the URL of your XML sitemap in your robots.txt file.

Because this is the first place Googlebot looks when it crawls your website, this gives the crawler a head start in knowing the structure and main pages of your site.

While this is not strictly an error – as omitting a sitemap should not negatively affect the actual core functionality and appearance of your website in the search results – it’s still worth adding your sitemap URL to robots.txt if you want to give your SEO efforts a boost.
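A quick way to check whether a robots.txt file declares a sitemap is to parse it with Python's standard `urllib.robotparser` module, whose `site_maps()` method is available from Python 3.8. The sample file contents and the example.com URL below are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents with a Sitemap line.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

Sitemap: https://www.example.com/sitemap.xml
"""


def sitemap_urls(robots_txt: str) -> list:
    """Return the sitemap URLs declared in robots.txt (empty list if none)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when no Sitemap line is present.
    return parser.site_maps() or []


print(sitemap_urls(ROBOTS_TXT))
```

The Sitemap line is independent of any user-agent group, so it can sit anywhere in the file.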

6. Access To Development Sites

Blocking crawlers from your live website is a no-no, but so is allowing them to crawl and index your pages that are still under development.

It’s best practice to add a disallow instruction to the robots.txt file of a website under construction so the general public doesn’t see it until it’s finished.

Equally, it’s crucial to remove the disallow instruction when you launch a completed website.

Forgetting to remove this line from robots.txt is one of the most common mistakes among web developers; it can stop your entire website from being crawled and indexed correctly.

If your development site seems to be receiving real-world traffic, or your recently launched website is not performing at all well in search, look for a universal user agent disallow rule in your robots.txt file:

User-Agent: *

Disallow: /

If you see this when you shouldn’t (or don’t see it when you should), make the necessary changes to your robots.txt file and check that your website’s search appearance updates accordingly.
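This check is easy to automate, for example as part of a launch checklist. The following Python sketch uses the standard `urllib.robotparser` module to report whether a robots.txt file blocks the homepage for a given crawler. The function name and sample file contents are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def blocks_everything(robots_txt: str, user_agent: str = "*") -> bool:
    """True if the given crawler may not fetch the site root."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, "/")


staging = "User-Agent: *\nDisallow: /\n"          # expected on a development site
live = "User-Agent: *\nDisallow: /admin/\n"       # expected after launch

print(blocks_everything(staging))
print(blocks_everything(live, "Googlebot"))
```

Running the check against both the development and live robots.txt files catches the mistake in either direction.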

7. Using Absolute URLs

While using absolute URLs in things like canonicals and hreflang is best practice, for URLs in the robots.txt, the inverse is true.

Using relative paths in the robots.txt file is the recommended approach for indicating which parts of a site should not be accessed by crawlers.

This is detailed in Google’s robots.txt documentation, which states:

“A directory or page, relative to the root domain, that may be crawled by the user agent just mentioned.”

When you use an absolute URL, there’s no guarantee that crawlers will interpret it as intended and that the disallow/allow rule will be followed.
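If an existing file already contains absolute URLs in its rules, they can be converted to root-relative paths mechanically. Here is a small Python sketch of that conversion; the function name and the example.com values are hypothetical, and it assumes the absolute URL points at the same host that serves the robots.txt file.

```python
from urllib.parse import urlsplit


def to_relative_rule_path(value: str) -> str:
    """Convert an absolute URL in a rule to a path relative to the root."""
    parts = urlsplit(value)
    if not parts.scheme and not parts.netloc:
        return value  # already a relative path, leave untouched
    path = parts.path or "/"
    # Preserve a query string if the rule targets one.
    return path + ("?" + parts.query if parts.query else "")


print(to_relative_rule_path("https://www.example.com/private/"))
print(to_relative_rule_path("/private/"))
```

The Sitemap line is the exception here: it is expected to be a full URL and should be left as it is.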

8. Deprecated & Unsupported Elements

While the guidelines for robots.txt files haven’t changed much over the years, two elements that are still often included despite being deprecated or unsupported are:

- Crawl-delay.

- Noindex.

While Bing supports crawl-delay, Google doesn’t, yet webmasters still often specify it. You used to be able to limit Googlebot’s crawl rate in Google Search Console, but this setting was removed towards the end of 2023.

Google announced it would stop supporting the noindex directive in robots.txt files in July 2019. Before this date, webmasters were able to use the noindex directive in their robots.txt file.

This was never a widely supported or standardized practice, and the preferred method for noindex was to use the on-page robots meta tag or the X-Robots-Tag HTTP header at a page level.
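To audit an older file for these two elements, a simple line scan is usually enough. The Python sketch below reports the line number of every crawl-delay or noindex directive it finds. The function name and the sample file are hypothetical, and the set of directives reflects only the two elements discussed in this section.

```python
# Directives discussed above that Google does not act on in robots.txt.
UNSUPPORTED_BY_GOOGLE = {"crawl-delay", "noindex"}


def find_unsupported(robots_txt: str) -> list:
    """Return (line_number, directive) pairs for unsupported directives."""
    findings = []
    for number, line in enumerate(robots_txt.splitlines(), start=1):
        content = line.split("#", 1)[0].strip()  # ignore comments
        if ":" not in content:
            continue
        directive = content.split(":", 1)[0].strip().lower()
        if directive in UNSUPPORTED_BY_GOOGLE:
            findings.append((number, directive))
    return findings


sample = "User-agent: *\nCrawl-delay: 10\nNoindex: /old-page/\nDisallow: /admin/\n"
print(find_unsupported(sample))
```

A crawl-delay line may still be worth keeping for Bing, so treat the findings as items to review rather than lines to delete automatically.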

How To Recover From A Robots.txt Error

If a mistake in robots.txt has unwanted effects on your website’s search appearance, the first step is to correct robots.txt and verify that the new rules have the desired effect.

Some SEO crawling tools can help so you don’t have to wait for the search engines to crawl your site next.
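If you prefer a scripted check, a small regression test can confirm the corrected file before search engines revisit it. This Python sketch compares expected crawlability for a handful of paths against the file, using the standard `urllib.robotparser` module. Be aware that this module does simple prefix matching in file order and does not interpret the * and $ wildcards, so it suits plain path rules only. The paths and file contents are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def check_expectations(robots_txt, expectations, user_agent="Googlebot"):
    """Return the paths whose crawlability differs from what is expected."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [
        path
        for path, should_be_crawlable in expectations.items()
        if parser.can_fetch(user_agent, path) != should_be_crawlable
    ]


# Hypothetical expectations: True means the path should be crawlable.
expectations = {"/": True, "/products/widget": True, "/checkout/cart": False}

fixed = "User-agent: *\nDisallow: /checkout/\n"
broken = "User-agent: *\nDisallow: /\n"

print(check_expectations(fixed, expectations))
print(check_expectations(broken, expectations))
```

An empty result means every path behaves as intended; any path listed needs another look before you request a re-crawl.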

When you are confident that robots.txt is behaving as desired, you can try to get your site re-crawled as soon as possible.

Platforms like Google Search Console and Bing Webmaster Tools can help.

Submit an updated sitemap and request a re-crawl of any pages that have been inappropriately delisted.

Unfortunately, you are at the whim of Googlebot – there’s no guarantee as to how long it might take for any missing pages to reappear in the Google search index.

All you can do is take the correct action to minimize that time as much as possible and keep checking until Googlebot picks up the fixed robots.txt.

Final Thoughts

Where robots.txt errors are concerned, prevention is always better than the cure.

On a large revenue-generating website, a stray wildcard that removes your entire website from Google can have an immediate impact on earnings.

Edits to robots.txt should be made carefully by experienced developers, double-checked, and – where appropriate – subject to a second opinion.

If possible, test in a sandbox editor before pushing live on your real-world server to avoid inadvertently creating availability issues.

Remember, when the worst happens, it’s important not to panic.

Diagnose the problem, make the necessary repairs to robots.txt, and resubmit your sitemap for a new crawl.

Your place in the search rankings will hopefully be restored within a matter of days.

Featured Image: M-SUR/Shutterstock