This edited excerpt is from B2B Content Marketing Strategy by Devin Bramhall, ©2025, and is reproduced and adapted with permission from Kogan Page Ltd.

Modern content strategy is no longer about being a brand megaphone, shouting messages across digital space.

Modern content strategy that works is a blended approach designed to create community around shared experiences, build lasting relationships, and establish genuine trust and influence. It’s about leaning into individuality within niche communities by creating content that resonates with individuals and small groups rather than trying to appeal to the masses.

And it’s definitely not the pursuit of ubiquity that brands used to chase by building a dominant presence on every platform and in every community space.

Instead, it’s about taking fewer actions to accomplish more, and sometimes playing a supporting role in the community by elevating others. It’s about building relationships that motivate action rather than force it. Mostly, it’s about creating frameworks and principles to guide and evaluate your decisions so you can develop your own “playbook” that works for your company and community.

Principles Of Good Content Marketing Strategy

Content marketing exists to serve business goals by solving customer pain points. It accomplishes this through education and relationship-building:

- Education attracts potential buyers and influencers by providing immediate value in the form of short-term solutions (awareness and affinity).

- Establishing trust allows your brand to become an ongoing part of your community’s lives by speaking their language, empathizing with their challenges, and solving their problems (nurture and engage).

- Relationship formation creates alignment between external promises and internal experiences – the product delivers on the expectations set by content (convert, grow LTV, and upsell).

The goal is to help first and sell second, so that by the time customers buy, they often feel they reached the decision independently. They become eager to invest in both the product and the relationship. This is how content marketing works organically, based on human behavior.

It’s also the stuff you already know.

Content marketing teams guided by the following principles consistently achieve superior results.

Create Unique Advantage

No other company exists with your exact combination of product, people, and resources. Your first job as a marketer is to identify what you already have that can be leveraged for growth.

This could be your founder’s network, your CMO’s substantial LinkedIn following that overlaps with your target buyers, or a product feature that solves a previously unaddressed problem. It might be an upcoming conference where your CEO is speaking to 300 decision-makers who gather only once per year.

Other advantages might include:

- Budget, software, and technological resources.

- Existing audiences, email lists, or content archives.

- Market position (whether as an established leader or disruptive newcomer).

- Opportunistic events like funding announcements or key hires.

- Your own unique talents, experiences, and connections.

The goal is to create a content strategy that:

- Competitors can’t easily duplicate because they lack your specific advantages.

- Generates exponential impact by leveraging opportunistic events, efficient execution, and activities that serve multiple outcomes simultaneously.

- Is scalable with repeatable elements that compound over time and can expand with relative ease.

A prime example comes from Gong, the revenue intelligence platform. While competitors focused on standard SaaS marketing playbooks, Gong leveraged their unique advantage: Access to millions of sales conversations and the data patterns within them. By sharing insights from this proprietary data, they created content no competitor could replicate, establishing themselves as the definitive source of sales intelligence while simultaneously demonstrating their product’s value.

Serve Outcomes It Can Logically Impact (Better Than Other Approaches)

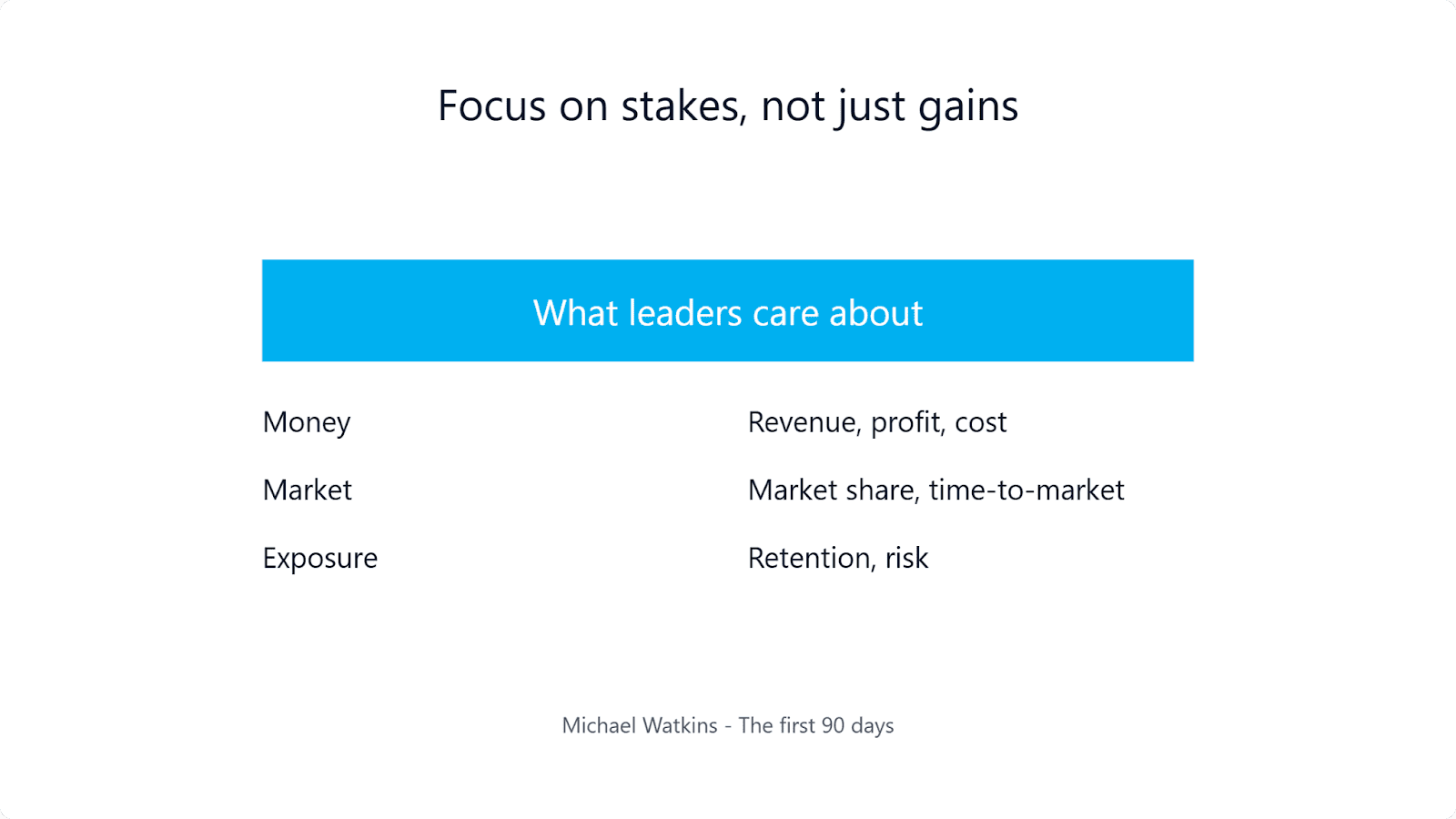

Strategy that serves business goals needs to be measured to confirm that it is serving those outcomes and, ideally, how well it achieves them. Yes, I’m talking about ROI.

The benefit of having clearly defined, quantifiable, time-based outcomes is twofold:

- It helps you narrow down tactics.

- It gives you a target to “bump up against” to extract learnings for continuous improvement.

This principle forces you to evaluate each potential marketing activity against a simple standard: Is this the best way to reach the business outcome we want, or are we doing it because it’s the way we’ve always done it?

Can Be Executed With Existing Resources

A strategy is only as good as your ability to execute it.

Your plan is only strategic if you factor in all constraints, including budget and resources. If you come up with a “brilliant” idea that you know is unlikely to be funded, then it’s not brilliant in the context in which you want to apply it.

So, if you come up with something that could really move the needle and you want funding for it, scope a minimum viable version (MVP) and call it a test. Once you’ve shown impact and dazzled the purse-holders, it will be easier to get budget to expand and do more. Start by asking only for the resources you need to execute a bare-minimum version that demonstrates enough impact to justify additional investment.

One approach that has worked for me (though it’s not a silver bullet) is to treat the pitch like a sales activity. All I need is just enough of the right kind of information that whoever I’m pitching to will:

- Understand without a complex explanation.

- See a type of business impact they recognize as valuable.

- Not care too much about it (i.e., the investment is negligible to them).

Your best-case scenario at this stage is not enthusiasm; it’s indifference. You want them to feel that saying yes is an errand, almost a waste of their time.

This requires keeping a ton of details to yourself, especially the ones your leadership will question. It also helps to make the proposal feel familiar and to show you listened by pointing out where you intentionally factored in something they wanted or advised. Think of it like landing page copy. Your “conversion” is a yes, so what details and messaging will get you that conversion?

It doesn’t matter what size your marketing team is – at some point, you’ll be tasked with showing impact beyond what seems possible with your current resources. This is where strategic thinking becomes essential.

Content marketing strategy plays a crucial role in driving business results. As Richard Rumelt emphasizes in his book “Good Strategy, Bad Strategy,” what sets a strategy apart from a simple plan is that it serves as a unified, thoughtful response to a significant challenge.

A plan is simply a list of activities you know you can accomplish, like running errands in a particular order to minimize time. Strategy, by contrast, means using the resources you have to produce impact that decision-makers recognize, reminding them of that impact repeatedly and in different ways, and then using it as leverage to get the budget to do what you wanted to do in the first place.

This doesn’t mean your strategy can’t be ambitious. Rather, it means being realistic about what you can sustain long enough to see results that you can use to do more later.

Grounded In Facts, Not Best Practices

Choose channels, tactics, and messages based on YOUR customers, not on what others are doing or what industry best practices dictate.

Everything we currently do in marketing was new at some point. SEO, for example, was once considered a growth hack. It wasn’t in the content marketing lexicon, let alone on any list of best practices. Someone discovered that appearing first when people searched for specific solutions could give their company a unique advantage.

This principle requires you to reason from your specific facts:

- How do YOUR customers make purchase decisions?

- What channels do THEY genuinely use for discovery and research?

- What unique circumstances does YOUR company face?

What might appear as constraints – limited budget, market position, team size – can often become advantages if you approach them with curiosity and objectivity.

Designed To Have Exponential Impact

Most “strategies” content marketers present are just action plans that itemize tactics they will execute over a period of time to hit a goal.

Create content, distribute, convert people, measure results, repeat.

But think about how content marketing itself came to exist. It was all about leverage. Take SEO, for example. It was essentially a “free” way to get more people to visit your site without paying for ads. And for a while, it was an ROI multiplier, meaning the investment required to execute it was minuscule compared to its long-term impact. That’s a strategic ratio.

Now, SEO is a standard part of the B2B marketing modus operandi. The ratio is more incremental, so it’s no longer really a strategic activity; it’s a table-stakes tactic.

The opportunity for marketers now is to find a scalable, sustainable way to turn bespoke interactions between people at the company and members of the community, across multiple mediums, into ROI for the company. This means designing your strategy so that some activities serve more than one purpose or outcome, and building in “self-sustaining” elements (e.g., automations and workflows).

To read the full book, SEJ readers have an exclusive 25% discount code and free shipping to the US and UK. Use promo code “SEJ25” at koganpage.com.

Featured Image: Anton Vierietin/Shutterstock