How To Cultivate Brand Mentions For Higher AI Search Rankings via @sejournal, @martinibuster

Building brand awareness has long been an important but widely overlooked part of SEO. AI Search has brought this activity to the forefront. The following ideas should assist in forming a strategy for achieving brand name mentions at a ubiquitous scale, with the goal of achieving similar ubiquity in AI search results.

Tell People About The Site

SEOs and businesses can become overly concerned with getting links and forget that the more important thing to do is to get the word out about a website. A website must have unique qualities that will positively impress people and make them enthusiastic about the brand. If the site you’re trying to build traffic to lacks those unique qualities then building links or brand awareness can become a futile activity.

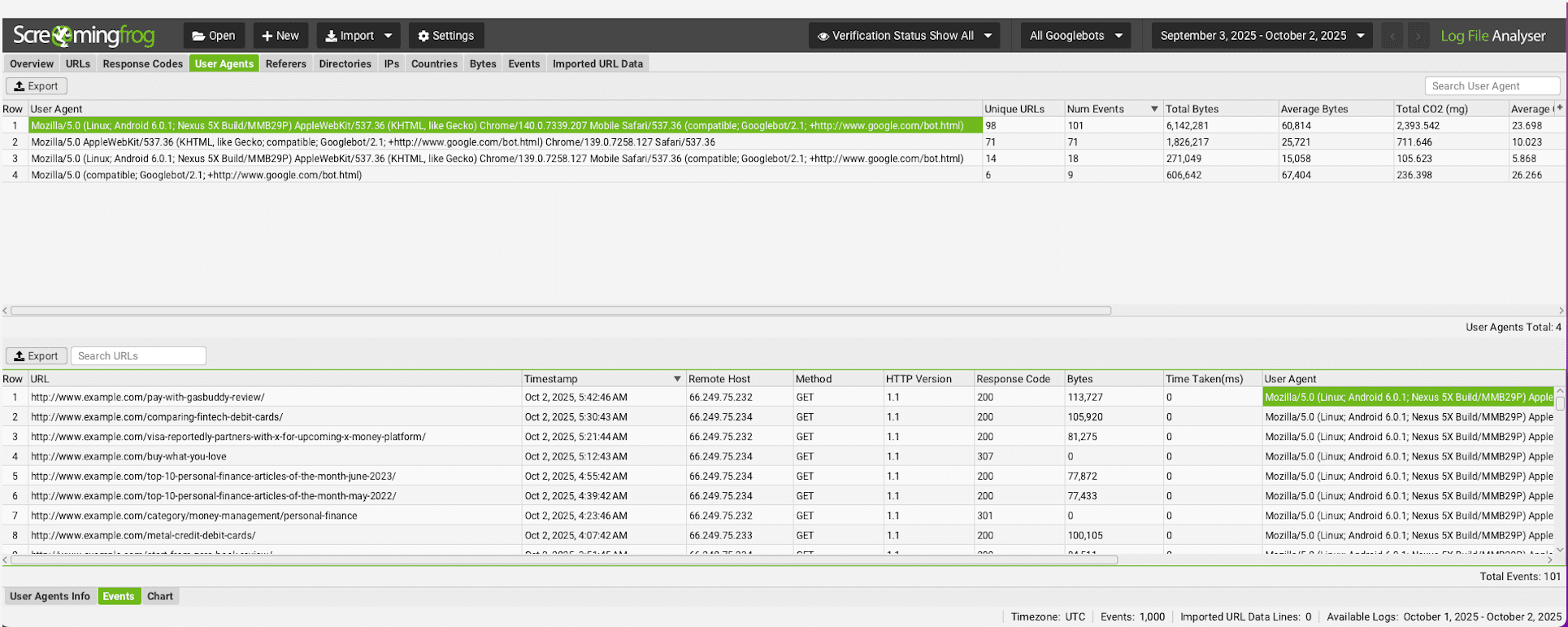

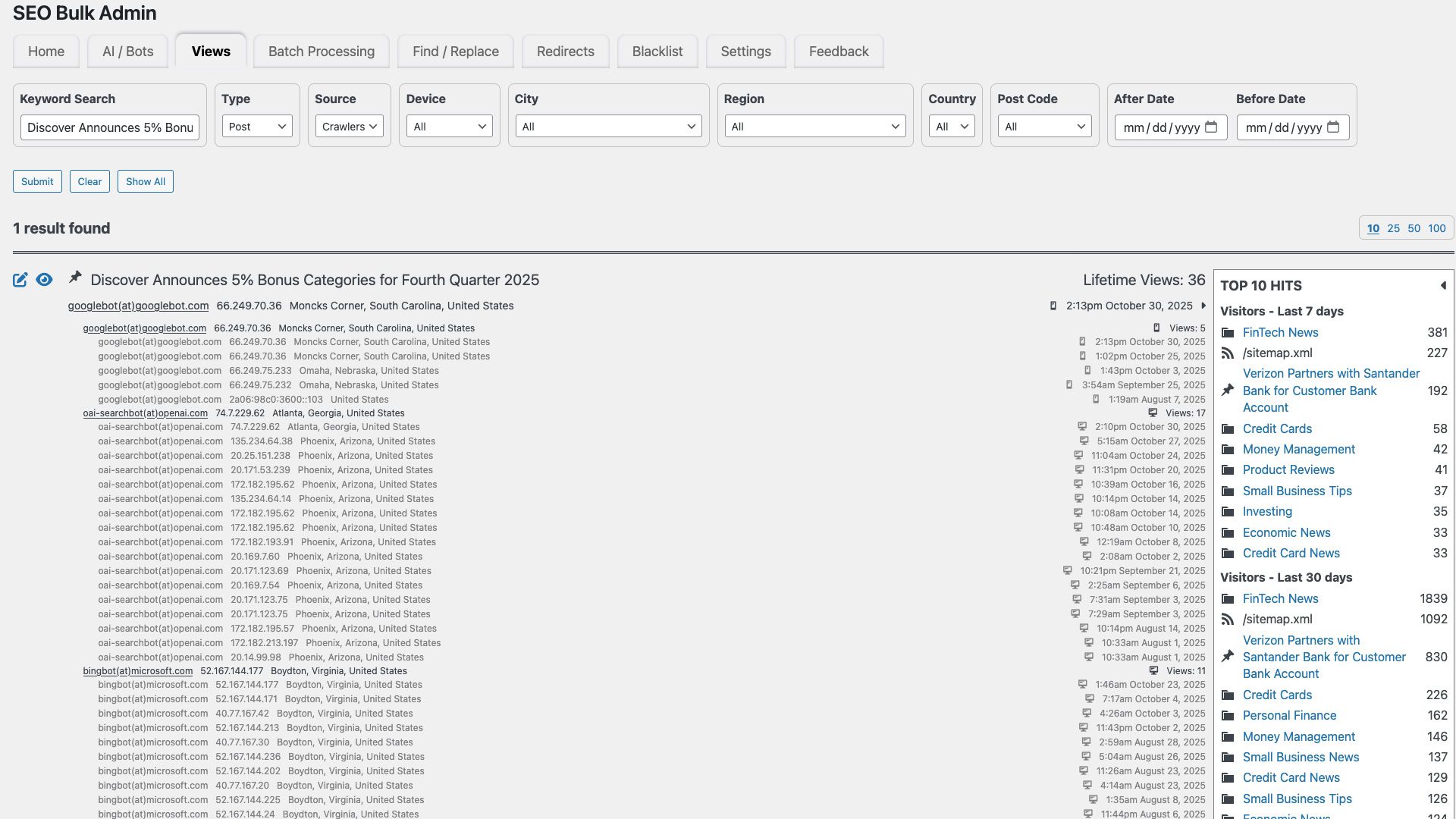

User behavior signals have been a part of Google’s algorithms since 2004, when the Navboost signals were kicking in, and the recent Google antitrust lawsuit shows that user behavior signals have continued to play a role. What has changed is that SEOs have noticed that AI search results tend to recommend sites that other sites recommend, in other words, sites with brand mentions.

The key to all of this has been to tell other sites about your site and make it clear to potential consumers or website visitors what makes your site special.

- So the first task is always to make a site special in every possible way.

- The second task is to tell others about the site in order to build word of mouth and top-of-mind brand presence.

Optimizing a website for users and cultivating awareness of that site are the building blocks of the external signals of authoritativeness, expertise, and popularity that Google is always talking about.

Downside of Backlink Searches

Everyone knows how to do a backlink search with third-party tools, but a lot of the data consists of garbage-y sites; that’s not the tool’s fault, it’s just the state of the Internet. In any case, a backlink search is limited: it doesn’t surface the conversations real people are having about a website.

In my experience, a better way to do it is to identify every instance where a site is linked from another site or discussed by another site.

Brand And Link Mentions

Some websites have bookmark and resource pages. These are low-hanging fruit.

Search for a competitor’s links:

example.com site:.com “bookmarks” -site:example.com

example.com site:.com “resources” -site:example.com

The “-site:example.com” removes the competitor site from the search results, leaving just the sites that might mention the competitor’s full URL, whether or not that mention is linked.

The TLD segmented variants are:

example.com site:.net "resources"

example.com site:.org "resources"

example.com site:.edu "resources"

example.com site:.ai "resources"

example.com site:.net "links"

example.com site:.org "links"

example.com site:.edu "links"

example.com site:.ai "links"

etc.
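Typing every combination by hand gets tedious, so the variants can be generated. The following is a minimal sketch, not a required tool: the function name `link_page_queries` and its default lists of TLDs and page terms are my own illustrative choices, and it adds the “-site:” exclusion from the first two searches to every variant.

```python
from itertools import product


def link_page_queries(domain,
                      tlds=("com", "net", "org", "edu", "ai"),
                      terms=("resources", "links", "bookmarks")):
    """Build one search query per (term, TLD) pair for pages that mention `domain`.

    The function name and default lists are hypothetical; adjust them to the niche.
    """
    queries = []
    for term, tld in product(terms, tlds):
        # Quoting the term asks for an exact match; -site: drops the domain's own pages.
        queries.append(f'{domain} site:.{tld} "{term}" -site:{domain}')
    return queries


# Print the full list so each query can be pasted into a search box.
for query in link_page_queries("example.com"):
    print(query)
```

Swap in a competitor’s domain for example.com and extend the `tlds` tuple with whatever TLDs are common in your niche.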

The goal is not necessarily to get links. It’s to build awareness of the site and build popularity.

Brand Mentions By Company Name

One way to identify brand mentions is to search by company name using the TLD segmentation technique. Making a broad search for a company’s name will only get you some of the brand mentions. Segmenting the search by TLD will reveal a wider range of sites.

Segmented Brand Mention Search

The following assumes that the competitor’s site is on the .com domain and you’re limiting the search to .com websites.

Competitor's Brand Name site:.com -site:example.com

Segmented Variants:

Competitor's Brand Name site:.org

Competitor's Brand Name site:.edu

Competitor's Brand Name site:reddit.com

Competitor's Brand Name site:.io

etc.
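The segmented brand searches can also be turned into clickable search URLs so that each segment opens in its own browser tab. This is a rough sketch under a few assumptions: the helper names are hypothetical, “Acme Widgets” is a placeholder brand, and the URL pattern is the standard Google search address with the query passed in the `q` parameter.

```python
from urllib.parse import urlencode


def brand_mention_queries(brand, brand_domain,
                          scopes=(".com", ".org", ".edu", "reddit.com", ".io")):
    """Return one brand-mention query per scope, excluding the brand's own site.

    A scope is either a TLD (".org") or a full domain ("reddit.com").
    """
    return [f"{brand} site:{scope} -site:{brand_domain}" for scope in scopes]


def to_search_url(query):
    """URL-encode a query for Google's search address (assumed URL pattern)."""
    return "https://www.google.com/search?" + urlencode({"q": query})


# "Acme Widgets" and example.com are placeholders for a real competitor.
for query in brand_mention_queries("Acme Widgets", "example.com"):
    print(to_search_url(query))
```

The URLs are meant for opening manually, one segment at a time, not for automated scraping.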

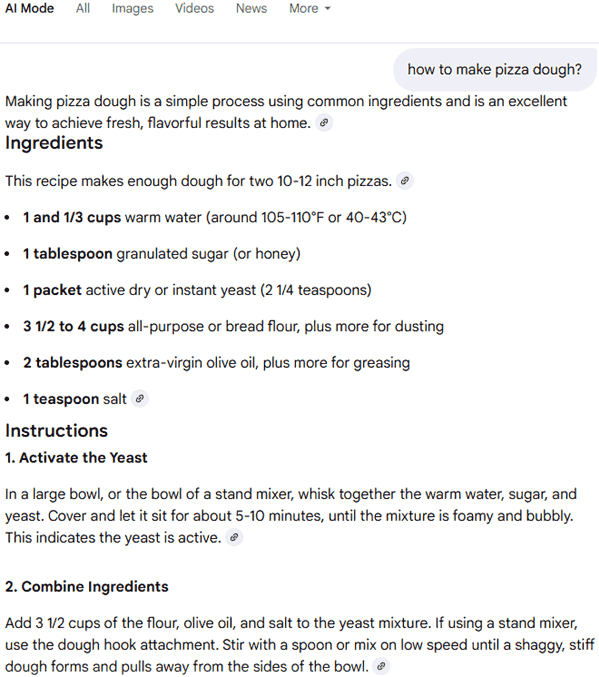

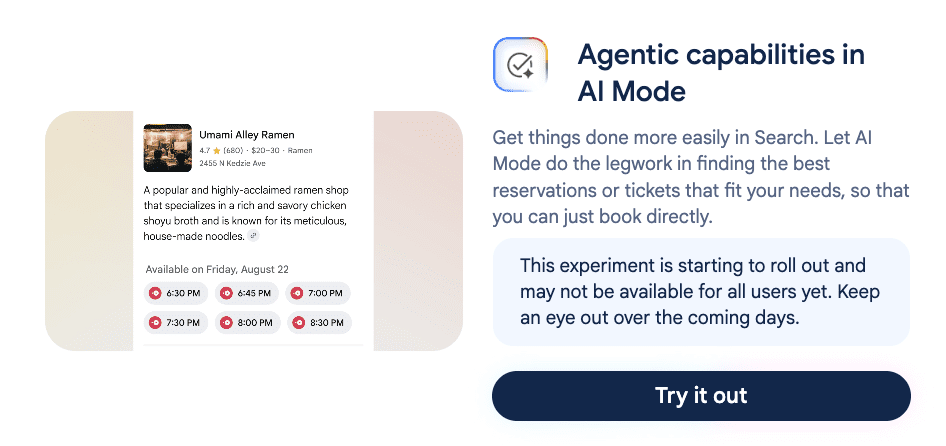

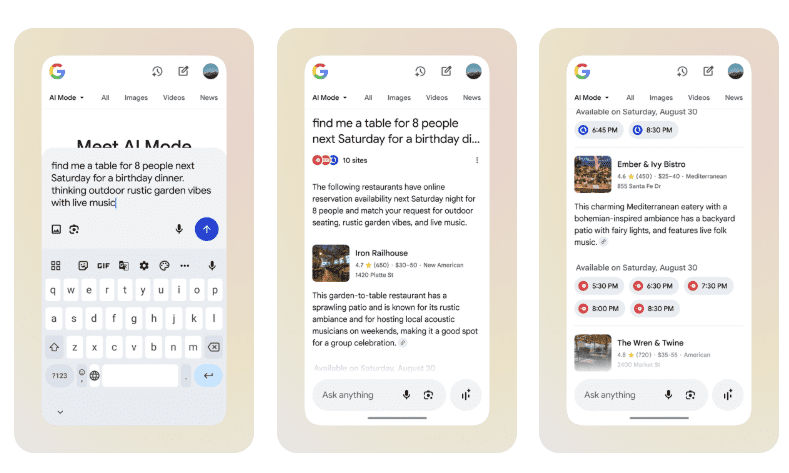

Sponsored Articles

Sponsored articles are indexed by search engines and ranked in AI search surfaces like AI Mode and ChatGPT. These can present opportunities to purchase a sponsored post that enables you to present your message with links that are nofollow and a prominent “sponsored post” disclaimer at the top of the web page – all in compliance with Google and FTC guidelines.

Brand Mentions: Authoritativeness Is Key

What some SEOs never learned is that authoritativeness is important, and quite likely millions of dollars have been wasted on paying for links from low-quality blogs and higher quality sites.

ChatGPT and AI Mode tend to recommend sites that are mentioned on high-quality, authoritative sites. Do not waste time or money paying for mentions on low-quality sites.

Some Ways To Search

Product/Service/Solution Search

Name Of Product Or Service Or Problem Needing Solving site:.com “sponsored article”

Name Of Product Or Service Or Problem Needing Solving site:.net “sponsored article”

Name Of Product Or Service Or Problem Needing Solving site:.org “sponsored article”

Name Of Product Or Service Or Problem Needing Solving site:.edu “sponsored article”

Name Of Product Or Service Or Problem Needing Solving site:.io “sponsored article”

etc.

Sponsored Post Variant

Name Of Product Or Service Or Problem Needing Solving site:.com “sponsored post”

Name Of Product Or Service Or Problem Needing Solving site:.net “sponsored post”

Name Of Product Or Service Or Problem Needing Solving site:.org “sponsored post”

Name Of Product Or Service Or Problem Needing Solving site:.edu “sponsored post”

Name Of Product Or Service Or Problem Needing Solving site:.io “sponsored post”

etc.

Key insight: Test whether “sponsored post” or “sponsored article” provides better results or just more results. Using quotation marks, or if necessary the verbatim search tool, stops Google from stemming the search terms and prevents it from showing a mix of both “post” and “article” results. By forcing Google to be specific, you’re forcing Google to show more search results.
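One way to run that test is to put the two phrasings side by side for each TLD. Here is a small sketch, assuming a hypothetical helper named `sponsored_query_pairs` and a placeholder topic; it only builds the query strings, and judging which phrasing returns the better sites is still a manual job.

```python
def sponsored_query_pairs(topic,
                          tlds=("com", "net", "org", "edu", "io"),
                          phrases=("sponsored post", "sponsored article")):
    """Map each TLD to its exact-phrase queries, keyed by phrase.

    Each phrase is wrapped in quotation marks so the two variants are not
    blended together by the search engine.
    """
    pairs = {}
    for tld in tlds:
        pairs[tld] = {phrase: f'{topic} site:.{tld} "{phrase}"' for phrase in phrases}
    return pairs


# "project management software" is a placeholder topic.
for tld, by_phrase in sponsored_query_pairs("project management software").items():
    print(f".{tld}")
    for phrase, query in by_phrase.items():
        print(f"  {phrase}: {query}")
```

Passing a competitor’s brand name as the `topic` produces the competitor variant of the same searches.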

Competitor Search

Competitor’s Brand Name site:.com “sponsored post”

Competitor’s Brand Name site:.net “sponsored post”

Competitor’s Brand Name site:.org “sponsored post”

Competitor’s Brand Name site:.edu “sponsored post”

Competitor’s Brand Name site:.io “sponsored post”

etc.

Pure Awareness Building With Zero Internet Presence

This method of getting the word out is pure gold, especially for B2B but also for professional businesses such as those in legal niches. There are organizations and associations that print magazines or send out newsletters to thousands, sometimes tens of thousands, of people who are an exact match for the people you want to build top-of-mind brand name recognition with.

Emails and magazines do not have links and that’s okay. The goal is to build name brand recognition with positive associations. What better way than getting interviewed in a newsletter or magazine? What better way than submitting an article to a newsletter or magazine?

Don’t Forget PDF Magazines

Not all magazines are print; many magazines are in the form of a PDF. For example, I subscribe to a surf fishing magazine that is entirely in a proprietary web format that can only be viewed by subscribers. If I were a fishing company, I would make an effort to meet some of the article authors, in addition to the publishers, at fishing industry conferences where they appear as presenters and in product booths.

This kind of outreach happens in person; it’s called relationship building.

Getting back to the industry organizations and associations, this is an entire topic in itself and I’ll follow up with another article, but many of the techniques covered in this guide will work with this kind of brand building.

Using the filetype search operator in combination with the TLD segmentation will yield some of these kinds of brand building opportunities.

[product/service/keyword/niche] filetype:pdf site:.com newsletter

[product/service/keyword/niche] filetype:pdf site:.org newsletter
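The filetype pattern can be generated for several TLDs and publication words at once. The sketch below is illustrative only: `pdf_newsletter_queries` is a made-up name, “surf fishing” stands in for your own keyword, and “magazine” is an extra word I added to the example call because it fits the PDF magazines discussed above.

```python
def pdf_newsletter_queries(keyword,
                           tlds=("com", "org"),
                           publication_words=("newsletter",)):
    """Combine the filetype:pdf operator with TLD segmentation for one keyword."""
    return [
        f"{keyword} filetype:pdf site:.{tld} {word}"
        for tld in tlds
        for word in publication_words
    ]


# "magazine" is an optional extra word, not part of the original two searches.
for query in pdf_newsletter_queries("surf fishing",
                                    publication_words=("newsletter", "magazine")):
    print(query)
```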

1. Segment the search for opportunities by TLD (.net/.com/.org/.us/.edu, etc.)

Segmenting by TLD will help you discover different kinds of brand building opportunities. Websites on a Dot Org domain often link to a site for different reasons than a Dot Com website. Dot Org domains represent article writing projects, free links on a links page, newsletter article opportunities, and charity link opportunities, just to name a few.

2. Consider Segmenting Dot Com Searches

The Dot Com TLD yields an overabundance of search results, not all of them useful. This makes it imperative to segment the results to find all available opportunities. Even if you’re

Ways to segment the Dot Com are by:

- A. Kinds of sites (blog/shopping related keywords/product or service keywords/forum/etc.)

This is pretty straightforward. If you’re looking for brand mentions be sure to add keywords to the searches that are directly relevant to what your business is about. If your site is about car injuries then sites about cars as well as specific makes, models, and kinds of automobiles are how you would segment a .com search.

- B. Context – Audience Relevance Not Keyword Match

Context of a sponsored article is important. This is not about whether the website content matches what your site, business, product, or service are about. What’s important is to identify if the audience reach is an exact match to the audience that will be interested in your product, business, or service.

- C. Quality And Authoritativeness

This is not about third-party metrics related to links. This is just about making a common sense judgment about whether a site where you want a mention is well-regarded by those who are likely to be interested in your brand. That’s it.

Takeaway

The thing I want you to walk away with is that it’s useful to just tell people about a site and to get as many people as possible aware of it. Identify opportunities for ways to get them to tell a friend. There is no better recommendation than the one you can get from a friend or from a trusted organization. This is the true source of authoritativeness and popularity.

Featured Image by Shutterstock/Bird stocker TH