Factors To Consider When Implementing Schema Markup At Scale via @sejournal, @marthavanberkel

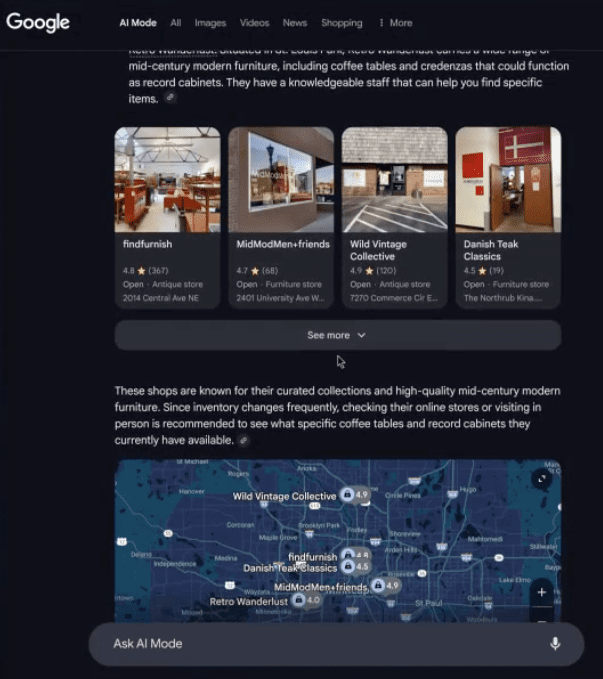

Organizations adopting schema markup at scale often see a boost in non-branded search queries, signaling broader topic authority and improved discoverability.

Schema markup has also become a strong answer to a pressing executive question: “What are we doing about generative AI?” One smart answer is, “We’re implementing schema markup.”

In March 2025, Fabrice Canel, principal program manager at Bing, confirmed that Microsoft uses structured data to support how its large language models (LLMs) interpret web content.

Just a day later, at Google’s Search Central Live event in New York, Google structured data engineer Ryan Levering shared that schema markup plays a critical role in grounding and scaling Google’s own generative AI systems.

“A lot of our systems run much better with structured data,” he noted, adding that “it’s computationally cheaper than extracting it.”

This is unsurprising, since schema markup, when done semantically, creates a knowledge graph: a structured framework for organizing information that connects concepts, entities, and their relationships.

A 2023 study by Data.world found that enterprise knowledge graphs improved LLM response accuracy by up to 300%, underscoring the value structured data brings to AI initiatives.

With Google continuing to dominate both search and AI – most recently launching Gemini 2.5 in March 2025, which topped the LMArena leaderboard – the intersection between structured data and AI is only growing more critical.

With that in mind, let’s explore the four key factors to consider when implementing schema markup at scale.

1. Establish Your Goal For Implementing Schema Markup

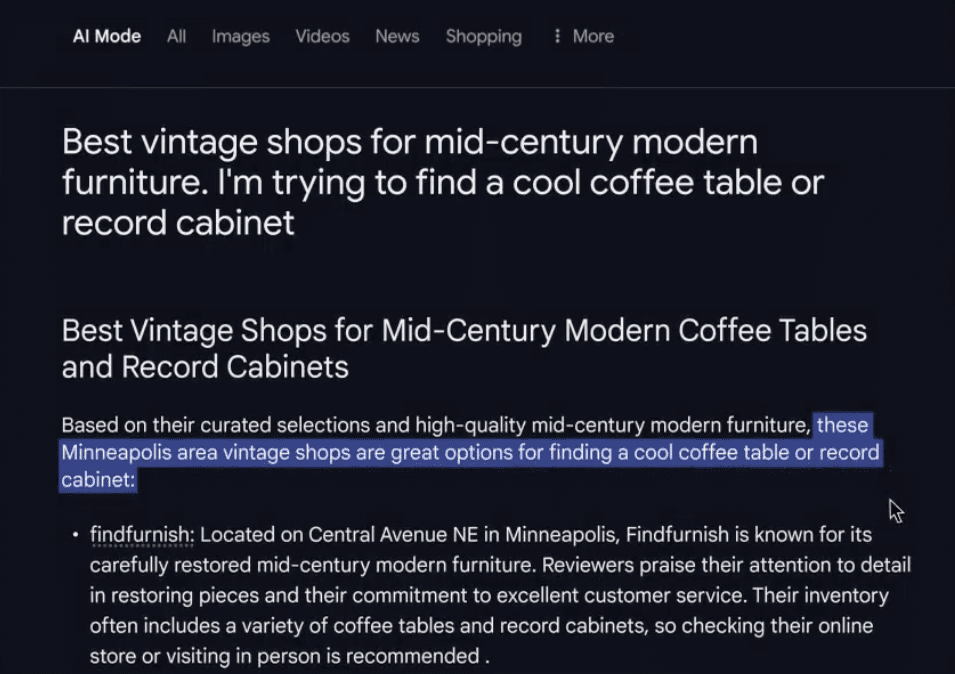

Before you invest in implementing schema markup at scale, let’s explore the business outcomes each level of implementation can deliver.

There are three different levels of schema markup complexity:

- Basic schema markup.

- Internal and external linked schema markup.

- Full representation of your content with a content knowledge graph.

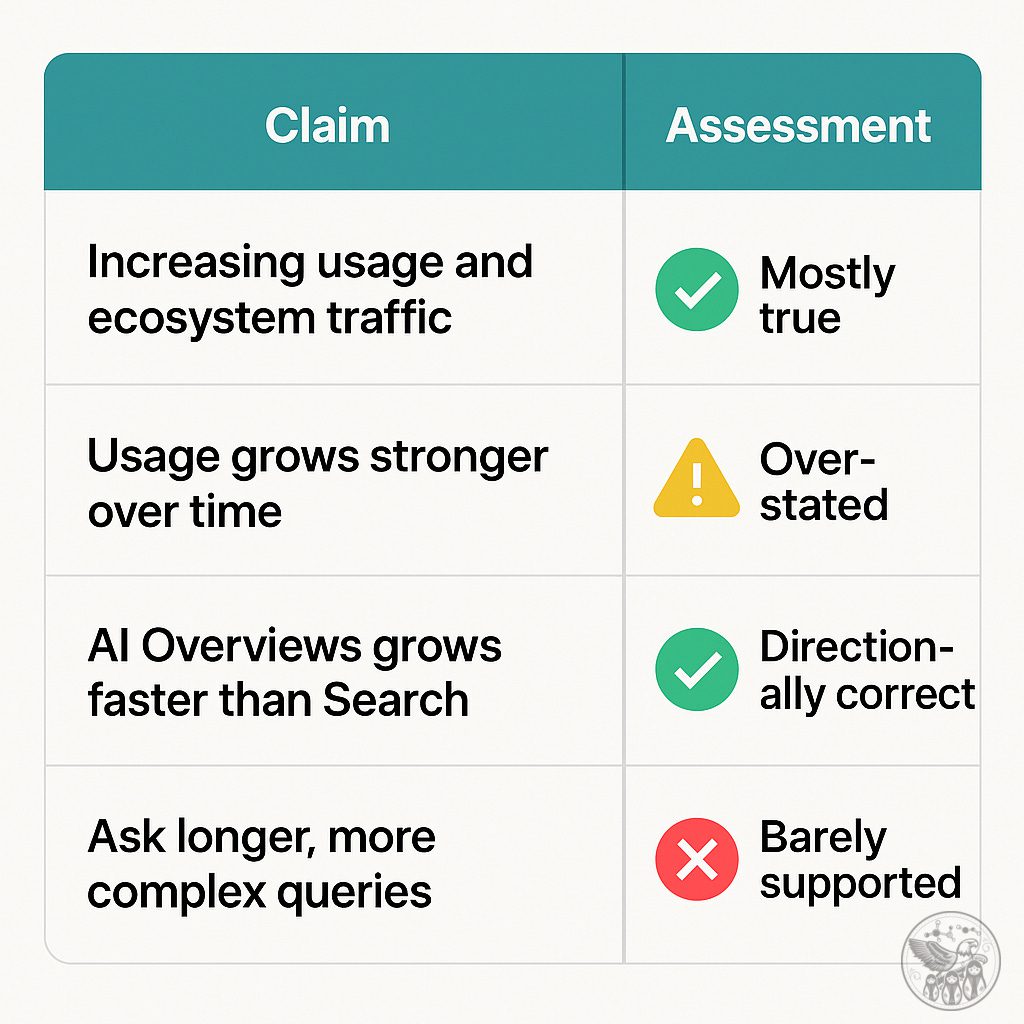

| Level Of Schema Markup | Outcome | Strategy |
|---|---|---|
| Basic schema markup | Rich results with higher click-through rates. | Implement schema markup for required properties. |
| Internal and external linked entities within schema markup | Increase in non-branded queries. Entities can be fully understood by AI and search engines. | Define key entities within the page and add them to your schema markup. Link entities within the website and to external knowledge bases for clarity. |
| Content knowledge graph: A full representation of your content as a content knowledge graph. | Content is fully understood in context. A reusable semantic data layer that enables accurate inferencing and supports LLMs. | Define all important elements of a page using the Schema.org vocabulary and elaborate entity linking to enable accurate extraction of facts about your brand. |

Basic Schema Markup

Basic schema markup is when you choose to optimize a page specifically to achieve a rich result.

You look up the minimum required properties in Google’s structured data documentation and add them to the markup on your page.

The benefits of basic schema markup come from being eligible for a rich result. Achieving this enhanced search result can help your page stand out on the search engine results page (SERP), and it typically yields a higher click-through rate.
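A minimal sketch of that check, assuming a Product page. The rule encoded here ("name" plus at least one of "offers", "review", or "aggregateRating") is modeled on Google’s product snippet requirements at the time of writing and should be confirmed against Google’s current documentation; the product itself is hypothetical.

```python
import json

# Assumed rules, modeled on Google's product snippet requirements at the time of
# writing. Confirm against Google's current documentation before relying on them.
ALWAYS_REQUIRED = ["name"]
AT_LEAST_ONE_OF = ["aggregateRating", "offers", "review"]


def missing_requirements(markup: dict) -> list:
    """List the gaps that would make this markup ineligible for the rich result."""
    gaps = [f"missing '{prop}'" for prop in ALWAYS_REQUIRED if prop not in markup]
    if not any(prop in markup for prop in AT_LEAST_ONE_OF):
        gaps.append("needs one of: " + ", ".join(AT_LEAST_ONE_OF))
    return gaps


product = {
    "@context": "https://schema.org",
    "@type": "Product",
    "name": "Women's Trail Shoe",  # hypothetical product
    "offers": {"@type": "Offer", "price": "89.00", "priceCurrency": "USD"},
}

gaps = missing_requirements(product)
if gaps:
    print("Not eligible:", "; ".join(gaps))
else:
    print(json.dumps(product, indent=2))
```

Eligibility is necessary but not sufficient: meeting the required properties makes a page a candidate for a rich result, not a guarantee of one.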

Internal And External Linked Entities Within The Schema Markup

Building on your basic schema markup, you can use the Schema.org vocabulary to clarify the entities on your website and how they connect with each other.

An entity refers to a single, unique, well-defined, and distinguishable thing or idea. Examples of an entity on your website include your organization, employees, products, services, blog articles, etc.

You can clarify a topic by linking an entity mentioned on your page to a corresponding external entity definition on Wikidata, Wikipedia, or Google’s knowledge graph.

This enables search engines to clearly understand the entity mentioned on your website, which results in measurable increases in non-branded queries related to that entity or topic.
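One common way to express that link is the Schema.org `sameAs` property on the mentioned entity. A minimal sketch, assuming a hypothetical page that mentions the author Douglas Adams (Wikidata item Q42); the page name is illustrative.

```python
import json


def linked_entity(entity_type: str, name: str, same_as: list) -> dict:
    """Build a Schema.org node whose sameAs URLs disambiguate the entity."""
    return {"@type": entity_type, "name": name, "sameAs": same_as}


page = {
    "@context": "https://schema.org",
    "@type": "WebPage",
    "name": "Our favorite science fiction authors",  # hypothetical page
    # "mentions" is a CreativeWork property, so it is available on WebPage.
    "mentions": [
        linked_entity(
            "Person",
            "Douglas Adams",
            [
                "https://www.wikidata.org/wiki/Q42",
                "https://en.wikipedia.org/wiki/Douglas_Adams",
            ],
        )
    ],
}

print(json.dumps(page, indent=2))
```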

You can also show how entities on your site are connected by using the appropriate Schema.org property to link an entity to the identifier of a related entity.

For example, if you had a page describing a product designed for women, you would use external entity linking to clarify that the product’s audience is women.

If the page also lists related products or services, your schema markup can point to where those related products and services are defined on your site.
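A minimal sketch of that product example, assuming a hypothetical site at example.com. The `audience` node links out to a Wikipedia definition, while `isRelatedTo` references related products by the `@id` each one is given on its own page; the slugs and the `product_id` helper are illustrative, not a standard convention.

```python
import json

SITE = "https://www.example.com"  # hypothetical domain


def product_id(slug: str) -> str:
    """Stable @id for a product node, defined once on the product's own page."""
    return f"{SITE}/products/{slug}#product"


def product_markup(slug: str, name: str, related_slugs: list) -> dict:
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "@id": product_id(slug),
        "name": name,
        # External link: clarifies who the product is for.
        "audience": {
            "@type": "Audience",
            "audienceType": "Women",
            "sameAs": "https://en.wikipedia.org/wiki/Woman",
        },
        # Internal links: point at related products by the @id used on their pages.
        "isRelatedTo": [{"@id": product_id(s)} for s in related_slugs],
    }


markup = product_markup("womens-trail-shoe", "Women's Trail Shoe", ["womens-rain-jacket"])
print(json.dumps(markup, indent=2))
```

Reusing the same `@id` everywhere a product is referenced is what lets the separate pages’ markup join up into one connected set of entities.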

When you do this, you provide a holistic and complete view of the content on your page.

With these internal and external entities fully defined, AI and search engines can understand and contextualize your entities accurately.

Full Representation Of Your Content As A Content Knowledge Graph

The final level of schema markup involves using Schema.org to define all page content. This creates a content knowledge graph, which is the most strategic use case of schema markup and has the greatest potential impact on the business.

The benefit of building a content knowledge graph lies in providing an accurate semantic data layer to both search engines and AI to fully understand your brand and the content on your website.

By defining the relationships between things on the website, you give search engines and AI systems what they need to return accurate, clear answers.

In addition to how search engines use this robust schema markup, internal AI initiatives can use it to accelerate training on your web data.
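To make the “graph” part concrete, here is a minimal sketch, assuming a hypothetical site whose markup is published as a single `@graph` whose nodes reference each other by `@id`. Flattening it into (subject, property, object) triples shows the connected structure other systems could consume. This is a simplification: it does not expand the `@context` the way a conforming JSON-LD processor would.

```python
SITE = "https://www.example.com"  # hypothetical domain

page_graph = {
    "@context": "https://schema.org",
    "@graph": [
        {"@id": f"{SITE}/#org", "@type": "Organization", "name": "Example Co"},
        {
            "@id": f"{SITE}/team/jane-doe#person",
            "@type": "Person",
            "name": "Jane Doe",
            "worksFor": {"@id": f"{SITE}/#org"},
        },
        {
            "@id": f"{SITE}/blog/trail-shoes#article",
            "@type": "Article",
            "headline": "How to pick a trail shoe",
            "author": {"@id": f"{SITE}/team/jane-doe#person"},
            "publisher": {"@id": f"{SITE}/#org"},
        },
    ],
}


def to_triples(doc: dict) -> list:
    """Flatten top-level @graph nodes into (subject, property, object) triples."""
    triples = []
    for node in doc["@graph"]:
        subject = node["@id"]
        for prop, value in node.items():
            if prop == "@id":
                continue
            for item in value if isinstance(value, list) else [value]:
                # {"@id": ...} references become edges; other values are kept as-is.
                obj = item["@id"] if isinstance(item, dict) and "@id" in item else item
                triples.append((subject, prop, obj))
    return triples


for triple in to_triples(page_graph):
    print(triple)
```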

Now that you have decided what kind of schema markup you need to achieve your business goals, let’s talk about the role cross-functional stakeholders play in helping you do schema markup at scale.

2. Cross-Departmental Collaboration And Buy-In

The SEO team often initiates schema markup work. They define the strategy, map Schema.org types to key pages, and validate the markup to make sure search engines can read and index it.

However, while SEO professionals may lead the charge, schema markup is not just an SEO task.

Successful schema markup implementation at scale requires alignment across multiple departments that can all derive business results from this strategy.

To maximize the value of your schema markup strategy, consider these key stakeholders before you get started:

Content Team

Whether it’s your core content team, lines of business, or a center of excellence, the teams who own the content on the website play a critical role.

Your schema markup is only as good as the content on the page. If you want to achieve a rich result and gain visibility for a specific entity, you need to ensure your page has the required content to make it eligible for this result.

Help your content team understand the value of structured data and how it helps them achieve their goals, so they’ll be motivated to make the content adjustments needed to support your schema markup strategy.

IT Team

No matter how you plan to implement schema markup, whether internally or through a vendor, your IT team’s buy-in is essential.

If you’re working with a vendor, IT will support setting up integrations and enforce security protocols. Their support is critical for enabling deployment while protecting your infrastructure.

If you’re managing schema markup in-house, IT will be responsible for the technical implementation, building advanced capabilities such as entity recognition, and ongoing maintenance.

Without their partnership, scaling and creating an agile, high-value schema markup strategy will be a challenge.

Either way, securing IT’s support early on ensures smoother implementation, stronger data governance, and long-term success.

Executive Team

Your executive leadership team ultimately determines where you should put your dollars to get the best return on investment (ROI).

They want to see the ROI and understand how this strategy helps the company prepare for AI and stay competitive in the market.

Clear reporting on the outcomes of your structured data efforts will help secure ongoing executive support.

Educating them on how schema markup can improve brand visibility, strengthen how AI search understands the brand, and accelerate internal AI initiatives can often help get them on board.

Innovation Team

As mentioned earlier, you can use schema markup to develop a semantic data layer, also known as a content knowledge graph.

This can be useful for your innovation or AI governance team as they could use this data layer to ground their LLMs and accelerate internal AI programs.

Your innovation team will want to understand this potential, especially if AI is a priority on the roadmap.

Pro tip: Communicate early and often. Sharing both the why and the wins will keep cross-functional teams aligned and invested as your schema markup strategy scales.

3. Capability Readiness For Doing Schema Markup At Scale

Now that you know what type of schema markup you want to implement at scale and have the cross-functional team aligned, there are some technical capabilities you need to consider.

When looking to do schema markup at scale, here are key capabilities required from either your IT team or vendor to achieve your desired outcomes.

Basic Schema Markup Capabilities

Implementing basic schema markup for rich results at scale requires two capabilities: mapping content to the properties required for a rich result, and integrating the resulting markup so it is present on page load, where Google can see it. The key factor that simplifies this process is having a well-templated website.

Your team or vendor can map the schema markup and required properties from Google to the appropriate content elements on the page and generate the JSON-LD using these mappings.
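A minimal sketch of that mapping step, assuming a hypothetical CMS whose article template exposes fields named `title`, `publish_date`, and `hero_image_url`. One mapping per template turns every page built on it into JSON-LD; empty fields are skipped rather than emitted as blank properties.

```python
import json

# Hypothetical mapping for one page template: Schema.org property -> CMS field name.
ARTICLE_TEMPLATE_MAP = {
    "headline": "title",
    "datePublished": "publish_date",
    "image": "hero_image_url",
}


def build_jsonld(schema_type: str, field_map: dict, page_fields: dict) -> dict:
    data = {"@context": "https://schema.org", "@type": schema_type}
    for prop, field in field_map.items():
        value = page_fields.get(field)
        if value:  # skip missing or empty fields
            data[prop] = value
    return data


def script_tag(data: dict) -> str:
    # Escape "<" so page content can never close the script element early.
    payload = json.dumps(data, ensure_ascii=False).replace("<", "\\u003c")
    return f'<script type="application/ld+json">{payload}</script>'


page = {"title": "How to pick a trail shoe", "publish_date": "2025-04-01", "hero_image_url": ""}
print(script_tag(build_jsonld("Article", ARTICLE_TEMPLATE_MAP, page)))
```

Rendering the tag server-side, as part of the template, is what keeps the markup present on page load rather than depending on client-side scripts.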

Internal And External Entity Linking Capabilities

If you want to do internal and external entity linking within your schema markup at scale, you require more complex capabilities to identify, define, and nest entities within your schema markup.

To identify your internal and external entities and nest them within your schema markup to showcase their relationships, your team or vendor will need the ability to do Named Entity Recognition (NER).

NER identifies the named entities in your text; paired with disambiguation, it also determines which specific thing each term refers to.

In addition to extracting proper nouns, you will want the technology to recognize your business terms, products, people, and events that may not yet be notable enough to warrant a Wikipedia page.
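A minimal sketch of recognizing company-specific terms. It swaps in a simple gazetteer (dictionary) lookup instead of a trained NER model, so it only finds terms that are listed; the terms and entity keys are hypothetical. Longer surface forms are tried first so “Acme TrailPro” is not also counted as a separate “TrailPro”.

```python
import re

# Hypothetical gazetteer: surface form -> canonical entity key.
GAZETTEER = {
    "Acme TrailPro": "product:trailpro",
    "TrailPro": "product:trailpro",
    "Summit Series": "event:summit-series",
    "Jane Doe": "person:jane-doe",
}


def recognize(text: str) -> list:
    """Return (matched text, entity key) pairs in reading order."""
    found = []  # (start, end, key)
    for surface in sorted(GAZETTEER, key=len, reverse=True):
        for m in re.finditer(r"\b" + re.escape(surface) + r"\b", text):
            # Skip spans that overlap a longer match found earlier.
            if not any(s < m.end() and m.start() < e for s, e, _ in found):
                found.append((m.start(), m.end(), GAZETTEER[surface]))
    return [(text[s:e], key) for s, e, key in sorted(found)]


print(recognize("Jane Doe unveiled the Acme TrailPro at the Summit Series."))
```

A production system would typically combine a statistical or neural NER model with a list like this one, so that new mentions are caught without someone adding every term by hand.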

Once the entity is identified, you will need the capability to look up its definition in a reference knowledge base. This is often done through the Wikidata API or the Google Knowledge Graph Search API.
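A minimal sketch of the Wikidata side of that lookup, using the MediaWiki `wbsearchentities` action. The User-Agent string is a placeholder, and the sample response in the test is hand-written and abbreviated to show the shape being parsed. Taking the top candidate blindly is naive; real disambiguation usually compares descriptions against the page’s context.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

WIKIDATA_API = "https://www.wikidata.org/w/api.php"
# Wikimedia asks API clients to identify themselves; this value is a placeholder.
USER_AGENT = "schema-entity-lookup-sketch/0.1 (contact: webmaster@example.com)"


def search_url(term: str, language: str = "en", limit: int = 5) -> str:
    params = {
        "action": "wbsearchentities",
        "search": term,
        "language": language,
        "format": "json",
        "limit": limit,
    }
    return f"{WIKIDATA_API}?{urlencode(params)}"


def candidates(response: dict) -> list:
    """Reduce a wbsearchentities response to (id, label, description, concept URI)."""
    return [
        (hit["id"], hit.get("label", ""), hit.get("description", ""), hit["concepturi"])
        for hit in response.get("search", [])
    ]


def lookup(term: str) -> list:
    """Query Wikidata live (requires network access)."""
    request = Request(search_url(term), headers={"User-Agent": USER_AGENT})
    with urlopen(request, timeout=10) as resp:
        return candidates(json.load(resp))
```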

Now that the entity is defined, you will need the capability to dynamically insert the entity with the appropriate relationship within your schema markup.
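A minimal sketch of that insertion step, assuming the relationship is expressed with Schema.org’s `about` (main topic) or `mentions` (secondary reference) properties. The helper skips an entity that is already attached under the same `@id`, so re-running it on a regenerated page does not pile up duplicates; the product entity is hypothetical.

```python
def add_entity(markup: dict, prop: str, entity: dict) -> dict:
    """Attach an entity node under `prop`, skipping duplicates by @id."""
    existing = markup.get(prop, [])
    if not isinstance(existing, list):
        existing = [existing]
    entity_id = entity.get("@id")
    duplicate = any(
        e == entity
        or (entity_id is not None and isinstance(e, dict) and e.get("@id") == entity_id)
        for e in existing
    )
    if not duplicate:
        existing = existing + [entity]
    markup[prop] = existing
    return markup


article = {"@context": "https://schema.org", "@type": "Article", "headline": "Meet the TrailPro"}
trailpro = {
    "@type": "Product",
    "@id": "https://www.example.com/products/trailpro#product",  # hypothetical
    "name": "Acme TrailPro",
}
add_entity(article, "about", trailpro)
add_entity(article, "about", trailpro)  # second call is a no-op
```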

To ensure accurate and complete entity identification and relationship mapping, you’ll also want human-in-the-loop controls so reviewers can fine-tune matches for your domain.
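One way those controls might look, as a minimal sketch: high-confidence matches are accepted automatically, low-confidence ones go to a review queue, and a reviewer’s earlier decisions override the matcher. The threshold, the `Match` structure, and all URIs below are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

AUTO_ACCEPT = 0.9  # hypothetical threshold; tune it against reviewed samples


@dataclass
class Match:
    surface: str      # the term as it appears on the page
    entity_uri: str   # candidate definition from the knowledge base
    score: float      # matcher confidence between 0 and 1


def triage(matches: List[Match], overrides: Dict[str, Optional[str]]) -> Tuple[List[Match], List[Match]]:
    """Split matches into (accepted, needs_review), applying reviewer overrides first."""
    accepted, needs_review = [], []
    for m in matches:
        if m.surface in overrides:
            uri = overrides[m.surface]
            if uri is not None:  # None records a reviewer's decision not to link the term
                accepted.append(Match(m.surface, uri, 1.0))
        elif m.score >= AUTO_ACCEPT:
            accepted.append(m)
        else:
            needs_review.append(m)
    return accepted, needs_review
```

In this design the override list is where domain knowledge accumulates: for instance, once a reviewer decides that “Summit” on your site means your event rather than a mountaintop, the matcher never gets to make that mistake again.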

Full Content Knowledge Graph Representation

For a full representation of your content knowledge graph, which can scale and update dynamically with your content, you will need to add further natural language processing capabilities.

Specifically, your vendor or IT will need to have the ability to identify the semantic relationship between entities in the text (relation extraction) and the ability to identify the concepts within sentences (semantic parsing).
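To illustrate what relation extraction produces, here is a toy sketch that swaps in a few hand-written phrasings for a trained model, mapping each phrasing to a Schema.org property. The capitalized-word “name” pattern is a crude stand-in for real entity spans, and semantic parsing is not shown.

```python
import re

# Crude "name" pattern: one or more capitalized words.
NAME = r"\b([A-Z][\w&]*(?: [A-Z][\w&]*)*)"
PATTERNS = [
    # (compiled pattern, Schema.org property, subject group, object group)
    (re.compile(NAME + r" founded " + NAME), "founder", 2, 1),
    (re.compile(NAME + r" works for " + NAME), "worksFor", 1, 2),
    (re.compile(NAME + r" is headquartered in " + NAME), "location", 1, 2),
]


def extract_relations(text: str) -> list:
    """Return (subject, property, object) triples found by the toy patterns."""
    triples = []
    for pattern, prop, subj, obj in PATTERNS:
        for m in pattern.finditer(text):
            triples.append((m.group(subj), prop, m.group(obj)))
    return triples


print(extract_relations("Jane Doe founded Acme Corp in 2010."))
```

Note the direction: “Jane Doe founded Acme Corp” becomes Acme Corp → founder → Jane Doe, because in Schema.org `founder` is a property of the organization.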

Alternatively, you can perform these three functions (NER, relation extraction, and semantic parsing) with a large language model.

LLMs can dramatically improve this functionality, with caveats that include high cost, lack of explainability, and hallucinations.
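A minimal sketch of one guardrail for the hallucination caveat, assuming the LLM has already returned candidate triples (the LLM call itself is not shown). It keeps a triple only if its property is on an allowlist and both its subject and object literally appear in the source text. This catches invented entities and made-up properties, but not a wrong relation between two entities that really are on the page. The allowlist is a hypothetical subset of Schema.org.

```python
# Hypothetical allowlist: a small subset of Schema.org properties.
ALLOWED_PROPERTIES = {"founder", "worksFor", "location", "brand", "audience"}


def grounded(triples: list, source_text: str) -> tuple:
    """Split triples into (kept, rejected) based on the allowlist and the source text."""
    text = source_text.lower()
    kept, rejected = [], []
    for s, p, o in triples:
        ok = p in ALLOWED_PROPERTIES and s.lower() in text and o.lower() in text
        (kept if ok else rejected).append((s, p, o))
    return kept, rejected


source = "Jane Doe founded Acme Corp in 2010."
llm_output = [
    ("Acme Corp", "founder", "Jane Doe"),
    ("Acme Corp", "founder", "John Smith"),    # entity not in the text
    ("Acme Corp", "foundingVibe", "Jane Doe"), # not an allowed property
]
kept, rejected = grounded(llm_output, source)
```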

Once the semantic schema markup is created, your IT team or vendor will store it in a database or knowledge graph and monitor the data to confirm it delivers the intended business outcomes.

Finally, depending on the business case, you’ll want the capability to re-use your knowledge graph, so ensure that your knowledge graph data is available to be queried by other tools and systems.
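A minimal sketch of storage plus reuse, using an SQLite triple table as a stand-in for a dedicated graph database or RDF triple store (which would typically expose a query language such as SPARQL). Each page’s triples are replaced whenever it is re-saved, so the store tracks current content, and any tool with SQLite access can query it. Table and column names are hypothetical.

```python
import sqlite3


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS triples ("
        " subject TEXT NOT NULL, predicate TEXT NOT NULL, object TEXT NOT NULL,"
        " source_url TEXT NOT NULL,"
        " UNIQUE (subject, predicate, object, source_url))"
    )
    return conn


def save(conn: sqlite3.Connection, source_url: str, triples: list) -> None:
    # Replace the page's previous triples in one transaction.
    with conn:
        conn.execute("DELETE FROM triples WHERE source_url = ?", (source_url,))
        conn.executemany(
            "INSERT OR IGNORE INTO triples VALUES (?, ?, ?, ?)",
            [(s, p, o, source_url) for s, p, o in triples],
        )


def objects(conn: sqlite3.Connection, subject: str, predicate: str) -> list:
    """Example query another system might run: all objects for a subject/property."""
    rows = conn.execute(
        "SELECT DISTINCT object FROM triples WHERE subject = ? AND predicate = ? ORDER BY object",
        (subject, predicate),
    )
    return [row[0] for row in rows]
```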

4. The Maintenance Factor

Schema markup isn’t a “set it and forget it” strategy.

Your website content is constantly evolving, especially in enterprise organizations, where different teams may be publishing new content daily.

To remain accurate and effective, your schema markup needs to be dynamic and stay up to date alongside any content changes.
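A minimal sketch of catching that drift, assuming you can read current values from the CMS or a product feed (that source is hypothetical here). Each dotted path names a property in the published markup; any mismatch signals that the markup for that page should be regenerated.

```python
def get_path(data, dotted: str):
    """Read a nested value such as "offers.price"; return None if it is absent."""
    for key in dotted.split("."):
        if not isinstance(data, dict) or key not in data:
            return None
        data = data[key]
    return data


def stale_properties(markup: dict, live_values: dict) -> dict:
    """Return {path: (value in markup, current value)} for every drifted property."""
    drift = {}
    for path, live in live_values.items():
        current = get_path(markup, path)
        if current != live:
            drift[path] = (current, live)
    return drift


published = {
    "@type": "Product",
    "name": "Women's Trail Shoe",
    "offers": {"@type": "Offer", "price": "89.00", "priceCurrency": "USD"},
}
# Hypothetical values read from the CMS after a price change.
live = {"name": "Women's Trail Shoe", "offers.price": "79.00", "offers.priceCurrency": "USD"}
print(stale_properties(published, live))
```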

Apart from your website, the broader search landscape is also rapidly shifting.

Between Google’s frequent updates and the growing influence of AI platforms that consume and interpret your content, your schema markup strategy needs to be agile and adaptable.

Consider having someone on your team focused on evolving your schema markup in alignment with business goals and desired outcomes.

Whether it’s an internal resource or a vendor partner, this individual should be adaptable and have a growth mindset.

They’ll measure the impact of your schema markup, as well as test and measure new strategies (like those mentioned above) to help you thrive in search and AI-driven experiences.

In this ever-changing search landscape, agility is key: the ability to iterate quickly is what keeps you ahead of your competitors.

Finally, don’t overlook the importance of ongoing monitoring.

Ensuring your markup remains valid and accurate across all key pages is where long-term value is realized.
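A minimal sketch of an automated syntax check you could run across key pages, using only Python’s standard library. It extracts every JSON-LD block from a page’s HTML and flags missing markup, invalid JSON, and nodes without an `@type`. Fetching the pages is not shown, and this checks syntax only; Google-specific eligibility still needs tools such as the Rich Results Test or Search Console reports.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect the raw text of every <script type="application/ld+json"> block."""

    def __init__(self):
        super().__init__()
        self.blocks = []
        self._in_jsonld = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.blocks.append("".join(self._buffer))
            self._in_jsonld = False


def audit(html: str) -> list:
    """Return a list of problems found in a page's JSON-LD (empty means none found)."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    problems = [] if parser.blocks else ["no JSON-LD found"]
    for i, raw in enumerate(parser.blocks):
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            problems.append(f"block {i}: invalid JSON ({exc.msg})")
            continue
        if isinstance(data, dict):
            nodes = data.get("@graph", [data])
        elif isinstance(data, list):
            nodes = data
        else:
            problems.append(f"block {i}: expected an object or array")
            continue
        for node in nodes:
            if isinstance(node, dict) and "@type" not in node:
                problems.append(f"block {i}: node without @type")
    return problems
```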

Many organizations forget this step, but it’s often where the biggest gains in performance and visibility happen.

Schema Markup Is A Business Growth Lever

Schema markup is not just an SEO tactic to achieve rich results. It’s a business growth lever that can drive discoverability, support AI readiness, and fuel long-term business growth.

Depending on the business outcome your organization is targeting – whether it’s improved search visibility, AI initiatives, deeper content intelligence, or all of the above – different factors will take priority.

That’s why CMOs and digital leaders must treat structured data as a core component of their marketing and digital transformation strategy and carefully consider how they will scale it for the best outcomes.


Featured Image: Just Life/Shutterstock