Internal WordPress Conflict Spills Out Into The Open via @sejournal, @martinibuster

An internal dispute within the WordPress core contributor team spilled into the open, causing major confusion among people outside the organization. The friction began with a post from more than a week ago and culminated in a remarkable outburst, exposing latent tensions within the core contributor community.

Mary Hubbard Announcement Triggers Conflict

The incident seemingly began with a September 15 announcement by Mary Hubbard, the Executive Director of WordPress. She announced a new Core Program Team meant to improve coordination and collaboration among Core contributor teams. But the announcement was only the trigger for the conflict, which was part of longer-running friction.

Hubbard explained the role of the new team:

“The goal of this team is to strengthen coordination across Core, improve efficiency, and make contribution easier. It will focus on documenting practices, surfacing roadmaps, and supporting new teams with clear processes.

The Core Program Team will not set product direction. Each Core team remains autonomous. The Program Team’s role is to listen, connect, and reduce friction so contributors can collaborate more smoothly.”

That announcement drew the following response from documentation team member Jenni McKinnon, a comment that was eventually removed:

“For the public record: This Core Program Team announcement was published during an active legal and procedural review that directly affects the structural governance of this project.

I am not only subject to this review—I am one of the appointed officials overseeing it under my legal duty as a recognized lead within SSRO (Strategic Social Resilience Operations). This is a formal governance, safety, and accountability protocol—bound by national and international law—not internal opinion.

Effective immediately:

• This post and the program it outlines are to be paused in full.

• No action is to be taken under the name of this Core Program Team until the review concludes and clearance is formally issued.

• Mary Hubbard holds no valid authority in this matter. Any influence, instruction, or decision traced to her is procedurally invalid and is now part of a legal evidentiary record.

• Direction, oversight, and all official governance relating to this matter is held by SSRO, myself, and verified leadership under secured protocol.

This directive exists to protect the integrity of WordPress contributors, prevent governance sabotage, and ensure future decisions are legally and ethically sound.

Further updates will be provided only through secured channels or when review concludes. Thank you for respecting this freeze and honoring the laws and values that underpin open source.”

The post was followed by astonishment and questions in various Slack and Facebook WordPress groups. The roots of the friction lie in events from a week ago centered on documentation team participation.

Documentation Team Participation

A September 10 post by documentation team member Estela Rueda informed the Core contributor community that the WordPress 6.9 release squad is experimenting with a smaller team that excludes documentation leads, with only a temporary “Docs Liaison” in place. Her post explained why this exclusion is a problem, detailed the importance of documentation in the release cycle, and urged that a formal documentation lead role be reinstated in future releases.

Estela Rueda wrote (in the September 10 post):

“The release team does not include representation from the documentation team. Why is this a problem? Because often documentation gets overlooked in release planning and project-wide coordination: Documentation is not a “nice-to-have,” it is a survival requirement. It’s not something we might do if someone has time; it’s something we must do — or the whole thing breaks down at scale. Removing the role from the release squad, we are not just sending the message that documentation is not important, we are showing new contributors that working on docs will never get them to the top of the credits page, therefore showing that we don’t even appreciate contributing to the Docs.”

Jenni McKinnon, who is a member of the docs team, responded with her opinions:

“This approach isn’t in line with genuine open-source values — it’s exclusionary and risks reinforcing harmful, cult-like behaviors.

By removing the Docs Team from the release squad under the guise of “reducing overhead,” this message sends a stark signal: documentation is not essential. That’s not just unfair — it actively erodes the foundations of transparency, contributor morale, and equitable participation.”

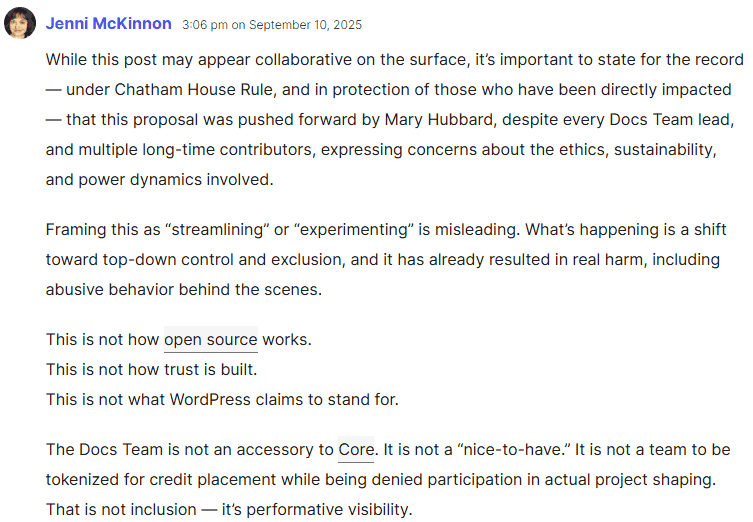

She added further comments, culminating in the post below that accused WordPress Executive Director Mary Hubbard of being behind a shift toward “top-down” control:

“While this post may appear collaborative on the surface, it’s important to state for the record — under Chatham House Rule, and in protection of those who have been directly impacted — that this proposal was pushed forward by Mary Hubbard, despite every Docs Team lead, and multiple long-time contributors, expressing concerns about the ethics, sustainability, and power dynamics involved.

Framing this as ‘streamlining’ or ‘experimenting’ is misleading. What’s happening is a shift toward top-down control and exclusion, and it has already resulted in real harm, including abusive behavior behind the scenes.”

Screenshot Of September 10 Comment

Documentation Team Member Asked To Step Away

Today’s issue appears to have been triggered by a post from earlier today announcing that Jenni McKinnon was asked to “step away.”

Milana Cap wrote a post today titled “The stepping away of a team member,” which explained why McKinnon was asked to step away:

“The Documentation team’s leadership has asked Jenni McKinnon to step away from the team.

Recent changes in the structure of the WordPress release squad started a discussion about the role of the Documentation team in documenting the release. While the team was working with the Core team, the release squad, and Mary Hubbard to find a solution for this and future releases, Jenni posted comments that were out of alignment with the team, including calls for broad changes across the project and requests to remove certain members from leadership roles.

This ran counter to the Documentation team’s intentions. Docs leadership reached out privately in an effort to de-escalate the situation and asked Jenni to stop posting such comments, but this behaviour did not stop. As a result, the team has decided to ask her to step away for a period of time to reassess her involvement. We will work with her to explore rejoining the team in the future, if it aligns with the best outcomes for both her and the team.”

And that post may have been what precipitated today’s blow-up in the comments section of Mary Hubbard’s post.

Zooming Out: The Big Picture

What happened today is an isolated incident. But some in the WordPress community have confided that, in their view, WordPress core’s technical debt has grown, and they have expressed concern that the big picture is being ignored. Separately, in comments on her September 10 post (Docs team participation in WordPress releases), Estela Rueda alluded to the issue of burnout among WordPress contributors:

“…the number of contributors increases in waves depending on the releases or any special projects we may have going. The ones that stay longer, we often feel burned out and have to take breaks.”

Taken together, today’s friction gives outsiders the impression that cracks are starting to show in the WordPress project.