Patchstack published a case study that examined how well Cloudflare and other general firewall and malware solutions protected WordPress websites from common vulnerability threats and attack vectors. The research showed that while general solutions stopped threats like SQL injection or cross-site scripting, a dedicated WordPress security solution consistently stopped WordPress-specific exploits at a significantly higher rate.

WordPress Vulnerabilities

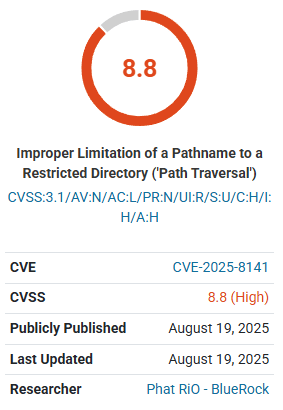

Due to the popularity of the WordPress platform, WordPress plugins and themes are a common focus for hackers, and vulnerabilities can quickly be exploited in the wild. Once proof-of-concept code is public, attackers often act within hours, leaving website owners little time to react.

This is why it is critical to know what security a web host provides and how effective those solutions are in a WordPress environment.

Methodology

Patchstack explained their methodology:

“As a baseline, we have decided to host “honeypot” sites (sites against which we will perform controlled pentesting with a set of 11 WordPress-specific vulnerabilities) with 5 distinct hosting providers, some of which have ingrained features presuming to help with blocking WordPress vulnerabilities and/or overall security.

In addition to the hosting provider’s security measures and third-party providers for additional measures like robust WAFs or other patching providers, we have also installed Patchstack on every site, with our test question being:

- How many of these threats will bypass firewalls and other patching providers to ultimately reach Patchstack?

- And will Patchstack be able to block them all successfully?”

Testing Process

Each website was set up the same way, with identical plugins, versions, and settings. Patchstack used an “exploitation testing toolkit” to run the same exploit tests in the same order on every site. Results were checked automatically and by hand to see whether attacks were stopped, and whether the block came from the host’s defenses or from Patchstack.
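The process described above can be sketched in a few lines of Python. This is only an illustration of the idea (same exploits, same order, every site, with each block attributed to a layer). Patchstack’s toolkit is not shown in the case study summary, so the `Site` class, the outcome labels, and the exploit IDs below are all hypothetical, and the “sites” are simulated with made-up data rather than real requests.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical outcome labels, invented for this sketch.
BLOCKED_BY_HOST = "blocked_by_host"
BLOCKED_BY_PATCHSTACK = "blocked_by_patchstack"
NOT_BLOCKED = "not_blocked"


@dataclass(frozen=True)
class Site:
    name: str
    host_blocks: frozenset  # exploit IDs stopped at the network/server layer
    app_blocks: frozenset   # exploit IDs stopped at the application layer


def classify(site: Site, exploit_id: str) -> str:
    """Attribute a block to the first layer that stops the exploit."""
    if exploit_id in site.host_blocks:
        return BLOCKED_BY_HOST
    if exploit_id in site.app_blocks:
        return BLOCKED_BY_PATCHSTACK
    return NOT_BLOCKED


def run_suite(sites: List[Site], exploits: List[str]) -> Dict[str, List[Tuple[str, str]]]:
    """Run every exploit, in the same order, against every site."""
    return {site.name: [(e, classify(site, e)) for e in exploits] for site in sites}


# Made-up data for illustration only; not the study's results.
exploits = ["vuln-01", "vuln-02", "vuln-03"]
sites = [
    Site("host-a", frozenset({"vuln-01"}), frozenset(exploits)),
    Site("host-b", frozenset(), frozenset(exploits)),
]
results = run_suite(sites, exploits)
for name, outcomes in results.items():
    print(name, outcomes)
```

Keeping the exploit list and its order fixed across sites is what makes the per-host numbers comparable.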

General Overview: Hosting Providers Versus Vulnerabilities

The Patchstack case study tested five different configurations of security defenses, plus Patchstack.

1. Hosting Provider A + Cloudflare WAF

2. Hosting Provider B + Firewall + Monarx Server and Website Security

3. Hosting Provider C + Firewall + Imunify Web Server Security

4. Hosting Provider D + ConfigServer Firewall

5. Hosting Provider E + Firewall

The testing showed that the various hosting infrastructure defenses failed to protect against the majority of WordPress-specific threats, catching only 12.2% of the exploits. Patchstack caught 100% of the exploits.

Patchstack shared:

“2 out of the 5 hosts and their solutions failed to block any vulnerabilities at the network and server levels.

1 host blocked 1 vulnerability out of 11.

1 host blocked 2 vulnerabilities out of 11.

1 host blocked 4 vulnerabilities out of 11.”
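For readers who want to check the arithmetic, the per-host counts in the quote can be tallied directly. The short Python sketch below assumes each of the five hosts faced the same 11 exploits, for 55 attempts in total. On that assumption the quoted counts come to 7 of 55, or about 12.7%, which is close to but not identical to the 12.2% figure reported in the study, so Patchstack may have counted on a slightly different basis.

```python
# Per-host block counts as quoted by Patchstack: two hosts blocked 0,
# and the others blocked 1, 2, and 4 of the 11 exploits.
TESTS_PER_HOST = 11
blocked_per_host = [0, 0, 1, 2, 4]


def block_rate(blocked, tests_per_host):
    """Share of all exploit attempts stopped by host-level defenses."""
    total_attempts = len(blocked) * tests_per_host
    return sum(blocked) / total_attempts


rate = block_rate(blocked_per_host, TESTS_PER_HOST)
total = len(blocked_per_host) * TESTS_PER_HOST
print(f"{sum(blocked_per_host)} of {total} blocked ({rate:.1%})")
# prints: 7 of 55 blocked (12.7%)
```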

Cloudflare And Other Solutions Failed

Solutions like Cloudflare WAF or bundled services such as Monarx or Imunify failed to consistently address WordPress-specific vulnerabilities.

Cloudflare’s WAF stopped 4 of 11 exploits, Monarx blocked none, and Imunify did not prevent any WordPress-specific exploits. Firewalls such as ConfigServer, which are widely used in shared hosting environments, also failed every test.

These results show that while those kinds of products work reasonably well against broad attack types, they are not tuned to the specific security issues common to WordPress plugins and themes.

Patchstack is built specifically to stop WordPress plugin and theme vulnerabilities in real time. Instead of relying on static signatures or generic rules, it applies targeted mitigation through virtual patches as soon as vulnerabilities are disclosed, before attackers can act.

A virtual patch is a mitigation for a specific WordPress vulnerability. It protects users while the plugin or theme developer works on a patch for the flaw. This approach addresses WordPress flaws in a way hosting companies and generic tools can’t: these flaws rarely match generic attack patterns, so they slip past traditional defenses and expose publishers to privilege escalation, authentication bypasses, and site takeovers.
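To make the distinction concrete, here is a minimal Python sketch of what a targeted rule might look like, as opposed to a generic pattern match. It is not Patchstack’s rule format or implementation. The `VirtualPatch` class, the plugin name `example-forms`, and the `example_forms_export` action are all hypothetical. The only real WordPress detail is that plugins commonly expose functionality through `/wp-admin/admin-ajax.php` with an `action` parameter.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Version = Tuple[int, ...]


@dataclass(frozen=True)
class Request:
    path: str
    params: Dict[str, str]
    authenticated: bool


@dataclass(frozen=True)
class VirtualPatch:
    """Hypothetical rule tied to one plugin flaw, not a generic signature."""
    plugin: str
    fixed_in: Version  # first plugin version that contains the real fix
    path: str
    action: str

    def applies(self, installed: Dict[str, Version]) -> bool:
        # Only relevant while a vulnerable version is installed.
        version = installed.get(self.plugin)
        return version is not None and version < self.fixed_in

    def blocks(self, request: Request) -> bool:
        # Block unauthenticated calls to the one vulnerable action.
        return (
            request.path == self.path
            and request.params.get("action") == self.action
            and not request.authenticated
        )


def should_block(request: Request, patches: List[VirtualPatch],
                 installed: Dict[str, Version]) -> bool:
    return any(p.applies(installed) and p.blocks(request) for p in patches)


# Made-up example: a missing-authorization flaw in a fictional plugin.
patch = VirtualPatch("example-forms", (1, 2, 3),
                     "/wp-admin/admin-ajax.php", "example_forms_export")
installed = {"example-forms": (1, 2, 0)}
attack = Request("/wp-admin/admin-ajax.php",
                 {"action": "example_forms_export"}, authenticated=False)
print(should_block(attack, [patch], installed))
```

The request in this example contains nothing that looks like SQL injection or cross-site scripting, which is why a generic signature would have little to match on. The rule works only because it knows which plugin, version, and action are affected.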

Takeaways

- Standard hosting defenses fail against most WordPress plugin vulnerabilities (87.8% bypass rate).

- Many providers claiming “virtual patching” (like Monarx and Imunify) did not stop WordPress-specific exploits.

- Generic firewalls and WAFs caught some broad attacks (SQLi, XSS) but not WordPress-specific flaws tied to plugins and themes.

- Patchstack consistently blocked vulnerabilities in real time, filling the gap left by network and server defenses.

- WordPress’s plugin-heavy ecosystem makes it an especially attractive target for attackers, so effective vulnerability protection is essential.

The case study by Patchstack shows that traditional hosting defenses and generic “virtual patching” solutions leave WordPress sites vulnerable, with nearly 88% of attacks bypassing firewalls and server-layer protections.

While providers like Cloudflare blocked some broad exploits, plugin-specific threats such as privilege escalation and authentication bypasses slipped through.

Patchstack was the only solution to consistently block these attacks in real time, giving site owners a dependable way to protect WordPress sites against the types of vulnerabilities that are most often targeted by attackers.

According to Patchstack:

“Don’t rely on generic defenses for WordPress. Patchstack is built to detect and block these threats in real-time, applying mitigation rules before attackers can exploit them.”

Read the results of the case study by Patchstack here.

Featured Image by Shutterstock/tavizta