Google’s Updated Raters Guidelines Refine Concept Of Low Quality via @sejournal, @martinibuster

Google’s Search Quality Rater Guidelines were updated a few months ago, and several of the changes closely track the talking points shared by Googlers at the 2025 Search Central Live events. Among the most consequential updates are those to the sections defining the lowest quality pages, which more clearly reflect the kinds of sites Google wants to exclude from the search results.

Section 4.0 Lowest Quality Pages

Google added a new sentence to the definition of the Lowest rating in the Lowest Quality Pages section. Google has always been concerned with removing low quality sites from the search results, but this change to its rater guidelines likely reflects an emphasis on weeding out a specific kind of low quality website.

The new guideline focuses on identifying the publisher’s motives for publishing the content.

The previous definition said:

“The Lowest rating is required if the page has a harmful purpose, or if it is designed to deceive people about its true purpose or who is responsible for the content on the page.”

The new version keeps that sentence but adds another that encourages the quality rater to consider the underlying motives of the publisher responsible for the web page. The aim of this guidance is to have quality raters consider how the page benefits a site visitor and judge whether the page exists solely to benefit the publisher.

The addition to this section reads:

“The Lowest rating is required if the page is created to benefit the owner of the website (e.g. to make money) with very little or no attempt to benefit website visitors or otherwise serve a beneficial purpose.”

There’s nothing wrong with being motivated to earn an income from a website. What Google is looking at is whether the content serves only that purpose or also benefits the user.

Focus On Effort

The next change focuses on identifying how much effort went into creating the site. This doesn’t mean that publishers must now document how much time and effort was put into creating the content. This section is simply about looking for evidence that the content is indistinguishable from content on other sites and offers no clear advantage over the content found elsewhere on the Internet.

This part about the main content (MC) was essentially rewritten:

“● The MC is copied, auto-generated, or otherwise created without adequate effort.”

The new version has more nuance about the main content (MC):

“● The MC is created with little to no effort, has little to no originality and the MC adds no value compared to similar pages on the web”

Three things to unpack there:

- Content created with little to no effort

- Contains little to no originality

- Main content adds no additional value

Publishers who focus on keeping up with competitors should be careful that they’re not simply creating the same thing as their competitors. Arguing that it’s different because it covers the same topic, only better, doesn’t change the fact that it’s the same thing. Even if the content is “ten times better,” it’s still basically the same thing as the competitor’s content, only ten times more of it.

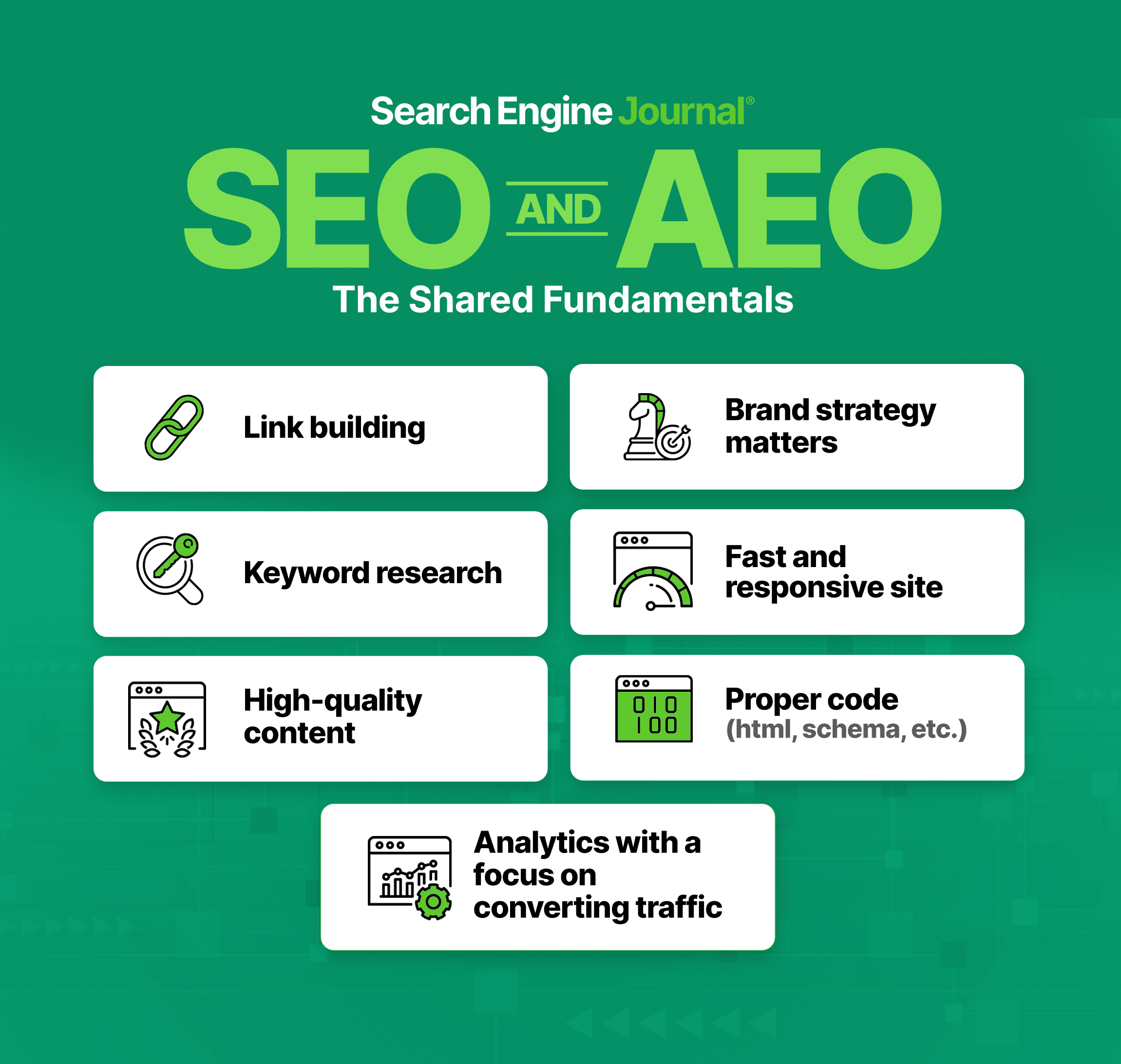

A Word About Content Gap Analysis

Some people are going to lose their minds over what I’m about to say, but keep an open mind.

There is a popular SEO process called Content Gap Analysis. It involves reviewing competitors to identify topics they write about that are missing from the client’s site, then copying those topics to fill the content gap.

That is precisely the kind of thing that leads to unoriginal content that is indistinguishable from everything else on the Internet. It’s the number one reason I would never use a software program that scrapes top-ranked sites and suggests topics based on what competitors are publishing. It results in virtually indistinguishable content and pure unoriginality.

Who wants to jump from one site to another and read the exact same recipes, even if they have more images, graphs, and videos? Copying a competitor’s content “but doing it better” is not original.

Scraping Google’s People Also Ask (PAA) questions just like everyone else does not result in original content. It results in content that’s exactly the same as what everyone else scraping PAAs is producing.

Although content gap analysis aims to cover the same thing, only better, the result is still unoriginal. Saying it’s better doesn’t change the fact that it’s the same thing.

Lack of originality is a huge issue with Internet content, and it’s something that Google’s Danny Sullivan discussed extensively at the recent Google Search Central Live in New York City.

Instead of looking for information gaps, it’s better to review your competitors’ weaknesses. Then look at their strengths. Then compare those to your own weaknesses and strengths.

A competitor’s weakness can become your strength. This is especially valuable information when competing against a bigger and more powerful competitor.

Takeaways

1. Google’s Emphasis on Motive-Based Quality Judgments

- Quality raters are now encouraged to judge not just content, but the intent behind it.

- Pages created purely for monetization, with no benefit to users, should be rated lowest.

- This may signal Google’s intent to refine its ability to weed out low quality content based on the user experience.

2. Effort and Originality Are Now Central Quality Signals

- Low-effort or unoriginal content is explicitly called out as justification for the lowest rating.

- This may signal that Google’s algorithms will increasingly focus on surfacing content with higher levels of originality.

- Content that doesn’t add distinctive value over competitors may struggle in the search results.

3. Google’s Raters Guidelines Reflect Public Messaging

- Changes to the Guidelines mirror talking points from recent Search Central Live events.

- This suggests that Google’s algorithms may become more precise at assessing originality, added value, and the effort put into creating the content.

- In my opinion, this means publishers should consider ways to make their sites more original than other sites, competing through differentiation.

Google updated its Quality Rater Guidelines to draw a sharper line between content that helps users and content that only helps publishers. Pages created with little effort, no originality, or no user benefit are now listed as examples of the lowest quality, even if they seem more complete than competing pages.

Google’s Danny Sullivan pointed to travel sites as an area where sites become indistinguishable from each other, since so many share the same sidebar introducing a smiling site author along with other familiar hallmarks of travel sites.

Publishers do that because they see what Google is ranking and assume that’s what Google wants. In my experience, that’s not the case. In my opinion, it may be useful to think about what you can do to make a site more original.

The latest version of Google’s Search Quality Rater Guidelines is available for download as a PDF.

Featured Image by Shutterstock/Kues