Does Google Favor UGC? Reddit Leads In Search Growth [STUDY] via @sejournal, @MattGSouthern

This past year was a big one for SEO, with major changes in how Google ranks content.

According to SISTRIX’s latest IndexWatch report, the year’s biggest winners were platforms focused on user-generated content (UGC), AI-powered tools, and large e-commerce brands.

Reddit emerged as the leader among standout performers, but its dominance raises questions about Google’s practices.

Here’s a breakdown of the top-performing sites and what drove their success.

Top Performers in Search Growth

The report highlights Reddit as the year’s top performer, nearly tripling its visibility in Google’s US search results.

Reddit climbed higher in rankings for a variety of keywords, from product reviews to niche discussions, making it a major competitor to traditional content and e-commerce sites.

Other big winners in search visibility included:

- Reddit.com: +190.9% growth

- Instagram.com: Significant increases driven by its visual and video content

- YouTube.com: Continued dominance through video SEO

- Spotify.com: Strong gains in music-related searches

- Wikipedia.org: Consistent growth as an authoritative content source

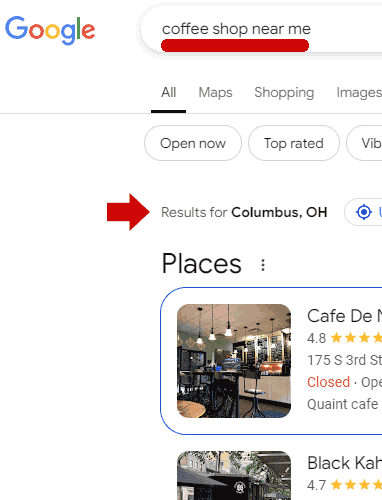
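To make the "nearly tripling" claim concrete, here is a minimal sketch of how a percentage gain maps to a before/after multiplier. The function name `growth_to_multiplier` is an illustrative assumption and not something from the SISTRIX report; only the +190.9% figure comes from the report as cited above.

```python
def growth_to_multiplier(percent_growth: float) -> float:
    """Convert a percentage gain into a before/after multiplier.

    A gain of +100% means the value doubled (2x), so the multiplier
    is always 1 plus the gain expressed as a fraction.
    """
    return 1 + percent_growth / 100


# Reddit's reported gain in US search visibility.
reddit_multiplier = growth_to_multiplier(190.9)
print(f"Reddit visibility multiplier: {reddit_multiplier:.3f}x")
```

A +190.9% gain works out to roughly 2.9 times the starting visibility, which is why the report's figure reads as "nearly tripling" rather than "nearly doubling."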

Reddit’s Dominance Raises Questions

While Reddit’s success is significant, it raises ethical and strategic questions for the SEO community.

Google’s policies, such as its stance on “site reputation” and “scaled content” abuse, discourage websites from publishing content outside their established topical authority. These policies aim to prevent sites from piggybacking on their existing authority to rank for unrelated keywords.

Yet Reddit appears to be exempt from this rule. The platform ranks for an incredibly wide range of keywords, from precise technical terms to general lifestyle topics, without being tied to a single area of expertise.

This raises the question: Why does Reddit get to rank for everything while other sites are penalized for straying too far from their core focus?

Adding to the intrigue, Reddit has a deal in place with Google for broader search distribution. While this partnership isn’t entirely transparent, it raises further concerns about fairness in Google’s ranking system and whether specific platforms receive preferential treatment.

Fastest-Growing Sites by Percentage

While the largest platforms gained the most ground overall, several smaller sites posted the biggest percentage gains:

- ck12.org: +601.59% growth in rankings

- VirginAtlantic.com: +509.74% growth following site migrations

- Quillbot.com: +490.70% growth via AI-driven SEO strategies

- Hardrock.com: +436.63% growth after consolidating site sections

- TheKitchn.com: +300.40% growth driven by recipe content

The report notes that many sites relied on “programmatic” SEO strategies.

For example, ck12.org used AI-powered resources to rank for thousands of educational queries.

Lily Ray states in the report:

“For some of the winners, visibility growth stemmed from a ‘programmatic SEO’ strategy, which use[s] automation to scale pages that target many different search queries relevant to the site’s core offerings. For example, the site ck12.org, which claims to be the ‘world’s most powerful AI tutor,’ has seen substantial visibility growth, predominantly stemming from its ‘flexbooks’ subdomain and ‘flexi’ subfolders.”
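For readers unfamiliar with the term, the general idea behind programmatic SEO can be sketched in a few lines: one template plus a list of topics yields many pages, each aimed at a different query. This is a hypothetical illustration only. The `Page` class, the `/learn/` path, the title template, and the sample topics are all assumptions made for the example, and nothing here reflects how ck12.org or any other site in the report actually builds its pages.

```python
import re
from dataclasses import dataclass


@dataclass
class Page:
    slug: str          # URL path for the generated page (hypothetical layout)
    title: str         # page title built from the template
    target_query: str  # search query the page is meant to match


def slugify(text: str) -> str:
    """Lowercase the text and collapse non-alphanumeric runs into hyphens."""
    return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")


def build_pages(topics, template="What is {topic}?"):
    """Create one page per unique topic from a single title template."""
    pages = []
    seen = set()
    for topic in topics:
        slug = slugify(topic)
        if not slug or slug in seen:
            continue  # skip empty or duplicate topics so pages don't collide
        seen.add(slug)
        title = template.format(topic=topic)
        pages.append(Page(slug=f"/learn/{slug}", title=title, target_query=title.lower()))
    return pages


# Hypothetical topic list; a real site would draw on its own content catalog.
for page in build_pages(["Photosynthesis", "Newton's Laws", "photosynthesis"]):
    print(page.slug, "|", page.title)
```

The deduplication step matters in practice: scaling a template across thousands of topics without it tends to produce near-identical pages, which is the kind of output Google’s “scaled content” policy is aimed at.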

User-Generated Content

UGC platforms had a breakthrough year. Alongside Reddit, sites like Quora, Stack Exchange, and GitHub gained significant search visibility.

HubPages, particularly its Discover subdomain, also emerged as a major winner, growing in rankings by targeting topics like jokes, pet advice, and music.

Google’s algorithm seems to favor UGC platforms even when individual articles vary in quality.

The report notes:

“Interestingly, many of these articles resemble low-quality content that often causes demotion by Google’s algorithm updates targeting spammy content. This suggests Google’s algorithms may put more weight on prioritizing UGC websites like HubPages, which contain authentic human experiences and contributions, over penalizing or demoting individual articles included on those sites.”

E-Commerce Sites Make a Comeback

E-commerce platforms rebounded after a challenging few years.

Carters.com, for example, saw a boost by ranking for popular keywords like “baby clothes” and “kids clothing store.”

Other brands, such as Nike, Lenovo, and eBay, also experienced steady growth thanks to site updates, platform migrations, and better keyword targeting.

Key Takeaways

This year’s biggest SEO winners reflect three major trends:

- Google loves UGC: Platforms like Reddit and Quora thrived as Google prioritized community-driven content over traditional formats.

- Programmatic SEO strategies can work: Sites like ck12.org and Quillbot.com used scalable, AI-driven approaches to rank for a wide range of search queries.

- E-commerce rebounds: Retailers focused on SEO-friendly updates and keyword targeting saw strong gains in organic search.

Final Thoughts

Reddit’s rise highlights a larger debate: Is Google playing fair?

While most sites are held to strict standards for authority and expertise, Reddit appears to operate under different rules, ranking across nearly every vertical. Combined with its search distribution deal with Google, this raises questions about transparency and equity in search rankings.

As we move into 2025, it’s clear that websites must adapt to an evolving rulebook—one in which authenticity, AI strategies, and ethical considerations all play a role in success.

Featured Image: eamesBot/Shutterstock