Anthropic Research Shows How LLMs Perceive Text via @sejournal, @martinibuster

Researchers from Anthropic investigated Claude 3.5 Haiku’s ability to decide when to break a line of text within a fixed width, a task that requires the model to track its position as it writes. The study yielded the surprising result that language models form internal patterns resembling the spatial awareness that humans use to track location in physical space.

Andreas Volpini tweeted about this paper and made an analogy to chunking content for AI consumption. In a broader sense, his comment works as a metaphor for how both writers and models navigate structure, finding coherence at the boundaries where one segment ends and another begins.

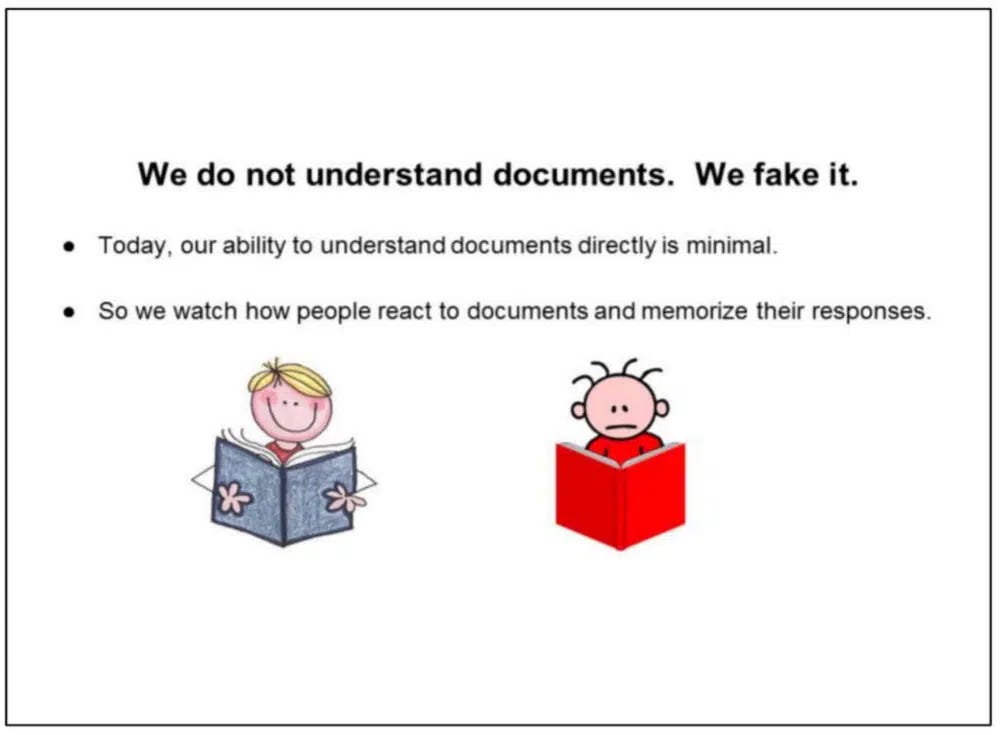

This research paper, however, is not about reading content. It is about generating text and identifying where to insert a line break so that the text fits an arbitrary fixed width. The goal was to better understand what’s going on inside an LLM as it keeps track of text position, word choice, and line break boundaries while writing.

The researchers created an experimental task: generate text with line breaks at a specified width. To do that, Claude 3.5 Haiku has to choose words that fit within the width and decide when to insert a line break, which requires tracking its current position within the line it is generating.

The experiment demonstrates how language models learn structure from patterns in text without explicit programming or supervision.

The Linebreaking Challenge

The linebreaking task requires the model to decide whether the next word will fit on the current line or if it must start a new one. To succeed, the model must learn the line width constraint (the rule that limits how many characters can fit on a line, like in physical space on a sheet of paper). To do this the LLM must track the number of characters written, compute how many remain, and decide whether the next word fits. The task demands reasoning, memory, and planning. The researchers used attribution graphs to visualize how the model coordinates these calculations, showing distinct internal features for the character count, the next word, and the moment a line break is required.
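For comparison, here is what the same decision looks like when written as an explicit program. This is only a minimal sketch of the task itself, not a description of how Claude 3.5 Haiku solves it internally. The function names `should_break` and `wrap` are hypothetical and chosen for illustration.

```python
def should_break(chars_on_line: int, next_word: str, line_width: int) -> bool:
    """Return True if next_word does not fit on the current line."""
    # A space separates the word from earlier text unless the line is empty.
    needed = len(next_word) + (1 if chars_on_line > 0 else 0)
    remaining = line_width - chars_on_line
    return needed > remaining


def wrap(text: str, line_width: int) -> list[str]:
    """Greedy wrapping: track the count, compute what remains, then decide."""
    lines, current = [], ""
    for word in text.split():
        if current and should_break(len(current), word, line_width):
            lines.append(current)  # the next word would overflow, so break
            current = word
        else:
            current = f"{current} {word}" if current else word
    if current:
        lines.append(current)
    # Note: a single word longer than line_width still gets its own line.
    return lines


print(wrap("the quick brown fox jumps", 10))
```

A program gets the character count for free by calling `len()`. The model has no such counter, which is why the researchers were interested in how it represents the count internally.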

Continuous Counting

The researchers observed that Claude 3.5 Haiku does not represent the character count of a line as a step-by-step tally. Instead, it represents the count as a smooth geometric structure that behaves like a continuously curved surface, allowing the model to track position fluidly (on the fly) rather than counting symbol by symbol.

They also discovered that the LLM had developed a “boundary head,” an attention head responsible for detecting the line boundary. An attention mechanism weighs the importance of the tokens being considered, and an attention head is a specialized component of that mechanism. The boundary head specializes in the narrow task of detecting the end-of-line boundary.

The research paper states:

“One essential feature of the representation of line character counts is that the “boundary head” twists the representation, enabling each count to pair with a count slightly larger, indicating that the boundary is close. That is, there is a linear map QK which slides the character count curve along itself. Such an action is not admitted by generic high-curvature embeddings of the circle or the interval like the ones in the physical model we constructed. But it is present in both the manifold we observe in Haiku and, as we now show, in the Fourier construction.”

How Boundary Sensing Works

The researchers found that Claude 3.5 Haiku knows when a line of text is approaching its end by comparing two internal signals:

- How many characters it has already generated, and

- How long the line is supposed to be.

The aforementioned boundary attention heads decide which parts of the text to focus on. Some of these heads specialize in spotting when the line is about to reach its limit. They do this by slightly rotating or lining up the two internal signals (the character count and the maximum line width) so that when they nearly match, the model’s attention shifts toward inserting a line break.

The researchers explain:

“To detect an approaching line boundary, the model must compare two quantities: the current character count and the line width. We find attention heads whose QK matrix rotates one counting manifold to align it with the other at a specific offset, creating a large inner product when the difference of the counts falls within a target range. Multiple heads with different offsets work together to precisely estimate the characters remaining.”
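The idea of rotating one count representation so it lines up with another can be illustrated with a toy Fourier-style embedding. The sketch below is an assumption-laden illustration, not the model’s actual weights or the paper’s code: the frequencies in `FREQS`, the `PERIOD` value, and the function names `embed`, `rotate`, and `score` are all hypothetical.

```python
import math

FREQS = (1, 2, 3, 4, 5)  # hypothetical frequencies, chosen for illustration
PERIOD = 150             # hypothetical largest count the toy curve can hold


def embed(n: int) -> list[float]:
    """Place count n on a curve built from cosine/sine pairs."""
    vec = []
    for k in FREQS:
        angle = 2 * math.pi * k * n / PERIOD
        vec.extend((math.cos(angle), math.sin(angle)))
    return vec


def rotate(vec: list[float], offset: int) -> list[float]:
    """Linear map that slides the curve along itself: embed(n) -> embed(n + offset)."""
    out = []
    for i, k in enumerate(FREQS):
        theta = 2 * math.pi * k * offset / PERIOD
        c, s = vec[2 * i], vec[2 * i + 1]
        out.extend((c * math.cos(theta) - s * math.sin(theta),
                    s * math.cos(theta) + c * math.sin(theta)))
    return out


def score(count: int, width: int, offset: int) -> float:
    """Inner product of the rotated count with the width; peaks when count + offset == width."""
    return sum(a * b for a, b in zip(rotate(embed(count), offset), embed(width)))


# A toy "head" tuned to fire when 5 characters remain on an 80-character line.
best = max(range(81), key=lambda count: score(count, 80, 5))
print(best)
```

In this toy version, a head with `offset=5` scores highest when the count is exactly 5 short of the width. Several such heads with different offsets would each respond to a different distance from the boundary, which is the intuition behind the quote’s point about multiple heads working together.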

Final Stage

At this stage of the experiment, the model has already determined how close it is to the line’s boundary and how long the next word will be. The last step is to use that information.

Here’s how it’s explained:

“The final step of the linebreak task is to combine the estimate of the line boundary with the prediction of the next word to determine whether the next word will fit on the line, or if the line should be broken.”

The researchers found that certain internal features in the model activate when the next word would cause the line to exceed its limit, effectively serving as boundary detectors. When that happens, the model raises the chance of predicting a newline symbol and lowers the chance of predicting another word. Other features do the opposite: they activate when the word still fits, lowering the chance of inserting a line break.

Together, these two forces, one pushing for a line break and one holding it back, balance out to make the decision.
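The push and pull between those two groups of features can be pictured with a small numeric sketch. Everything here is an illustrative assumption rather than something measured in the paper: the function name `newline_probability`, the `sharpness` parameter, and the use of a logistic function to turn the net push into a probability.

```python
import math


def newline_probability(chars_remaining: int, next_word_len: int,
                        sharpness: float = 2.0) -> float:
    """Toy model: one signal pushes for a newline, the other holds it back."""
    margin = chars_remaining - next_word_len  # negative means the word overflows
    break_push = max(0, -margin)    # grows as the word overflows the line
    fit_push = max(0, margin + 1)   # grows as the word fits with room to spare
    logit = sharpness * (break_push - fit_push)
    return 1 / (1 + math.exp(-logit))  # squash the net push into a probability


print(newline_probability(chars_remaining=3, next_word_len=5))   # word overflows
print(newline_probability(chars_remaining=10, next_word_len=5))  # word fits
```

When the next word overflows, the first signal dominates and the newline probability rises above one half. When the word fits, the second signal dominates and the probability drops.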

Models Can Have Visual Illusions?

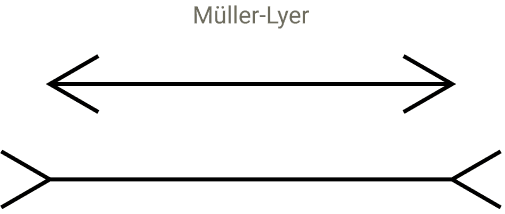

The next part of the research is kind of incredible because the researchers tested whether the model could be susceptible to visual illusions that would trip it up. They started with the idea of how humans can be tricked by visual illusions that present a false perspective, making lines of the same length appear to be different lengths, one shorter than the other.

Screenshot Of A Visual Illusion

The researchers inserted artificial tokens, such as “@@,” to see how they disrupted the model’s sense of position. These tests caused misalignments in the internal patterns the model uses to keep track of position, similar to visual illusions that trick human perception. The disruption shifted the model’s sense of line boundaries, showing that its perception of structure depends on context and learned patterns. Even though LLMs don’t see, disrupting the relevant attention heads distorts their internal organization in a way that resembles how humans misjudge what they see.

They explained:

“We find that it does modulate the predicted next token, disrupting the newline prediction! As predicted, the relevant heads get distracted: whereas with the original prompt, the heads attend from newline to newline, in the altered prompt, the heads also attend to the @@.”

They wondered whether there was something special about the @@ characters or whether any other random characters would disrupt the model’s ability to complete the task. So they ran a test with 180 different sequences and found that most of them did not disrupt the model’s ability to predict the line break point. Only a small group of code-related characters was able to distract the relevant attention heads and disrupt the counting process.

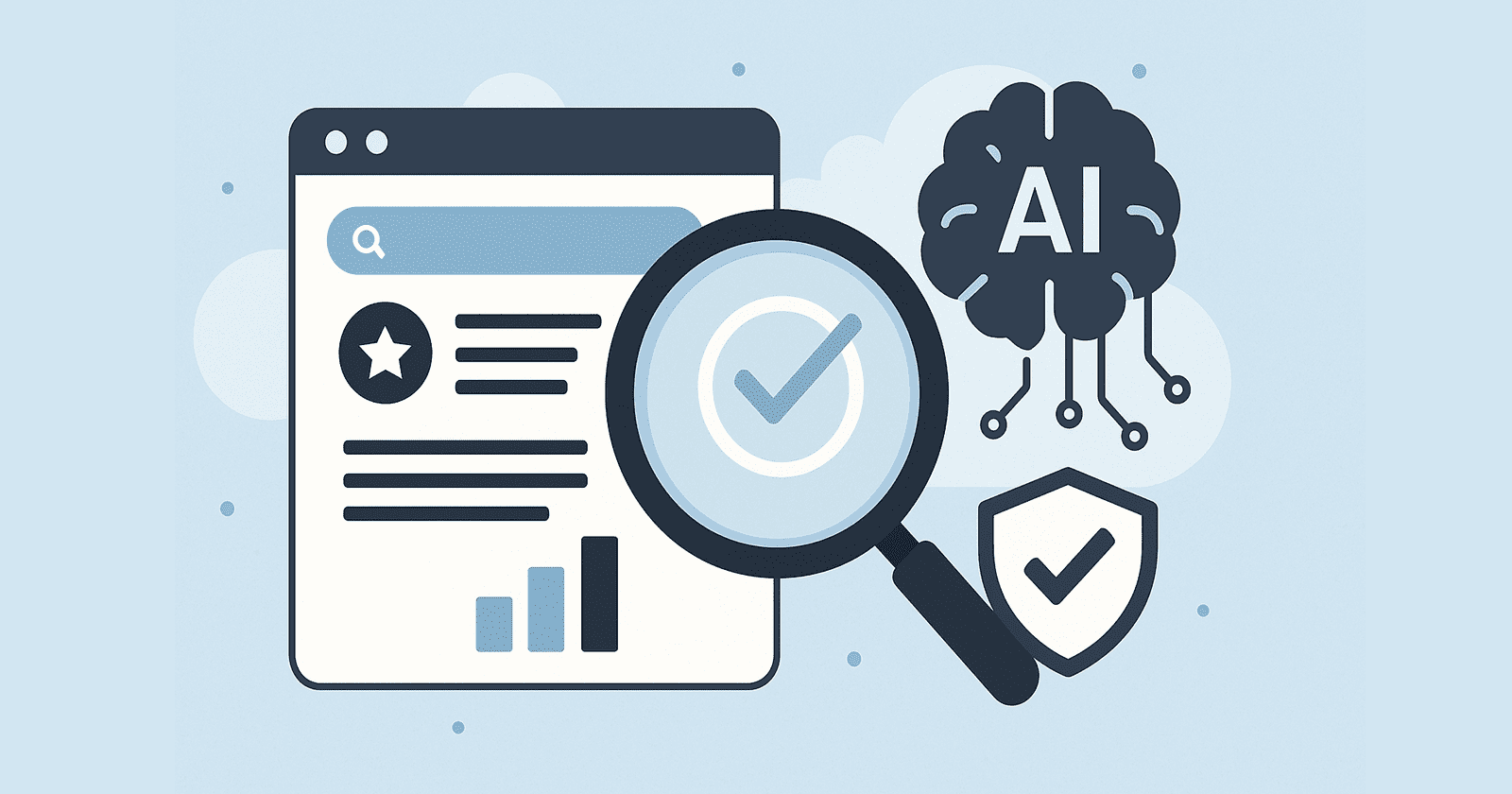

LLMs Have Visual-Like Perception For Text

The study shows how text-based features evolve into smooth geometric systems inside a language model. It also shows that models don’t only process symbols; they create perception-based maps from them. To me, this part about perception is what’s really interesting about the research. The researchers keep circling back to analogies related to human perception, and those analogies keep fitting what they see going on inside the LLM.

They write:

“Although we sometimes describe the early layers of language models as responsible for “detokenizing” the input, it is perhaps more evocative to think of this as perception. The beginning of the model is really responsible for seeing the input, and much of the early circuitry is in service of sensing or perceiving the text similar to how early layers in vision models implement low level perception.”

Then a little later they write:

“The geometric and algorithmic patterns we observe have suggestive parallels to perception in biological neural systems. …These features exhibit dilation—representing increasingly large character counts activating over increasingly large ranges—mirroring the dilation of number representations in biological brains. Moreover, the organization of the features on a low dimensional manifold is an instance of a common motif in biological cognition. While the analogies are not perfect, we suspect that there is still fruitful conceptual overlap from increased collaboration between neuroscience and interpretability.”

Implications For SEO?

Arthur C. Clarke wrote that any sufficiently advanced technology is indistinguishable from magic. I think that once you understand a technology it becomes more relatable and less like magic. Not all knowledge has a utilitarian use, and I think understanding how an LLM perceives content is useful to the extent that it’s no longer magical. Will this research make you a better SEO? It deepens our understanding of how language models organize and interpret content structure, which makes them more understandable and less like magic.

Read about the research here:

When Models Manipulate Manifolds: The Geometry of a Counting Task

Featured Image by Shutterstock/Krot_Studio