Measuring When AI Assistants And Search Engines Disagree via @sejournal, @DuaneForrester

Before you get started, it’s important to heed this warning: There is math ahead! If doing math and learning equations makes your head swim, or makes you want to sit down and eat a whole cake, prepare yourself (or grab a cake). But if you like math, if you enjoy equations, and you really do believe that k=N (you sadist!), oh, this article is going to thrill you as we explore hybrid search in a bit more depth.

(Image Credit: Duane Forrester)

For years (decades), SEO lived inside a single feedback loop. We optimized, ranked, and tracked. Everything made sense because Google gave us the scoreboard. (I’m oversimplifying, but you get the point.)

Now, AI assistants sit above that layer. They summarize, cite, and answer questions before a click ever happens. Your content can be surfaced, paraphrased, or ignored, and none of it shows in analytics.

That doesn’t make SEO obsolete. It means a new kind of visibility now runs parallel to it. This article offers ideas for measuring that visibility without code, special access, or a developer, while staying grounded in what we actually know.

Why This Matters

Search engines still drive almost all measurable traffic. Google alone handles almost 4 billion searches per day. By comparison, Perplexity’s reported total annual query volume is roughly 10 billion.

So yes, assistants are still small by comparison. But they’re shaping how information gets interpreted. You can already see it when ChatGPT Search or Perplexity answers a question and links to its sources. Those citations reveal which content blocks (chunks) and domains the models currently trust.

The challenge is that marketers have no native dashboard to show how often that happens. Google recently added AI Mode performance data into Search Console. According to Google’s documentation, AI Mode impressions, clicks, and positions are now included in the overall “Web” search type.

That inclusion matters, but it’s blended in. There’s currently no way to isolate AI Mode traffic. The data is there, just folded into the larger bucket. No percentage split. No trend line. Not yet.

Until that visibility improves, I suggest using a proxy test to understand where assistants and search agree and where they diverge.

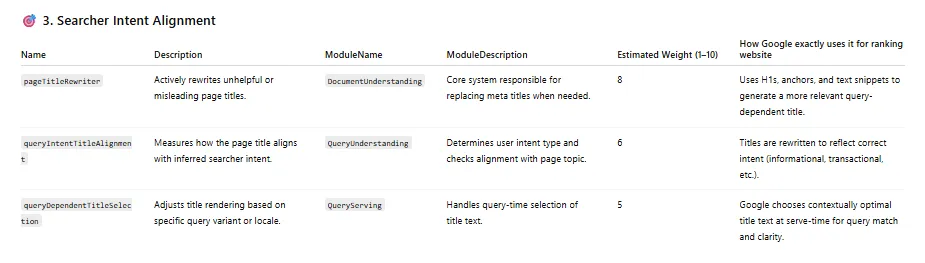

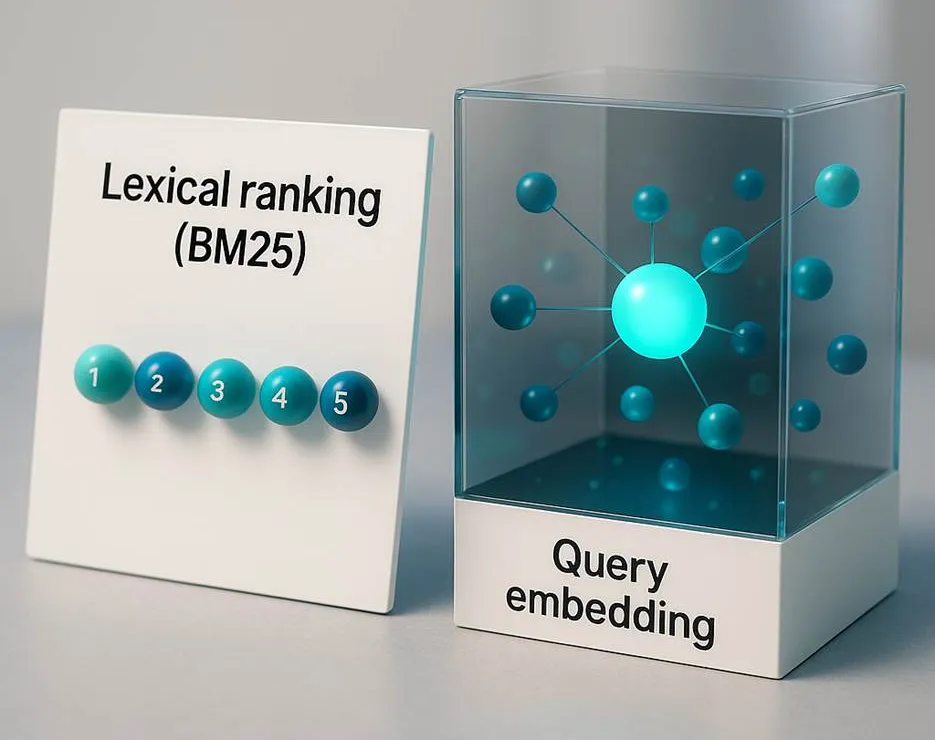

Two Retrieval Systems, Two Ways To Be Found

Traditional search engines use lexical retrieval, where they match words and phrases directly. The dominant algorithm, BM25, has powered solutions like Elasticsearch and similar systems for years. It’s also in use in today’s common search engines.

AI assistants rely on semantic retrieval. Instead of exact words, they map meaning through embeddings, the mathematical fingerprints of text. This lets them find conceptually related passages even when the exact words differ.

Each system makes different mistakes. Lexical retrieval misses synonyms. Semantic retrieval can connect unrelated ideas. But when combined, they produce better results.

Inside most hybrid retrieval systems, the two methods are fused using a rule called Reciprocal Rank Fusion (RRF). You don’t have to be able to run it, but understanding the concept helps you interpret what you’ll measure later.

RRF In Plain English

Hybrid retrieval merges multiple ranked lists into one balanced list. The math behind that fusion is RRF.

The formula is simple: score equals one divided by k plus rank. This is written as 1 ÷ (k + rank). If an item appears in several lists, you add those scores together.

Here, “rank” means the item’s position in that list, starting with 1 as the top. “k” is a constant that smooths the difference between top and mid-ranked items. Many implementations use a value near 60, but each may tune it differently.

It’s worth remembering that a vector model doesn’t rank results by counting word matches. It measures how close each document’s embedding is to the query’s embedding in multi-dimensional space. The system then sorts those similarity scores from highest to lowest, effectively creating a ranked list. It looks like a search engine ranking, but it’s driven by distance math, not term frequency.
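To picture how "distance math" turns into a ranked list, here is a minimal Python sketch. It assumes cosine similarity as the closeness measure (a common choice, though the platforms do not confirm which measure they use), and the three-number "embeddings" and document names are invented for illustration. Real embeddings have hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_by_similarity(query_vec, doc_vecs):
    """Return document ids sorted from most to least similar to the query."""
    scores = {doc_id: cosine_similarity(query_vec, vec) for doc_id, vec in doc_vecs.items()}
    return sorted(scores, key=scores.get, reverse=True)

# Toy vectors, made up for this example only.
query = [1.0, 0.0, 1.0]
docs = {
    "doc_a": [0.9, 0.1, 0.8],
    "doc_b": [0.0, 1.0, 0.0],
    "doc_c": [0.5, 0.5, 0.5],
}

ranking = rank_by_similarity(query, docs)
print(ranking)  # doc_a is closest to the query, doc_b is farthest
```

No words are counted anywhere in that sketch. The order comes entirely from how closely each vector points in the same direction as the query.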

Let’s make it tangible with small numbers and two ranked lists. One from BM25 (keyword relevance) and one from a vector model (semantic relevance). We’ll use k = 10 for clarity.

Document A is ranked number 1 in BM25 and number 3 in the vector list.

From BM25: 1 ÷ (10 + 1) = 1 ÷ 11 = 0.0909.

From the vector list: 1 ÷ (10 + 3) = 1 ÷ 13 = 0.0769.

Add them together: 0.0909 + 0.0769 = 0.1678.

Document B is ranked number 2 in BM25 and number 1 in the vector list.

From BM25: 1 ÷ (10 + 2) = 1 ÷ 12 = 0.0833.

From the vector list: 1 ÷ (10 + 1) = 1 ÷ 11 = 0.0909.

Add them: 0.0833 + 0.0909 = 0.1742.

Document C is ranked number 3 in BM25 and number 2 in the vector list.

From BM25: 1 ÷ (10 + 3) = 1 ÷ 13 = 0.0769.

From the vector list: 1 ÷ (10 + 2) = 1 ÷ 12 = 0.0833.

Add them: 0.0769 + 0.0833 = 0.1602.

Document B wins here as it ranks high in both lists. If you raise k to 60, the differences shrink, producing a smoother, less top-heavy blend.
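If you would rather check the arithmetic than trust it, the worked example can be reproduced in a few lines of Python. This is only a sketch of the 1 ÷ (k + rank) formula described here; the function and variable names are my own, and it is not the code any search platform runs.

```python
def rrf_scores(ranked_lists, k=60):
    """Sum 1 / (k + rank) for every list a document appears in (rank starts at 1)."""
    scores = {}
    for ranking in ranked_lists:
        for rank, doc in enumerate(ranking, start=1):
            scores[doc] = scores.get(doc, 0.0) + 1.0 / (k + rank)
    return scores

# The two ranked lists from the worked example, best result first.
bm25_list = ["A", "B", "C"]    # A is 1st, B is 2nd, C is 3rd
vector_list = ["B", "C", "A"]  # B is 1st, C is 2nd, A is 3rd

for k in (10, 60):
    scores = rrf_scores([bm25_list, vector_list], k=k)
    ordered = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    print(f"k={k}:", ", ".join(f"{doc}={score:.4f}" for doc, score in ordered))
```

Because the code adds unrounded fractions, the last decimal place can differ slightly from the hand-rounded sums. The order stays the same at both k values, with Document B first, while the gap between the top and bottom scores is much narrower at k = 60.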

This example is purely illustrative. Every platform adjusts parameters differently, and no public documentation confirms which k values any engine uses. Think of it as an analogy for how multiple ranking signals get combined into one list.

Where This Math Actually Lives

You’ll never need to code it yourself as RRF is already part of modern search stacks. Here are examples of this type of system from their foundational providers. If you read through all of these, you’ll have a deeper understanding of how platforms like Perplexity do what they do:

All of them follow the same basic process: Retrieve with BM25, retrieve with vectors, score with RRF, and merge. The math above explains the concept, not the literal formula inside every product.

Observing Hybrid Retrieval In The Wild

Marketers can’t see those internal lists, but we can observe how systems behave at the surface. The trick is comparing what Google ranks with what an assistant cites, then measuring overlap, novelty, and consistency. This external math is a heuristic, a proxy for visibility. It’s not the same math the platforms calculate internally.

Step 1. Gather The Data

Pick 10 queries that matter to your business.

For each query:

- Run it in Google Search and copy the top 10 organic URLs.

- Run it in an assistant that shows citations, such as Perplexity or ChatGPT Search, and copy every cited URL or domain.

Now you have two lists per query: Google Top 10 and Assistant Citations.

(Be aware that not every assistant shows full citations, and not every query triggers them. Some assistants may summarize without listing sources at all. When that happens, skip that query as it simply can’t be measured this way.)

Step 2. Count Three Things

- Intersection (I): how many URLs or domains appear in both lists.

- Novelty (N): how many assistant citations do not appear in Google’s top 10. If the assistant has six citations and three overlap, N = 6 − 3 = 3.

- Frequency (F): how often each domain appears across all 10 queries.
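A spreadsheet is enough for these counts, but if you have someone comfortable with Python, the three tallies could be sketched like this. Everything here is an assumption for illustration: the helper names are mine, the URLs are placeholders, and matching is done at the domain level (with "www." stripped), which is only one of the two options the counts allow.

```python
from collections import Counter
from urllib.parse import urlparse

def domain(url):
    """Reduce a full URL (with scheme) to its host name, dropping a leading 'www.'."""
    host = urlparse(url).netloc.lower()
    return host[4:] if host.startswith("www.") else host

def count_overlap(google_top10, assistant_citations):
    """Return (I, N): domains in both lists, and assistant-only domains."""
    google = {domain(u) for u in google_top10}
    assistant = {domain(u) for u in assistant_citations}
    return len(google & assistant), len(assistant - google)

def citation_frequency(citations_by_query):
    """Return F: in how many assistant answers each domain was cited."""
    freq = Counter()
    for citations in citations_by_query.values():
        # A set, so a domain cited twice in one answer still counts once.
        freq.update({domain(u) for u in citations})
    return freq

# Placeholder data: 10 Google results, 6 assistant citations, 3 shared domains.
google_top10 = [f"https://site{i}.example/page" for i in range(1, 11)]
assistant_citations = [
    "https://site1.example/a",
    "https://www.site2.example/b",
    "https://site3.example/c",
    "https://other1.example/x",
    "https://other2.example/y",
    "https://other3.example/z",
]

intersection, novelty = count_overlap(google_top10, assistant_citations)
print(intersection, novelty)

frequency = citation_frequency({
    "query 1": assistant_citations,
    "query 2": ["https://site1.example/d", "https://other1.example/x"],
})
print(frequency.most_common(3))
```

Comparing domains rather than exact URLs is more forgiving, since assistants often cite a different page on the same site than the one Google ranks. Swap the `domain` helper for the raw URL if you want strict page-level matching.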

Step 3. Turn Counts Into Quick Metrics

For each query set:

Shared Visibility Rate (SVR) = I ÷ 10.

This measures how much of Google’s top 10 also appears in the assistant’s citations.

Unique Assistant Visibility Rate (UAVR) = N ÷ total assistant citations for that query.

This shows how much new material the assistant introduces.

Repeat Citation Count (RCC) = F for a given domain ÷ number of queries.

This reflects how consistently a domain is cited across different answers.

Example:

Google top 10 = 10 URLs. Assistant citations = 6. Three overlap.

I = 3, N = 3, F (for example.com) = 4 (appears in four assistant answers).

SVR = 3 ÷ 10 = 0.30.

UAVR = 3 ÷ 6 = 0.50.

RCC = 4 ÷ 10 = 0.40.

You now have a numeric snapshot of how closely assistants mirror or diverge from search.
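For anyone who wants to automate the division, here is a small Python sketch of the three metrics using the example numbers above. The function names are my own shorthand, and returning `None` when an assistant shows no citations is my assumption, in line with the earlier advice to skip queries that cannot be measured.

```python
def shared_visibility_rate(intersection, google_list_size=10):
    """SVR = I / size of the Google list (10 in this article)."""
    return intersection / google_list_size

def unique_assistant_visibility_rate(novelty, total_citations):
    """UAVR = N / total assistant citations; None if nothing was cited."""
    if total_citations == 0:
        return None  # unmeasurable query, skip it
    return novelty / total_citations

def repeat_citation_count(domain_frequency, number_of_queries):
    """RCC = F for one domain / number of queries tested."""
    return domain_frequency / number_of_queries

# Numbers from the example: I = 3, N = 3, six citations, F = 4, ten queries.
svr = shared_visibility_rate(3)
uavr = unique_assistant_visibility_rate(3, 6)
rcc = repeat_citation_count(4, 10)

print(f"SVR={svr:.2f}  UAVR={uavr:.2f}  RCC={rcc:.2f}")
```

Run per query, SVR and UAVR can then be averaged across your 10 queries if you want a single headline figure, though that averaging step is my suggestion rather than part of the method.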

Step 4. Interpret

These scores are not industry benchmarks by any means, simply suggested starting points for you. Feel free to adjust as you feel the need:

- High SVR (> 0.6) means your content aligns with both systems. Lexical and semantic relevance are in sync.

- Moderate SVR (0.3 – 0.6) with high RCC suggests your pages are semantically trusted but need clearer markup or stronger linking.

- Low SVR (< 0.3) with high UAVR shows assistants trust other sources. That often signals structure or clarity issues.

- High RCC for competitors indicates the model repeatedly cites their domains, so it’s worth studying for schema or content design cues.
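If you track many queries, the reading guide above can be turned into a rough labeling helper. The sketch below uses the SVR bands from the list; the cut-offs for "high" RCC and "high" UAVR (0.5 each) are purely my assumption, since no number is given for them, and the labels are shorthand rather than a standard.

```python
def interpret(svr, uavr, rcc, high_rcc=0.5, high_uavr=0.5):
    """Map the three metrics to a rough reading. All thresholds are adjustable starting points."""
    if svr > 0.6:
        return "aligned: lexical and semantic relevance are in sync"
    if svr >= 0.3:
        if rcc >= high_rcc:
            return "semantically trusted: review markup and linking"
        return "moderate overlap: no strong signal yet"
    if uavr >= high_uavr:
        return "assistants trust other sources: review structure and clarity"
    return "low overlap: no strong signal yet"

# The example scores from Step 3.
print(interpret(svr=0.30, uavr=0.50, rcc=0.40))
```

Treat the output as a prompt for a closer look, not a verdict. Adjust the thresholds once you have a few months of your own data to compare against.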

Step 5. Act

If SVR is low, improve headings, clarity, and crawlability. If RCC is low for your brand, standardize author fields, schema, and timestamps. If UAVR is high, track those new domains as they may already hold semantic trust in your niche.

(This approach won’t always work exactly as outlined. Some assistants limit the number of citations or vary them regionally. Results can differ by geography and query type. Treat it as an observational exercise, not a rigid framework.)

Why This Math Is Important

This math gives marketers a way to quantify agreement and disagreement between two retrieval systems. It’s diagnostic math, not ranking math. It doesn’t tell you why the assistant chose a source; it tells you that it did, and how consistently.

That pattern is the visible edge of the invisible hybrid logic operating behind the scenes. Think of it like watching the weather by looking at tree movement. You’re not simulating the atmosphere, just reading its effects.

On-Page Work That Helps Hybrid Retrieval

Once you see how overlap and novelty play out, the next step is tightening structure and clarity.

- Write in short claim-and-evidence blocks of 200-300 words.

- Use clear headings, bullets, and stable anchors so BM25 can find exact terms.

- Add structured data (FAQ, HowTo, Product, TechArticle) so vectors and assistants understand context.

- Keep canonical URLs stable and timestamp content updates.

- Publish canonical PDF versions for high-trust topics; assistants often cite fixed, verifiable formats first.

These steps support both crawlers and LLMs as they share the language of structure.
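To make the structured-data and timestamp points concrete, here is a minimal sketch that builds schema.org TechArticle markup as JSON-LD. Python is used only to generate the JSON; every value (headline, author, dates, URL) is a placeholder I made up, and your CMS or SEO plugin may already produce equivalent markup for you.

```python
import json

# Placeholder values; replace with the real details of your page.
article_markup = {
    "@context": "https://schema.org",
    "@type": "TechArticle",
    "headline": "Example headline",
    "author": {"@type": "Person", "name": "Example Author"},
    "datePublished": "2025-01-15",
    "dateModified": "2025-02-01",  # update whenever the content changes
    "mainEntityOfPage": "https://www.example.com/guides/example-article",
}

json_ld = json.dumps(article_markup, indent=2)

# JSON-LD is embedded in the page inside a script tag of this type.
script_tag = f'<script type="application/ld+json">\n{json_ld}\n</script>'
print(script_tag)
```

The useful habit here is consistency: the same author name, the same canonical URL, and an honest `dateModified` on every page.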

Reporting And Executive Framing

Executives don’t care about BM25 or embeddings nearly as much as they care about visibility and trust.

Your new metrics (SVR, UAVR, and RCC) can help translate the abstract into something measurable: how much of your existing SEO presence carries into AI discovery, and where competitors are cited instead.

Pair those findings with Search Console’s AI Mode performance totals, but remember: You can’t currently separate AI Mode data from regular web clicks, so treat any AI-specific estimate as directional, not definitive. It’s also worth noting that there may still be regional limits on data availability.

These limits don’t make the math less useful, however. They help keep expectations realistic while giving you a concrete way to talk about AI-driven visibility with leadership.

Summing Up

The gap between search and assistants isn’t a wall. It’s more of a signal difference. Search engines rank pages after the answer is known. Assistants retrieve chunks before the answer exists.

The math in this article is one way to observe that transition without developer tools. It’s not the platform’s math; it’s a marketer’s proxy that helps make the invisible visible.

In the end, the fundamentals stay the same. You still optimize for clarity, structure, and authority.

Now you can measure how that authority travels between ranking systems and retrieval systems, and do it with realistic expectations.

That visibility, counted and contextualized, is how modern SEO stays anchored in reality.


This post was originally published on Duane Forrester Decodes.

Featured Image: Roman Samborskyi/Shutterstock