Enterprise SEO Operating Models That Scale In 2026 And Beyond via @sejournal, @billhunt

Most enterprises are still treating SEO as a marketing activity. That decision, whether intentional or accidental, is now a material business risk.

In the years ahead, SEO performance will not be determined by better tactics, better tools, or even better talent. It will be determined by whether leadership understands what SEO has become and restructures the organization accordingly. SEO is no longer simply a channel. It is infrastructure, and infrastructure decisions are leadership decisions.

The Old SEO Question Is No Longer Relevant

For years, executives asked a familiar question: Are we doing SEO well? Or even more simply, are we ranking well in Google?

That question assumed SEO was something you did, summed up as a collection of optimizations, audits, and campaigns applied after the fact. It made sense when search primarily ranked pages and rewarded incremental improvements. The more relevant question today is different: Is our organization structurally capable of being discovered, understood, and selected by modern search systems?

That is no longer a marketing question. It is an operating model question because AI optimization must become a team sport.

Search engines, and increasingly AI-driven systems, do not reward isolated optimizations. They reward coherence, structure, intent alignment, and machine-readable clarity across an entire digital ecosystem. Those outcomes are not created downstream. They are created by how an organization builds, governs, and scales its digital assets.

What Has Fundamentally Changed

To understand why enterprise SEO operating models must evolve, leadership first needs to understand what actually changed in search.

1. Search Systems Now Interpret Intent Before Retrieval

Modern search systems no longer treat queries as literal requests. They reinterpret ambiguous intent, expand queries through fan-out, explore multiple intent paths simultaneously, and retrieve information across formats and sources. Content no longer competes page-to-page. It competes concept-to-concept.

If an organization lacks clear intent modeling, structured topical coverage, and consistent entity representation, its content may never enter the retrieval set at all, regardless of how optimized individual pages appear.

2. Eligibility Now Precedes Ranking

This shift also changed the sequence of how visibility is earned. Ranking still matters, particularly for enterprises where much of the traffic still flows through traditional results. But ranking now occurs only after eligibility is established. As search experiences move toward synthesized answers and AI-driven surfaces, eligibility has become the prerequisite rather than the reward.

That eligibility is determined upstream by templates, data models, taxonomy, entity consistency, governance, and workflow design. These are not marketing decisions. They are organizational ones.
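One of the upstream factors named above, entity consistency, can be checked mechanically. The sketch below is illustrative only: the record shape (`entity_id`, `name`, `source`) and the sample values are assumptions, not anything the article prescribes. It flags entities that are named differently across sources.

```python
from collections import defaultdict


def find_inconsistent_entities(records):
    """Return {entity_id: sorted distinct names} for entities with conflicting names.

    `records` is a hypothetical export: one dict per place an entity is
    published, with "entity_id", "name", and "source" keys.
    """
    names_by_id = defaultdict(set)
    for record in records:
        # Strip stray whitespace so purely cosmetic differences are not flagged.
        names_by_id[record["entity_id"]].add(record["name"].strip())
    return {
        entity_id: sorted(names)
        for entity_id, names in names_by_id.items()
        if len(names) > 1
    }


# Hypothetical sample data for illustration.
records = [
    {"entity_id": "org-1", "name": "Acme Corp", "source": "us-site"},
    {"entity_id": "org-1", "name": "ACME Corporation", "source": "de-site"},
    {"entity_id": "prod-9", "name": "Widget Pro", "source": "us-site"},
    {"entity_id": "prod-9", "name": "Widget Pro ", "source": "uk-site"},
]
conflicts = find_inconsistent_entities(records)
```

Here `conflicts` contains only `org-1`, because the two `prod-9` names differ by trailing whitespace alone. A real check would likely need a central entity registry as the source of truth rather than pairwise comparison.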

3. Enterprise SEO Has Crossed An Infrastructure Threshold

Enterprise SEO has always depended on infrastructure. What has changed is that modern search systems no longer compensate for structural shortcuts. In the past, rankings recovered, signals recalibrated, and messiness was often forgiven.

Today, AI-driven systems amplify inconsistency. Retrieval becomes selective, narratives persist, and structural debt compounds. As search systems work to deliver results aligned to real searcher intent, the environment has shifted from forgiving to selective, and visibility now depends on how well the underlying system is designed.

Taken together, these conditions define what a scalable enterprise SEO operating model actually looks like, not as a team or function, but as an organizational capability.

The Leadership Declaration: What Must Be True In 2026

Organizations that scale organic visibility in the coming years will share a small set of non-negotiable characteristics. These are not best practices. They are operating requirements.

Declaration #1: SEO Must Be Treated As Infrastructure

SEO must be treated as infrastructure. That means it moves from a downstream marketing function to a foundational digital capability. SEO requirements are embedded in platforms, standards are enforced through templates, and eligibility is designed before content is commissioned. When failures occur, they are treated like performance or security issues, not optional enhancements. If SEO depends on post-launch fixes, the operating model is already broken.

Declaration #2: SEO Must Live Upstream In Decision-Making

SEO must live upstream in decision-making. Search performance is created when decisions are made about site structure, content scope, taxonomy, product naming, localization strategy, data modeling, and internal linking frameworks. SEO cannot succeed if it only reviews outcomes; it must help shape inputs. This does not mean SEO dictates solutions. It means SEO defines non-negotiable discovery constraints, just as accessibility, performance, and security already do.

Declaration #3: SEO Requires Cross-Functional Accountability

SEO requires cross-functional accountability. Visibility depends on development, content, product, UX, legal, and localization teams working in concert, similar to a professional sports team. In most enterprises, SEO is measured on outcomes while other teams control the systems that produce them. That accountability gap must close. High-performing organizations define shared ownership of visibility, clear escalation paths, mandatory compliance standards, and executive sponsorship for search performance. Without this, SEO remains a negotiation rather than a capability.

Declaration #4: Governance Must Replace Guidelines

Governance must replace guidelines. Guidelines are optional; governance is enforceable. Scalable SEO requires mandatory standards, controlled templates, centralized entity definitions, enforced structured data policies, approved market deviations, and continuous compliance monitoring. This demands a Center of Excellence with authority, not just expertise. SEO cannot scale on influence alone.
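The difference between a guideline and governance can be made concrete with an automated check. The following is a minimal sketch, assuming a hypothetical policy table (`REQUIRED_FIELDS`) that lists the structured data properties each template must emit. The template names, property lists, and `Page` shape are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass, field

# Hypothetical policy: structured data properties required per template.
REQUIRED_FIELDS = {
    "product": {"@type", "name", "brand", "sku"},
    "article": {"@type", "headline", "author", "datePublished"},
}


@dataclass
class Page:
    url: str
    template: str
    structured_data: dict = field(default_factory=dict)


def check_page(page: Page) -> list[str]:
    """Return policy violations for one page; an empty list means compliant."""
    required = REQUIRED_FIELDS.get(page.template)
    if required is None:
        # Templates without an approved policy are violations, not exceptions.
        return [f"{page.url}: template '{page.template}' has no approved policy"]
    missing = sorted(required - set(page.structured_data))
    return [f"{page.url}: missing '{name}'" for name in missing]


def enforce(pages: list[Page]) -> list[str]:
    """Collect violations across all pages, in input order."""
    violations: list[str] = []
    for page in pages:
        violations.extend(check_page(page))
    return violations


# Hypothetical sample pages for illustration.
pages = [
    Page("/p/1", "product", {"@type": "Product", "name": "X", "brand": "B", "sku": "1"}),
    Page("/a/1", "article", {"@type": "Article", "headline": "H"}),
    Page("/x", "landing", {}),
]
violations = enforce(pages)
```

In this sample the product page passes, the article page is missing `author` and `datePublished`, and the landing page fails because its template has no approved policy. Wiring a check like this into a release pipeline, so that a non-empty `violations` list blocks deployment, is what would turn the standard from optional to enforced.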

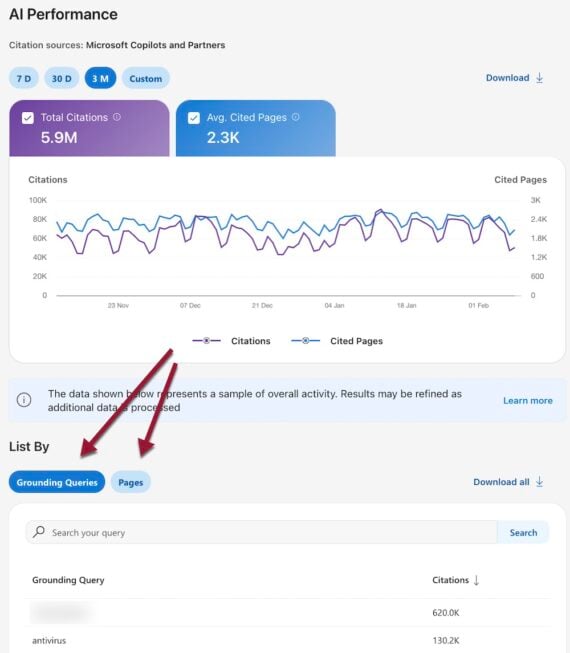

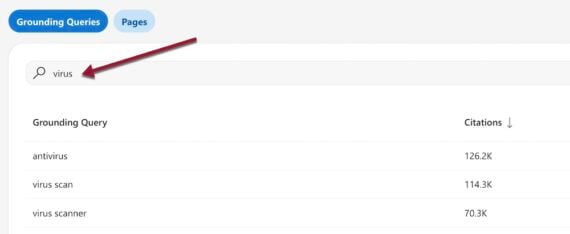

Declaration #5: SEO Must Be Measured As A System

Finally, SEO must be measured as a system. Executives need to move beyond quarterly performance questions and instead assess structural eligibility across markets, intent coverage, entity coherence, template enforcement, and where visibility leaks and why. System-level measurement replaces page-level obsession.

This shift mirrors a broader issue I explored in a previous Search Engine Journal article on the questions CEOs should ask about their websites but rarely do. The core insight was that executive oversight often focuses on surface-level outcomes while missing systemic sources of risk, inefficiency, and value leakage.

SEO measurement suffers from the same blind spot. Asking how SEO “performed” this quarter obscures whether the organization is structurally capable of being discovered and represented accurately across modern search and AI-driven environments. The more meaningful questions are systemic: where visibility leaks, which teams own those failure points, and whether the underlying architecture enforces consistency at scale.

Measured this way, SEO stops being a reporting function and becomes an early warning system for digital effectiveness.
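As a rough illustration of system-level measurement, the sketch below groups pages by market and template and reports where eligibility failures concentrate and which team owns them. The field names (`market`, `template`, `eligible`) and the `TEMPLATE_OWNERS` mapping are assumptions made for the example; how eligibility itself is determined is left out.

```python
from collections import defaultdict

# Hypothetical mapping from template to the team that owns it.
TEMPLATE_OWNERS = {
    "product": "commerce-platform",
    "article": "content-platform",
}


def leak_report(pages):
    """Summarize eligibility failures per (market, template), worst first."""
    totals = defaultdict(int)
    failures = defaultdict(int)
    for page in pages:
        key = (page["market"], page["template"])
        totals[key] += 1
        if not page["eligible"]:
            failures[key] += 1
    report = [
        {
            "market": market,
            "template": template,
            "owner": TEMPLATE_OWNERS.get(template, "unassigned"),
            "pages": totals[(market, template)],
            "failure_rate": failures[(market, template)] / totals[(market, template)],
        }
        for (market, template) in totals
    ]
    # Highest failure rate first, so the largest leaks surface at the top.
    return sorted(report, key=lambda row: row["failure_rate"], reverse=True)


# Hypothetical sample data for illustration.
pages = [
    {"market": "us", "template": "product", "eligible": True},
    {"market": "us", "template": "product", "eligible": False},
    {"market": "de", "template": "product", "eligible": False},
    {"market": "de", "template": "product", "eligible": False},
    {"market": "us", "template": "article", "eligible": True},
]
report = leak_report(pages)
```

With this sample, the German product template tops the report with every page failing, which points to a template or localization problem owned by one team rather than a page-by-page content issue.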

The Operating Model Divide

Enterprises will fall into two groups.

Some will remain tactical optimizers, where SEO lives in marketing, fixes happen after launch, paid media masks organic gaps, and AI visibility remains inconsistent. Others will become structural builders, embedding SEO into systems, defining requirements before creation, enforcing governance, and earning consistent retrieval and trust from AI-driven platforms.

The difference will not be effort. It will be organizational design.

The Clarifying Reality

Ranking still matters, particularly for enterprises where a significant share of traffic continues to flow through traditional results. What has changed is not its importance, but its position in the visibility chain. Before anything can rank, it must first be retrieved. Before it can be retrieved, it must be eligible. And eligibility is no longer determined by isolated optimizations, but by infrastructure – how content is structured, how entities are defined, and how consistently signals are enforced across systems.

Every enterprise already has an SEO operating model, whether it was designed intentionally or emerged by default. In the years ahead, that distinction will matter far more than most organizations expect.

SEO has become infrastructure. Infrastructure requires leadership because it shapes what the organization can reliably produce and how it is perceived at scale. The companies that win will not be the ones that optimize harder, but the ones that operate differently, by designing systems that search engines and AI-driven platforms can consistently discover, understand, and trust.

Featured Image: Anton Vierietin/Shutterstock