AI Integration in Marketing: Strategic Insights For SEO & Agency Leaders

This edited extract is from Data Storytelling in Marketing by Caroline Florence ©2024 and is reproduced and adapted with permission from Kogan Page Ltd.

Storytelling is an integral part of the human experience. People have been communicating observations and data to each other for millennia using the same principles of persuasion that are being used today.

However, the means by which we can generate data and insights and tell stories has shifted significantly and will continue to do so, as technology plays an ever-greater role in our ability to collect, process, and find meaning from the wealth of information available.

So, what is the future of data storytelling?

I think we’ve all talked about data being the engine that powers business decision-making. And there’s no escaping the role that AI and data are going to play in the future.

So, I think the more data literate and aware you are, the more informed and evidence-led you can be about our decisions, regardless of what field you are in – because that is the future we’re all working towards and going to embrace, right?

It’s about relevance and being at the forefront of cutting-edge technology.

Sanica Menezes, Head of Customer Analytics, Aviva

The Near Future Scenario

Imagine simply applying a generative AI tool to your marketing data dashboards to create audience-ready copy. The tool creates a clear narrative structure, synthesized from the relevant datasets, with actionable and insightful messages relevant to the target audience.

The tool isn’t just producing vague and generic output with questionable accuracy but is sophisticated enough to help you co-author technically robust and compelling content that integrates a level of human insight.

Writing stories from vast and complex datasets will not only drive efficiency and save time but also free up the human co-author to think more creatively about how they deliver the end story to land the message, gain traction with recommendations, and influence decisions and actions.

There is still a clear role for the human to play as co-author, including the quality of the prompts given, expert interpretation, nuance of language, and customization for key audiences.

But the human co-author is no longer bogged down by the complex and time-consuming process of gathering different data sources and analysing data for insights. The human co-author can focus on synthesizing findings to make sense of patterns or trends and perfect their insight, judgement, and communication.

In my conversations with expert contributors, the consensus was that AI would have a significant impact on data storytelling but would never replace the need for human intervention.

This vision for the future of storytelling is (almost) here. Tools like this already exist and are being further improved, enhanced, and rolled out to market as I write this book.

But the reality is that the skills involved in leveraging these tools are no different from the skills currently needed to build, create, and deliver great data stories. If anything, the risks involved in not having human co-authors mean that acquiring the skills covered in this book becomes even more valuable.

In the AI storytelling exercise WIN conducted, the tool came up with “80 per cent of people are healthy” as its key point. Well, it’s just not an interesting fact.

Whereas the humans looking at the same data were able to see a trend of increasing stress, which is far more interesting as a story. AI could analyse the data in seconds, but my feeling is that it needs a lot of really good prompting in order for it to seriously help with the storytelling bit.

I’m much more positive about it being able to create 100 slides for me from the data and that may make it easier for me to pick out what the story is.

Richard Colwell, CEO, Red C Research & Marketing Group

We did a recent experiment with the Inspirient AI platform taking a big, big, big dataset, and in three minutes, it was able to produce 1,000 slides with decent titles and design.

Then you can ask it a question about anything, and it can produce 110 slides, 30 slides, whatever you want. So, there is no reason why people should be wasting time on the data in that way.

AI is going to make a massive difference – and then we bring in the human skill which is contextualization, storytelling, thinking about the impact and the relevance to the strategy and all that stuff the computer is never going to be able to do.

Lucy Davison, Founder And CEO, Keen As Mustard Marketing

Other Innovations Impacting On Data Storytelling

Besides AI, there are a number of other key trends that are likely to have an impact on our approach to data storytelling in the future:

Synthetic Data

Synthetic data is data that has been created artificially through computer simulation to take the place of real-world data. Whilst already used in many data models to supplement real-world data or when real-world data is not available, the incidence of synthetic data is likely to grow in the near future.

According to Gartner (2023), by 2024, 60 per cent of the data used in training AI models will be synthetically generated.

Speaking in Marketing Week (2023), Mark Ritson cites around 90 per cent accuracy for AI-derived consumer data, when triangulated with data generated from primary human sources, in academic studies to date.

This means that it has a huge potential to help create data stories to inform strategies and plans.

Virtual And Augmented Reality

Virtual and augmented reality will enable us to generate more immersive and interactive experiences as part of our data storytelling. Audiences will be able to step into the story world, interact with the data, and influence the narrative outcomes.

This technology is already being used in the world of entertainment to blur the lines between traditional linear television and interactive video games, creating a new form of content consumption.

Within data storytelling we can easily imagine a world with simulated customer conversations, whilst navigating the website or retail environment.

Instead of static visualizations and charts showing data, the audience will be able to overlay data onto their physical environment and embed data from different sources accessed at the touch of a button.

Transmedia Storytelling

Transmedia storytelling will continue to evolve, with narratives spanning multiple platforms and media. Data storytellers will be expected to create interconnected storylines across different media and channels, enabling audiences to engage with the data story in different ways.

We are already seeing these tools being used in data journalism where embedded audio and video, on-the-ground eyewitness content, live-data feeds, data visualization and photography sit alongside more traditional editorial commentary and narrative storytelling.

For a great example of this in practice, look at the Pulitzer Prize-winning “Snow Fall: The Avalanche at Tunnel Creek” (Branch, 2012), which changed the way The New York Times approached data storytelling.

In the marketing world, some teams are already investing in high-end knowledge share portals or embedding tools alongside their intranet and internet to bring multiple media together in one place to tell the data story.

User-Generated Content

User-generated content will also have a greater influence on data storytelling. With the rise of social media and online communities, audiences will actively participate in creating and sharing stories.

Platforms will emerge that enable collaboration between storytellers and audiences, allowing for the co-creation of narratives and fostering a sense of community around storytelling.

Tailoring narratives to the individual audience member based on their preferences, and even their emotional state, will lead to greater expectations of customization in data storytelling to enhance engagement and impact.

Moving beyond the traditional “You said, so we did” communication with customers to demonstrate how their feedback has been actioned, user-generated content will enable customers to play a more central role in sharing their experiences and expectations.

These advanced tools are a complement to, and not a substitution for, the human creativity and critical thinking that great data storytelling requires. If used appropriately, they can enhance your data storytelling, but they cannot do it for you.

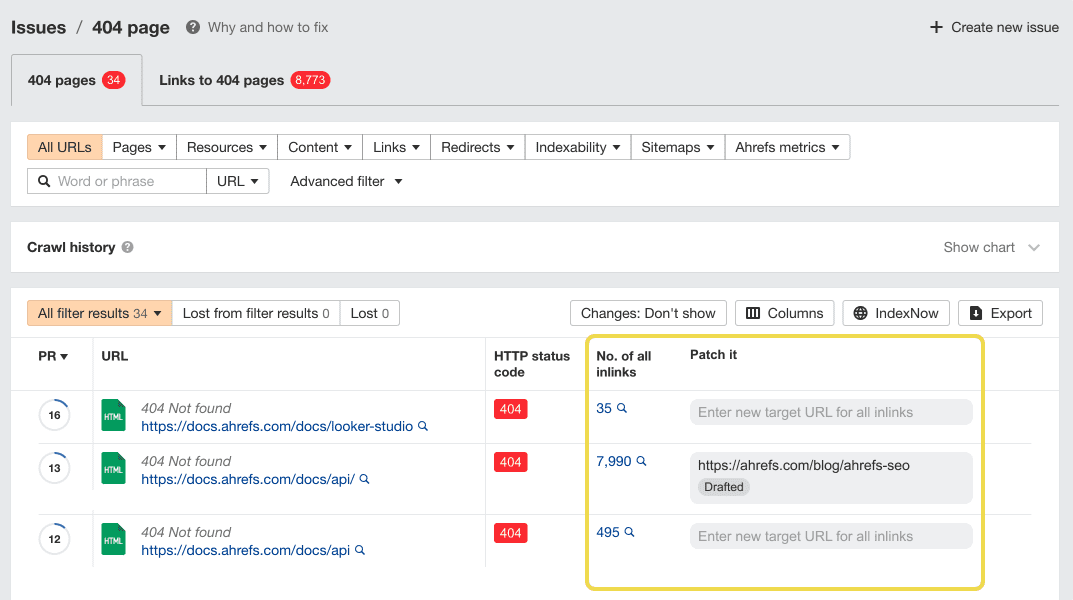

Whether you work with Microsoft Excel or access reports from more sophisticated business intelligence tools, such as Microsoft Power BI, Tableau, Looker Studio, or Qlik, you will still need to take those outputs and use your skills as a data storyteller to curate them in ways that are useful for your end audience.

There are some great knowledge-sharing platforms out there that can integrate outputs from existing data storytelling tools and help curate content in one place. Some can be built into existing platforms that might be accessible within your business, like Confluence.

Some can be custom-built using external tools for a bespoke need, such as creating a micro-site for your data story using WordPress. And some can be brought in at scale to integrate with existing Microsoft or Google tools.

The list of what is available is extensive but will typically be dependent on what is available IT-wise within your own organization.

The Continuing Role Of The Human In Data Storytelling

In this evolving world, the role of the data storyteller doesn’t disappear but becomes ever more critical.

The human data storyteller still has many important roles to play, and the skills necessary to influence and engage cynical, discerning, and overwhelmed audiences become even more valuable.

Now that white papers, marketing copy, internal presentations, and digital content can all be generated faster than humans could ever manage on their own, the risk of information overload becomes inevitable without a skilled storyteller to curate the content.

Today, the human data storyteller is crucial for:

- Ensuring we are not telling “any old story” just because we can and that the story is relevant to the business context and needs.

- Understanding the inputs being used by the tool, including limitations and potential bias, as well as ensuring data is used ethically and that it is accurate, reliable, and obtained with the appropriate permissions.

- Framing queries appropriately to incorporate the relevant context, issues, and target audience needs to inform the knowledge base.

- Cross-referencing and synthesizing AI-generated insights or synthetic data with human expertise and subject domain knowledge to ensure the relevance and accuracy of recommendations.

- Leveraging the different VR, AR, and transmedia tools available and selecting the right one for the job.

To read the full book, SEJ readers have an exclusive 25% discount code and free shipping to the US and UK. Use promo code SEJ25 at koganpage.com.

Featured Image: PopTika/Shutterstock