Mass-market military drones have changed the way wars are fought

Mass-market military drones are one of MIT Technology Review’s 10 Breakthrough Technologies of 2023.

When the United States first fired a missile from an armed Predator drone at suspected Al Qaeda leaders in Afghanistan on November 14, 2001, it was clear that warfare had permanently changed. During the two decades that followed, drones became the most iconic instrument of the war on terror. Highly sophisticated, multimillion-dollar US drones were repeatedly deployed in targeted killing campaigns. But their use worldwide was limited to powerful nations.

Then, as the navigation systems and wireless technologies in hobbyist drones and consumer electronics improved, a second style of military drone appeared—not in Washington, but in Istanbul. And it caught the world’s attention in Ukraine in 2022, when it proved itself capable of holding back one of the most formidable militaries on the planet.

The Bayraktar TB2 drone, a Turkish-made aircraft from the Baykar corporation, marks a new chapter in the still-new era of drone warfare. Cheap, widely available drones have changed how smaller nations fight modern wars. Although Russia’s invasion of Ukraine brought these new weapons into the popular consciousness, there’s more to their story.

Explosions in Armenia, broadcast on YouTube in 2020, revealed this new shape of war to the world. There, in a blue-tinted video, a radar dish spins underneath cyan crosshairs until it erupts into a cloud of smoke. The action repeats twice: a crosshair settles on a vehicle carrying a spinning radar dish, its earthen barriers no defense against aerial attack, and the strike leaves an empty crater behind.

The clip, released on YouTube on September 27, 2020, was one of many the Azerbaijan military published during the Second Nagorno-Karabakh War, which it launched against neighboring Armenia that same day. The video was recorded by the TB2.

In that conflict and others, the TB2 has filled a void in the arms market created by the US government’s refusal to export its high-end Predator family of drones. To get around export restrictions on drone models and other critical military technologies, Baykar turned to components readily available on the commercial market to make a new weapon of war.

The TB2 is built in Turkey from a mix of domestically made parts and parts sourced from international commercial markets. Investigations of downed Bayraktars have revealed components sourced from US companies, including a GPS receiver made by Trimble, an airborne modem/transceiver made by Viasat, and a Garmin GNC 255 navigation radio. Garmin, which makes consumer GPS products, released a statement noting that its navigation unit found in TB2s “is not designed or intended for military use, and it is not even designed or intended for use in drones.” But it’s there.

Commercial technology makes the TB2 appealing for another reason: while the US-made Reaper drone costs $28 million, the TB2 costs only about $5 million. Since its development in 2014, the TB2 has shown up in conflicts in Azerbaijan, Libya, Ethiopia, and now Ukraine. The drone is so much more affordable than traditional weaponry that Lithuanians have run crowdfunding campaigns to help buy them for Ukrainian forces.

The TB2 is just one of several examples of commercial drone technology being used in combat. The same DJI Mavic quadcopters that help real estate agents survey property have been deployed in conflicts in Burkina Faso and the Donbas region of Ukraine. Other DJI drone models have been spotted in Syria since 2013, and kit-built drones, assembled from commercially available parts, have seen widespread use.

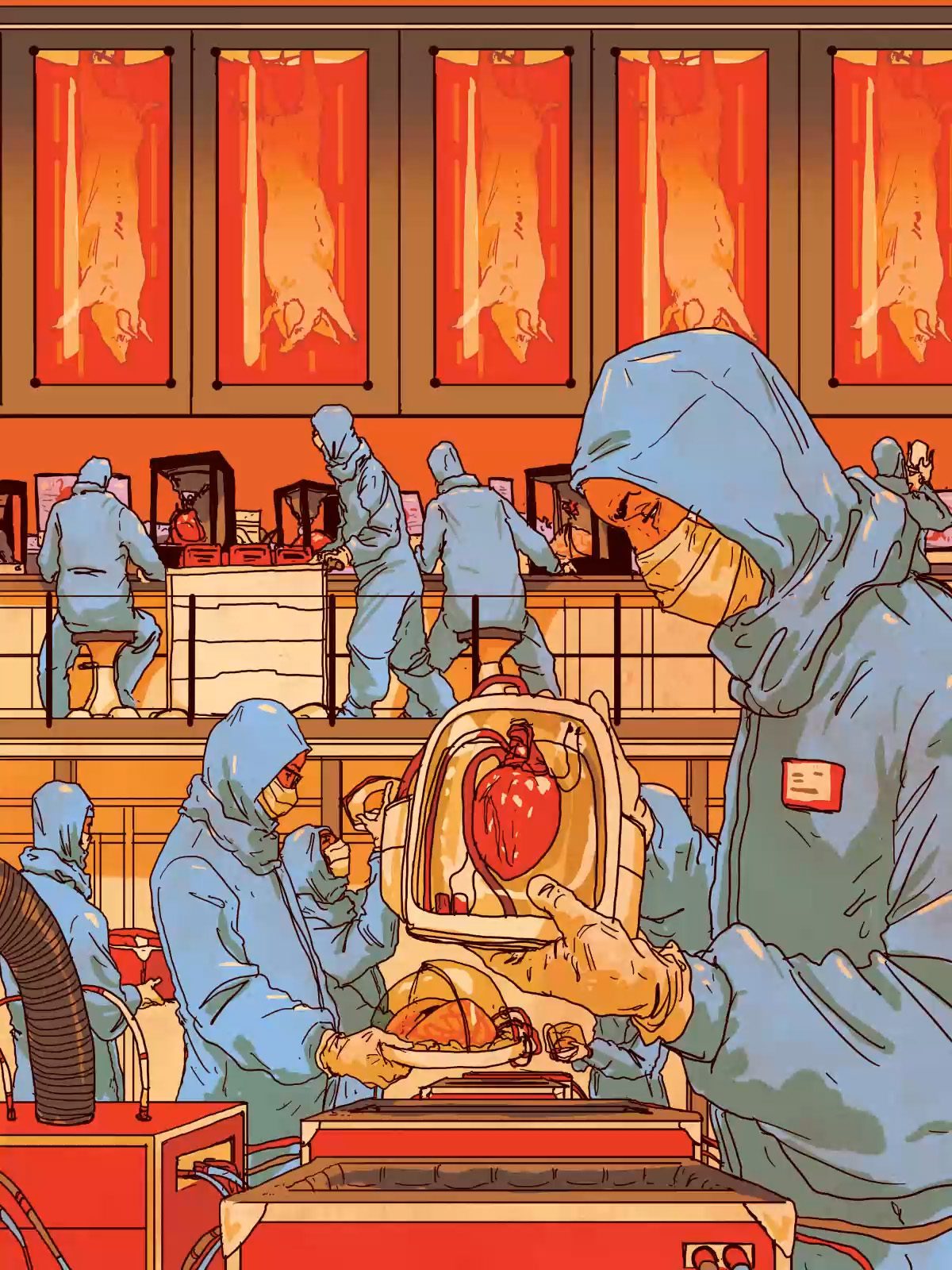

These cheap, good-enough drones that are free of export restrictions have given smaller nations the kind of air capabilities previously limited to great military powers. While that proliferation may bring some small degree of parity, it comes with terrible human costs. Drone attacks can be described in sterile language, framed as missiles stopping vehicles. But what happens when that explosive force hits human bodies is visceral, tragic. It encompasses all the horrors of war, with the added voyeurism of an unblinking camera whose video feed is monitored by a participant in the attack who is often dozens, if not thousands, of miles away.

What’s more, as these weapons proliferate, larger powers will increasingly employ them in conventional warfare rather than rely on targeted killings. When Ukraine proved it was capable of holding back the Russian invasion, Russia unleashed a terror campaign against Ukrainian civilians via Iranian-made Shahed-136 drones. These self-detonating drones, which Russia launches in salvos, contain commercial parts from US companies. The waves of drone attacks have largely been intercepted by Ukrainian air defenses, but some have killed civilians. Because the Shahed-136 drones are so cheap to make, at an estimated $20,000 each, shooting one down with a far more expensive missile means the defender spends more on each interception than the attacker spent on the drone.

Export potential

The TB2 was developed by MIT graduate Selcuk Bayraktar, who researched advanced vertical landing patterns for drones while at the university. His namesake drone is a fixed-wing plane with modest specifications. It can communicate with its ground station from around 186 miles away and flies at 80 mph to 138 mph. It can stay in the sky for over 24 hours, an endurance comparable to that of higher-end drones like the Reaper and Gray Eagle.

From altitudes of up to 25,000 feet, the TB2 surveys the ground below, sharing video to coordinate long-range attacks or movements, or releasing laser-guided bombs on people, vehicles, or buildings.

But its most distinctive characteristic, says James Rogers, associate professor in war studies at the Danish Institute for Advanced Study, is that it’s “the first mass-produced drone system that medium and smaller states can get hold of.”

Before Baykar developed the TB2, the Turkish military wanted to buy Predator and Reaper drones from the US. Those are the remotely piloted planes that defined the US’s long wars in Afghanistan and Iraq. But drone exports from the US are governed by the Missile Technology Control Regime, a voluntary arrangement whose members agree to limit exports of particular types of weapons. The Trump administration relaxed adherence to these rules in 2020 (a change upheld by the Biden administration), but the earlier enforcement of the rules, combined with concern that Turkey would use the drones to violate human rights, prevented a sale in 2012.

Turkey is not alone in being denied the ability to purchase US-made drones. Critics of the regime point out that the US will sell piloted fighter jets to Egypt and other countries but won’t sell those same countries armed drones.

But commercial and military technology have a way of driving each other. Silicon Valley is largely an outgrowth of Cold War military technology research, and consumer electronics, especially those tied to computing and navigation systems, have long been subsidized by military research. GPS was once a military technology so sensitive that civilian use of the signal was intentionally degraded until 2000.

Now, access to the undegraded civilian signal, in conjunction with cheap and powerful commercial GPS receivers like the one found in the Bayraktar, allows drones to perform at near-military standards without special access to military signals or congressional oversight.

The Turkish military debuted the Bayraktar in 2016, targeting members of the PKK, a Kurdish militia. Since then, the drone has seen action with several other militaries, most famously Ukraine and Azerbaijan but also on one side of the Libyan Civil War. In 2022, the small West African nation of Togo, with a military budget of just under $114 million, purchased a consignment of Bayraktar TB2s.

“I think Turkey has made a real conscious decision to focus on the purchase and development of the TB2, making it cheaper and more widely available—in some cases ‘free’ through donations,” says Rogers.

In 2021 Ethiopia received the TB2 and other foreign-supplied drones, which it used to halt and then reverse an advance on the capital by Tigrayan rebels that its ground forces couldn’t stop. Battlefield casualties directly resulting from the drones are hard to assess, but drone strikes on Tigrayan-held areas after the advance was halted killed at least 56 civilians.

“It is astonishing to think that Turkish drones, if we believe the accounts in Ethiopia, made the difference between an African nation’s regime falling or surviving. We got to the point where these drones are deciding the fate of nations,” says Rogers.

War hobbyists

The TB2, while modest in its abilities relative to other military drones, is an advanced piece of equipment that requires ground stations and a stretch of road to launch. But it reflects only one end of the spectrum of mass-market drones that have found their way onto battlefields. At the other end is the humble quadcopter.

By 2016, ISIS had modified DJI Phantom quadcopters to drop grenades. These weapons joined the arsenal of scratch-built ISIS drones, assembled from parts that investigators with Conflict Armament Research traced to mass-market commercial suppliers. This tactic spread and was soon common among armed groups. In 2018, Ukrainian forces fighting in Donetsk used a modified DJI Mavic to drop bombs on trenches held by Russian-backed separatists. Today these Chinese drones are found virtually anywhere in the world where there is combat.

“When it comes to this war in Ukraine, it is truly the competent use of quadcopters for a variety of tasks, including for artillery and mortar units, that has really made this cheap, available, expendable [unmanned aerial vehicle], very lethal and very dangerous,” says Samuel Bendett, an analyst at the Center for Naval Analyses and adjunct senior fellow at the Center for a New American Security.

In April 2022, China’s hobbyist drone maker DJI announced it was suspending all sales in Ukraine and Russia. But its quadcopters, especially the popular and affordable Mavic family, still find their way into military use, as soldiers buy and deploy the drones themselves. Sometimes regional governments even pitch in.

Even if these drones don’t release bombs, soldiers have learned to fear the buzzing of quadcopter engines overhead as the flights often presage an incoming artillery barrage. In one moment, a squad is a flicker of light, visible in thermal imaging, captured by a drone camera and shared with the tablet of an enemy hiding nearby. In the next, the soldiers’ execution is filmed from above, captured in 4K resolution by a weapon available for sale at any Best Buy.

Kelsey D. Atherton is a military technology journalist based in Albuquerque, New Mexico. His work has appeared in Popular Science, the New York Times, and Slate.