The State Of AI In Marketing: 6 Key Findings From Marketing Leaders via @sejournal, @theshelleywalsh

AI is being rapidly implemented, but that doesn’t mean it’s being used effectively.

The current lack of clear benchmarks and data about AI usage has meant that everyone has been operating in the dark.

This led us to create our first State Of AI In Marketing report, so that chief marketing officers and marketing decision-makers can make better-informed decisions as they navigate the fast-moving developments in our industry.

We asked a selection of U.S.-based decision-makers and leaders eight key questions about generative AI in marketing.

We received 155 responses, mostly from senior marketers, directors, and C-suite executives, offering fresh insights into how industry leaders perceive AI and how they are using it right now.

While some marketers are unlocking major gains in efficiency, others are struggling with poor output quality, lack of brand voice consistency, and legal uncertainties.

Our whitepaper presents their responses, broken down across five core themes:

- Which AI tools are most broadly adopted among marketers.

- How marketers are using AI.

- The results they’re seeing.

- The challenges they face.

- Where they plan to invest next.

Whether you’re leading a team or building a roadmap, this report is designed to help you benchmark your AI strategy so you can make confident decisions as our industry moves at an unprecedented pace.

6 Key Findings From The Report

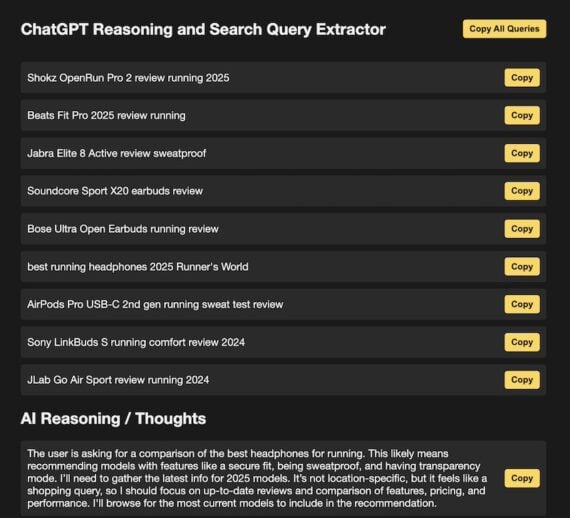

1. ChatGPT Is Currently Dominating The Tools

Over 83% of marketers said ChatGPT has positively impacted their efficiency or effectiveness.

But it’s not the only player: Tools like Gemini, Perplexity, Claude, and Canva AI are also making their mark, with many marketers assembling AI “stacks” that combine different strengths across platforms.

2. Content Has Seen The Most Tangible Impact From AI

Unsurprisingly, the biggest impact among our respondents so far has been in content: 64.5% saw value in content creation, 43.9% in content optimization, and 43.9% in idea generation.

However, AI is not replacing creativity; it’s augmenting it. Marketing teams are using tools to speed up and optimize their work and to break through creative blocks, not to replace human insight.

The report also shares other key areas where AI has delivered the most value to those surveyed.

3. Time Savings Were The Single Most Improved Outcome

The majority of respondents (76.8%) cited time savings as the biggest improvement since adopting AI.

Marketers are gaining hours back, which they can reallocate to more strategic work, enhancing both productivity and efficiency.

4. Direct ROI-Linked Results Are Lacking

While operational efficiency is clearly impacted, strategic metrics like customer lifetime value, lead quality, and attribution remain largely unchanged.

In other words, AI is streamlining how we work, but not necessarily improving what we deliver without human oversight and a sound strategy.

5. Output Quality Remains A Top Concern

More than half (54.2%) of respondents identified inaccurate, unreliable, or inconsistent output quality as the biggest limitation in using AI for marketing.

This highlights a central theme: AI still requires substantial human oversight to produce marketing-ready content.

6. Misinformation Is The No. 1 Concern

The most cited concern about AI’s rise in marketing wasn’t job loss; it was the risk of misinformation.

A full 62.6% of respondents flagged AI-generated misinformation as their top worry, revealing the importance of trust, accuracy, and reputation for AI-powered content.

The report also highlights the other areas of concern where marketers are experiencing limitations and inefficiencies.

More Key Findings In The State Of AI Report

Marketing Leaders Are Planning To Invest In These Key Areas

The marketing decision-makers surveyed are prioritizing AI investments where value has already been proven. The report breaks down how that investment is split across analytics, customer experience, SEO, marketing attribution, and content production, among other areas.

How Marketing Leaders Are Restructuring Their Teams

The report findings also indicate whether and how our respondents have restructured their teams to accommodate AI within their organizations.

Where The Biggest Impact Will Be Over The Next 12 Months

Possibly the most insightful section is where respondents shared their thoughts on what AI’s biggest impact on marketing will be over the next 12 months.

Many expect a content explosion, where the market is flooded with AI-generated assets, raising the bar for originality and quality.

Others foresee a reshaped search industry and reduced roles, emphasizing that those who don’t embrace AI will get left behind.

But not all forecasts are negative. Several marketers believe AI will level the playing field for small businesses, increase access to high-quality tools, and empower individuals to do the work of many.

You can find many more comments and predictions in the full report.

The State Of AI In Marketing Report For 2025 Can Help Shed Light

Right now is one of the most challenging times our industry has faced, and marketing leaders have hard decisions to make.

Hopefully, this whitepaper will help to shed light on how and where leaders can move forward.

In the report, you can find:

- A real-world look at what tools marketers are using now and how they’re stacking them.

- Insights into which areas of marketing AI is transforming, and where it’s still falling short.

- A clear view into future investments, from personalization to performance tracking.

- Actionable recommendations for how to evaluate, deploy, and govern AI effectively.

- Perspectives from marketers navigating AI’s challenges.

Download the full AI in marketing survey report to make confident decisions in your AI implementation strategy.

Featured Image: Paulo Bobita/Search Engine Journal