Do Faces Help YouTube Thumbnails? Here’s What The Data Says via @sejournal, @MattGSouthern

A claim about YouTube thumbnails is getting attention on X: that showing your face is “probably killing your views,” and that removing yourself will make click-through rates jump.

Nate Curtiss, Head of Content at 1of10 Media, pushed back, calling that kind of advice too absolute and pointing to a dataset that suggests the answer is more situational.

The dispute matters because thumbnail advice often gets reduced to rules. YouTube’s own product signals suggest the platform is trying to reward what keeps viewers watching, not whatever earns the fastest click.

Where The “Remove Your Face” Claim Comes From

In a recent post, vidIQ suggested that unless you’re already well-known, people click for ideas rather than creators, and that removing your face from thumbnails can raise CTR.

Believe it or not, your face in the thumbnail is probably killing your views.

Unless you’re already famous, people click for IDEAS, not creators.

Test removing yourself. Your CTR will likely spike.

— vidIQ (@vidIQ) December 3, 2025

Curtiss responded by calling the claim unsupported, and linked to highlights from a long-form report based on a sample of high-performing YouTube videos.

This is a pretty baseless claim lol.

We analyzed over 300,000 viral videos from 2025 to find the real answer to:

Do faces matter in thumbnails?

TLDR: It depends on your niche, your format, and how many faces you use.

1. Overall, thumbnails with faces and without faces… https://t.co/qywBOKsSMv pic.twitter.com/LTbKNlm6MJ

— Nate Curtiss (@natecurtiss_yt) December 21, 2025

The debate comes down to this: one side argues that faces distract from the idea, while the other argues that faces can help or hurt depending on what you publish and who you publish for.

What The Data Says About Faces In Thumbnails

The report Curtiss linked to describes a dataset of more than 300,000 “viral” YouTube videos from 2025, spanning tens of thousands of channels. It defines “outlier” performance using an “Outlier Score,” calculated as a high-performing video’s views relative to the channel’s median views.
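To make the "Outlier Score" idea concrete, here is a minimal sketch that assumes the score is a simple ratio of a video's views to the channel's median views. The report's exact formula is not quoted here, so the function name `outlier_score` and the sample view counts are illustrative assumptions, not 1of10's implementation.

```python
from statistics import median


def outlier_score(video_views: int, channel_view_counts: list[int]) -> float:
    """Ratio of one video's views to the channel's median views.

    Assumption: the score is a plain ratio. The report only says it is
    "relative to" the channel median.
    """
    if not channel_view_counts:
        raise ValueError("need at least one view count for the channel")
    channel_median = median(channel_view_counts)
    if channel_median == 0:
        raise ValueError("channel median is zero, so the score is undefined")
    return video_views / channel_median


# Made-up view counts for a hypothetical channel with one breakout video.
channel_views = [1_000, 1_200, 900, 1_100, 50_000]
score = outlier_score(50_000, channel_views)
print(f"Outlier score: {score:.1f}x the channel median")
```

Using the median rather than the mean keeps one breakout video from inflating its own baseline, which is presumably why a median-based comparison is used.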

On faces specifically, the report’s top finding is that thumbnails with faces and thumbnails without faces perform similarly, even though faces appear on a large share of videos in the sample.

The differences show up when the report breaks down the data:

- In its channel-size breakdown, it finds that adding a face only helped channels above a certain subscriber threshold, and even then the lift was modest.

- In its niche segmentation, it finds that some categories performed better with faces while others performed worse. Finance is listed among the niches that performed better with faces, while Business is listed among the niches that performed worse.

- It also reports that thumbnails featuring multiple faces outperformed single-face thumbnails.

What YouTube Says About Faces In Thumbnails

Even if a thumbnail change increases CTR, YouTube’s own tooling suggests the algorithm is optimizing for what happens after the click.

In a YouTube blog post, Creator Liaison Rene Ritchie explains that the thumbnail testing tool runs until one variant achieves a higher percentage of watch time.

He also explains why results are returned as watch time rather than separate CTR and retention metrics, describing watch time as incorporating both the click and the ability to keep viewers watching.

Ritchie writes:

“Thumbnail Test & Compare returns watch time rather than separate metrics on click-through rate (CTR) and retention (AVP), because watch time includes both! You have to click to watch and you have to retain to build up time. If you over-index on CTR, it could become click-bait, which could tank retention, and hurt performance. This way, the tool helps build good habits — thumbnails that make a promise and videos that deliver on it!”

This helps explain why CTR-based thumbnail advice can be incomplete. A thumbnail that boosts clicks but leads to shorter viewing may not win in YouTube’s testing tool.
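The arithmetic behind that point can be sketched in a few lines. This is an illustration only: it assumes watch time per variant is roughly impressions × CTR × average minutes watched per view, and that each variant gets the same number of impressions. The `Variant` class, the variant names, and all the numbers are hypothetical, and YouTube's actual test methodology may differ.

```python
from dataclasses import dataclass


@dataclass
class Variant:
    name: str
    impressions: int
    ctr: float               # fraction of impressions that become views
    avg_view_minutes: float  # average minutes watched per view

    @property
    def watch_minutes(self) -> float:
        # Assumed model: total watch time = impressions * CTR * minutes per view.
        return self.impressions * self.ctr * self.avg_view_minutes


def watch_time_share(variants: list[Variant]) -> dict[str, float]:
    """Return each variant's share of the total watch time across variants."""
    total = sum(v.watch_minutes for v in variants)
    if total == 0:
        raise ValueError("no watch time recorded for any variant")
    return {v.name: v.watch_minutes / total for v in variants}


# Hypothetical numbers: one thumbnail wins on clicks, the other on retention.
variants = [
    Variant("higher_ctr", impressions=10_000, ctr=0.06, avg_view_minutes=3.0),
    Variant("higher_retention", impressions=10_000, ctr=0.05, avg_view_minutes=4.0),
]
shares = watch_time_share(variants)
winner = max(shares, key=shares.get)
print(shares, "winner:", winner)
```

With these made-up figures, the thumbnail with the lower CTR takes the larger share of watch time because viewers stay longer, which is the trade-off Ritchie describes.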

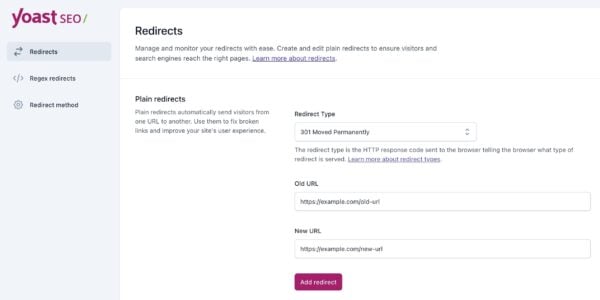

YouTube is leaning into A/B testing as a workflow inside Studio. In a separate YouTube blog post about new Studio features, YouTube describes how you can test and compare up to three titles and thumbnails per video.

The “Who” Matters: Subscribers vs. Strangers

YouTube’s Help Center suggests thinking about audience segments, such as new, casual, and regular viewers, and then adapting your content strategy for each group rather than treating all viewers the same.

YouTube suggests thinking about who you’re trying to reach. Content aimed at subscribers can lean on familiar cues, while content aimed at casual viewers may need more universally readable actions or emotions.

That aligns with the report’s finding that faces helped only channels above a certain subscriber threshold, which could reflect stronger audience familiarity.

What This Means

The practical takeaway is not to “put your face in every thumbnail” or “go faceless.”

The data suggests faces are common and, on average, not dramatically different from no-face thumbnails. The interesting part is the segmentation: some topics appear to benefit from faces more than others, and multiple faces may generate more interest than a single reaction shot.

YouTube’s testing design keeps pulling the conversation back to viewer outcomes. Clicks matter, but so does whether the thumbnail matches the video and earns watch time once someone lands.

YouTube’s product team describes this as “Packaging,” a concept that treats the title, thumbnail, and the first 30 seconds of the video as a single unit.

On mobile, where videos often auto-play, the face in the thumbnail should naturally transition into the video’s intro. If the emotional cue in the thumbnail doesn’t match the opening of the video, it can hurt early retention.

Looking Ahead

This debate keeps resurfacing because creators want simple rules, and YouTube performance rarely works that way.

The debate overlooks an important point that top creators like MrBeast emphasize: it’s more about how you show your face than whether you show it at all.

MrBeast previously mentioned that changing how he appears in thumbnails, like switching to closed-mouth expressions, increased watch time in his tests.

The 1of10 data supports the idea that faces in thumbnails aren’t a blanket rule. Results can vary by topic, format, and audience expectations.

A better way to look at it is fit. Faces can help signal trust, identity, or emotion, but they can also compete with the subject of the video depending on what you publish.

With YouTube adding more testing to Studio, you may get better results by validating thumbnail decisions against watch-time outcomes instead of relying on one-size-fits-all advice.

Featured Image: T. Schneider/Shutterstock