OpenAI ChatGPT Agent Marks A Turning Point For Businesses And SEO via @sejournal, @martinibuster

OpenAI announced a new way for users to interact with the web to get things done in their personal and professional lives. ChatGPT agent is said to be able to automate planning a wedding, booking an entire vacation, updating a calendar, and converting screenshots into editable presentations. The impact on publishers, ecommerce stores, and SEOs cannot be overstated. Here is what you should know, and how to prepare for what could be one of the most consequential changes to online interactions since the invention of the browser.

OpenAI ChatGPT Agent Overview

OpenAI ChatGPT agent is built from three core parts: OpenAI's two autonomous AI agents, Operator and Deep Research, plus ChatGPT's natural language capabilities.

- Operator can browse the web and interact with websites to complete tasks.

- Deep Research is designed for multi-step research, combining information from different sources and generating a report.

- ChatGPT agent requests permission before taking significant actions and can be interrupted and halted at any point.

ChatGPT Agent Capabilities

ChatGPT agent has access to multiple tools to help it complete tasks:

- A visual browser for interacting with web pages through their on-page interface.

- A text-based browser for answering reasoning-based queries.

- A terminal for executing actions through a command-line interface.

- Connectors, which are authorized user-friendly integrations (using APIs) that enable ChatGPT agent to interact with third-party apps.

Connectors are like bridges between ChatGPT agent and your authorized apps. When a user asks ChatGPT agent to complete a task, the connectors let it retrieve the information it needs from those apps. Because connectors provide direct API access, the agent can both interact with connected apps and extract information from them.

ChatGPT agent can open a page with a browser (either text or visual), download a file, perform an action on it, and then view the results in the visual browser. ChatGPT connectors enable it to connect with external apps like Gmail or a calendar for answering questions and completing tasks.

ChatGPT Agent Automation of Web-Based Tasks

ChatGPT agent is able to complete entire complex tasks and summarize the results.

Here’s how OpenAI describes it:

“ChatGPT can now do work for you using its own computer, handling complex tasks from start to finish.

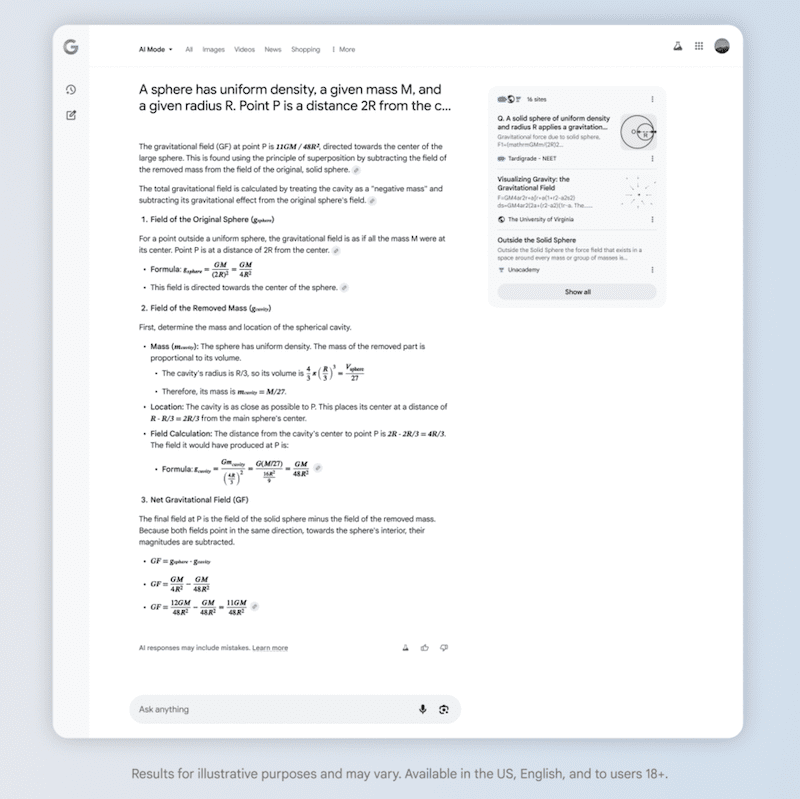

You can now ask ChatGPT to handle requests like “look at my calendar and brief me on upcoming client meetings based on recent news,” “plan and buy ingredients to make Japanese breakfast for four,” and “analyze three competitors and create a slide deck.”

ChatGPT will intelligently navigate websites, filter results, prompt you to log in securely when needed, run code, conduct analysis, and even deliver editable slideshows and spreadsheets that summarize its findings.

….ChatGPT agent can access your connectors, allowing it to integrate with your workflows and access relevant, actionable information. Once authenticated, these connectors allow ChatGPT to see information and do things like summarize your inbox for the day or find time slots you’re available for a meeting—to take action on these sites, however, you’ll still be prompted to log in by taking over the browser.

Additionally, you can schedule completed tasks to recur automatically, such as generating a weekly metrics report every Monday morning.”

What Does ChatGPT Agent Mean For SEO?

ChatGPT agent raises the stakes for publishers, online businesses, and SEOs: making websites friendly to agentic AI becomes increasingly important as more users become acquainted with it and begin sharing how it helps them in their daily lives and at work.

A recent study about AI agents found that OpenAI's Operator responded well to structured on-page content. Structured on-page content enables AI agents to accurately retrieve information relevant to their tasks and to perform actions (like filling in a form), and it helps disambiguate the web page (i.e., make it easily understood). I usually refrain from using jargon, but disambiguation is a word all SEOs need to understand because agentic AI makes it more important than it has ever been.

Examples Of On-Page Structured Data

- Headings

- Tables

- Forms with labeled input fields

- Product listings with consistent fields such as price, availability, and the product's name or label in a title

- Authors, dates, and headlines

- Menus and filters in ecommerce web pages
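To make "consistent fields" and "labeled input fields" concrete, here is a minimal Python sketch, using only the standard library's `html.parser`, of how a simple program might read a product listing and a form. It is not a description of how ChatGPT agent actually parses pages. The sample markup, the `data-field` attribute convention, and the `StructuredContentReader` class are hypothetical illustrations.

```python
from html.parser import HTMLParser

# Hypothetical markup: a product listing with consistently named fields
# (the data-field attribute is an illustrative convention) and a form
# whose inputs are tied to visible labels via <label for="...">.
SAMPLE_HTML = """
<div class="product">
  <h2 data-field="name">Trail Running Shoe</h2>
  <span data-field="price">89.99</span>
  <span data-field="availability">In stock</span>
</div>
<form>
  <label for="qty">Quantity</label>
  <input id="qty" name="quantity" type="number">
  <label for="zip">Shipping ZIP code</label>
  <input id="zip" name="postal_code" type="text">
</form>
"""


class StructuredContentReader(HTMLParser):
    """Collects data-field values and maps visible form labels to input names."""

    def __init__(self):
        super().__init__()
        self.fields = {}          # data-field name -> text content
        self.labels = {}          # visible label text -> input name attribute
        self._open_field = None   # data-field currently being read
        self._open_label = None   # id referenced by the <label for> being read
        self._label_text = {}     # input id -> label text
        self._input_names = {}    # input id -> input name attribute

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if "data-field" in attrs:
            self._open_field = attrs["data-field"]
        if tag == "label" and "for" in attrs:
            self._open_label = attrs["for"]
        if tag == "input" and "id" in attrs:
            self._input_names[attrs["id"]] = attrs.get("name", attrs["id"])

    def handle_endtag(self, tag):
        # Simplification: assumes field and label elements contain plain
        # text only, so any closing tag ends the current field or label.
        self._open_field = None
        self._open_label = None

    def handle_data(self, data):
        text = data.strip()
        if not text:
            return  # ignore whitespace between tags
        if self._open_field:
            self.fields[self._open_field] = text
        if self._open_label:
            self._label_text[self._open_label] = text

    def close(self):
        super().close()  # flush any buffered input first
        # Pair each visible label with the input it points to.
        for input_id, label in self._label_text.items():
            if input_id in self._input_names:
                self.labels[label] = self._input_names[input_id]


reader = StructuredContentReader()
reader.feed(SAMPLE_HTML)
reader.close()
print(reader.fields)
# {'name': 'Trail Running Shoe', 'price': '89.99', 'availability': 'In stock'}
print(reader.labels)
# {'Quantity': 'quantity', 'Shipping ZIP code': 'postal_code'}
```

Because every field sits in a consistently named element and every input has a matching label, the program can pair "Shipping ZIP code" with the `postal_code` input without guessing. If the labels are removed or field names vary from listing to listing, that mapping has to be inferred, which is the kind of ambiguity structured on-page content helps remove.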

Takeaways

- ChatGPT agent is a milestone in how users interact with the web, capable of completing multi-step tasks like planning trips, analyzing competitors, and generating reports or presentations.

- OpenAI’s ChatGPT agent combines autonomous agents (Operator and Deep Research) with ChatGPT’s natural language interface to automate personal and professional workflows.

- Connectors extend ChatGPT agent's capabilities by providing secure API-based access to third-party apps like calendars and email, enabling task execution across platforms.

- ChatGPT agent can interact directly with web pages, forms, and files, using tools like a visual browser, a code execution terminal, and a file handling system.

- Agentic AI responds well to structured, disambiguated web content, which makes it more important than ever for SEOs and publishers to use structured on-page elements.

- Structured data improves an AI agent’s ability to retrieve and act on website information. Sites that are optimized for AI agents will gain the most, as more users depend on agent-driven automation to complete online tasks.

OpenAI’s ChatGPT agent is an automation system that can independently complete complex online tasks, such as booking trips, analyzing competitors, or summarizing emails, by using tools like browsers, terminals, and app connectors. It interacts directly with web pages and connected apps, performing actions that previously required human input.

For publishers, ecommerce sites, and SEOs, ChatGPT agent makes structured, easily interpreted on-page content critical because websites must now accommodate AI agents that interact with and act on their data in real time.

Read More About Optimizing For Agentic AI

Marketing To AI Agents Is The Future – Research Shows Why

Featured Image by Shutterstock/All kind of people