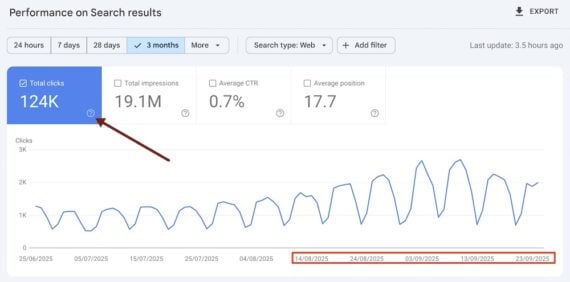

Google’s Search Relations team says generic login pages can confuse indexing and hurt rankings.

When many private URLs all show the same bare login form, Google may treat them as duplicates and show the login page in search.

In a recent “Search Off the Record” episode, John Mueller and Martin Splitt explained how this happens and what to do about it.

Why It Happens

If different private URLs all load the same login screen, Google sees those URLs as the same page.

Mueller said on the podcast:

“If you have a very generic login page, we will see all of these URLs that show that login page that redirect to that login page as being duplicates… We’ll fold them together as duplicates and we’ll focus on indexing the login page because that’s kind of what you give us to index.”

That means people searching for your brand may land on a login page instead of helpful information.

“We regularly see Google services getting this wrong,” Mueller admitted, noting that with many teams, “you invariably run across situations like that.”

Search Console, for instance, fixed this by sending logged-out visitors to a marketing page with a clear sign-in link, which gave Google indexable context.

Don’t Rely On robots.txt To Hide Private URLs

Blocking sensitive areas in robots.txt stops crawling, not indexing, so those URLs can still appear in search with no snippet. That’s risky if the URLs expose usernames or email addresses.

Mueller warned:

“If someone does something like a site query for your site… Google and other search engines might be like, oh, I know about all of these URLs. I don’t have any information on what’s on there, but feel free to try them out essentially.”

If it’s private, avoid leaking details in the URL, and use noindex or a login redirect instead of robots.txt.
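
One reason robots.txt is the wrong tool here: a crawler that is disallowed from fetching a URL never gets to read a noindex served on it. The sketch below illustrates that with Python's standard urllib.robotparser module. The robots.txt rules, the example.com URLs, and the helper name are all hypothetical, chosen only for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that tries to hide an account area.
ROBOTS_TXT = """\
User-agent: *
Disallow: /account/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def crawler_can_read_noindex(url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the crawler may fetch the URL at all.

    A noindex directive lives in the response (header or meta tag), so it
    only has an effect when the crawler is allowed to fetch that response.
    """
    return parser.can_fetch(user_agent, url)


# Hypothetical URLs: one inside the blocked area, one public page.
for url in ("https://example.com/account/jane.doe", "https://example.com/pricing"):
    state = "crawlable" if crawler_can_read_noindex(url) else "blocked"
    print(url, "->", state)
```

In this setup the account URL is blocked from crawling, yet nothing stops it from being listed if it is linked elsewhere, and its path still exposes a username.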

What To Do Instead

If content must stay private, serve a noindex on private endpoints or redirect requests to a dedicated login or marketing page.

Don’t load private text into the page and then hide it with JavaScript. Screen readers and crawlers may still access it.
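
A minimal sketch of that advice, written as framework-free Python so it can be adapted to any web stack. The Response class, the function name, and the /login path are assumptions made for this example, not something described on the podcast. X-Robots-Tag is the standard HTTP header for sending noindex.

```python
from __future__ import annotations

from dataclasses import dataclass, field

LOGIN_URL = "/login"  # hypothetical dedicated login or marketing page


@dataclass
class Response:
    status: int
    headers: dict[str, str] = field(default_factory=dict)
    body: str = ""


def respond_to_private_request(
    path: str, is_logged_in: bool, redirect_logged_out: bool = True
) -> Response:
    """Decide what a private endpoint returns.

    Logged-out requests never receive the private text, so there is
    nothing for scripts, screen readers, or crawlers to uncover.
    """
    if is_logged_in:
        # Real content, still marked noindex because it is private.
        return Response(200, {"X-Robots-Tag": "noindex"}, f"Private content for {path}")
    if redirect_logged_out:
        # Option 1: send the visitor to the dedicated login page.
        return Response(302, {"Location": LOGIN_URL})
    # Option 2: answer in place, with noindex and no private text.
    return Response(401, {"X-Robots-Tag": "noindex"}, "Please sign in.")


print(respond_to_private_request("/account/billing", is_logged_in=False))
```

Either branch works for logged-out traffic. The key design choice is that the private body is only ever built on the server after the login check passes.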

If you want restricted pages indexed, use paywall structured data. It lets Google fetch the full content while signaling that regular visitors will hit an access wall.

Paywall structured data isn’t only for paid content, Mueller explains:

“It doesn’t have to be something that’s behind like a clear payment thing. It can just be something like a login or some other mechanism that basically limits the visibility of the content.”
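
For illustration, here is a small Python sketch that generates this kind of markup as JSON-LD. The isAccessibleForFree, hasPart, and cssSelector properties come from schema.org and are used in Google's paywalled content documentation. The Article type, the headline, and the .gated-content class name are assumptions for this example, so check the current documentation before relying on the exact shape.

```python
import json

# Hypothetical CSS class wrapping the part of the page that requires login.
GATED_SELECTOR = ".gated-content"


def gated_page_jsonld(headline: str, css_selector: str = GATED_SELECTOR) -> str:
    """Build JSON-LD that marks part of a page as not freely accessible."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "isAccessibleForFree": False,
        "hasPart": {
            "@type": "WebPageElement",
            "isAccessibleForFree": False,
            "cssSelector": css_selector,
        },
    }
    return json.dumps(data, indent=2)


markup = gated_page_jsonld("Member guide")
print('<script type="application/ld+json">')
print(markup)
print("</script>")
```

The selector has to match the element that actually wraps the gated section in the page's HTML.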

Lastly, add context to login experiences. Include a short description of the product or the section someone is trying to reach.

As Mueller advised:

“Put some information about what your service is on that login page.”

A Quick Test

Open an incognito window. While logged out, search for your brand or service and click the top results.

If you land on bare login pages with no context, you likely need updates. You can also search for known URL patterns from account areas to see what shows up.
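
If you have many URLs to check, the same test can be partly scripted. The sketch below assumes you have already recorded where each URL ends up when requested while logged out (with any HTTP client), and groups the URLs that collapse onto a single destination. The function name and the sample example.com URLs are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict
from urllib.parse import urlsplit


def group_by_destination(final_urls: dict[str, str]) -> dict[str, list[str]]:
    """Group requested URLs by the page they end up on while logged out.

    Query strings such as ?next=... are ignored, so every redirect to
    the same login page counts as the same destination. Only destinations
    reached from more than one requested URL are returned.
    """
    groups: dict[str, list[str]] = defaultdict(list)
    for requested, final in final_urls.items():
        parts = urlsplit(final)
        destination = f"{parts.scheme}://{parts.netloc}{parts.path}"
        groups[destination].append(requested)
    return {dest: sorted(urls) for dest, urls in groups.items() if len(urls) > 1}


# Hypothetical observations: requested URL -> final URL after redirects.
observed = {
    "https://example.com/account/settings": "https://example.com/login?next=/account/settings",
    "https://example.com/account/billing": "https://example.com/login?next=/account/billing",
    "https://example.com/pricing": "https://example.com/pricing",
}

for destination, urls in group_by_destination(observed).items():
    print(f"{len(urls)} URLs end at {destination}: {urls}")
```

Any destination that collects several private URLs is a candidate for the duplicate grouping Mueller described, and is worth checking for context and a noindex.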

Looking Ahead

As more businesses use subscriptions and gated experiences, how you design access has a growing effect on SEO.

Use clear patterns (noindex, proper redirects, and paywalled markup where needed) and make sure public entry points provide enough context to rank for the right queries.

Small changes to login pages and redirects can prevent duplicate grouping and improve how your site appears in search.

Featured Image: Roman Samborskyi/Shutterstock