Every Monday, more than a hundred members of Giovanni Traverso’s Laboratory for Translational Engineering (L4TE) fill a large classroom at Brigham and Women’s Hospital for their weekly lab meeting. With a social hour, food for everyone, and updates across disciplines from mechanical engineering to veterinary science, it’s a place where a stem cell biologist might weigh in on a mechanical design, or an electrical engineer might spot a flaw in a drug delivery mechanism. And it’s a place where everyone is united by the same goal: engineering new ways to deliver medicines and monitor the body to improve patient care.

Traverso’s weekly meetings bring together a mix of expertise that lab members say is unusual even in the most collaborative research spaces. But his lab—which includes its own veterinarian and a dedicated in vivo team—isn’t built like most. As an associate professor at MIT, a gastroenterologist at Brigham and Women’s, and an associate member of the Broad Institute, Traverso leads a sprawling research group that spans institutions, disciplines, and floors of lab space at MIT and beyond.

For a lab of this size—spread across MIT, the Broad, the Brigham, the Koch Institute, and The Engine—it feels remarkably personal. Traverso, who holds the Karl Van Tassel (1925) Career Development Professorship, is known for greeting every member by name and scheduling one-on-one meetings every two or three weeks, creating a sense of trust and connection that permeates the lab.

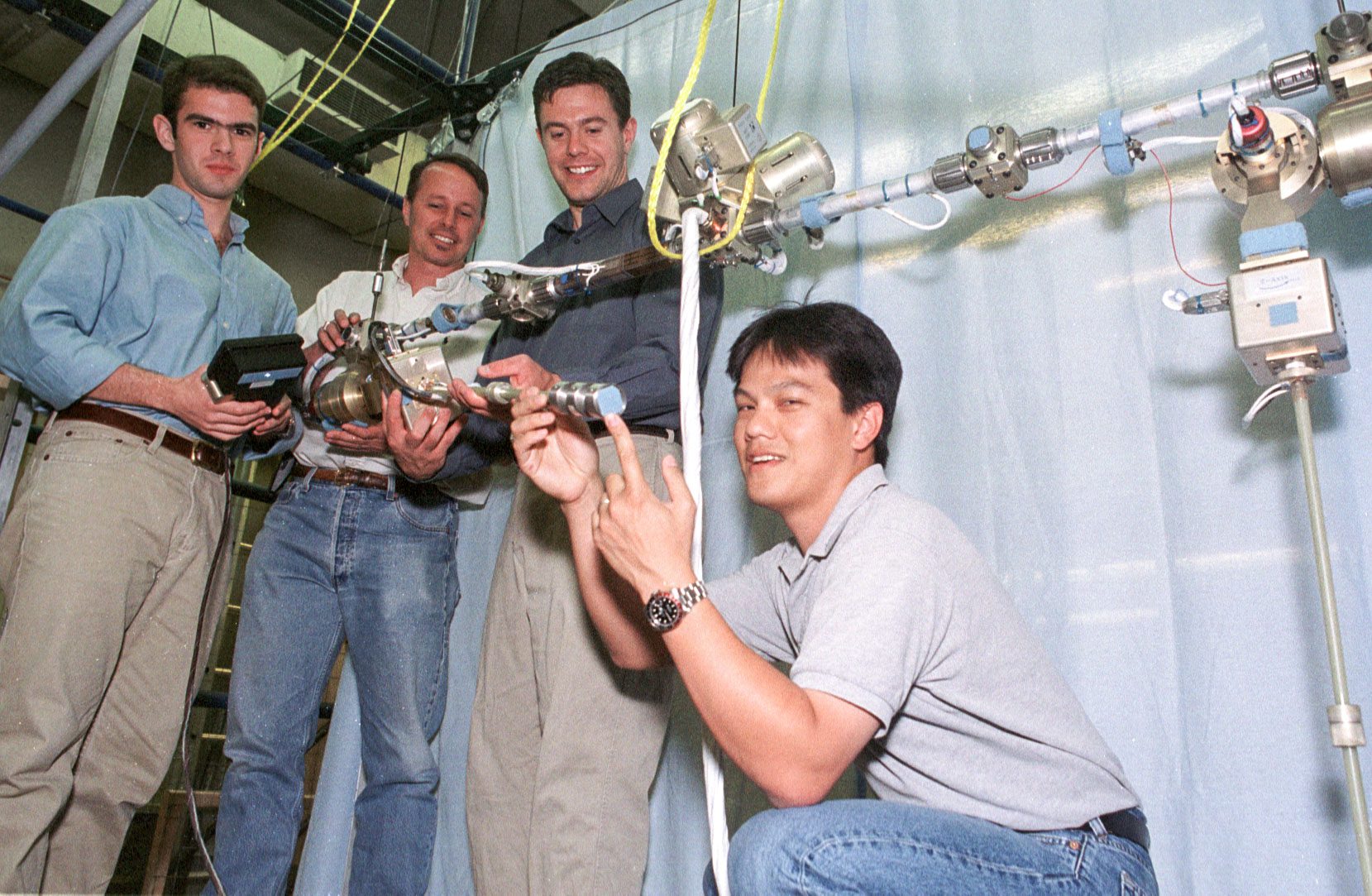

That trust is essential for a team built on radical interdisciplinarity. L4TE brings together mechanical and electrical engineers, biologists, physicians, and veterinarians in a uniquely structured lab with specialized “cores” such as fabrication, bioanalytics, and in vivo teams. The setup means a researcher can move seamlessly from developing a biological formulation to collaborating with engineers to figure out the best way to deliver it—without leaving the lab’s ecosystem. It’s a culture where everyone’s expertise is valued, people pitch in across disciplines, and projects aim squarely at the lab’s central goal: creating medical technologies that not only work in theory but survive the long, unpredictable journey to the patient.

“At the core of what we do is really thinking about the patient, the person, and how we can help make their life better,” Traverso says.

Helping patients ASAP

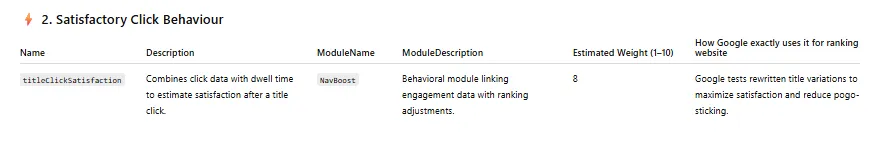

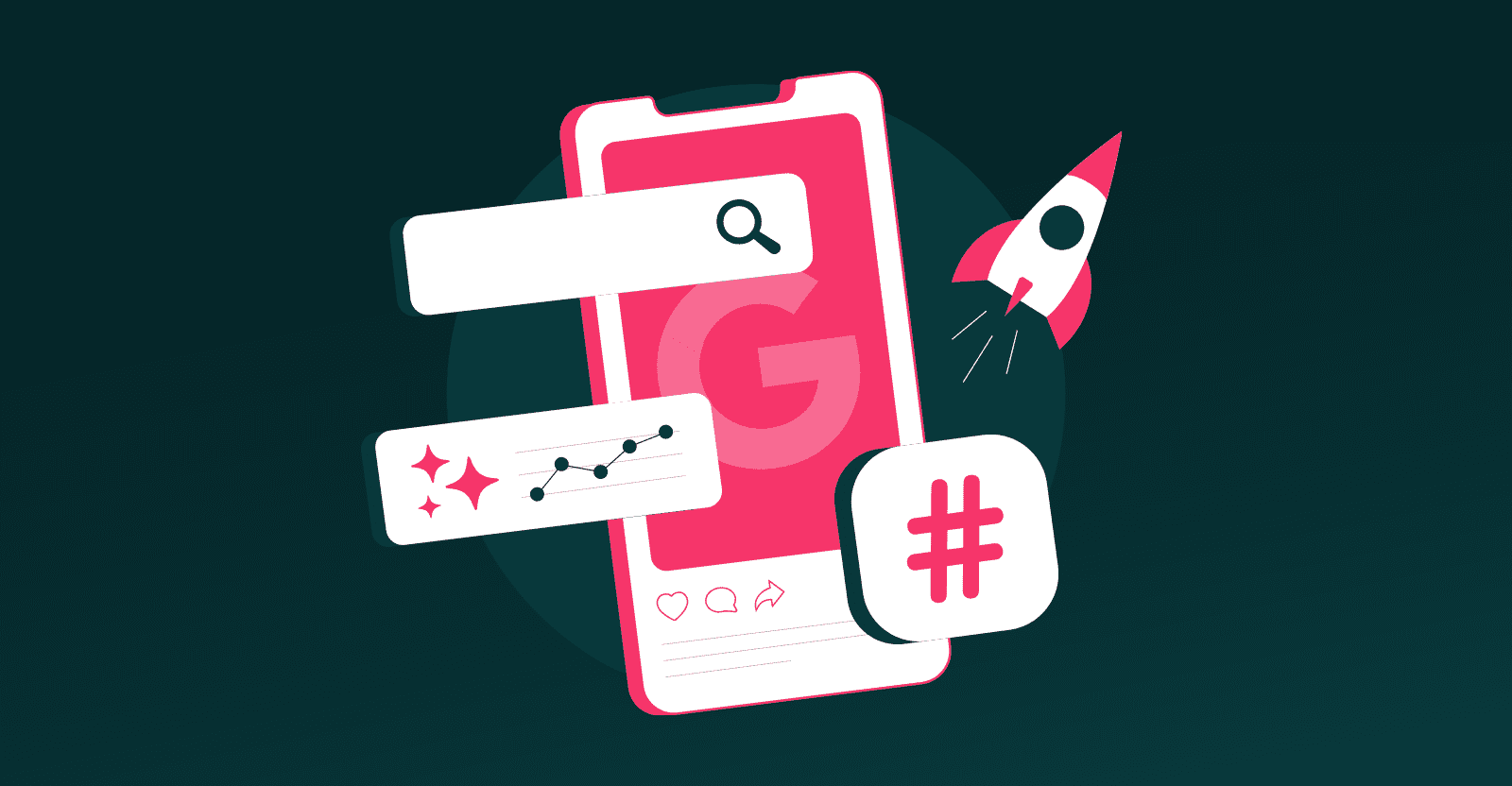

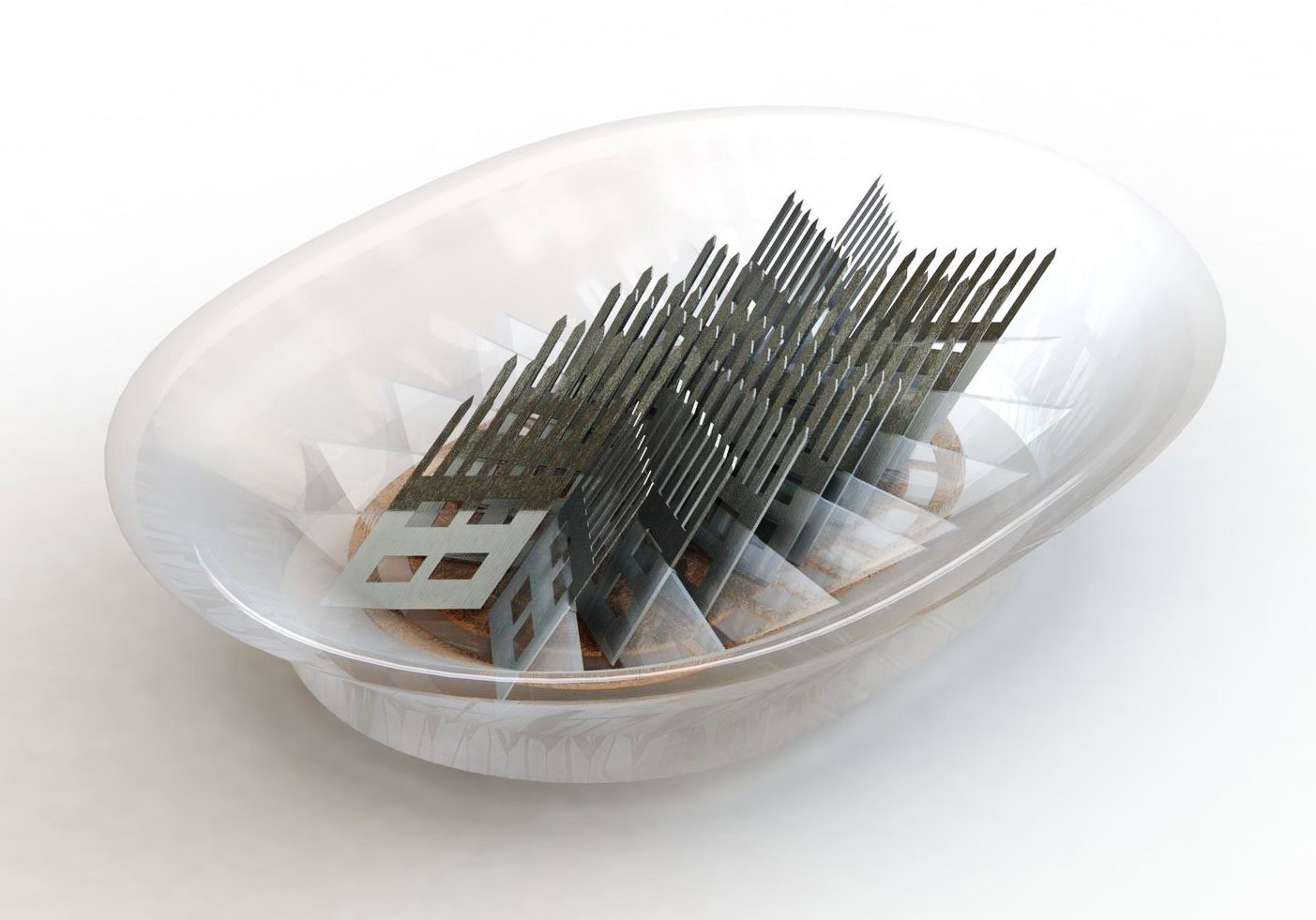

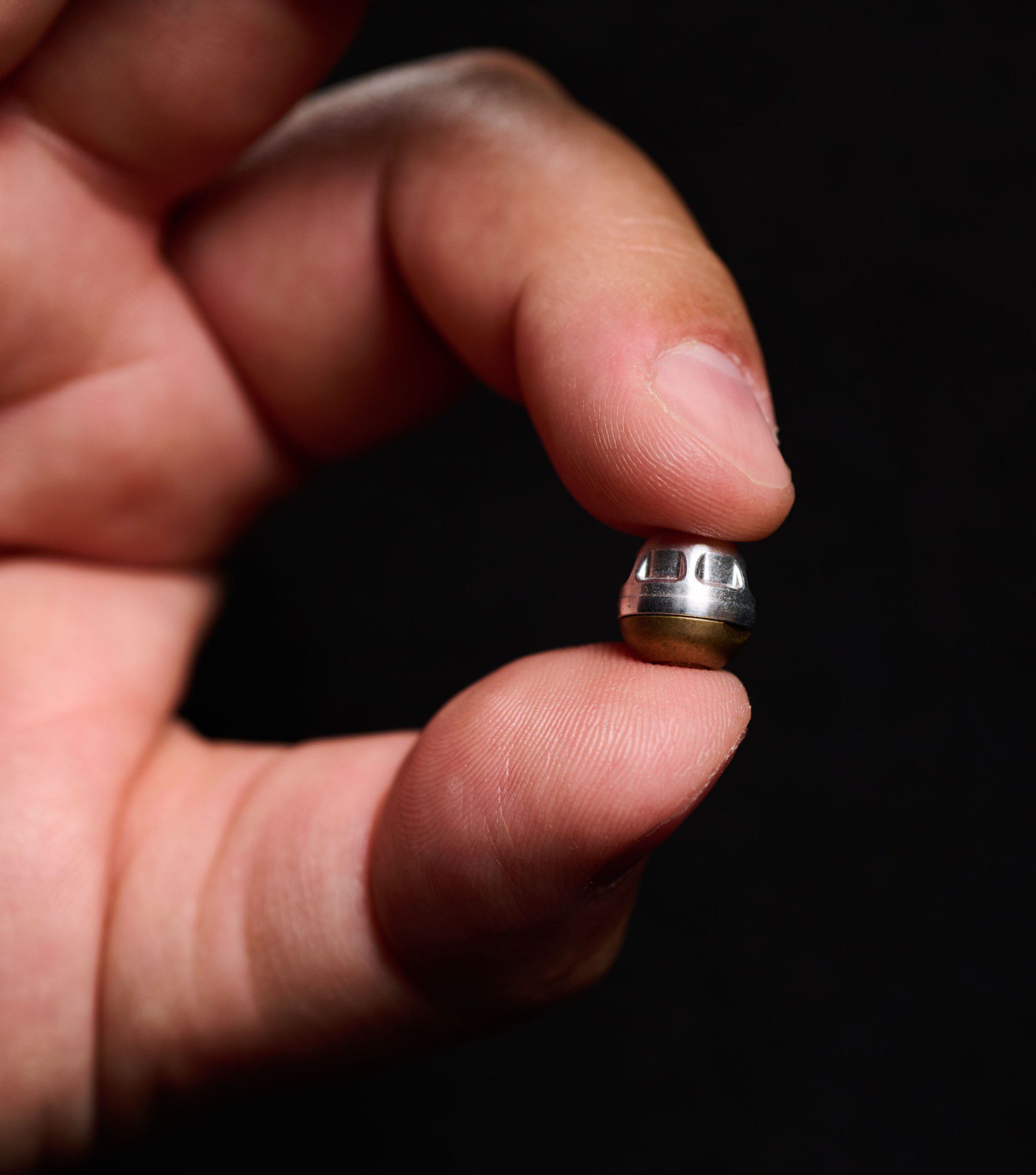

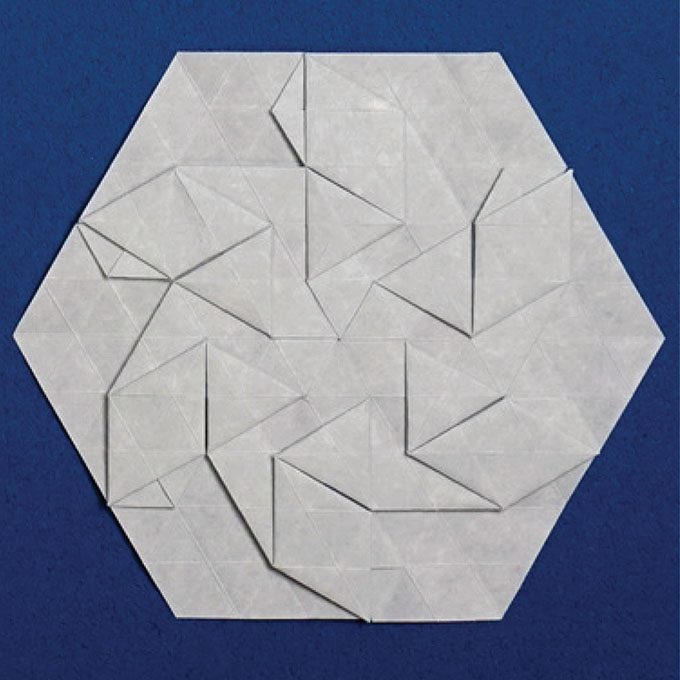

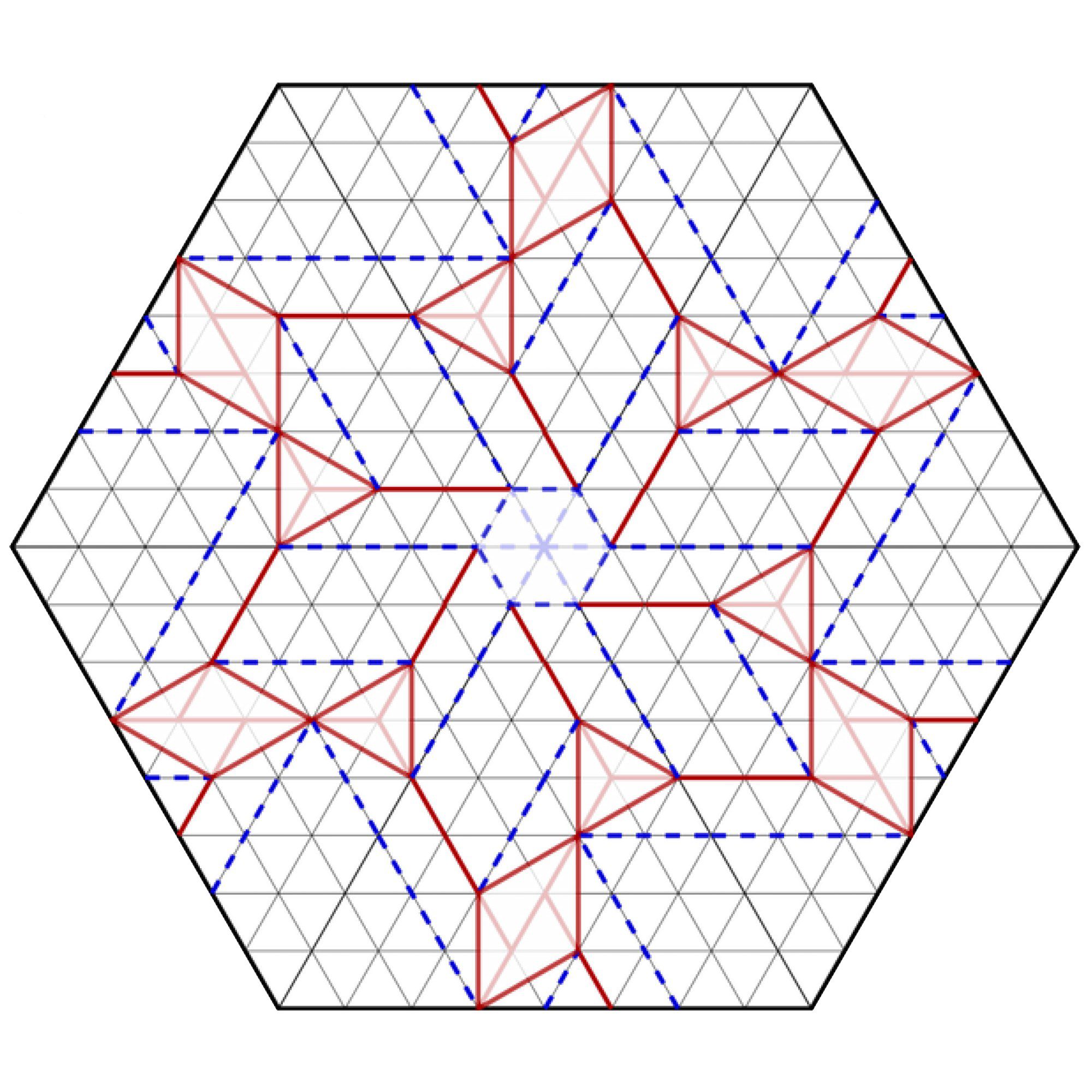

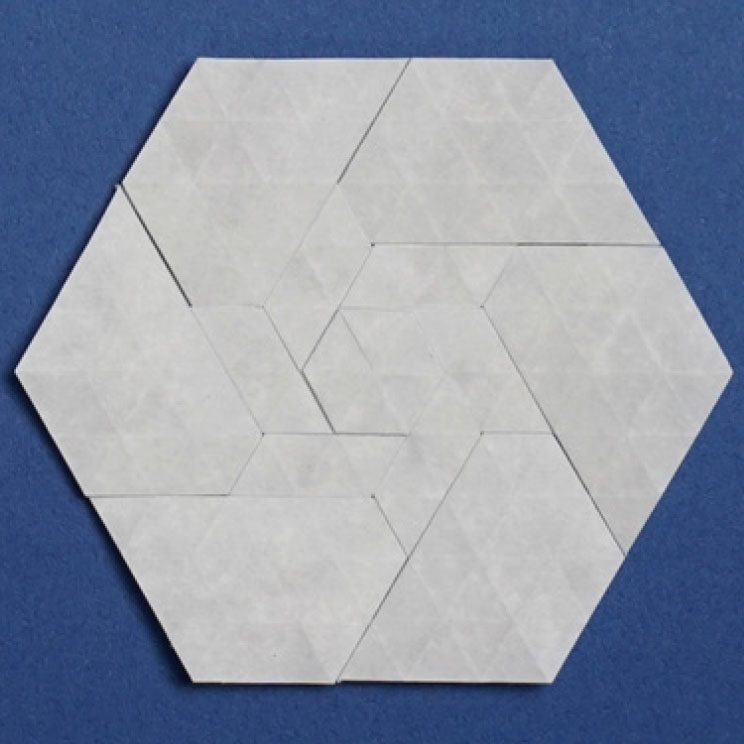

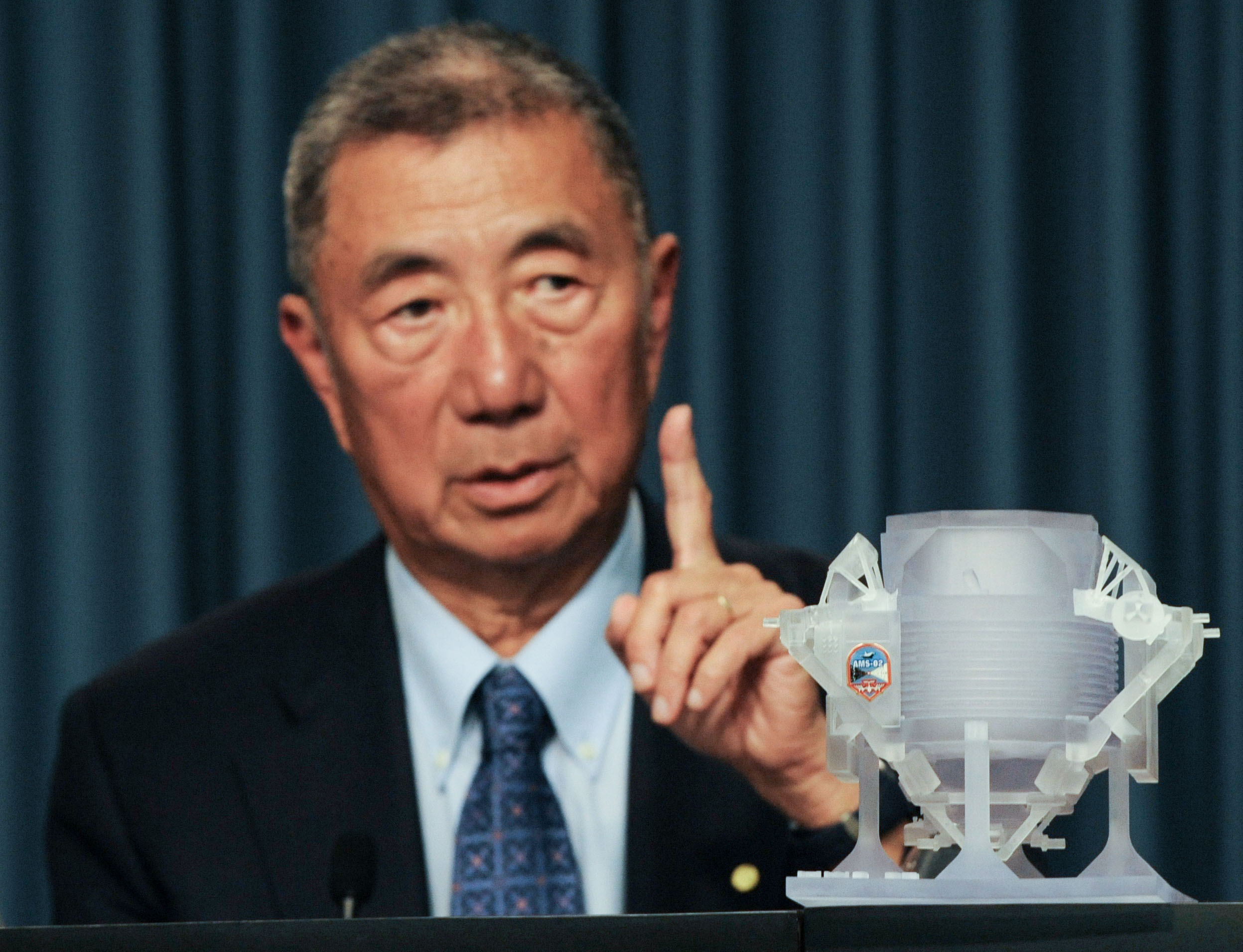

Traverso’s team has developed a suite of novel technologies: a star-shaped capsule that unfolds in the stomach and delivers drugs for days or weeks; a vibrating pill that mimics the feeling of fullness; the technology behind a once-a-week antipsychotic tablet that has completed phase III clinical trials. (See “Designing devices for real-world care,” below.) Traverso has cofounded 11 startups to carry such innovations out of the lab and into the world, each tailored to the technology and patient population it serves.

But the products are only part of the story. What distinguishes Traverso’s approach is the way those products are conceived and built. In many research groups, initial discoveries are developed into early prototypes and then passed on to other teams (sometimes in industry, sometimes in clinical settings) for more advanced testing and eventual commercialization. Traverso’s lab typically links those steps into one continuous process, treating invention, prototyping, testing, iteration, and clinical feedback as the work of a single interdisciplinary team. Engineers sit shoulder to shoulder with physicians, materials scientists with microbiologists. On any given day, a researcher might start the morning discussing an animal study with a veterinarian, spend the afternoon refining a mechanical design, and close the day in a meeting with a regulatory expert. The setup compresses months of back-and-forth between separate teams into the day-to-day collaboration of L4TE.

“This is a lab where if you want to learn something, you can learn everything if you want,” says Troy Ziliang Kang, one of the lab’s research scientists.

The range of problems the lab tackles reflects its interdisciplinary openness. One recent project aimed to replace invasive contraceptive devices such as vaginal rings with a biodegradable injectable that begins as a liquid, solidifies inside the body, and dissolves safely over time.

Another project addresses the challenge of delivering drugs directly to the gut, bypassing the mucus barrier that blocks many treatments. For Kang, whose grandfather died of gastric cancer, the work is personal. He’s developing devices that combine traditional drugs with electroceuticals—therapies that use electrical stimulation to influence cells or tissues.

“What I’m trying to do is find a mechanical approach, trying to see if we can really, through physical and mechanical approaches, break through those barriers and to deliver the electroceuticals and drugs to the gut,” he says.

In a field where translating scientific ideas into practical applications can take years (or stall indefinitely), Traverso, 49, has built a culture designed to shorten that path. Researchers focus on designing clinically relevant devices that can help people in the near term. And they don’t wait for outsiders to take an idea forward. They often initiate collaborations with entrepreneurs, investors, and partners to create startups or push projects directly into early trials, and sometimes they take those steps themselves. The projects at L4TE are ambitious, but the aim is simple: Solve problems that matter and build the tools to make those solutions real.

Nabil Shalabi, an instructor in medicine at Harvard Medical School and Brigham and Women’s Hospital, an associate scientist at the Broad Institute, and a research affiliate in Traverso’s lab, sums up the attitude succinctly: “I would say this lab is really about one thing, and it’s about helping people.”

The physician-inventor

Traverso’s path into medicine and engineering began far from the hospitals and labs where he works today. Born in Cambridge, England, he moved with his family to Peru when he was still young. His father had grown up there in a family with Italian roots; his mother came from Nicaragua. He spent most of his childhood in Lima before political turmoil in Peru led his family to relocate to Toronto when he was 14.

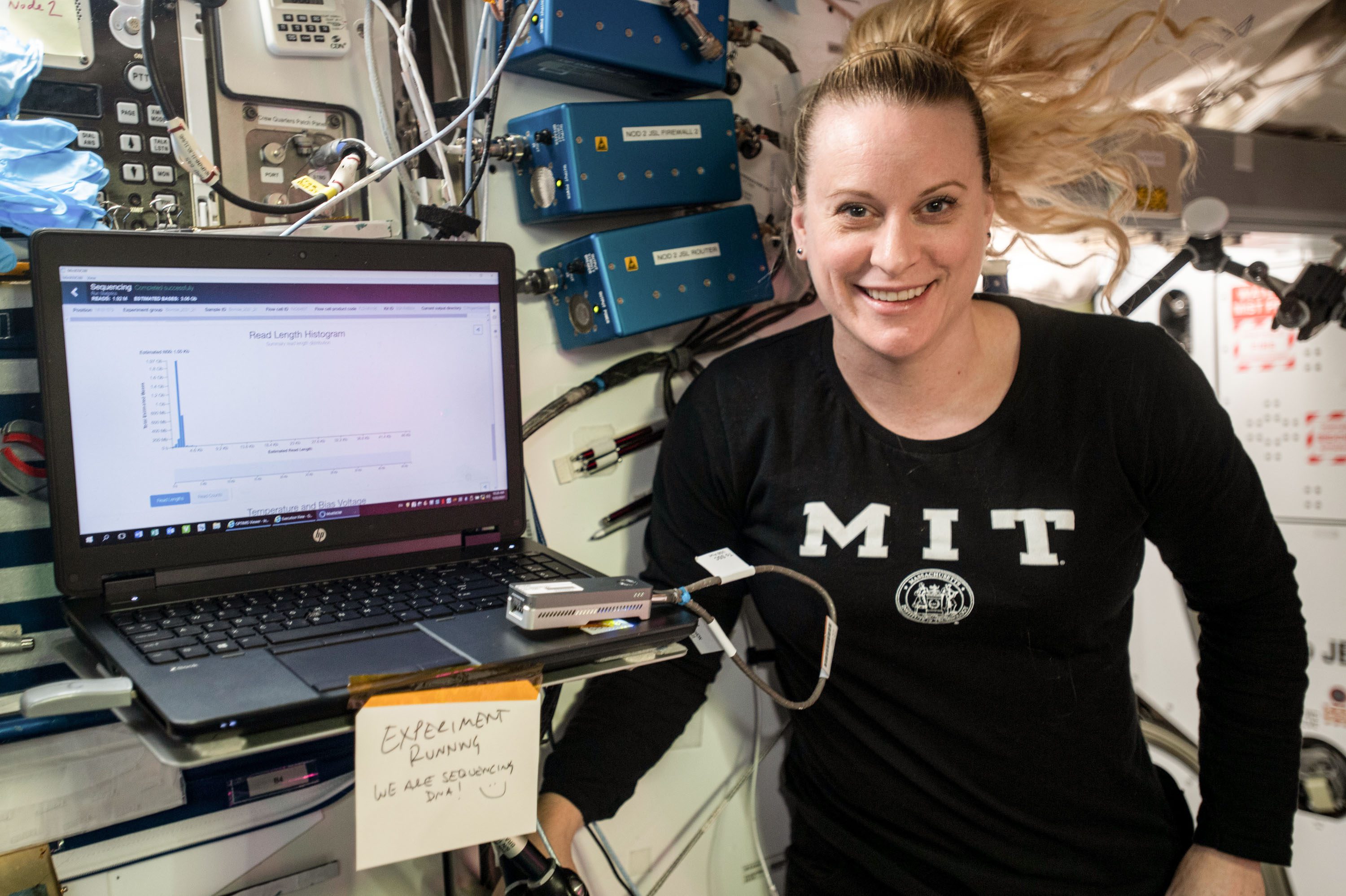

In high school, after finishing most of his course requirements early, he followed the advice of a chemistry teacher and joined a co-op program that would give him a glimpse of some career options. That decision brought him to a genetics lab at Toronto’s Hospital for Sick Children, where he spent his afternoons helping map chromosome 7 and learning molecular techniques like PCR.

“In high school, and even before that, I always enjoyed science,” Traverso says.

After class, he’d ride the subway downtown and step into a world of hands-on science, working alongside graduate students in the early days of genomics.

“I really fell in love with the day-to-day, the process, and how one goes about asking a question and then trying to answer that question experimentally,” he says.

By the time he finished high school, he had already begun to see how science and medicine could intersect. He began an undergraduate medical program at Cambridge University, but during his second year, he reached out to the cancer biologist Bert Vogelstein and joined his lab at Johns Hopkins for the summer. The work resonated. By the end of the internship, Vogelstein asked if he’d consider staying to pursue a PhD. Traverso agreed, pausing his medical training after earning an undergraduate degree in medical sciences and genetics, and moved to Baltimore to begin a doctorate in molecular biology.

As a PhD student, he focused on the early detection of colon cancer, developing a method to identify mutations in stool samples—a concept later licensed by Exact Sciences and used in what is now known as the Cologuard test. After completing his PhD (and earning a spot on Technology Review’s 2003 TR35 list of promising young innovators for that work), he returned to Cambridge to finish medical school and spent the next three years in the UK, including a year as a house officer (the equivalent of a clinical intern in the US).

Traverso chose to pursue clinical training alongside research because he believed each would make the other stronger. “I felt that having the knowledge would help inform future research development,” he says.

So in 2007, as Traverso began a residency in internal medicine at Brigham and Women’s, he also reached out to MIT Institute Professor Robert Langer, ScD ’74. Though Traverso didn’t have a background in Langer’s field of chemical engineering, he saw the value of pairing clinical insight with the materials science research happening in the professor’s lab, which develops polymers, nanoparticles, and other novel materials to tackle biomedical challenges such as delivering drugs precisely to diseased tissue or providing long-term treatment through implanted devices. Langer welcomed him into the group as a postdoctoral fellow.

In Langer’s lab, he found a place where clinical problems sparked engineering solutions, and where those solutions were designed with the patient in mind from the outset. Many of Traverso’s ideas came directly from his work in the hospital: Could medications be delivered in ways that make it easier for patients to take them consistently? Could a drug be redesigned so it wouldn’t require refrigeration in a rural clinic? And caring for a patient who’d swallowed shards of glass that ultimately passed without injury led Traverso to recognize the GI tract’s tolerance for sharp objects, inspiring his work on the microneedle pill.

“A lot of what we do and think about is: How do we make it easier for people to receive therapy for conditions that they may be suffering from?” Traverso says. How can they “really maximize health, whether it be by nutrient enhancement or by helping women have control over their fertility?”

If the lab sometimes runs like a startup incubator, its founder still thinks like a physician.

Scaling up to help more people

Traverso has cofounded multiple companies to help commercialize his group’s inventions. Some target global health challenges, like developing more sustainable personal protective equipment (PPE) for health-care workers. Others take on chronic conditions that require constant dosing—HIV, schizophrenia, diabetes—by developing long-acting oral or injectable therapies.

From the outset, materials, dimensions, and mechanisms are chosen for more than just performance in the lab. The researchers also consider the realities of regulation, manufacturing constraints, and safe use in patients.

“We definitely want to be designing these devices to be made of safe materials or [at a] safe size,” says James McRae, SM ’22, PhD ’25. “We think about these regulatory constraints that could come up in a company setting pretty early in our research process.” As part of his PhD work with Traverso, McRae created a “swallow-and-forget” health-tracking capsule that can stay in the stomach for months, and it doesn’t require surgery to install, as an implant would. The capsule measures tiny shifts in stomach temperature that happen whenever a person eats or drinks, providing a continuous record of eating patterns that’s far more reliable than what external devices or self-reporting can capture. The technology could offer new insight into how drugs such as Ozempic and other GLP-1 therapies change behavior, something that has been notoriously hard to monitor. From “day one,” McRae made sure to involve external companies and regulatory consultants in planning for future human testing.

Traverso describes the lab’s work as a “continuum,” likening research projects to children who are born, nurtured, and eventually sent into the world to thrive and help people.

For lab employee Matt Murphy, a mechanical engineer who manages one of the main mechanical fabrication spaces, that approach is part of the draw. Having worked with researchers on projects spanning multiple disciplines—mechanical engineering, electronics, materials science, biology—he’s now preparing to spin out a company with one of Traverso’s postdocs.

“I feel like I got the PhD experience just working here for four years and being involved in health projects,” he says. “This has been an amazing opportunity to really see the first stages of company formation and how the early research really drives the commercialization of new technology.”

The lab’s specialized “cores” ensure that projects have consistent support and can draw on plenty of expertise, regardless of how many students or postdocs come and go. If a challenge arises in an area in which a lab member has limited knowledge, chances are someone else in the lab has that background and will gladly help. “The culture is so collaborative that everybody wants to teach everybody,” says Murphy.

Creating opportunities

In Traverso’s lab, members are empowered to pursue technically demanding research because the culture he created encourages them to stretch into new disciplines, take ownership of projects, and imagine where their work might go next. For some, that means cofounding a company. For others, it means leaving with the skills and network to shape their next big idea.

“He gives you both the agency and the support,” says Isaac Tucker, an L4TE postdoc based at the Broad Institute. “Gio trusts the leads in his lab to just execute on tasks.” McRae adds that Traverso is adept at identifying “pain points” in research and providing the necessary resources to remove barriers, which helps projects advance efficiently.

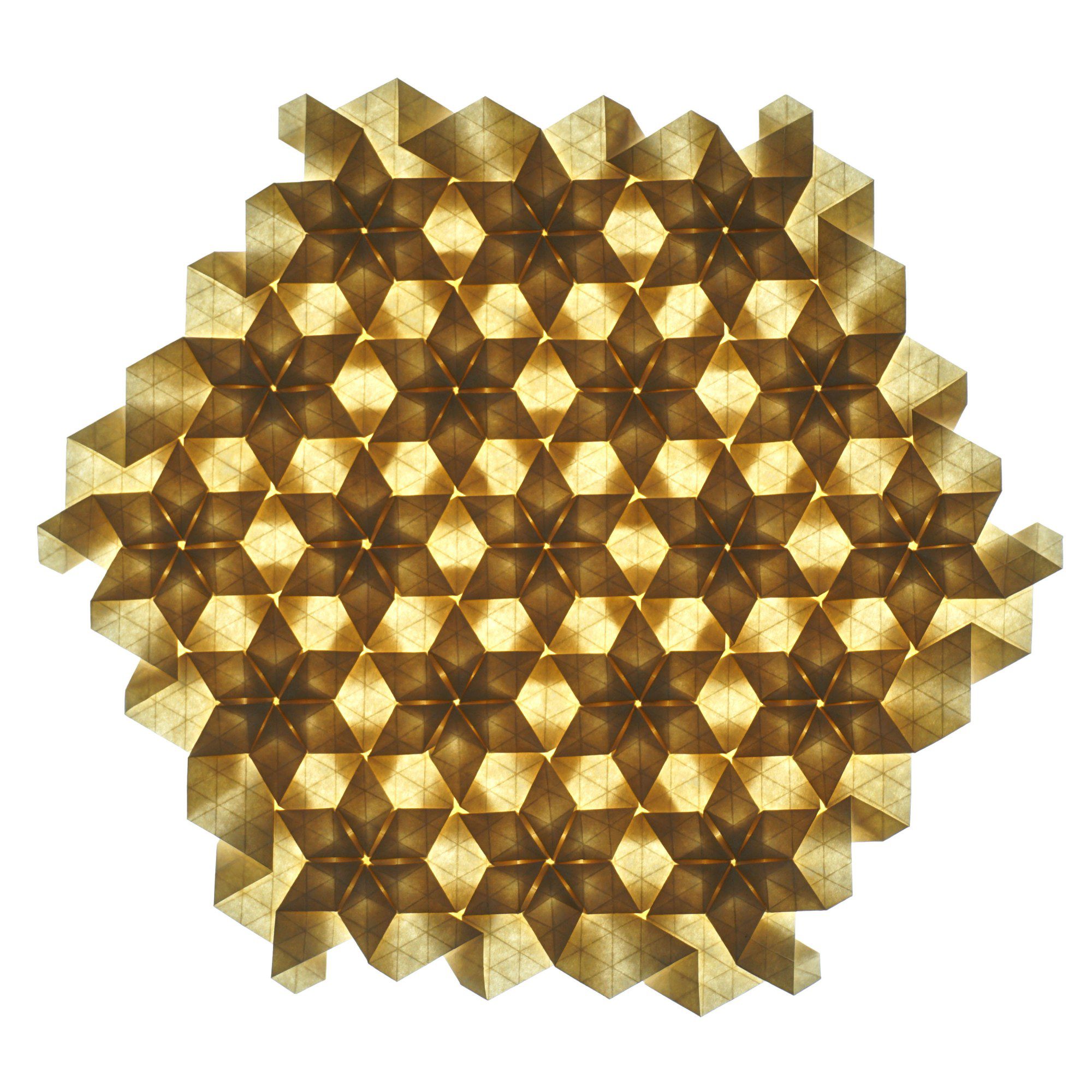

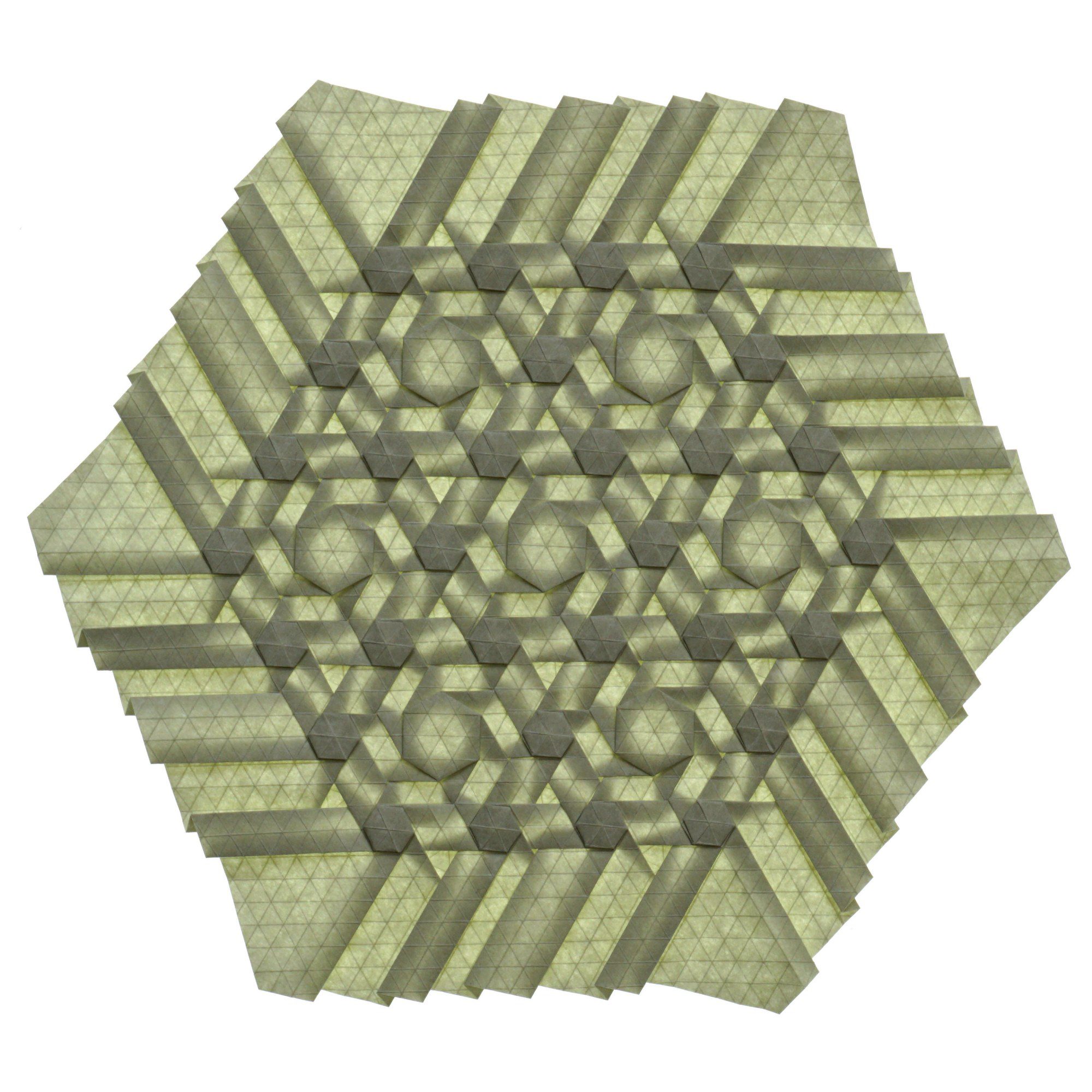

A project led by Kimberley Biggs, another L4TE postdoc, captures how the lab approaches high-stakes problems. With funding from the Gates Foundation, Biggs is developing a way to stabilize therapeutic bacteria used in neonatal and women’s health treatments so they remain effective without refrigeration, which is critical for patients in areas without reliable temperature-controlled supply chains. A biochemist by training, she had never worked on devices before joining the lab, but she collaborated closely with the mechanical fabrication team to embed her bacterial therapy for conditions such as bacterial vaginosis and recurrent urinary tract infections into an intravaginal ring that can release it over time. She says Traverso gave her “an incredible amount of trust” to lead the project from the start but continued to touch base often, making sure there were “no significant bottlenecks” and that she was meeting all the goals she wanted to meet to progress in her career.

Traverso encourages collaboration by putting together project teams that combine engineers, physicians, and scientists from other fields—a strategy he says can be transformative.

“If you only have one expert, they are constrained to what they know,” he explains. But “when you bring an electrical engineer together with a biologist or physician, the way that they’ll be able to see the problem or the challenge is very different.” As a result, “you see things that perhaps you hadn’t even considered were possible,” he says. Moving a project from a concept to a successful clinical trial “takes a village,” he adds. It’s a “complex, multi-step, multi-person, multi-year” process involving “tens if not hundreds of millions of dollars’ worth of effort.”

Good ideas deserve to be tested

The portion of Traverso’s lab housed at The Engine, a “tough tech” incubator where it is the only academic group, occupies a 30-bench private lab alongside shared fabrication spaces, heavy machinery, and communal rooms of specialized lab equipment. The combination of dedicated and shared resources has helped reduce initial equipment expenses for new projects, while the startup-dense environment puts potential collaborators, venture capital, and commercialization pathways within easy reach. Biggs’s work on bacterial treatments is one of the lab’s projects at The Engine; others include electronics for capsule-based devices and an applicator for microneedle patches.

The end of one table houses “blue sky” research on a topic of long-standing interest to Traverso: pasta. Led by PhD student Jack Chen, the multi-pronged project includes using generative AI to help design new pasta shapes with superior sauce adhesion. Chen and collaborators ranging from executive chefs to experts in fluid dynamics apply the same analytical rigor to this research that they bring to medical devices. It’s playful work, but it’s also a microcosm of the lab’s culture: interdisciplinary to its core, unafraid to cross boundaries, and grounded in Traverso’s belief that good ideas deserve to be tested—even if they fail.

“I’d say the majority of things that I’ve ever been involved in failed,” he says. “But I think it depends on how you define failure.” He says that most of the projects he worked on for the first year and a half of his own PhD either just “kind of worked” or didn’t work at all—causing him to step back and take a different approach that ultimately led him to develop the highly effective technique now used in the Cologuard test. “Even if a hypothesis that we had didn’t work out, or didn’t work out as we thought it might, the process itself, I think, is valuable,” he says. So his philosophy is to “fail well and fail fast and move on.”

In practice, that means encouraging students and postdocs to take on big, uncertain problems, knowing a dead end isn’t the end of their careers—just an opportunity to learn how to navigate the next challenge better.

McRae remembers when a major program—two or three years in the making—abruptly changed course after its sponsor shifted priorities. The team had been preparing a device for safety testing in humans; suddenly, the focus on that goal was gone. Rather than shelving the work, Traverso urged the group to use it as an opportunity to “be a little more creative again” and explore new directions, McRae says. That pivot sparked his work on an autonomous drug delivery system, opening lines of research the team hadn’t pursued before. In this system, patients swallow two capsules that interact in the stomach. When a sensor capsule detects an abnormal signal, it directs a second capsule to release a drug.

“When things aren’t working, just make sure they didn’t work and you’re confident why they didn’t work,” Traverso says he tells his students. “Is it the biology? Is it the materials science? Is it the mechanics that aren’t just aligning for whatever reason?” He models that diagnostic mindset—and the importance of preserving momentum.

“He will often say, ‘I have a focus on not wasting time. Time is something that you can’t buy back. Time is something that you can’t save and bank for later,’” says Biggs. “And so whenever you do encounter some sort of bottleneck, he is so supportive in trying to fix that.”

Traverso’s teaching reflects the same interplay between invention, risk, and real-world impact. In Translational Engineering, one of his graduate-level courses at MIT, he invites experts from the FDA, hospitals, and startups to speak about the realities of bringing medical technology to the world.

“He shared his network with us,” says Murphy, who took the course while working in the lab. “Now that I’m trying to spin out a company, I can reach out to these people.”

Although he now spends most of his time on research and teaching, Traverso maintains an inpatient practice at the Brigham, participating in the consult service—a team of gastroenterology fellows and medical students supervising patient care—for several weeks a year. Staying connected to patients keeps the problems concrete and helps guide decisions on which puzzles to tackle in the lab.

“I think there are certain puzzles in front of us, and I do gravitate to areas that have a solution that will help people in the near term,” he says.

For Traverso, the measure of success is not the complexity of the engineering but the efficacy of the result. The goal is always a therapy that works for the people who need it, wherever they are.

Designing devices for real-world care

A sampling of recent research from Traverso’s Lab for Translational Engineering

A mechanical adhesive device inspired by sucker fish sticks to soft, wet surfaces; it could be used to deliver drugs in the GI tract or to monitor aquatic environments.

A pill based on Traverso’s technology that can be taken once a week gradually releases medication within the stomach. It’s designed for patients with conditions like schizophrenia, hypertension, and asthma who find it difficult to take medicine every day.

A new delivery method for injectable drugs uses smaller needles and fewer shots. Drugs injected as a suspension of tiny crystals assemble into a “depot” under the skin that could last for months or years.

A protein from tiny tardigrades, also known as “water bears,” could protect healthy cells from radiation damage during cancer treatments, reducing severe side effects that many patients find too difficult to tolerate. Injecting messenger RNA encoding this protein into mice produced enough of the protein to protect healthy cells.

An inflatable gastric balloon could be enlarged before a meal to prevent overeating and help people lose weight.

Inspired by the way squid use jets to shoot ink clouds, a capsule releases a burst of drugs directly into the GI tract. It could offer an alternative to injecting drugs such as insulin, as well as vaccines and therapies to treat obesity and other metabolic disorders.

An implantable device could reverse opioid overdoses. Placed under the skin, it rapidly releases naloxone when its sensor detects an overdose.

A screening device for cervical cancer offers a clear line of sight to the cervix in a way that causes less discomfort than a traditional speculum. It’s affordable enough for use in low- and middle-income countries.