Google Gemini privacy support pages warn that information shared with Gemini Apps may be read and annotated by human reviewers and may also be included in AI training datasets. Here is what you need to know and what you can do to prevent it.

Google Gemini

Gemini is the name of the technology underlying the Google Gemini Android app available on Google Play, a feature in the Google app for iPhone, and a standalone chatbot called Gemini Advanced.

Gemini on Android, iPhone & Gemini Advanced

Gemini on mobile devices and the standalone chatbot are multimodal, which means users can ask questions using images, audio, or text. Gemini can answer questions about things in the real world, perform actions, provide information about an object in a photo, or explain how to use it.

All of that data, whether images, audio, or text, is submitted to Google, and some of it could be reviewed by humans or included in AI training datasets.

Does Gemini Use Gemini Data To Create Training Datasets?

Gemini Apps uses past conversations and location data to generate responses, which is normal and reasonable. But Gemini also collects and stores that same data to improve other Google products.

This is what the privacy explainer page says about it:

“Google collects your Gemini Apps conversations, related product usage information, info about your location, and your feedback. Google uses this data, consistent with our Privacy Policy, to provide, improve, and develop Google products and services and machine learning technologies, including Google’s enterprise products such as Google Cloud.”

Google’s privacy explainer says that the data is stored in a user’s Google Account for up to 18 months by default, and users can change that storage period to three months or 36 months.

There’s also a way to turn off saving data to a user’s Google Account:

“If you want to use Gemini Apps without saving your conversations to your Google Account, you can turn off your Gemini Apps Activity.

…Even when Gemini Apps Activity is off, your conversations will be saved with your account for up to 72 hours. This lets Google provide the service and process any feedback. This activity won’t appear in your Gemini Apps Activity.”

But there’s an exception to the above rule that lets Google hold on to the data for even longer.

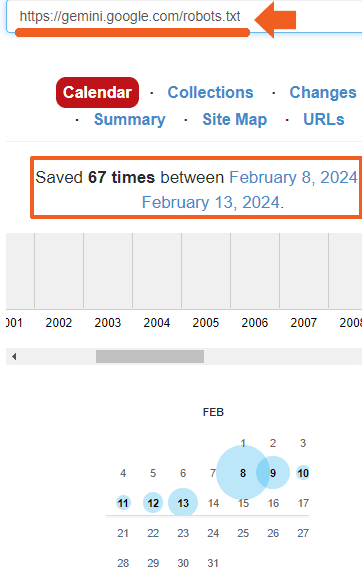

Human Reviews Of User Gemini Data

Google’s Gemini privacy support page explains that user data that is reviewed and annotated by human reviewers is retained by Google for up to three years.

“How long is reviewed data retained

Gemini Apps conversations that have been reviewed by human reviewers (as well as feedback and related data like your language, device type, or location info) are not deleted when you delete your Gemini Apps activity because they are kept separately and are not connected to your Google Account. Instead, they are retained for up to 3 years.”

The same support page explains that human-reviewed and annotated data is used to create datasets for chatbots:

“These are then used to create a better dataset for generative machine-learning models to learn from so our models can produce improved responses in the future.”

Google Gemini Warning: Don’t Share Confidential Data

Google’s Gemini privacy explainer page warns that users should not share confidential information.

It explains:

“To help with quality and improve our products (such as generative machine-learning models that power Gemini Apps), human reviewers read, annotate, and process your Gemini Apps conversations. We take steps to protect your privacy as part of this process. This includes disconnecting your conversations with Gemini Apps from your Google Account before reviewers see or annotate them.

Please don’t enter confidential information in your conversations or any data you wouldn’t want a reviewer to see or Google to use to improve our products, services, and machine-learning technologies.

…Don’t enter anything you wouldn’t want a human reviewer to see or Google to use. For example, don’t enter info you consider confidential or data you don’t want to be used to improve Google products, services, and machine-learning technologies.”

There is a way to keep all that from happening. Turning off Gemini Apps Activity stops user conversations from being shown to human reviewers, which gives users a way to opt out of having their data stored and used to create datasets.

However, even with Gemini Apps Activity turned off, Google still stores conversations for up to 72 hours, which it says lets it provide the service and process any feedback. Separately, data can also be shared with other Google services and with third-party services that a user interacts with while using Gemini.

Using Gemini Can Lead To 3rd Party Data Sharing

Using Gemini can start a chain reaction of other apps using and storing user conversations, location data and other information.

The Gemini privacy support page explains:

“If you turn off this setting or delete your Gemini Apps activity, other settings, like Web & App Activity or Location History, may continue to save location and other data as part of your use of other Google services.

In addition, when you integrate and use Gemini Apps with other Google services, they will save and use your data to provide and improve their services, consistent with their policies and the Google Privacy Policy. If you use Gemini Apps to interact with third-party services, they will process your data according to their own privacy policies.”

The same Gemini privacy page links to a page for requesting removal of content, as well as to a Gemini Apps FAQ and a Gemini Apps Privacy Hub to learn more.

Gemini Use Comes With Strings

Many of the ways Gemini uses data serve legitimate purposes, including submitting information for human review. But Google’s own Gemini support pages make it very clear that users should not share any confidential information, because a human reviewer might see it or it might be included in an AI training dataset.

Featured Image by Judith Linine / Shutterstock.com