7 Things To Look For In An SEO-Friendly WordPress Host

This post was sponsored by Bluehost. The opinions expressed in this article are the sponsor’s own.

When trying to improve your WordPress site’s search rankings, hosting might not be the first thing on your mind.

But your choice of hosting provider can significantly impact your SEO efforts.

A poor hosting setup can slow down your site, compromise its stability and security, and drain valuable time and resources.

The answer? Choosing the right WordPress hosting provider.

Here are seven essential features to look for in an SEO-friendly WordPress host.

1. Reliable Uptime & Speed For Consistent Performance

Your website’s uptime and speed can significantly influence its rankings and the success of your SEO strategies.

Users don’t like sites that suffer from significant downtime or sluggish load speeds. Not only are these sites inconvenient, but they also reflect negatively on the brand and its products and services, making them appear less trustworthy and of lower quality.

For these reasons, Google values websites that load quickly and reliably. A site with frequent downtime or slow load times can slip in search results as well as frustrate users.

Reliable hosting with minimal downtime and fast server response times helps ensure that both users and search engines can access your content seamlessly.

Performance-focused infrastructure, optimized for fast server responses, is essential for delivering a smooth and engaging user experience.

When evaluating hosting providers, look for high uptime guarantees through a robust Service Level Agreement (SLA), which assures site availability and speed.
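To make an uptime guarantee concrete, it can help to translate the percentage into the downtime it still permits. The sketch below is plain arithmetic, assuming a 30-day billing period; the function name and the sample percentages are illustrative and are not taken from any provider’s SLA.

```python
def allowed_downtime_minutes(uptime_percent: float, days: int = 30) -> float:
    """Return the minutes of downtime permitted over `days` at the given uptime."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    total_minutes = days * 24 * 60  # 43,200 minutes in a 30-day period
    return total_minutes * (100 - uptime_percent) / 100


# Illustrative uptime levels, not quotes from any specific host.
for sla in (99.0, 99.9, 99.99, 100.0):
    minutes = allowed_downtime_minutes(sla)
    print(f"{sla}% uptime allows about {minutes:.1f} minutes of downtime per 30 days")
```

Even a guarantee that sounds high, such as 99%, still leaves room for several hours of downtime each month, which is why the exact figure in the SLA is worth checking.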

Bluehost Cloud, for instance, offers a 100% SLA for uptime, response time, and resolution time.

Built specifically with WordPress users in mind, Bluehost Cloud leverages an infrastructure optimized to deliver the speed and reliability that WordPress sites require, enhancing both SEO performance and user satisfaction. This guarantee provides you with peace of mind.

Your site will remain accessible and perform optimally around the clock, and you’ll spend less time troubleshooting or working with your host’s support team to get your site back online.

2. Data Center Locations & CDN Options For Global Reach

Fast load times are crucial not only for providing a better user experience but also for reducing bounce rates and boosting SEO rankings.

Since Google prioritizes websites that load quickly for users everywhere, having data centers in multiple locations and Content Delivery Network (CDN) integration is essential for WordPress sites with a global audience.

To ensure your site loads quickly for all users, no matter where they are, choose a WordPress host with a distributed network of data centers and CDN support. Check that its CDN options and data center locations align with your audience’s geographic distribution.

This setup allows your content to reach users swiftly across different regions, enhancing both user satisfaction and search engine performance.

Bluehost Cloud integrates with a CDN to accelerate content delivery across the globe. This means that whether your visitors are in North America, Europe, or Asia, they’ll experience faster load times.

By leveraging global data centers and a CDN, Bluehost Cloud ensures your site’s SEO remains strong, delivering a consistent experience for users around the world.

3. Built-In Security Features To Protect From SEO-Damaging Attacks

Security is essential for your brand, your SEO, and overall site health.

Websites that experience security breaches, malware, or frequent hacking attempts can be penalized by search engines, potentially suffering from ranking drops or even removal from search indexes.

Therefore, it’s critical to select a host that offers strong built-in security features to safeguard your website and its SEO performance.

When evaluating hosting providers, look for options that include security features such as SSL certificates, automated backups, and malware scanning.

Bluehost Cloud, for example, offers comprehensive security features designed to protect WordPress sites, including free SSL certificates to encrypt data, automated daily backups, and regular malware scans.

These features help maintain a secure environment, preventing security issues from impacting your potential customers, your site’s SEO, and ultimately, your bottom line.

With Bluehost Cloud, your site’s visitors, data, and search engine rankings remain secure, providing you with peace of mind and a safe foundation for SEO success.

4. Optimized Database & File Management For Fast Site Performance

A poorly managed database can slow down site performance, which affects load times and visitor experience. Therefore, efficient data handling and optimized file management are essential for fast site performance.

Choose a host with advanced database and file management tools, as well as caching solutions that enhance site speed. Bluehost Cloud supports WordPress sites with advanced database optimization, ensuring quick, efficient data handling even as your site grows.

With features like server-level caching and optimized databases, Bluehost Cloud is built to handle WordPress’ unique requirements, enabling your site to perform smoothly without additional plugins or manual adjustments.

Bluehost Cloud contributes to a better user experience and a stronger SEO foundation by keeping your WordPress site fast and efficient.

5. SEO-Friendly, Scalable Bandwidth For Growing Sites

As your site’s popularity grows, so do its bandwidth requirements. Scalable or unmetered bandwidth is vital to handle traffic spikes without slowing down your site and impacting your SERP performance.

High-growth websites, in particular, benefit from hosting providers that offer flexible bandwidth options, ensuring consistent speed and availability even during peak traffic.
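A rough way to see how bandwidth needs grow with traffic is to multiply average page weight by pageviews. The sketch below assumes that simple model and uses made-up figures; it ignores caching and CDN offload, so treat the result as an upper-bound estimate rather than a measurement.

```python
def monthly_bandwidth_gb(avg_page_size_mb: float, monthly_pageviews: int) -> float:
    """Estimate monthly transfer in GB (1 GB = 1000 MB) from page weight and pageviews."""
    return avg_page_size_mb * monthly_pageviews / 1000


# Hypothetical figures for illustration only.
normal = monthly_bandwidth_gb(avg_page_size_mb=2.0, monthly_pageviews=50_000)
spike = monthly_bandwidth_gb(avg_page_size_mb=2.0, monthly_pageviews=500_000)

print(f"Typical month: about {normal:.0f} GB")
print(f"Month with a 10x traffic spike: about {spike:.0f} GB")
```

Under these assumptions, a tenfold jump in traffic means a tenfold jump in transfer, which is the situation a capped bandwidth plan handles poorly.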

To avoid slowdowns during traffic spikes, select a hosting provider that offers scalable or unmetered bandwidth as part of its package. Bluehost Cloud’s unmetered bandwidth, for instance, is designed to accommodate high-traffic sites without affecting load times or user experience.

This ensures that your site remains responsive and accessible during high-traffic periods, supporting your growth and helping you maintain your SEO rankings.

For websites anticipating growth, unmetered bandwidth with Bluehost Cloud provides a reliable, flexible solution to ensure long-term performance.

6. WordPress-Specific Support & SEO Optimization Tools

WordPress has unique needs when it comes to SEO, making specialized hosting support essential.

Hosts that cater specifically to WordPress provide an added advantage by offering tools and configurations built for the platform, such as staging environments and one-click installations.

WordPress-specific hosting providers also have an entire team of knowledgeable support and technical experts who can help you significantly improve your WordPress site’s performance.

Bluehost Cloud is a WordPress-focused hosting solution that offers priority, 24/7 support from WordPress experts, ensuring any issue you encounter is dealt with effectively.

Additionally, Bluehost’s staging environments enable you to test changes and updates before going live, reducing the risk of SEO-impacting errors.

Switching to Bluehost is easy and affordable, too.

Bluehost offers a migration service designed to make switching hosts simple and stress-free. Our dedicated migration support team handles the entire transfer process, ensuring your WordPress site’s content, settings, and configurations are moved safely and accurately.

Currently, Bluehost also covers all migration costs, so you can make the switch with zero out-of-pocket expenses. We’ll credit the remaining cost of your existing contract, so you can actually save money or even gain credit by switching.

7. Integrated Domain & Site Management For Simplified SEO Administration

SEO often involves managing domain settings, redirects, DNS configurations, and SSL updates, which can become complicated without centralized management.

An integrated hosting provider that allows you to manage your domain and hosting in one place simplifies these SEO tasks and makes it easier to maintain a strong SEO foundation.

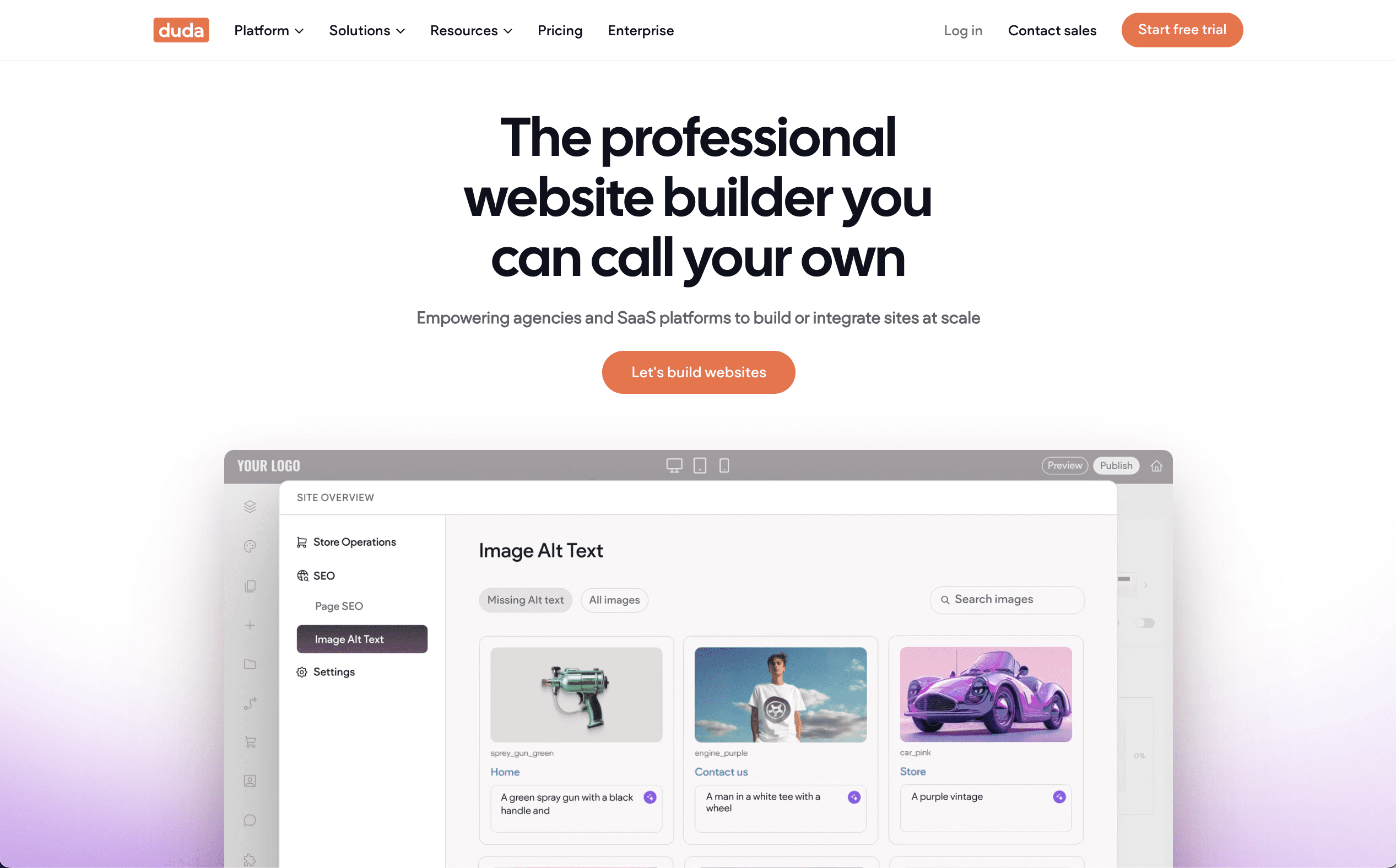

When selecting a host, look for providers that integrate domain management with hosting. Bluehost offers a streamlined experience, allowing you to manage both domains and hosting from a single dashboard.

SEO-related site administration becomes more manageable, and you can focus on the things you do best: growth and optimization.
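Redirects are one of the administration tasks mentioned above that is easy to audit with a short script. The sketch below assumes your redirect rules can be exported as a simple source-to-target mapping; the paths, the function name, and the 10-hop limit are hypothetical choices for illustration. It flags chains, where one redirect points to another, and loops.

```python
def resolve_redirect(redirects: dict[str, str], start: str, max_hops: int = 10) -> tuple[str, int]:
    """Follow `start` through the redirect map and return (final_path, hop_count).

    Raises ValueError on a loop or when the chain exceeds `max_hops`.
    """
    seen = {start}
    current = start
    hops = 0
    while current in redirects:
        current = redirects[current]
        hops += 1
        if current in seen:
            raise ValueError(f"redirect loop detected at {current}")
        if hops > max_hops:
            raise ValueError(f"more than {max_hops} hops starting from {start}")
        seen.add(current)
    return current, hops


# Hypothetical redirect rules, for illustration only.
rules = {
    "/old-page": "/interim-page",
    "/interim-page": "/new-page",
    "/promo": "/new-page",
}

for source in rules:
    final, hops = resolve_redirect(rules, source)
    if hops > 1:
        print(f"{source} takes {hops} hops to reach {final}; consider pointing it straight at {final}")
```

Each extra hop adds a round trip before the page loads, so collapsing chains into single redirects is a small cleanup that supports the speed goals discussed earlier.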

Find An SEO-Friendly WordPress Host

Choosing an SEO-friendly WordPress host can have a significant impact on your website’s search engine performance, user experience, and long-term growth.

By focusing on uptime, global data distribution, robust security, optimized database management, scalable bandwidth, WordPress-specific support, and integrated domain management, you create a solid foundation that supports both SEO and usability.

Ready to make the switch?

As a trusted WordPress partner with over 20 years of experience, Bluehost offers a hosting solution designed to meet the unique demands of WordPress sites big and small.

Our dedicated migration support team handles every detail of your transfer, ensuring your site’s content, settings, and configurations are moved accurately and securely.

Plus, we offer eligible customers a credit toward their remaining contracts, making the transition to Bluehost not only seamless but also cost-effective.

Learn how Bluehost Cloud can elevate your WordPress site. Visit us today to get started.

Image Credits

Featured Image: Image by Bluehost. Used with permission.

In-Post Image: Image by Bluehost. Used with permission.