How AI’s Geo-Identification Failures Are Rewriting International SEO via @sejournal, @motokohunt

AI search isn’t just changing what content ranks; it’s quietly redrawing where your brand appears to belong. As large language models (LLMs) synthesize results across languages and markets, they blur the boundaries that once kept content localized. Traditional geographic signals such as hreflang, ccTLDs, and regional schema are being bypassed, misread, or overwritten by global defaults. The result: your English site becomes the “truth” for all markets, while your local teams wonder why their traffic and conversions are vanishing.

This article focuses primarily on search-grounded AI systems such as Google’s AI Overviews and Bing’s generative search, where the problem of geo-identification drift is most visible. Purely conversational AI may behave differently, but the core issue remains: when authority signals and training data skew global, synthesis often loses geographic context.

The New Geography Of Search

In classic search, location was explicit:

- IP, language, and market-specific domains dictated what users saw.

- Hreflang told Google which market variant to serve.

- Local content lived on distinct ccTLDs or subdirectories, supported by region-specific backlinks and metadata.

AI search breaks this deterministic system.

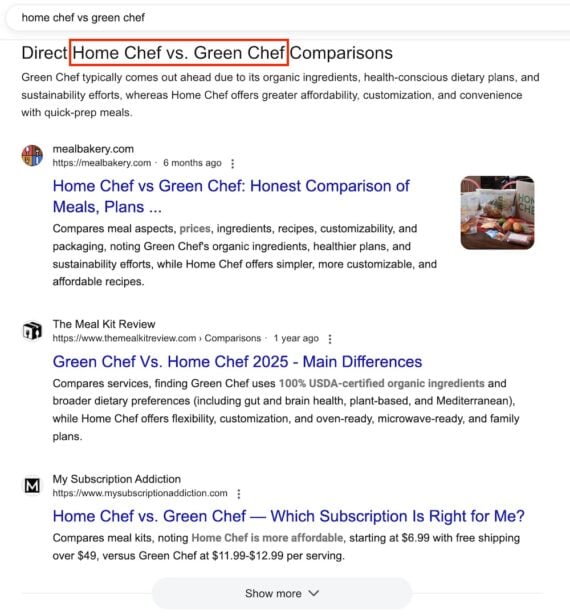

In a recent article on “AI Translation Gaps,” international SEO Blas Giffuni demonstrated this problem with the query “proveedores de químicos industriales.” Rather than surfacing local-market websites listing industrial chemical suppliers in Mexico, the engine presented a translated list of US suppliers, some of which either did not do business in Mexico or did not meet local safety or business requirements. A generative engine doesn’t just retrieve documents; it synthesizes an answer using whatever language or source it finds most complete.

If your local pages are thin, inconsistently marked up, or overshadowed by global English content, the model will simply pull from the worldwide corpus and rewrite the answer in Spanish or French.

On the surface, it looks localized. Underneath, it’s English data wearing a different flag.

Why Geo-Identification Is Breaking

1. Language ≠ Location

AI systems treat language as a proxy for geography. A Spanish query could represent Mexico, Colombia, or Spain. If your signals don’t specify which markets you serve through schema, hreflang, and local citations, the model lumps them together.

When that happens, your strongest instance wins. And nine times out of 10, that’s your main English language website.
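As a minimal sketch of what market-specific hreflang annotations look like, the snippet below generates one `<link rel="alternate">` tag per language-region pair plus an `x-default` fallback. The domain, paths, and market list are placeholders, not taken from any real site; the point is only that `es-MX`, `es-CO`, and `es-ES` are declared separately rather than as a single `es`.

```python
from html import escape

# Hypothetical market-to-URL map; the domain and paths are placeholders.
MARKET_URLS = {
    "es-MX": "https://www.example.com/mx/",
    "es-CO": "https://www.example.com/co/",
    "es-ES": "https://www.example.com/es/",
    "en-US": "https://www.example.com/us/",
}
X_DEFAULT = "https://www.example.com/"


def hreflang_tags(market_urls, x_default):
    """Build the set of alternate tags that every listed variant should carry."""
    tags = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in sorted(market_urls.items())
    ]
    # x-default names the fallback page for users who match no listed market.
    tags.append(
        f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}" />'
    )
    return tags


if __name__ == "__main__":
    print("\n".join(hreflang_tags(MARKET_URLS, X_DEFAULT)))
```

The same full set of tags belongs on every variant, including a self-reference, so that the annotations are reciprocal.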

2. Market Aggregation Bias

During training, LLMs learn from corpus distributions that heavily favor English content. When related entities appear across markets (‘GlobalChem Mexico,’ ‘GlobalChem Japan’), the model’s representations are dominated by whichever instance has the most training examples, typically the English global brand. This creates an authority imbalance that persists during inference, causing the model to default to global content even for market-specific queries.

3. Canonical Amplification

Search engines naturally try to consolidate near-identical pages, and hreflang exists to counter that bias by telling them that similar versions are valid alternatives for different markets. When AI systems retrieve from these consolidated indexes, they inherit this hierarchy, treating the canonical version as the primary source of truth. Without explicit geographic signals in the content itself, regional pages become invisible to the synthesis layer, even when they are adequately tagged with hreflang.

This amplifies market-aggregation bias; your regional pages aren’t just overshadowed, they’re conceptually absorbed into the parent entity.

Will This Problem Self-Correct?

As LLMs incorporate more diverse training data, some geographic imbalances may diminish. However, structural issues like canonical consolidation and the network effects of English-language authority will persist. Even with perfect training data distribution, your brand’s internal hierarchy and content depth differences across markets will continue to influence which version dominates in synthesis.

The Ripple Effect On Local Search

Global Answers, Local Users

Procurement teams in Mexico or Japan receive AI-generated answers derived from English pages. The contact info, certifications, and shipping policies are wrong, even if localized pages exist.

Local Authority, Global Overshadowing

Even strong local competitors are being displaced because models weigh the English/global corpus more heavily. The result: the local authority doesn’t register.

Brand Trust Erosion

Users perceive this as neglect:

“They don’t serve our market.”

“Their information isn’t relevant here.”

In regulated or B2B industries where compliance, units, and standards matter, this results in lost revenue and reputational risk.

Hreflang In The Age of AI

Hreflang was a precision instrument in a rules-based world. It told Google which page to serve in which market. But AI engines don’t “serve pages” – they generate responses.

That means:

- Hreflang becomes advisory, not authoritative.

- Current evidence suggests LLMs don’t actively interpret hreflang during synthesis because it doesn’t apply to the document-level relationships they use for reasoning.

- If your canonical structure points to global pages, the model inherits that hierarchy, not your hreflang instructions.

In short, hreflang still helps Google indexing, but it no longer governs interpretation.

AI systems learn from patterns of connectivity, authority, and relevance. If your global content has richer interlinking, higher engagement, and more external citations, it will always dominate the synthesis layer – regardless of hreflang.

How Geo Drift Happens

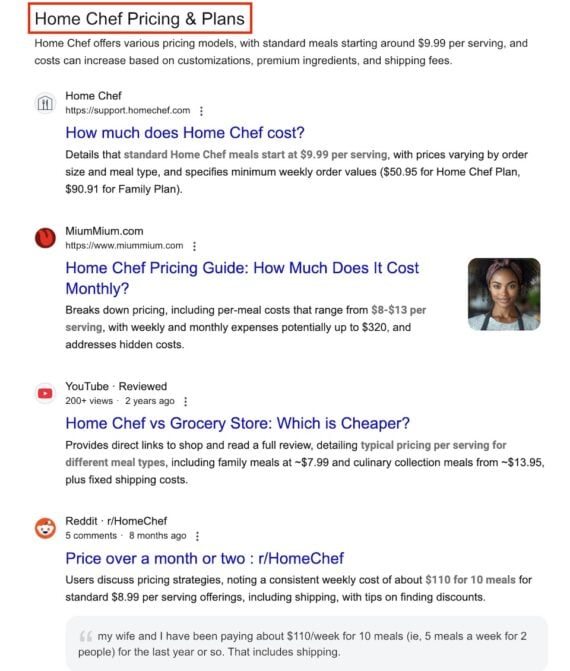

Let’s look at a real-world pattern observed across markets:

- Weak local content (thin copy, missing schema, outdated catalog).

- Global canonical consolidates authority under .com.

- AI overview or chatbot pulls the English page as source data.

- The model generates a synthetic answer in the user’s language, drawing facts and context from the English source and adding a few local brand names to create the appearance of localization.

- User clicks through to a U.S. contact form, gets blocked by shipping restrictions, and leaves frustrated.

Each of these steps seems minor, but together they create a digital sovereignty problem – global data has overwritten your local market’s representation.

Geo-Legibility: The New SEO Imperative

In the era of generative search, the challenge isn’t just to rank in each market – it’s to make your presence geo-legible to machines.

Geo-legibility builds on international SEO fundamentals but addresses a new challenge: making geographic boundaries interpretable during AI synthesis, not just during traditional retrieval and ranking. While hreflang tells Google which page to serve in which market, geo-legibility ensures the content itself contains explicit, machine-readable signals that survive the transition from structured index to generative response.

That means encoding geography, compliance, and market boundaries in ways LLMs can understand during both indexing and synthesis.

Key Layers Of Geo-Legibility

| Layer | Example Action | Why It Matters |
| --- | --- | --- |
| Content | Include explicit market context (e.g., “Distribuimos en México bajo norma NOM-018-STPS”) | Reinforces relevance to a defined geography. |
| Structure | Use schema for areaServed, priceCurrency, and addressLocality | Provides explicit geographic context that may influence retrieval systems and helps future-proof as AI systems evolve to better understand structured data. |
| Links & Mentions | Secure backlinks from local directories and trade associations | Builds local authority and entity clustering. |
| Data Consistency | Align address, phone, and organization names across all sources | Prevents entity merging and confusion. |
| Governance | Monitor AI outputs for misattribution or cross-market drift | Detects early leakage before it becomes entrenched. |

Note: While current evidence for schema’s direct impact on AI synthesis is limited, these properties strengthen traditional search signals and position content for future AI systems that may parse structured data more systematically.
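To make the Structure layer concrete, here is a hedged sketch that builds a JSON-LD block carrying `areaServed`, `priceCurrency`, and `addressLocality` for one local entity. The company name reuses the article’s illustrative “GlobalChem Mexico”; the URL, city, and phone number are invented placeholders, and the choice of `Organization` with a nested `Offer` is one reasonable modeling, not the only valid one.

```python
import json


def local_entity_jsonld(name, url, locality, country_code, currency, phone):
    """Return a schema.org Organization dict with explicit market signals."""
    area = {"@type": "Country", "name": country_code}
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "telephone": phone,
        "address": {
            "@type": "PostalAddress",
            "addressLocality": locality,
            "addressCountry": country_code,
        },
        "areaServed": area,
        # priceCurrency lives on Offer, so the market currency is attached there.
        "makesOffer": {
            "@type": "Offer",
            "priceCurrency": currency,
            "areaServed": area,
        },
    }


def to_script_tag(data):
    """Serialize the dict as an embeddable JSON-LD script element."""
    body = json.dumps(data, ensure_ascii=False, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


if __name__ == "__main__":
    # All values below are placeholders for illustration only.
    entity = local_entity_jsonld(
        name="GlobalChem Mexico",
        url="https://www.example.com/mx/",
        locality="Monterrey",
        country_code="MX",
        currency="MXN",
        phone="+52 81 5555 0100",
    )
    print(to_script_tag(entity))
```

Generating the markup from one function per market also supports the Data Consistency layer, since name, address, and phone come from a single source.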

Geo-legibility isn’t about speaking the right language; it’s about being understood in the right place.

Diagnostic Workflow: “Where Did My Market Go?”

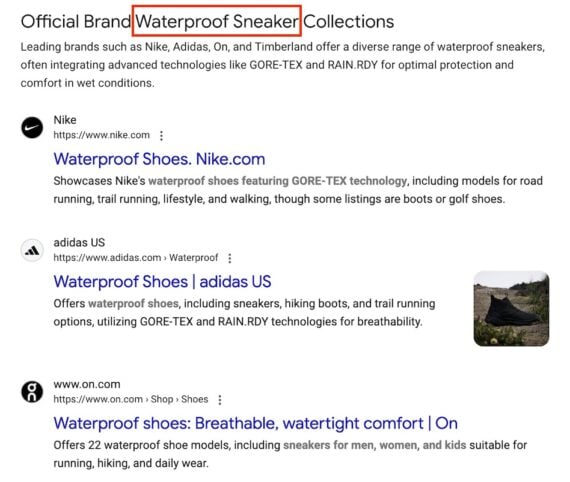

- Run Local Queries in AI Overview or Chat Search. Test your core product and category terms in the local language and record which language, domain, and market each result reflects.

- Capture Cited URLs and Market Indicators. If you see English pages cited for non-English queries, that’s a signal your local content lacks authority or visibility.

- Cross-Check Search Console Coverage. Confirm that your local URLs are indexed, discoverable, and mapped correctly through hreflang.

- Inspect Canonical Hierarchies. Ensure your regional URLs aren’t canonicalized to global pages. AI systems often treat canonical as “primary truth.”

- Test Structured Geography. For Google and Bing, be sure to add or validate schema properties like areaServed, address, and priceCurrency to help engines map jurisdictional relevance.

- Repeat Quarterly. AI search evolves rapidly. Regular testing ensures your geo boundaries remain stable as models retrain.
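The canonical inspection step in the workflow above can be partly automated. The sketch below parses a page’s HTML with the standard library and reports whether its `rel="canonical"` points somewhere other than the page itself. It works on HTML you have already fetched; the URLs and markup are made-up examples, and the normalization (host plus path, ignoring a trailing slash) is a simplifying assumption.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class CanonicalFinder(HTMLParser):
    """Collect the href of the first <link rel="canonical"> in a page."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attr = dict(attrs)
        rels = (attr.get("rel") or "").lower().split()
        if "canonical" in rels and attr.get("href"):
            self.canonical = attr["href"]


def _normalize(url):
    """Compare URLs by host and path only, ignoring a trailing slash."""
    parts = urlsplit(url)
    return (parts.netloc.lower(), parts.path.rstrip("/") or "/")


def canonical_drift(page_url, html):
    """Return (canonical_url, drifted).

    drifted is True when the page declares a canonical that differs
    from its own URL, e.g. a regional page pointing at a global one.
    """
    finder = CanonicalFinder()
    finder.feed(html)
    if finder.canonical is None:
        return None, False
    canonical = urljoin(page_url, finder.canonical)
    return canonical, _normalize(canonical) != _normalize(page_url)


if __name__ == "__main__":
    # Hypothetical regional page whose canonical points at the global page.
    sample = (
        '<html><head><link rel="canonical" '
        'href="https://www.example.com/products/solvents/"></head></html>'
    )
    print(canonical_drift("https://www.example.com/mx/productos/solventes/", sample))
```

A `drifted` result is only a prompt for review: some cross-URL canonicals are intentional, so each flagged page still needs a human decision.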

Remediation Workflow: From Drift To Differentiation

| Step | Focus | Impact |
| --- | --- | --- |
| 1 | Strengthen local data signals (structured geography, certification markup). | Clarifies market authority |
| 2 | Build localized case studies, regulatory references, and testimonials. | Anchors E-E-A-T locally |
| 3 | Optimize internal linking from regional subdomains to local entities. | Reinforces market identity |
| 4 | Secure regional backlinks from industry bodies. | Adds non-linguistic trust |
| 5 | Adjust canonical logic to favor local markets. | Prevents AI inheritance of global defaults |
| 6 | Conduct “AI visibility audits” alongside traditional SEO reports. | |

Beyond Hreflang: A New Model Of Market Governance

Executives need to see this for what it is: not an SEO bug, but a strategic governance gap.

AI search collapses boundaries between brand, market, and language. Without deliberate reinforcement, your local entities become shadows inside global knowledge graphs.

That loss of differentiation affects:

- Revenue: You become invisible in the markets where growth depends on discoverability.

- Compliance: Users act on information intended for another jurisdiction.

- Equity: Your local authority and link capital are absorbed by the global brand, distorting measurement and accountability.

Why Executives Must Pay Attention

The implications of AI-driven geo drift extend far beyond marketing. When your brand’s digital footprint no longer aligns with its operational reality, it creates measurable business risk. A misrouted customer in the wrong market isn’t just a lost lead; it’s a symptom of organizational misalignment between marketing, IT, compliance, and regional leadership.

Executives must ensure their digital infrastructure reflects how the company actually operates, which markets it serves, which standards it adheres to, and which entities own accountability for performance. Aligning these systems is not optional; it’s the only way to minimize negative impact as AI platforms redefine how brands are recognized, attributed, and trusted globally.

Executive Imperatives

- Reevaluate Canonical Strategy. What once improved efficiency may now reduce market visibility. Treat canonicals as control levers, not conveniences.

- Expand SEO Governance to AI Search Governance. Traditional hreflang audits must evolve into cross-market AI visibility reviews that track how generative engines interpret your entity graph.

- Reinvest in Local Authority. Encourage regional teams to create content with market-first intent – not translated copies of global pages.

- Measure Visibility Differently. Rankings alone no longer indicate presence: track citations, sources, and language of origin in AI search outputs.
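One possible way to track citations by market, sketched under loose assumptions: record which of your URLs an AI answer cites for each local query, then compute the share that actually belongs to the market being queried. The host and path mappings below are hypothetical, and the observations would come from manual or tool-assisted logging of AI search outputs, which this snippet does not perform.

```python
from collections import Counter
from urllib.parse import urlsplit

# Hypothetical URL-to-market rules for a single brand.
HOST_MARKETS = {"www.example.mx": "MX", "www.example.co.jp": "JP"}
PATH_MARKETS = {"mx": "MX", "jp": "JP", "us": "US"}
DEFAULT_MARKET = "GLOBAL"


def market_of(url):
    """Infer a URL's market from its ccTLD host or first path segment."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host in HOST_MARKETS:
        return HOST_MARKETS[host]
    first_segment = parts.path.strip("/").split("/")[0].lower()
    return PATH_MARKETS.get(first_segment, DEFAULT_MARKET)


def local_citation_share(observations):
    """Return {market: fraction of cited URLs that match the query market}.

    observations is an iterable of (query_market, [cited_urls]) pairs.
    """
    total = Counter()
    local = Counter()
    for query_market, urls in observations:
        for url in urls:
            total[query_market] += 1
            if market_of(url) == query_market:
                local[query_market] += 1
    return {market: local[market] / total[market] for market in total}


if __name__ == "__main__":
    # Invented log entries for illustration only.
    logged = [
        ("MX", ["https://www.example.com/mx/solventes/",
                "https://www.example.com/products/solvents/"]),
        ("MX", ["https://www.example.com/us/solvents/"]),
        ("JP", ["https://www.example.co.jp/products/"]),
    ]
    for market, share in sorted(local_citation_share(logged).items()):
        print(f"{market}: {share:.0%} of citations are local")
```

A falling local share for a market over successive audits would be one signal of the cross-market drift described earlier.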

Final Thought

AI didn’t make geography irrelevant; it just exposed how fragile our digital maps were.

Hreflang, ccTLDs, and translation workflows gave companies the illusion of control.

AI search removed the guardrails, and now the strongest signals win – regardless of borders.

The next evolution of international SEO isn’t about tagging and translating more pages. It’s about governing your digital borders and making sure every market you serve remains visible, distinct, and correctly represented in the age of synthesis.

Because when AI redraws the map, the brands that stay findable aren’t the ones that translate best; they’re the ones who define where they belong.

Featured Image: Roman Samborskyi/Shutterstock