5 Ways Emerging Businesses Can Show up in ChatGPT, Gemini & Perplexity

This post was sponsored by No Fluff. The opinions expressed in this article are the sponsor’s own.

When ChatGPT, Gemini, and Perplexity mention a company, these large language models (LLMs) are deciding whether that business is safe to reference, not how long it has existed.

Most business leaders assume one thing when they don’t show up in AI-generated answers:

We’re too new.

In reality, early testing across multiple AI platforms suggests something else is going on. In many cases, the problem has less to do with company age and more to do with how AI systems evaluate structure, repetition, and trust signals.

It is possible for new brands to be mentioned in AI search results.

Even well-built products with real expertise are routinely missing from AI recommendations. Yet when buyers ask who to trust, the same legacy names keep appearing.

Why Most New Businesses Don’t Show Up In AI Search Results

This isn’t random.

AI systems lean on existing training data and visible digital footprints, which favor brands that have been cited for years. Because every answer carries risk, these systems act conservatively.

They don’t look for the most optimized page; they look for the most verifiable entity. If your footprint is thin, inconsistent, or poorly supported by third parties, the AI will often swap you out for a competitor it can trust more easily.

Most new businesses launch with:

- Minimal historical signals: Very little online content or mentions, so AI has almost nothing to work with.

- Few credibility signals: Few backlinks, reviews, or press, so you don’t “look” trustworthy yet.

- Blending brand names: Similar or generic brand names are easier for AI systems to confuse, misattribute, or skip entirely if trust signals are weak.

- Unclear positioning: Unclear positioning or ideas that appear only once on a company website are less likely to be trusted.

Together, these create unreliable signals.

In generative search, visibility is less about ranking and more about reasoning.

This is why most new brands aren’t evaluated as “bad,” but as too uncertain to reference safely.

That distinction matters. Being referenced by AI is not just exposure; it influences who buyers consider credible before they ever reach a website. AI-referred visitors often convert at higher rates than traditional organic traffic.

For new businesses, the lack of legacy signals isn’t “just a disadvantage.” Handled correctly, it can be an opening to establish clarity and trust faster than older competitors that rely on outdated authority.

Proof That New Businesses Can Show Up In AI Search [The Experiment]

There’s surprisingly little guidance on whether a new or growing brand can actually appear in AI-generated answers. Given how much these systems depend on past signals, it’s easy to assume established companies appear by default.

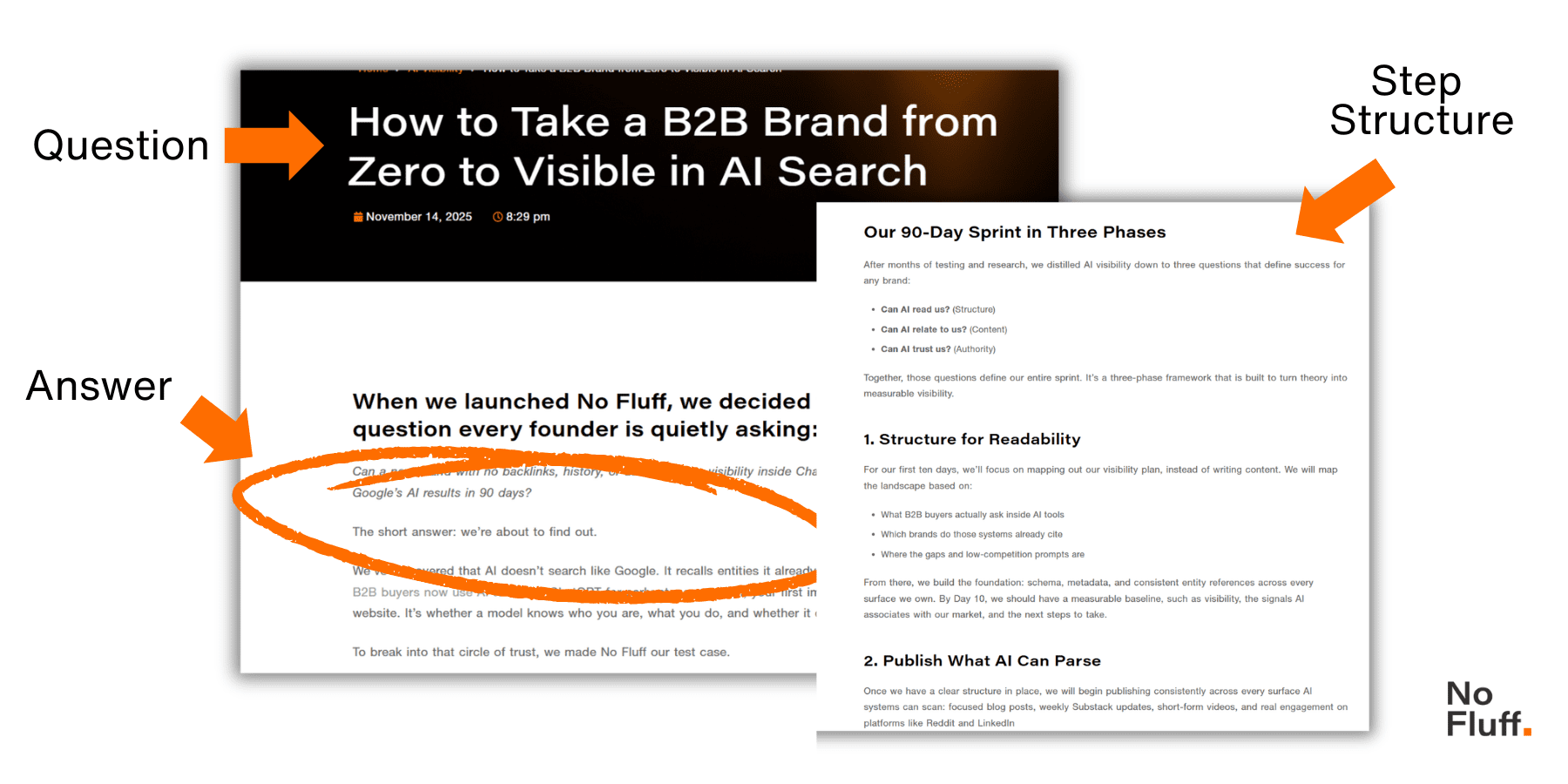

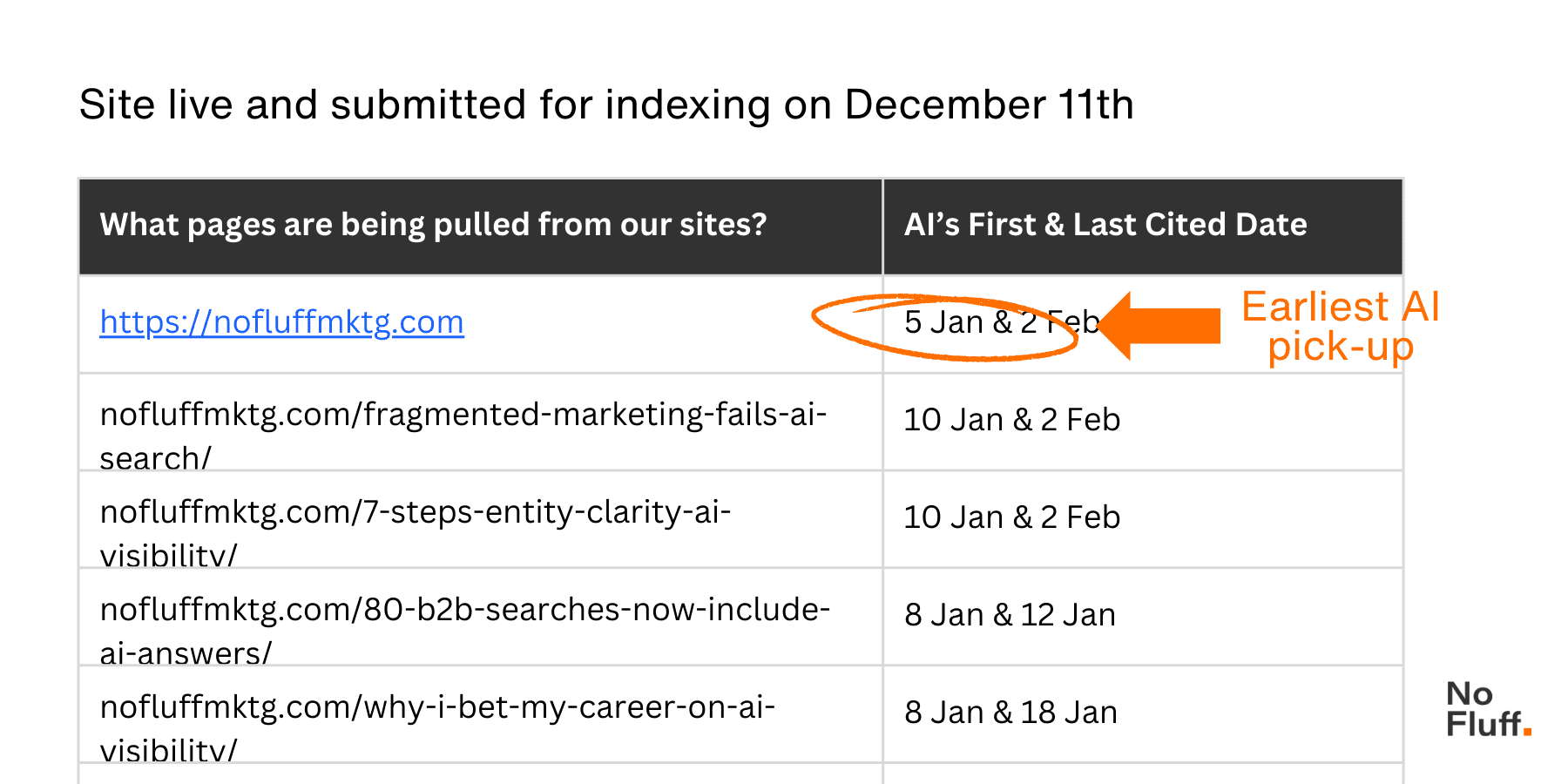

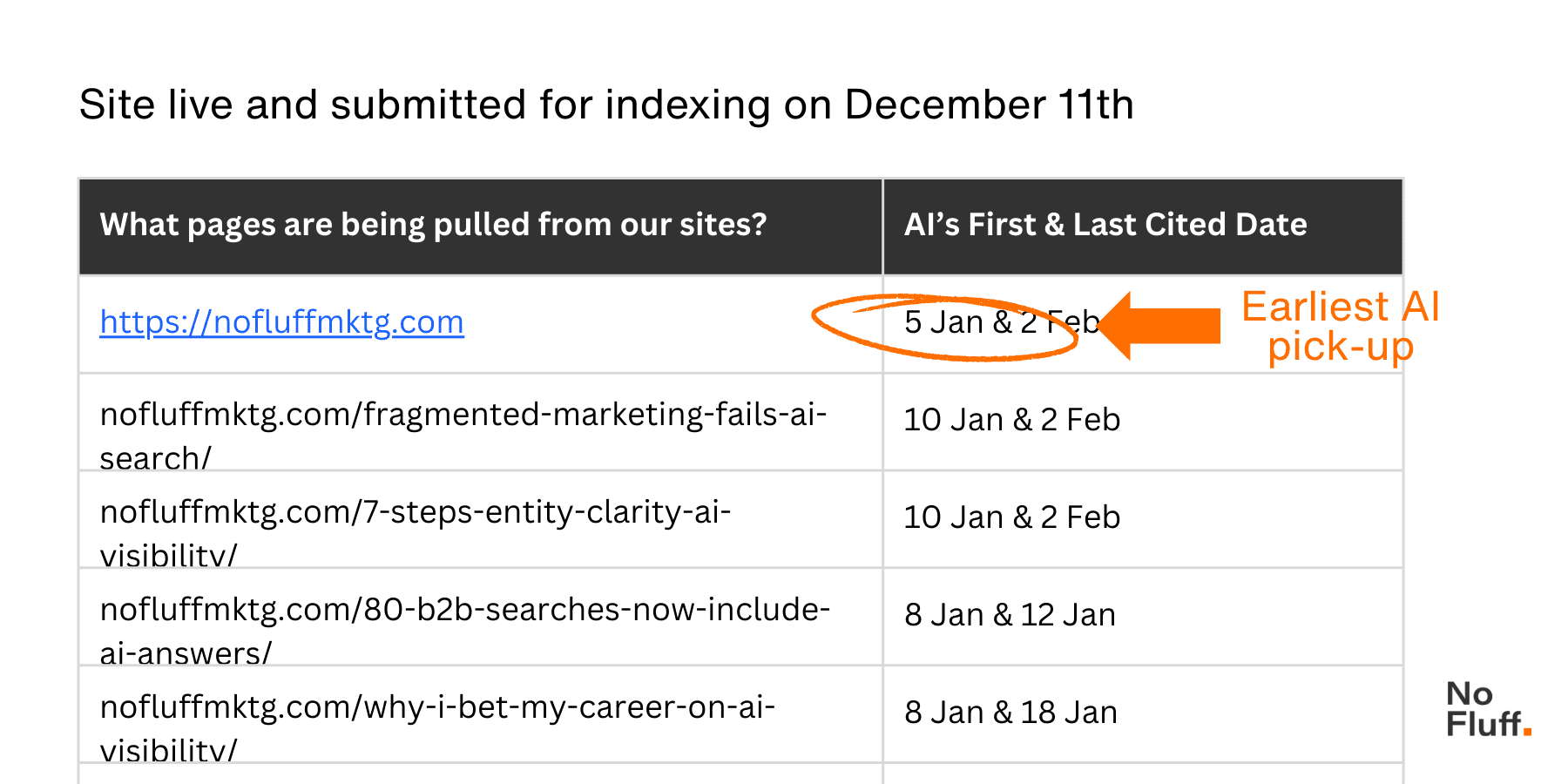

To test that assumption, a brand-new B2B company was tracked from launch as part of a 12-week AI search visibility experiment. The findings below reflect the first six weeks of that ongoing test. The company started with no prior history, no backlinks, and no press coverage. A true zero.

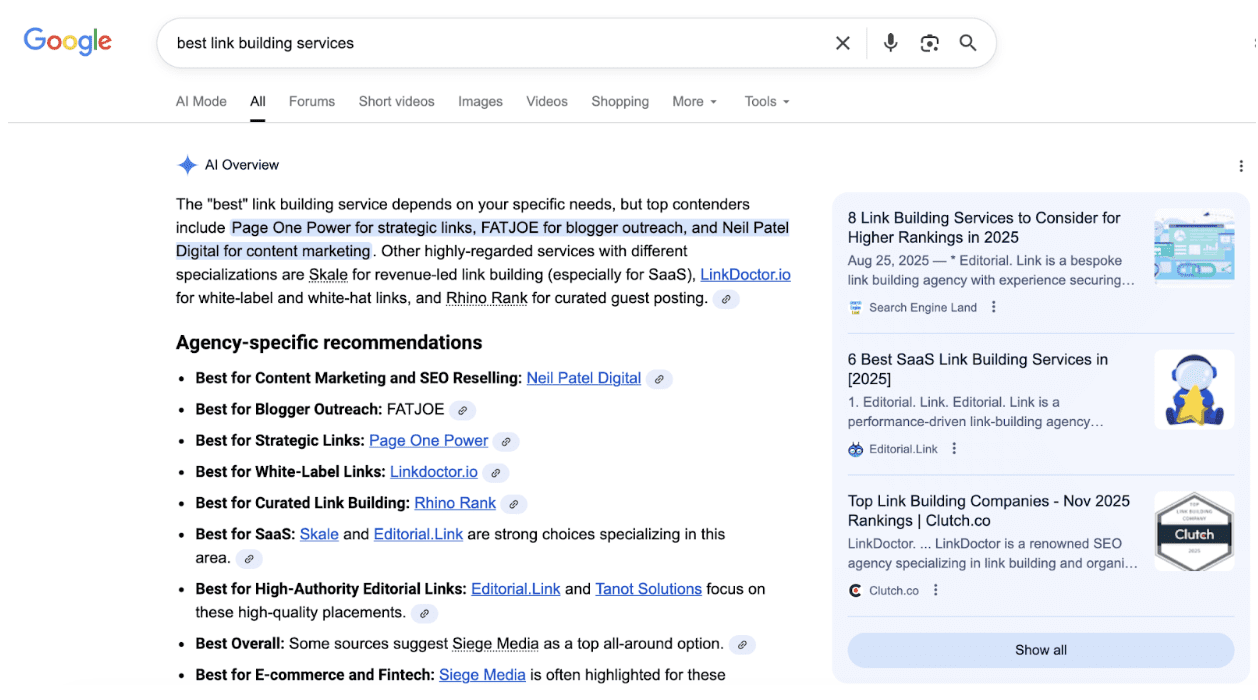

Visibility was measured across 150 buyer-style prompts in ChatGPT, Google AI Overviews, and Perplexity rather than inferred from third-party dashboards.

Using weekly GEO sprints focused on technical foundations, answer-first content, and reinforcing signals like social, video, and early backlinks, the goal was to see how far a best-practice GEO playbook could move a truly new brand.

Within six weeks, the emerging business saw the following results:

- Appeared in 5% of relevant AI responses.

- Showed up across 39 of 150 questions.

- Mentioned 74 times, with 42 cited mentions.

- 6% citation accuracy, ~11% pointing to the brand’s own site.
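A minimal sketch of how figures like these could be tallied from a log of prompt runs. The article does not define how each number was calculated, so the record fields and metric definitions below (`prompt_coverage`, `cited_share`, `own_site_share`) are assumptions for illustration, not the experiment's actual method.

```python
from dataclasses import dataclass


@dataclass
class Answer:
    prompt_id: int           # which benchmark prompt produced this answer
    mentions: int            # times the brand was named in the answer
    cited_mentions: int      # mentions backed by a linked source
    own_site_citations: int  # citations pointing to the brand's own domain


def summarize(answers, total_prompts):
    """Aggregate per-answer counts into simple visibility ratios (assumed definitions)."""
    prompts_with_mention = {a.prompt_id for a in answers if a.mentions > 0}
    mentions = sum(a.mentions for a in answers)
    cited = sum(a.cited_mentions for a in answers)
    own = sum(a.own_site_citations for a in answers)
    return {
        "prompt_coverage": len(prompts_with_mention) / total_prompts,
        "mentions": mentions,
        "cited_share": cited / mentions if mentions else 0.0,
        "own_site_share": own / cited if cited else 0.0,
    }
```

Keeping the raw counts alongside the ratios makes it easier to compare week over week, since a ratio can move simply because the denominator changed.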

6 Patterns Observed in Early AI Visibility Testing

Across the first six weeks, six patterns consistently influenced whether the brand was included, replaced by a competitor, or excluded entirely from AI-generated answers:

Pattern 1: Structure Matters More Than Topic

Content that wandered (even if it was thoughtful or “robust”) consistently lagged in AI pickup. The pages that were picked up were tighter: they answered the question up front, broke the content into clear steps, and stuck to one idea at a time.

Pattern 2: The Social “Amplifier” Effect

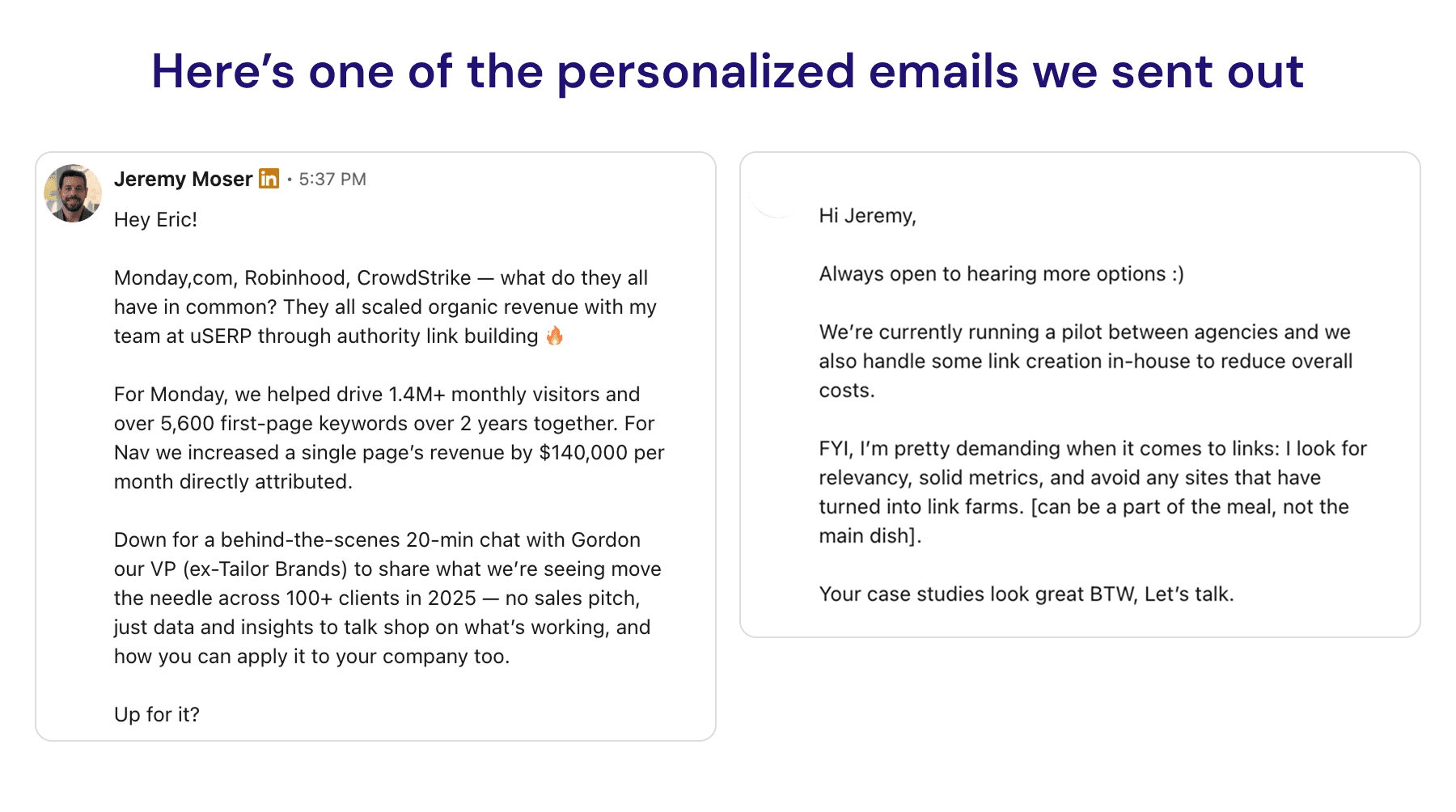

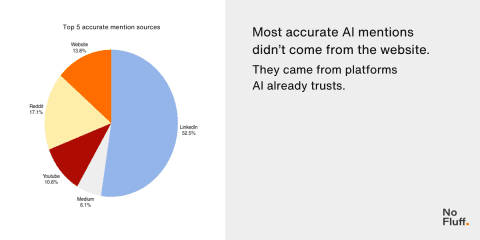

AI is more likely to cite sources it already trusts. In the first two weeks, most citations came from the brand’s LinkedIn and Medium posts rather than its website. For a new brand, publishing key ideas first on high-authority platforms such as LinkedIn or Medium often triggers AI pickup before the same content is indexed on your own website.

Pattern 3: Hallucinations are Often Signal Failures

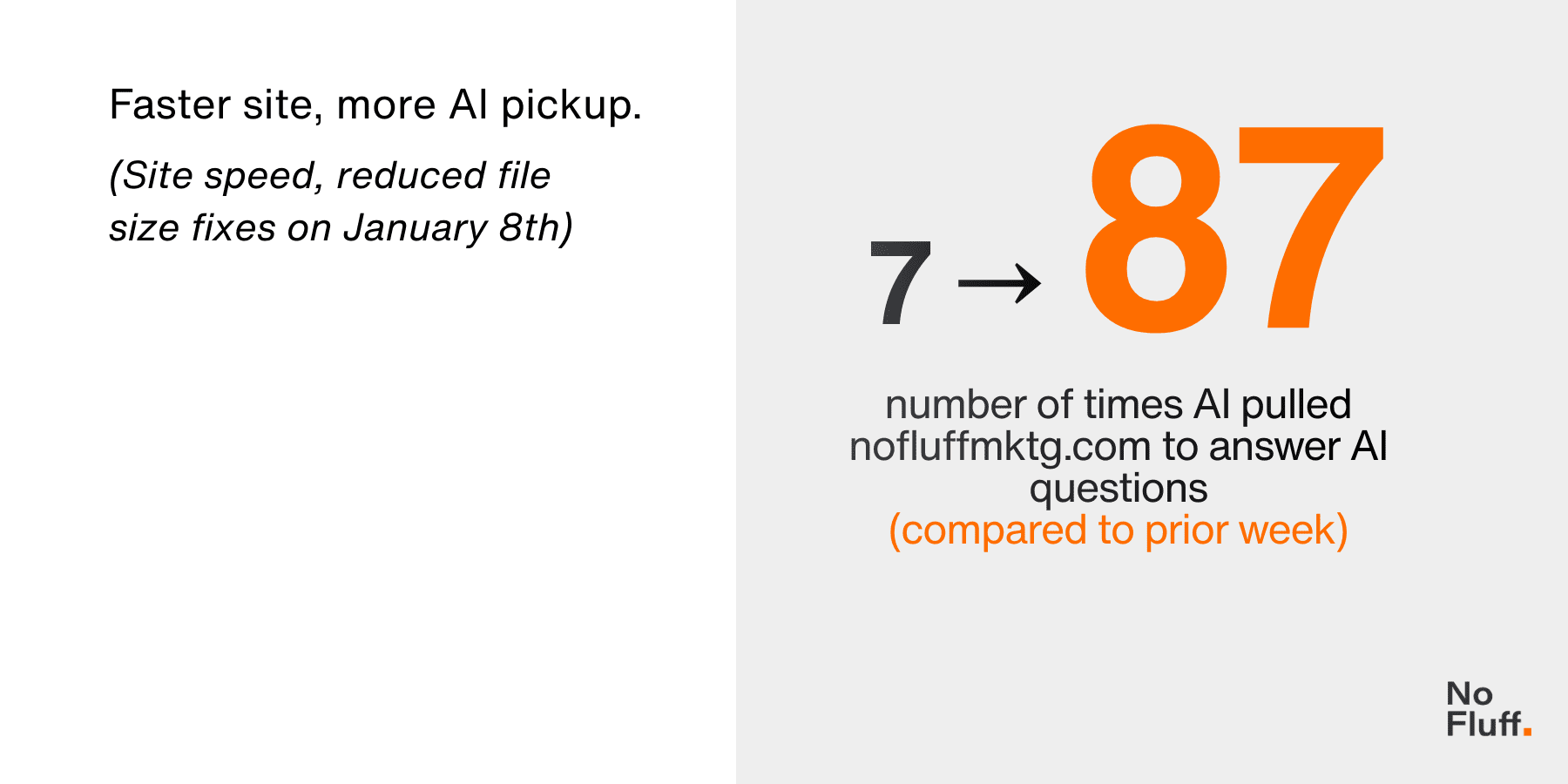

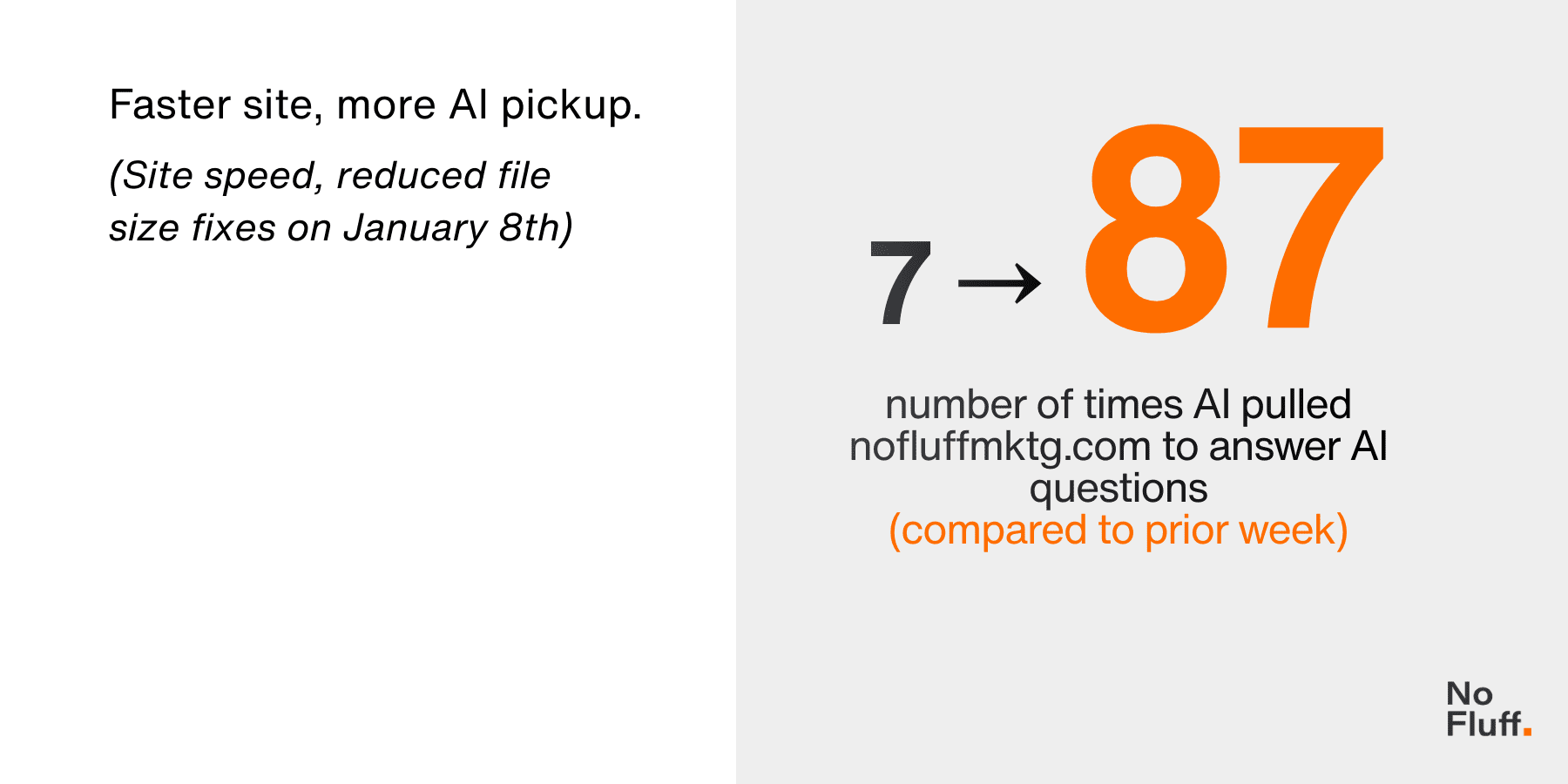

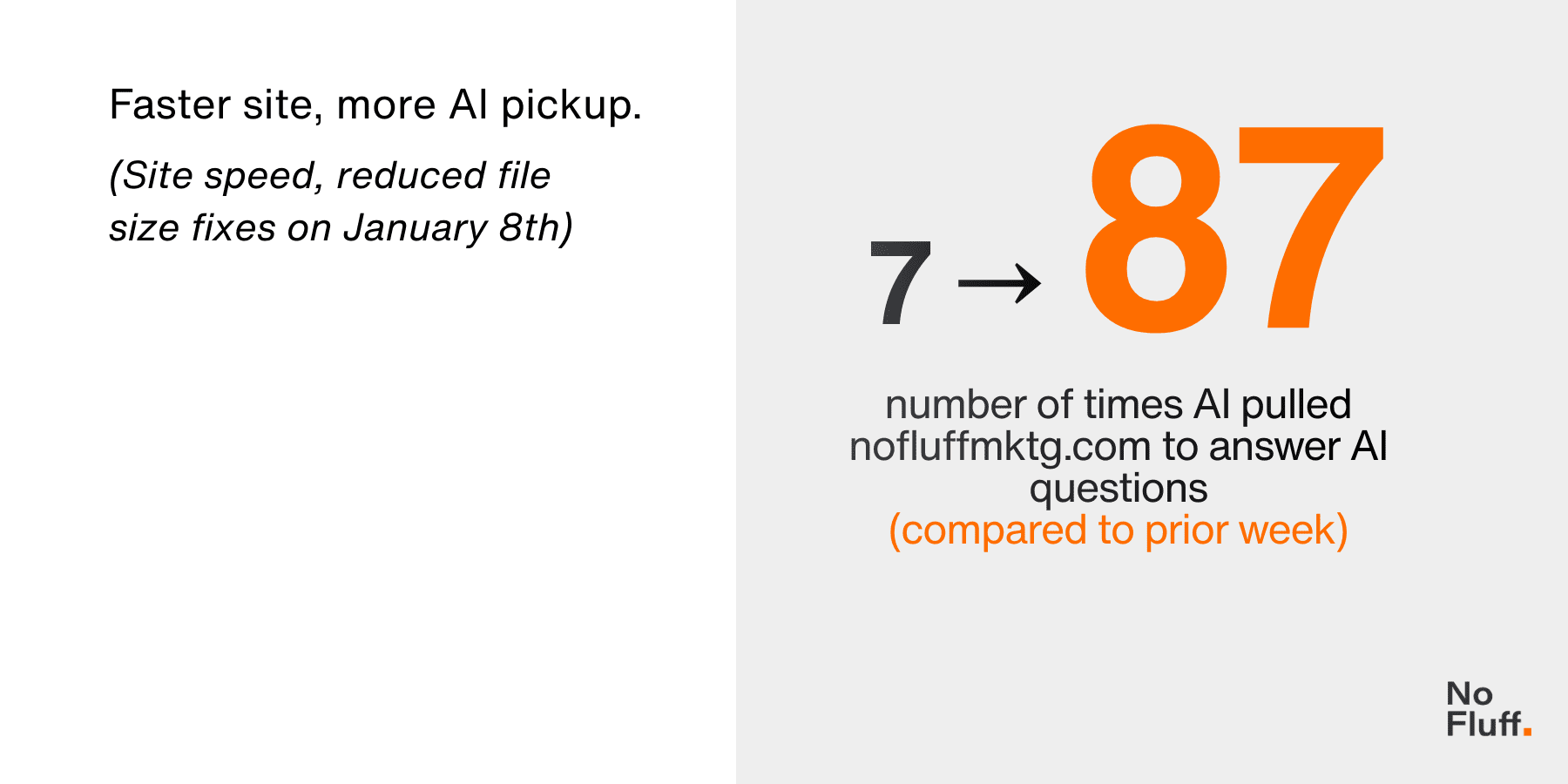

When AI systems misidentify a new brand or confuse it with competitors, the cause is typically thin, slow, or conflicting signals. In this experiment, when pages failed to load within roughly 5–15 seconds, AI systems issued broader “fan-out” queries and assembled answers from adjacent or incorrect sources. Following improvements in site speed, crawl reliability, and entity clarity, the share of answers that correctly referenced this company’s own domain increased, while misattributed mentions declined.

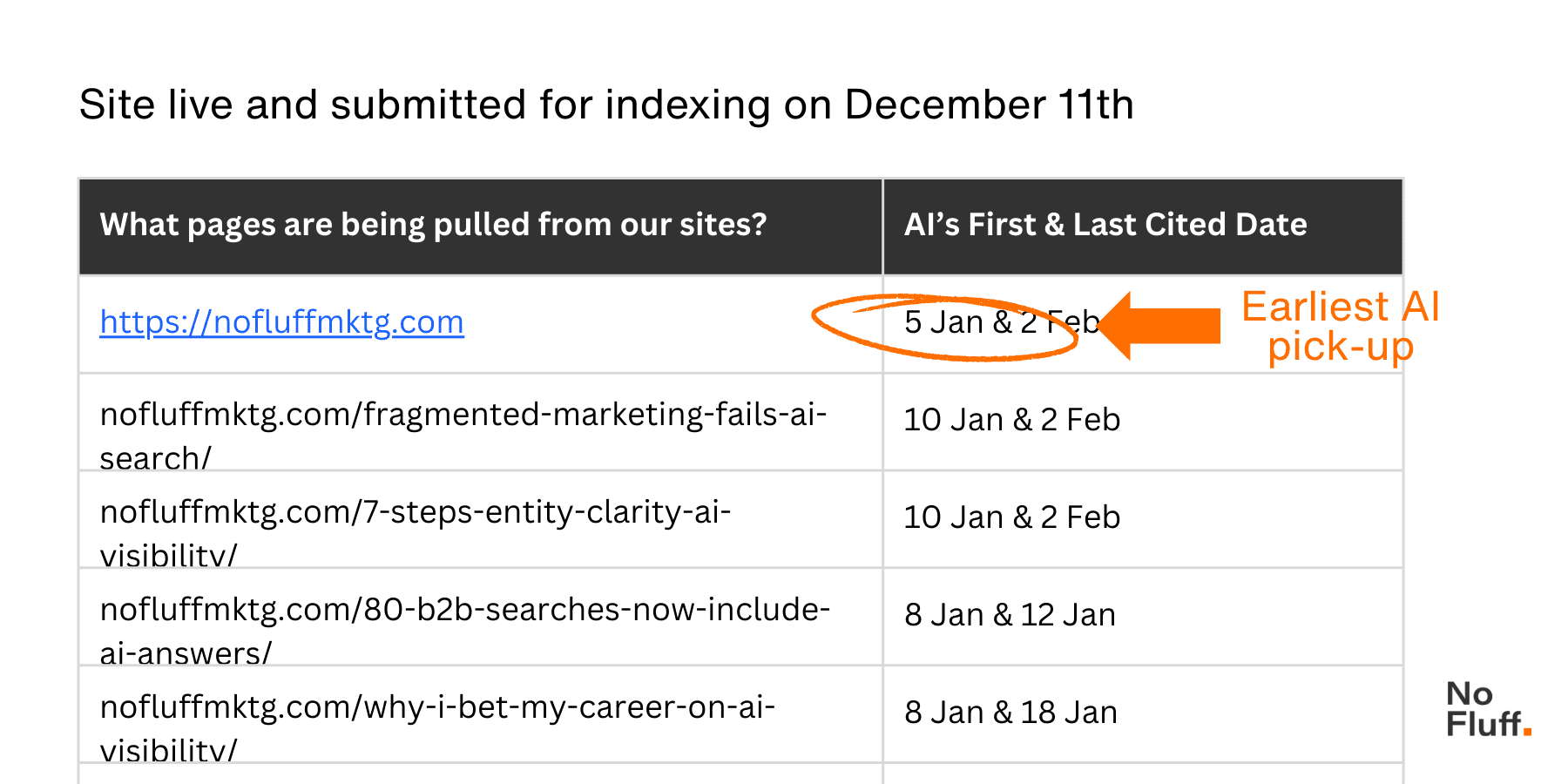

Pattern 4: The 3-Week Indexing Window

The first AI pickup from a new domain can happen within three to four weeks. In this experiment, the first page was discovered on day 27. After that initial discovery, subsequent pages were picked up faster, with the shortest lag around eight days.

Early inclusion wasn’t driven by content volume. It was driven by structure: a solid schema, consistent metadata, a clean, crawlable site, and machine-readable files such as llms.txt.

Pattern 5: Win the Explanatory Round First

New brands typically will not start by winning highly competitive, decision-stage prompts like “best” or “top” lists, unless the offering is truly unique or non-competitive. Before a brand can realistically be shortlisted, it must first be sourced as a primary authority for definitional or educational questions.

In the first 45 days, the goal wasn’t comparison visibility, but recognition and trust: getting AI systems to associate the brand with the right topics and sources. Early success is best measured by citation frequency, or how often a brand is used as the primary source for a given topic.

Pattern 6: Solve the Unfinished Trust Gap (Most Important)

Even with a well-structured site and strong content, brands struggle to get recommended without outside validation. The initial stages of this experiment showed AI answers defaulted to familiar domains and replaced newer brands with competitors that had clearer third-party mentions. This validates the importance of press and authoritative coverage early on. Waiting to “add it later” only slows trust.

5 Steps To Set A New Business Up For AI Visibility Success

By now, the takeaway is clear: AI visibility doesn’t happen automatically once a site is live or a few campaigns are running. The good news is that this can be influenced deliberately. The steps below reflect the sequence that consistently moved a new brand from zero visibility to being cited in AI-generated answers. Rather than treating AI visibility as a side effect of SEO, this approach treats it as an operational problem: how to make a brand easy for AI systems to recognize, verify, and reuse.

Step 1: Map Your Brand Entity

Before building a site, you must define your brand in a way machines understand. ChatGPT, Gemini, and Perplexity don’t read your website the way humans do. They connect facts, names, and relationships into entities that define who you are. If those connections are missing or inconsistent, your brand simply won’t appear (no matter how much content you publish).

- Define your business clearly using semantic triples: Use the [Subject] → [Predicate] → [Object] format (e.g., “Brand X” → “offers” → “Service Y”) to provide machine-readable facts.

- Stick to public, widely understood language: Pull terminology from widely accepted sources like Wikipedia or Wikidata. If you describe your product using internal jargon that doesn’t match how the category is commonly defined, you risk being misclassified or overlooked.

- State your authority: Define why your brand deserves trust. What facts, evidence, and proof back you up? Write 3–5 simple, factual claims you want to be known for.

- Define your competitive counter-position: Be clear about what makes you different. Scope the specific niche you own (audience, problem, angle, or offering) that sets you apart from alternatives.
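The semantic-triple idea above can be sketched as plain data. This is a hedged illustration only: apart from “Brand X offers Service Y”, every fact, the `Triple` type, and the notion of “single-valued” predicates are hypothetical names introduced for the example.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    subject: str
    predicate: str
    obj: str


def to_sentence(triple):
    """Render a triple as a short factual sentence for reuse across pages and profiles."""
    return f"{triple.subject} {triple.predicate} {triple.obj}."


def find_conflicts(triples, single_valued):
    """Flag predicates that should have one answer but appear with different objects."""
    seen = {}
    conflicts = []
    for t in triples:
        if t.predicate not in single_valued:
            continue
        key = (t.subject, t.predicate)
        if key in seen and seen[key] != t.obj:
            conflicts.append((key, seen[key], t.obj))
        seen.setdefault(key, t.obj)
    return conflicts


# Hypothetical brand facts; only the first one comes from the example in the text.
facts = [
    Triple("Brand X", "offers", "Service Y"),
    Triple("Brand X", "serves", "B2B software teams"),
    Triple("Brand X", "is headquartered in", "Austin"),
]
```

Writing the facts down once, in one place, gives you a reference list to check every page, profile, and press mention against.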

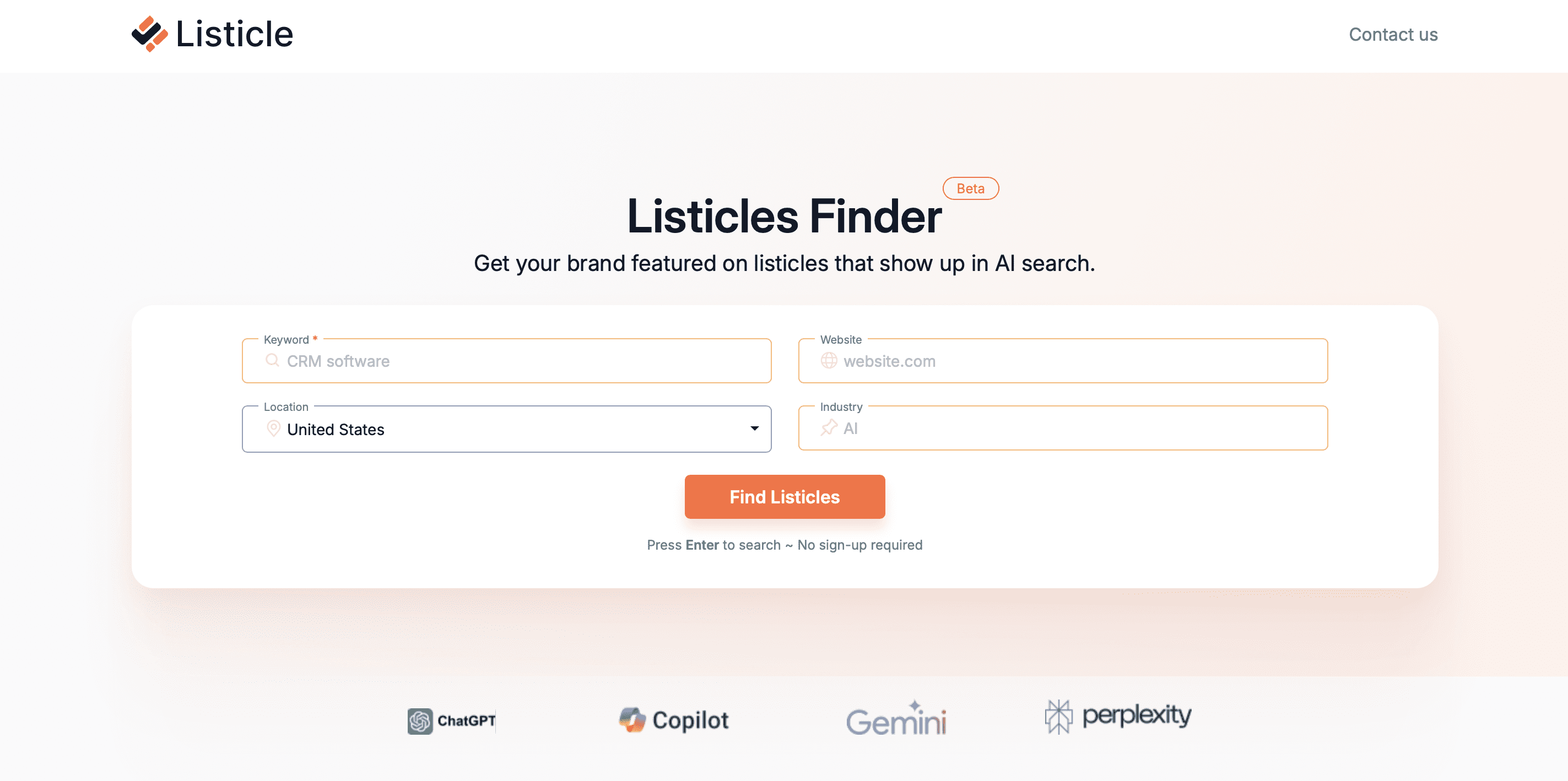

Step 2: Engineer Your Benchmark Prompt Set

Don’t rely on traditional SEO tools to track AI visibility, even those designed for it. Most rely on inferred data or simulations, not on real prompts.

- Map the competitive landscape: Identify which brands AI systems already reference, which buyer questions are realistically winnable, and where category language creates confusion.

- Reverse-engineer buyer questions: Identify how buyers phrase real questions using keyword and competitor analysis (SEO tool data, People Also Ask, Google SERPs, and the AI engines themselves).

- Lock your data set: Create a fixed set of 150 buyer-authentic questions across clusters such as Branded, Category, Problem, Comparison, and Advanced Semantic.

- Start testing: Run these prompts weekly across ChatGPT, Gemini, and Perplexity to track your mentions and citation growth.
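A rough sketch of what a weekly run over a locked prompt set might look like. The `ask` callable is a placeholder you would supply yourself (for example, a wrapper around whichever AI engine you are testing); nothing here calls a real API, and the simple name-matching rule is an assumption that will miss misspellings and abbreviations.

```python
import re
from collections import defaultdict

# Cluster names taken from the list above.
CLUSTERS = ("Branded", "Category", "Problem", "Comparison", "Advanced Semantic")


def mentions_brand(answer, brand):
    """True if the brand name appears as a whole phrase, ignoring case."""
    pattern = rf"\b{re.escape(brand)}\b"
    return re.search(pattern, answer, re.IGNORECASE) is not None


def run_benchmark(prompts, brand, ask):
    """Return the share of prompts per cluster whose answer mentions the brand.

    prompts: list of (cluster, question) pairs, kept fixed from week to week.
    ask:     hypothetical callable that takes a question and returns answer text.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for cluster, question in prompts:
        totals[cluster] += 1
        if mentions_brand(ask(question), brand):
            hits[cluster] += 1
    return {cluster: hits[cluster] / totals[cluster] for cluster in totals}
```

Because the prompt list never changes, week-over-week differences in the returned rates reflect changes in the answers rather than changes in the questions.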

Step 3: Make the Brand Machine-Readable

Make your site machine-readable to ensure AI bots don’t skip your content. AI systems don’t care about your website’s aesthetic; they care about how easily they can parse your data. If your technical signals are thin or conflicting, AI may hallucinate details or replace your brand with a competitor.

- Implement JSON-LD Schema: Use Organization, Service, and FAQ schemas to tell AI exactly who you are and what you do.

- Deploy an llms.txt File: Place this at your domain root to provide a plain-text guide for AI crawlers, telling them how to describe your company and which pages to prioritize.

- Eliminate crawling issues: Make sure your site is fully crawlable via robots.txt and that no content is hidden in gated PDFs or images. Most importantly, check site speed using PageSpeed Insights. Models don’t patiently wait for slow pages!
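The three checks above can be sketched in a few lines. This is an illustrative outline, not a complete implementation: the brand details are hypothetical, the llms.txt layout follows the commonly proposed convention (a title, a short summary, then a list of links), and the crawler names passed to `blocked_agents` are examples that should be confirmed against each vendor’s documentation.

```python
import json
from urllib.robotparser import RobotFileParser


def organization_jsonld(name, url, description, same_as):
    """Build schema.org Organization markup as a JSON-LD string."""
    return json.dumps(
        {
            "@context": "https://schema.org",
            "@type": "Organization",
            "name": name,
            "url": url,
            "description": description,
            "sameAs": same_as,  # profile URLs that describe the same entity
        },
        indent=2,
    )


def llms_txt(name, summary, pages):
    """Render a simple llms.txt body: title, summary, then prioritized pages."""
    lines = [f"# {name}", "", f"> {summary}", "", "## Key pages", ""]
    lines += [f"- [{title}]({link}): {note}" for title, link, note in pages]
    return "\n".join(lines) + "\n"


def blocked_agents(robots_txt, page_url, agents):
    """Return the user agents that the given robots.txt text blocks from page_url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [agent for agent in agents if not parser.can_fetch(agent, page_url)]
```

The JSON-LD string would typically be placed in a `<script type="application/ld+json">` tag in the page head, and the llms.txt output saved at the domain root.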

Step 4: Publish “Retrieval-Ready” Content

Write for the impatient analyst (the AI bot). Start with high-leverage prompts: questions with real buyer intent that AI already answers, but only from a small, weak set of sources. Those answers are easier to influence before trust fully locks in.

- Lead with the answer: Start every section with a direct, factual answer.

- Chunk semantically: Divide content into logical, independent sections that can be extracted and reused by AI without requiring the context of the entire page.

- Consider the freshness factor: AI favors content updated within the last 60–90 days. For high-competition sectors like SaaS or Finance, content should be refreshed every three months to remain a “trusted” recommendation.
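One way to audit a page against the points above, sketched under some loud assumptions: content is assumed to use `## ` section headings, the list of “dangling” openers is a crude heuristic invented for this example, and the 90-day threshold simply mirrors the figure quoted in the list.

```python
from datetime import date

# Openers that usually mean a section leans on earlier context (heuristic, not exhaustive).
DANGLING_OPENERS = ("this ", "it ", "they ", "these ", "as mentioned", "as above")


def split_sections(markdown):
    """Split text into (heading, body) pairs at lines starting with '## '."""
    sections = []
    heading, body = None, []
    for line in markdown.splitlines():
        if line.startswith("## "):
            if heading is not None:
                sections.append((heading, " ".join(body).strip()))
            heading, body = line[3:].strip(), []
        elif heading is not None and line.strip():
            body.append(line.strip())
    if heading is not None:
        sections.append((heading, " ".join(body).strip()))
    return sections


def needs_context(body):
    """True if a section opens with a reference to something outside itself."""
    return body.lower().startswith(DANGLING_OPENERS)


def is_stale(last_updated, today, max_age_days=90):
    """True if the page has not been updated within max_age_days."""
    return (today - last_updated).days > max_age_days
```

Sections flagged by `needs_context` are candidates for a rewrite that opens with the direct answer, so each one can be lifted out of the page and still make sense.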

Step 5: Earn External Validation

AI systems cross-check your site’s claims against the rest of the web.

- Claim directory profiles: Align your entity data across Crunchbase, G2, LinkedIn, and Yelp. Inconsistencies across these profiles are a primary cause of AI hallucinations.

- Target authoritative mentions: Secure mentions in industry-specific publications that AI engines consistently cite across your prompts and/or that have a strong domain rating.

- External reinforcement: For every important page on your site, aim for at least three intentional external link-backs from authoritative sources to trigger AI pickup.
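The profile-alignment step lends itself to a simple consistency check. The sketch below assumes you have already copied each profile’s fields into a dictionary by hand; the field names and sample values are hypothetical, and no directory API is being called.

```python
def normalize(value):
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(value.lower().split())


def find_inconsistencies(profiles):
    """Return fields whose normalized values differ between sources.

    profiles: {source_name: {field_name: value}}
    result:   {field_name: {normalized_value: [sources using it]}}
    """
    by_field = {}
    for source, fields in profiles.items():
        for field, value in fields.items():
            by_field.setdefault(field, {}).setdefault(normalize(value), []).append(source)
    return {field: values for field, values in by_field.items() if len(values) > 1}
```

For example, if one profile lists the category as “Marketing agency” and another as “SEO software”, the function returns that field with both values and the sources that use them, which gives a short list of profiles to correct.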

The Biggest Takeaway: Prioritize Authority as a Long-Term Game

For new brands, the limiting factor in AI search is not optimization. It’s authority.

AI systems are more likely to surface unfamiliar companies first in low-risk, explanatory answers, not in “best,” “top,” or comparison prompts. A clean site and solid SEO help a brand get recognized, but being recommended is a different hurdle.

In practice, early progress is about reducing uncertainty. When a brand consistently appears in third-party articles, reviews, or other independent sources, it becomes easier to explain and safer to reference. Without that outside validation, recommendations stall, no matter how strong the content or how fast the site loads.

This analysis covers the first phase of a live 90-day test examining how a new B2B brand earns visibility in AI-generated search results. Ongoing findings and final results will be published as the experiment concludes.

Image Credits

Featured Image: Image by No Fluff. Used with permission.

In-Post Images: Images by No Fluff. Used with permission.