Cloudflare’s New Markdown for AI Bots: What You Need To Know via @sejournal, @MattGSouthern

Cloudflare launched a feature that converts HTML pages to markdown when AI systems request it. Sites on its network can now serve lighter content to bots without building separate pages.

The feature, called Markdown for Agents, works through HTTP content negotiation. An AI crawler sends a request with Accept: text/markdown in the header. Cloudflare intercepts it, fetches the original HTML from the origin server, converts it to markdown, and delivers the result.
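As a rough illustration of the client side of that exchange, the sketch below builds a request carrying the Accept: text/markdown header and checks whether a response's Content-Type indicates markdown. The function names and the example URL are hypothetical, and the check assumes the converted response is labeled text/markdown, which the announcement implies but this article does not spell out.

```python
from urllib.request import Request


def build_markdown_request(url: str) -> Request:
    """Build a GET request that asks for markdown via the Accept header."""
    # The header value is the one named in the article; the helper itself
    # is a hypothetical illustration.
    return Request(url, headers={"Accept": "text/markdown"})


def is_markdown(content_type: str) -> bool:
    """Return True when a Content-Type header value denotes markdown."""
    # Drop parameters such as "; charset=utf-8" before comparing.
    media_type = content_type.split(";", 1)[0].strip().lower()
    return media_type == "text/markdown"


if __name__ == "__main__":
    req = build_markdown_request("https://example.com/blog/post")
    print(req.get_header("Accept"))
```

Passing the request to `urllib.request.urlopen` would send it. A site without the feature enabled would presumably just return HTML, so a client should check the response type rather than assume markdown.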

The launch arrives days after Google’s John Mueller called the idea of serving markdown to AI bots “a stupid idea” and questioned whether bots can even parse markdown links properly.

What’s New

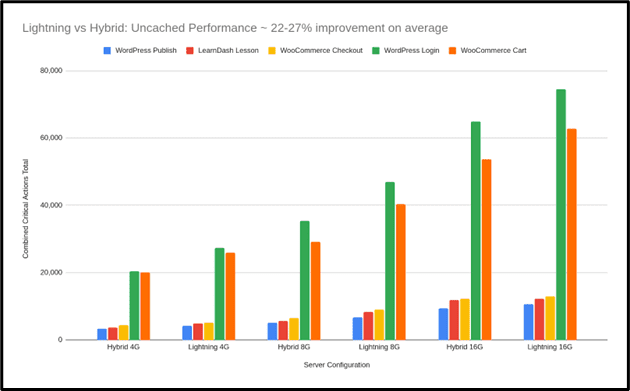

Cloudflare described the feature as treating AI agents as “first-class citizens” alongside human visitors. The company used its own blog post as an example. The HTML version consumed 16,180 tokens while the markdown conversion used 3,150 tokens.
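To put those two figures in proportion, the arithmetic can be sketched in a few lines. The helper name is hypothetical; the token counts are the ones Cloudflare reported for its own blog post.

```python
def token_savings(html_tokens: int, markdown_tokens: int) -> float:
    """Return the fraction of tokens saved by the markdown version."""
    return 1 - markdown_tokens / html_tokens


if __name__ == "__main__":
    html_tokens = 16_180      # HTML version, per Cloudflare's example
    markdown_tokens = 3_150   # markdown conversion, per Cloudflare's example
    saved = token_savings(html_tokens, markdown_tokens)
    ratio = html_tokens / markdown_tokens
    print(f"{saved:.1%} fewer tokens, about {ratio:.1f}x smaller")
    # prints "80.5% fewer tokens, about 5.1x smaller"
```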

“Feeding raw HTML to an AI is like paying by the word to read packaging instead of the letter inside,” the company wrote.

The conversion happens at Cloudflare’s edge network, not at the origin server. Site owners enable it per zone through the dashboard. It is available in beta at no additional cost for Pro, Business, and Enterprise plan customers, plus SSL for SaaS customers.

Cloudflare noted that some AI coding tools already send the Accept: text/markdown header. The company named Claude Code and OpenCode as examples.

Each converted response includes an x-markdown-tokens header that estimates the token count of the markdown version. Developers can use this to manage context windows or plan chunking strategies.
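One way a developer might use that header is sketched below: read the estimate, then work out how many chunks a page would need under a per-chunk token budget. The function names and the budget are hypothetical, and the code assumes the header value is a plain integer, which this article does not confirm.

```python
import math
from typing import Mapping, Optional


def estimated_tokens(headers: Mapping[str, str]) -> Optional[int]:
    """Read the x-markdown-tokens estimate, or None if absent or malformed."""
    for name, value in headers.items():
        # HTTP header names are case-insensitive.
        if name.lower() == "x-markdown-tokens":
            try:
                return int(value.strip())
            except ValueError:
                return None  # assumption violated: value is not an integer
    return None


def chunks_needed(token_estimate: int, chunk_budget: int) -> int:
    """Return how many chunks of at most chunk_budget tokens are needed."""
    if chunk_budget <= 0:
        raise ValueError("chunk_budget must be positive")
    return max(1, math.ceil(token_estimate / chunk_budget))


if __name__ == "__main__":
    tokens = estimated_tokens({"x-markdown-tokens": "3150"})
    if tokens is not None:
        print(chunks_needed(tokens, chunk_budget=1000))
```

Because the header is an estimate, a real pipeline would likely leave some headroom in the budget rather than plan to the exact count.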

Content-Signal Defaults

Converted responses include a Content-Signal header set to ai-train=yes, search=yes, ai-input=yes by default, signaling that the content may be used for AI training, search, and AI input (including agentic use). Whether a given bot honors those signals depends on the bot operator. Cloudflare said the feature will offer custom Content-Signal policies in the future.
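For anyone who wants to inspect that header programmatically, a minimal parsing sketch follows. The function name is hypothetical, and the parser assumes the comma-separated key=value form quoted above with yes or no values; it is not a full implementation of the Content Signals specification.

```python
from typing import Dict


def parse_content_signal(value: str) -> Dict[str, bool]:
    """Parse a Content-Signal header value into a {signal: allowed} mapping."""
    signals: Dict[str, bool] = {}
    for part in value.split(","):
        key, sep, setting = part.strip().partition("=")
        if not sep:
            continue  # skip fragments that are not key=value pairs
        # Anything other than "yes" is treated as not allowed (an assumption).
        signals[key.strip().lower()] = setting.strip().lower() == "yes"
    return signals


if __name__ == "__main__":
    defaults = parse_content_signal("ai-train=yes, search=yes, ai-input=yes")
    print(defaults)
    # prints {'ai-train': True, 'search': True, 'ai-input': True}
```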

The Content Signals framework, which Cloudflare announced during Birthday Week 2025, lets site owners set preferences for how their content gets used. Enabling markdown conversion also applies a default usage signal, not just a format change.

How This Differs From What Mueller Criticized

Mueller was criticizing a different practice. Some site owners build separate markdown pages and serve them to AI user agents through middleware. Mueller raised concerns about cloaking and broken linking, and questioned whether bots could even parse markdown properly.

Cloudflare’s feature uses a different mechanism. Instead of detecting user agents and serving alternate pages, it relies on content negotiation. The same URL serves different representations based on what the client requests in the header.

Mueller’s original comments addressed user-agent-based serving, not content negotiation. Even so, in a Reddit thread about Cloudflare’s feature, he took the same position, writing, “Why make things even more complicated (parallel version just for bots) rather than spending a bit of time improving the site for everyone?”

Google defines cloaking as showing different content to users and search engines with the intent to manipulate rankings and mislead users. The cloaking concern may apply differently here. With user-agent sniffing, the server decides what to show based on who’s asking. With content negotiation, the client requests a format and the server responds. The content is the same information in a different format, not different content for different visitors.
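The difference between the two approaches can be sketched as two small decision functions. This is an illustration only, not how Cloudflare or any particular middleware implements it: the function names are hypothetical, the user-agent markers are example values, and the Accept parsing ignores quality values for brevity.

```python
# Example markers for illustration; a real deployment would maintain its own list.
AI_BOT_MARKERS = ("gptbot", "claudebot")


def choose_by_user_agent(user_agent: str) -> str:
    """User-agent sniffing: the server decides based on who is asking."""
    ua = user_agent.lower()
    return "markdown" if any(marker in ua for marker in AI_BOT_MARKERS) else "html"


def choose_by_accept(accept: str) -> str:
    """Content negotiation: the server responds to the format the client requested."""
    # Simplified: strips parameters such as ";q=0.8" and ignores their weights.
    media_types = [part.split(";", 1)[0].strip().lower() for part in accept.split(",")]
    return "markdown" if "text/markdown" in media_types else "html"


if __name__ == "__main__":
    # The same bot gets HTML under negotiation unless it asks for markdown.
    print(choose_by_user_agent("Mozilla/5.0 (compatible; GPTBot/1.0)"))
    print(choose_by_accept("text/html"))
    print(choose_by_accept("text/markdown"))
```

In the first function the visitor's identity determines the response. In the second, any client, bot or browser, gets markdown only if it asks for it.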

From a crawler’s perspective, though, the practical result is similar: different clients receive different representations of the same URL. Googlebot requesting standard HTML would see a full webpage. An AI agent requesting markdown would see a stripped-down text version of the same page.

New Radar Tracking

Cloudflare also added content type tracking to Cloudflare Radar for AI bot traffic. The data shows the distribution of content types returned to AI agents and crawlers, broken down by MIME type.

You can filter by individual bot to see what content types specific crawlers receive. Cloudflare showed OAI-SearchBot as an example, displaying the volume of markdown responses served to OpenAI’s search crawler.

The data is available through Cloudflare’s public APIs and Data Explorer.

Why This Matters

If you already run your site through Cloudflare, you can enable markdown conversion with a single toggle instead of building separate markdown pages.

Enabling Markdown for Agents also sets the Content-Signal header to ai-train=yes, search=yes, ai-input=yes by default. Publishers who have been careful about AI access to their content should review those defaults before toggling the feature on.

Looking Ahead

Cloudflare said it plans to add custom Content-Signal policy options to Markdown for Agents in the future.

Mueller’s criticism focused on separate markdown pages, not on standard content negotiation. Google hasn’t addressed whether serving markdown through content negotiation falls under its cloaking guidelines.

The feature is opt-in and limited to paid Cloudflare plans. Review the Content-Signal defaults before enabling it.