Declan would never have found out his therapist was using ChatGPT had it not been for a technical mishap. The connection was patchy during one of their online sessions, so Declan suggested they turn off their video feeds. Instead, his therapist began inadvertently sharing his screen.

“Suddenly, I was watching him use ChatGPT,” says Declan, 31, who lives in Los Angeles. “He was taking what I was saying and putting it into ChatGPT, and then summarizing or cherry-picking answers.”

Declan was so shocked he didn’t say anything, and for the rest of the session he was privy to a real-time stream of ChatGPT analysis rippling across his therapist’s screen. The session became even more surreal when Declan began echoing ChatGPT in his own responses, preempting his therapist.

“I became the best patient ever,” he says, “because ChatGPT would be like, ‘Well, do you consider that your way of thinking might be a little too black and white?’ And I would be like, ‘Huh, you know, I think my way of thinking might be too black and white,’ and [my therapist would] be like, ‘Exactly.’ I’m sure it was his dream session.”

Among the questions racing through Declan’s mind was, “Is this legal?” When Declan raised the incident with his therapist at the next session—“It was super awkward, like a weird breakup”—the therapist cried. He explained he had felt they’d hit a wall and had begun looking for answers elsewhere. “I was still charged for that session,” Declan says, laughing.

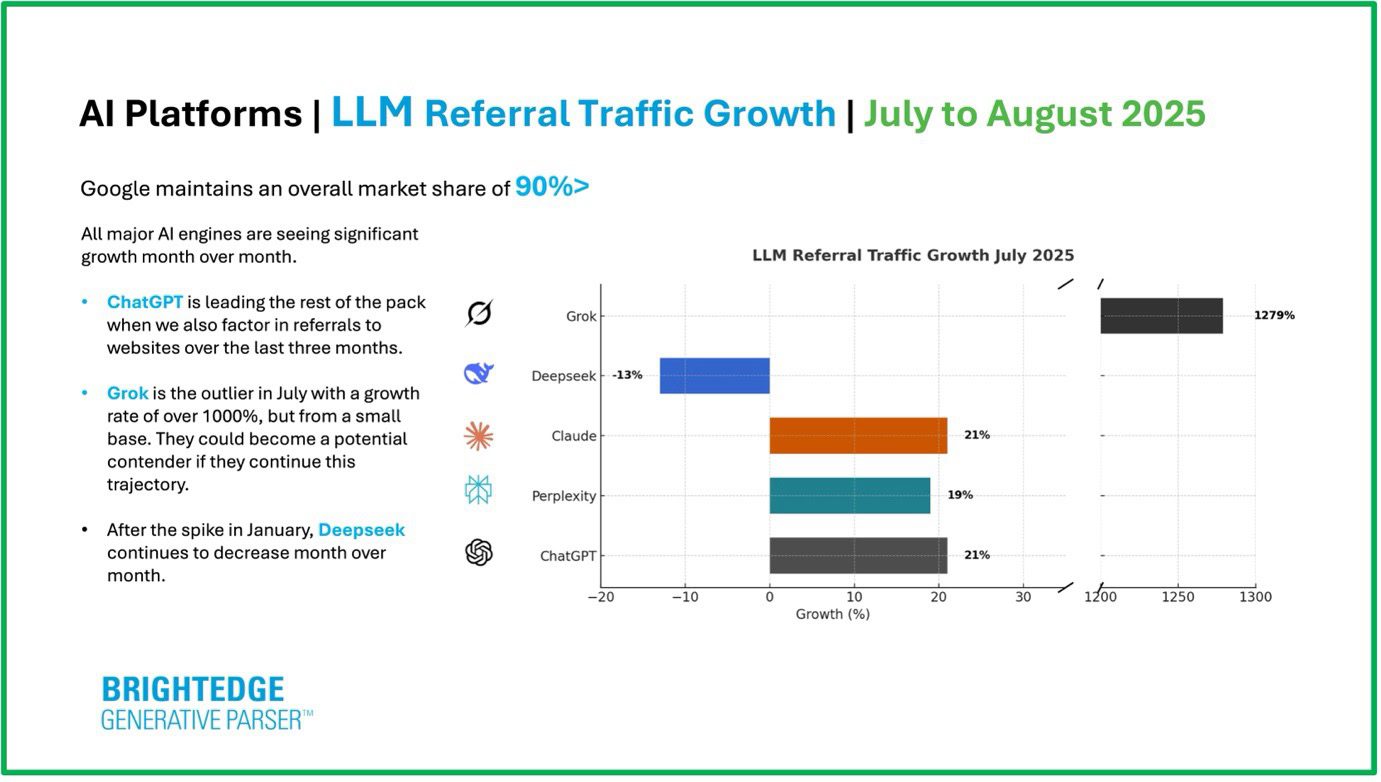

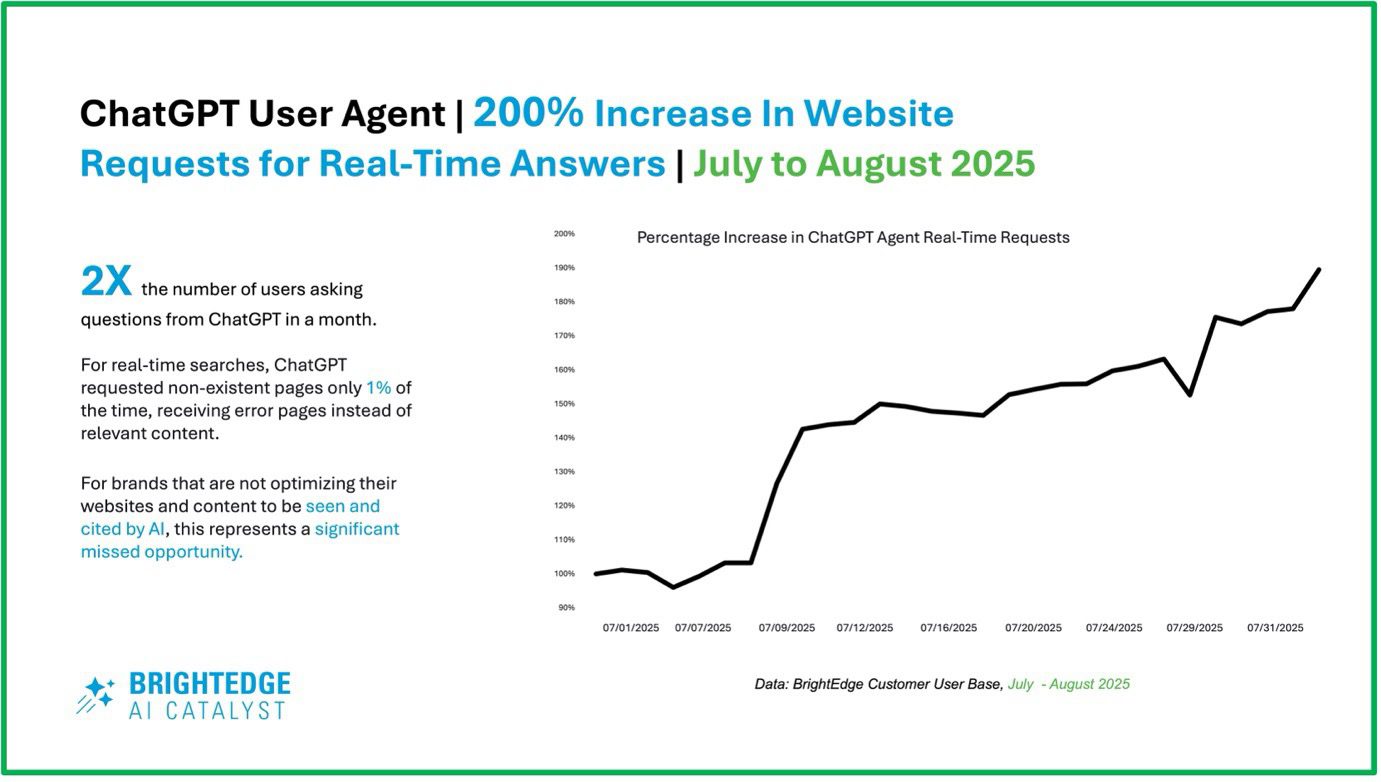

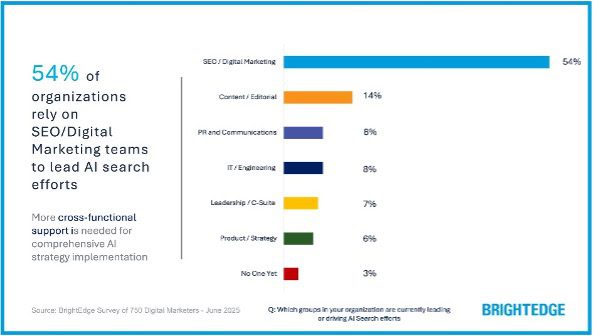

The large language model (LLM) boom of the past few years has had unexpected ramifications for the field of psychotherapy, mostly due to the growing number of people substituting the likes of ChatGPT for human therapists. But less discussed is how some therapists themselves are integrating AI into their practice. As in many other professions, generative AI promises tantalizing efficiency savings, but its adoption risks compromising sensitive patient data and undermining a relationship in which trust is paramount.

Suspicious sentiments

Declan is not alone, as I can attest from personal experience. When I received a recent email from my therapist that seemed longer and more polished than usual, I initially felt heartened. It seemed to convey a kind, validating message, and its length made me feel that she’d taken the time to reflect on all of the points in my (rather sensitive) email.

On closer inspection, though, her email seemed a little strange. It was in a new font, and the text displayed several AI “tells,” including liberal use of the Americanized em dash (we’re both from the UK), the signature impersonal style, and the habit of addressing each point made in the original email line by line.

My positive feelings quickly drained away, to be replaced by disappointment and mistrust, once I realized ChatGPT likely had a hand in drafting the message—which my therapist confirmed when I asked her.

Despite her assurance that she simply dictates longer emails using AI, I still felt uncertainty over the extent to which she, as opposed to the bot, was responsible for the sentiments expressed. I also couldn’t entirely shake the suspicion that she might have pasted my highly personal email wholesale into ChatGPT.

When I took to the internet to see whether others had had similar experiences, I found plenty of examples of people receiving what they suspected were AI-generated communiqués from their therapists. Many, including Declan, had taken to Reddit to solicit emotional support and advice.

So had Hope, 25, who lives on the east coast of the US, and had direct-messaged her therapist about the death of her dog. She soon received a message back. It would have been consoling and thoughtful—expressing how hard it must be “not having him by your side right now”—were it not for the reference to the AI prompt accidentally preserved at the top: “Here’s a more human, heartfelt version with a gentle, conversational tone.”

Hope says she felt “honestly really surprised and confused.” “It was just a very strange feeling,” she says. “Then I started to feel kind of betrayed. … It definitely affected my trust in her.” This was especially problematic, she adds, because “part of why I was seeing her was for my trust issues.”

Hope had believed her therapist to be competent and empathetic, and therefore “never would have suspected her to feel the need to use AI.” Her therapist was apologetic when confronted, and she explained that because she’d never had a pet herself, she’d turned to AI for help expressing the appropriate sentiment.

A disclosure dilemma

Betrayal or not, there may be some merit to the argument that AI could help therapists better communicate with their clients. A 2025 study published in PLOS Mental Health asked therapists to use ChatGPT to respond to vignettes describing problems of the kind patients might raise in therapy. Not only was a panel of 830 participants unable to distinguish between the human and AI responses, but AI responses were rated as conforming better to therapeutic best practice.

However, when participants suspected responses to have been written by ChatGPT, they ranked them lower. (Responses written by ChatGPT but misattributed to therapists received the highest ratings overall.)

Similarly, Cornell University researchers found in a 2023 study that AI-generated messages can increase feelings of closeness and cooperation between interlocutors, but only if the recipient remains oblivious to the role of AI. The mere suspicion of its use was found to rapidly sour goodwill.

“People value authenticity, particularly in psychotherapy,” says Adrian Aguilera, a clinical psychologist and professor at the University of California, Berkeley. “I think [using AI] can feel like, ‘You’re not taking my relationship seriously.’ Do I ChatGPT a response to my wife or my kids? That wouldn’t feel genuine.”

In 2023, in the early days of generative AI, the online therapy service Koko conducted a clandestine experiment on its users, mixing in responses generated by GPT-3 with ones drafted by humans. The company found that users tended to rate the AI-generated responses more positively. The revelation that users had unwittingly been experimented on, however, sparked outrage.

The online therapy provider BetterHelp has also been subject to claims that its therapists have used AI to draft responses. In a Medium post, photographer Brendan Keen said his BetterHelp therapist admitted to using AI in their replies, leading to “an acute sense of betrayal” and persistent worry, despite reassurances, that his data privacy had been breached. He ended the relationship thereafter.

A BetterHelp spokesperson told us the company “prohibits therapists from disclosing any member’s personal or health information to third-party artificial intelligence, or using AI to craft messages to members to the extent it might directly or indirectly have the potential to identify someone.”

All these examples relate to undisclosed AI usage. Aguilera believes time-strapped therapists can make use of LLMs, but transparency is essential. “We have to be up-front and tell people, ‘Hey, I’m going to use this tool for X, Y, and Z’ and provide a rationale,” he says. People then receive AI-generated messages with that prior context, rather than assuming their therapist is “trying to be sneaky.”

Psychologists are often working at the limits of their capacity, and levels of burnout in the profession are high, according to 2023 research conducted by the American Psychological Association. That context makes the appeal of AI-powered tools obvious.

But lack of disclosure risks permanently damaging trust. Hope decided to continue seeing her therapist, though she stopped working with her a little later for reasons she says were unrelated. “But I always thought about the AI Incident whenever I saw her,” she says.

Risking patient privacy

Beyond the transparency issue, many therapists are leery of using LLMs in the first place, says Margaret Morris, a clinical psychologist and affiliate faculty member at the University of Washington.

“I think these tools might be really valuable for learning,” she says, noting that therapists should continue developing their expertise over the course of their career. “But I think we have to be super careful about patient data.” Morris calls Declan’s experience “alarming.”

Therapists need to be aware that general-purpose AI chatbots like ChatGPT are not approved by the US Food and Drug Administration and are not HIPAA compliant, says Pardis Emami-Naeini, assistant professor of computer science at Duke University, who has researched the privacy and security implications of LLMs in a health context. (HIPAA is a set of US federal regulations that protect people’s sensitive health information.)

“This creates significant risks for patient privacy if any information about the patient is disclosed or can be inferred by the AI,” she says.

In a recent paper, Emami-Naeini found that many users wrongly believe ChatGPT is HIPAA compliant, creating an unwarranted sense of trust in the tool. “I expect some therapists may share this misconception,” she says.

As a relatively open person, Declan says, he wasn’t completely distraught to learn how his therapist was using ChatGPT. “Personally, I am not thinking, ‘Oh, my God, I have deep, dark secrets,’” he says. But it did still feel violating: “I can imagine that if I was suicidal, or on drugs, or cheating on my girlfriend … I wouldn’t want that to be put into ChatGPT.”

When using AI to help with email, “it’s not as simple as removing obvious identifiers such as names and addresses,” says Emami-Naeini. “Sensitive information can often be inferred from seemingly nonsensitive details.”

She adds, “Identifying and rephrasing all potential sensitive data requires time and expertise, which may conflict with the intended convenience of using AI tools. In all cases, therapists should disclose their use of AI to patients and seek consent.”

A growing number of companies, including Heidi Health, Upheal, Lyssn, and Blueprint, are marketing specialized tools to therapists, such as AI-assisted note-taking, training, and transcription services. These companies say they are HIPAA compliant and store data securely using encryption and pseudonymization where necessary. But many therapists are still wary of the privacy implications—particularly of services that necessitate the recording of entire sessions.

“Even if privacy protections are improved, there is always some risk of information leakage or secondary uses of data,” says Emami-Naeini.

A 2020 hack on a Finnish mental health company, which resulted in tens of thousands of clients’ treatment records being accessed, serves as a warning. People on the list were blackmailed, and subsequently the entire trove was publicly released, revealing extremely sensitive details such as people’s experiences of child abuse and addiction problems.

What therapists stand to lose

In addition to violation of data privacy, other risks are involved when psychotherapists consult LLMs on behalf of a client. Studies have found that although some specialized therapy bots can rival human-delivered interventions, advice from the likes of ChatGPT can cause more harm than good.

A recent Stanford University study, for example, found that chatbots can fuel delusions and psychopathy by blindly validating a user rather than challenging them, as well as suffer from biases and engage in sycophancy. The same flaws could make it risky for therapists to consult chatbots on behalf of their clients. They could, for example, baselessly validate a therapist’s hunch, or lead them down the wrong path.

Aguilera says he has played around with tools like ChatGPT while teaching mental health trainees, such as by entering hypothetical symptoms and asking the AI chatbot to make a diagnosis. The tool will produce lots of possible conditions, but it’s rather thin in its analysis, he says. The American Counseling Association recommends that AI not be used for mental health diagnosis at present.

A study published in 2024 of an earlier version of ChatGPT similarly found it was too vague and general to be truly useful in diagnosis or devising treatment plans, and it was heavily biased toward suggesting people seek cognitive behavioral therapy as opposed to other types of therapy that might be more suitable.

Daniel Kimmel, a psychiatrist and neuroscientist at Columbia University, conducted experiments with ChatGPT where he posed as a client having relationship troubles. He says he found the chatbot was a decent mimic when it came to “stock-in-trade” therapeutic responses, like normalizing and validating, asking for additional information, or highlighting certain cognitive or emotional associations.

However, “it didn’t do a lot of digging,” he says. It didn’t attempt “to link seemingly or superficially unrelated things together into something cohesive … to come up with a story, an idea, a theory.”

“I would be skeptical about using it to do the thinking for you,” he says. Thinking, he says, should be the job of therapists.

Therapists could save time using AI-powered tech, but this benefit should be weighed against the needs of patients, says Morris: “Maybe you’re saving yourself a couple of minutes. But what are you giving away?”